Benjamin Graham, David Novotny

3DV 2020

This is the official implementation of RidgeSfM: Structure from Motion via Robust Pairwise Matching Under Depth Uncertainty in PyTorch.

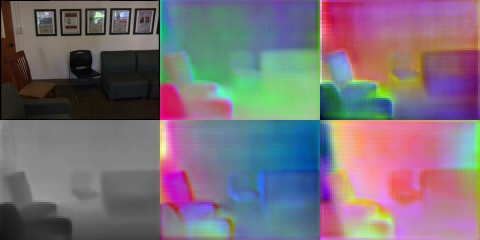

Below we illustrate the depth uncertainty factors of variation for a frame from scene 0708.

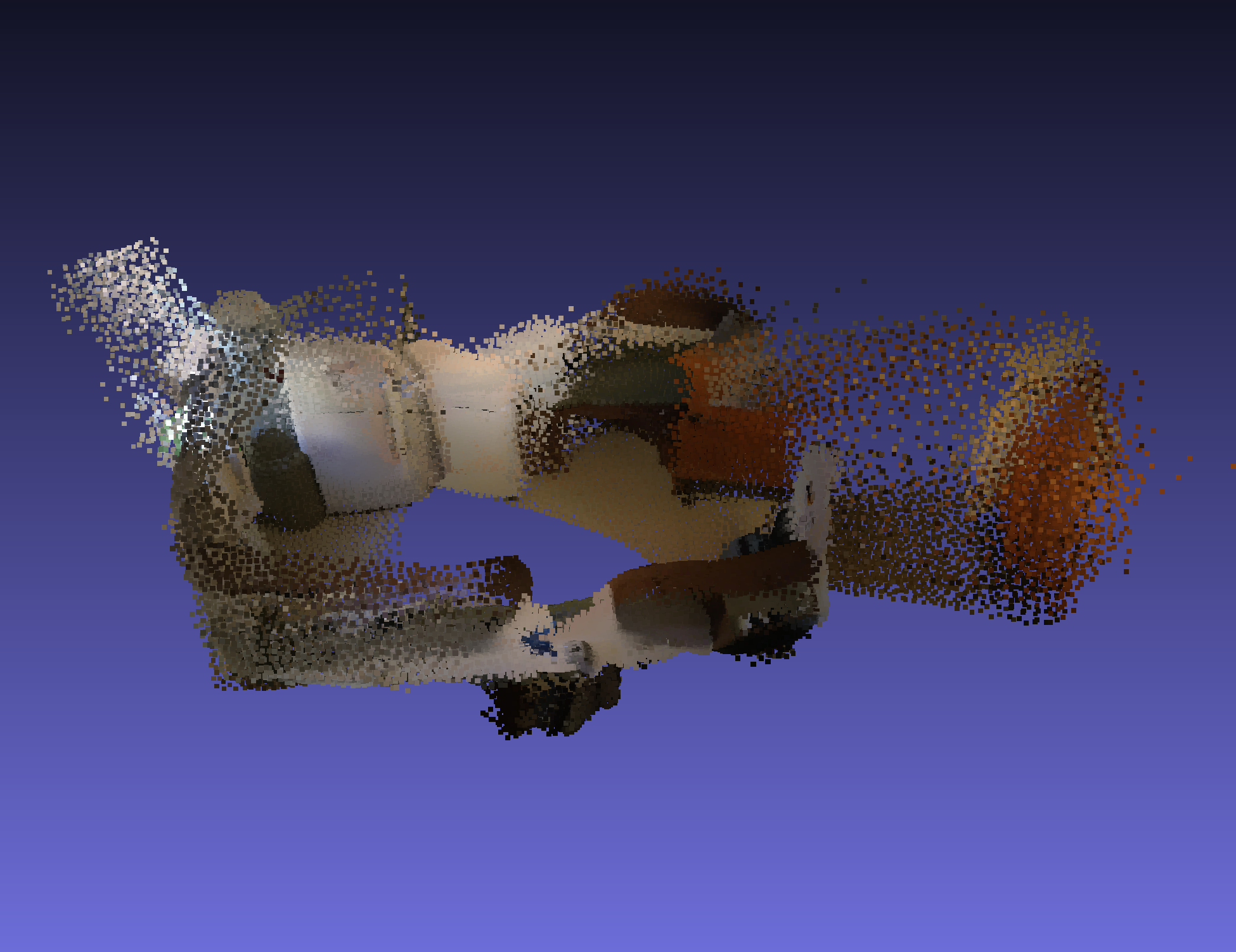

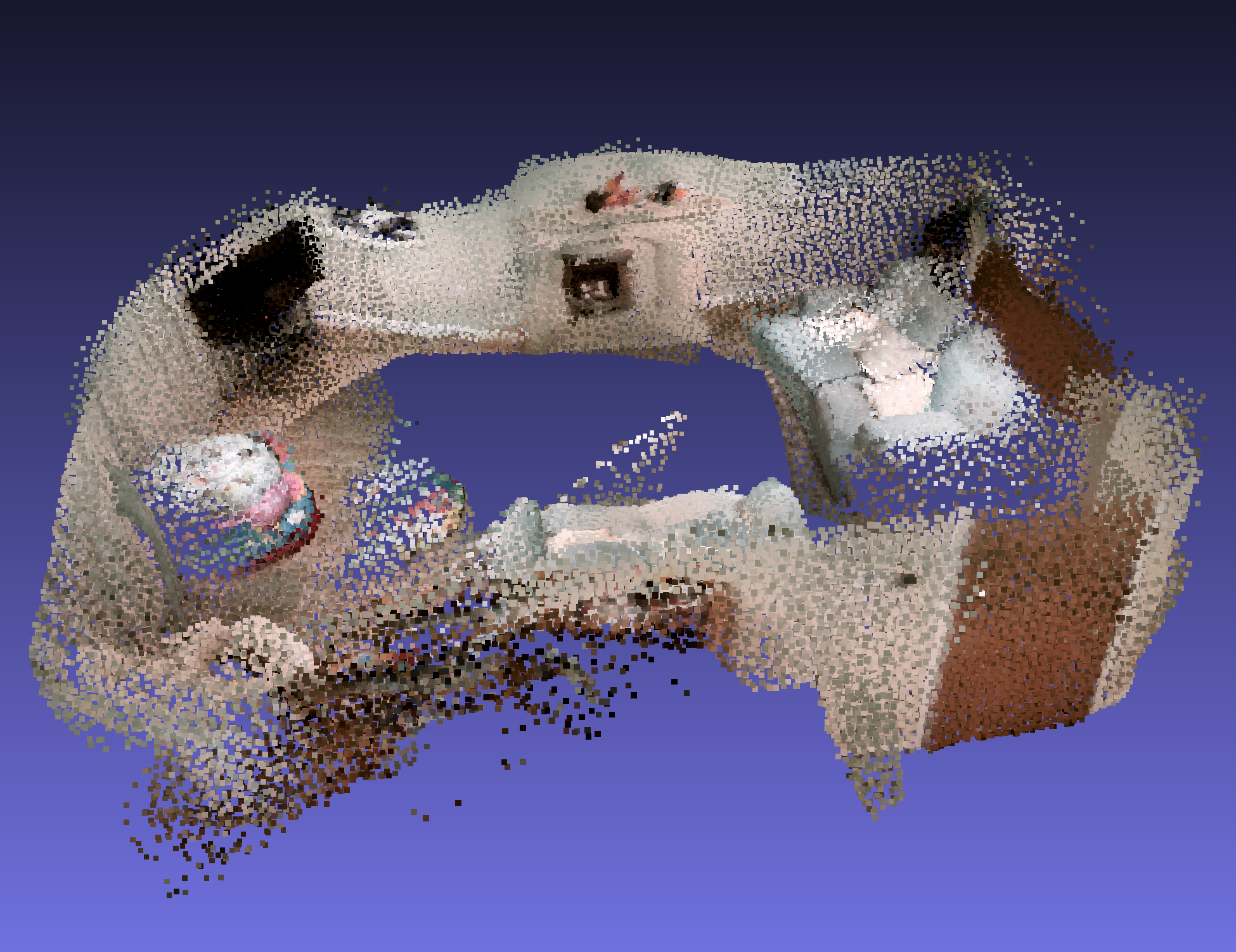

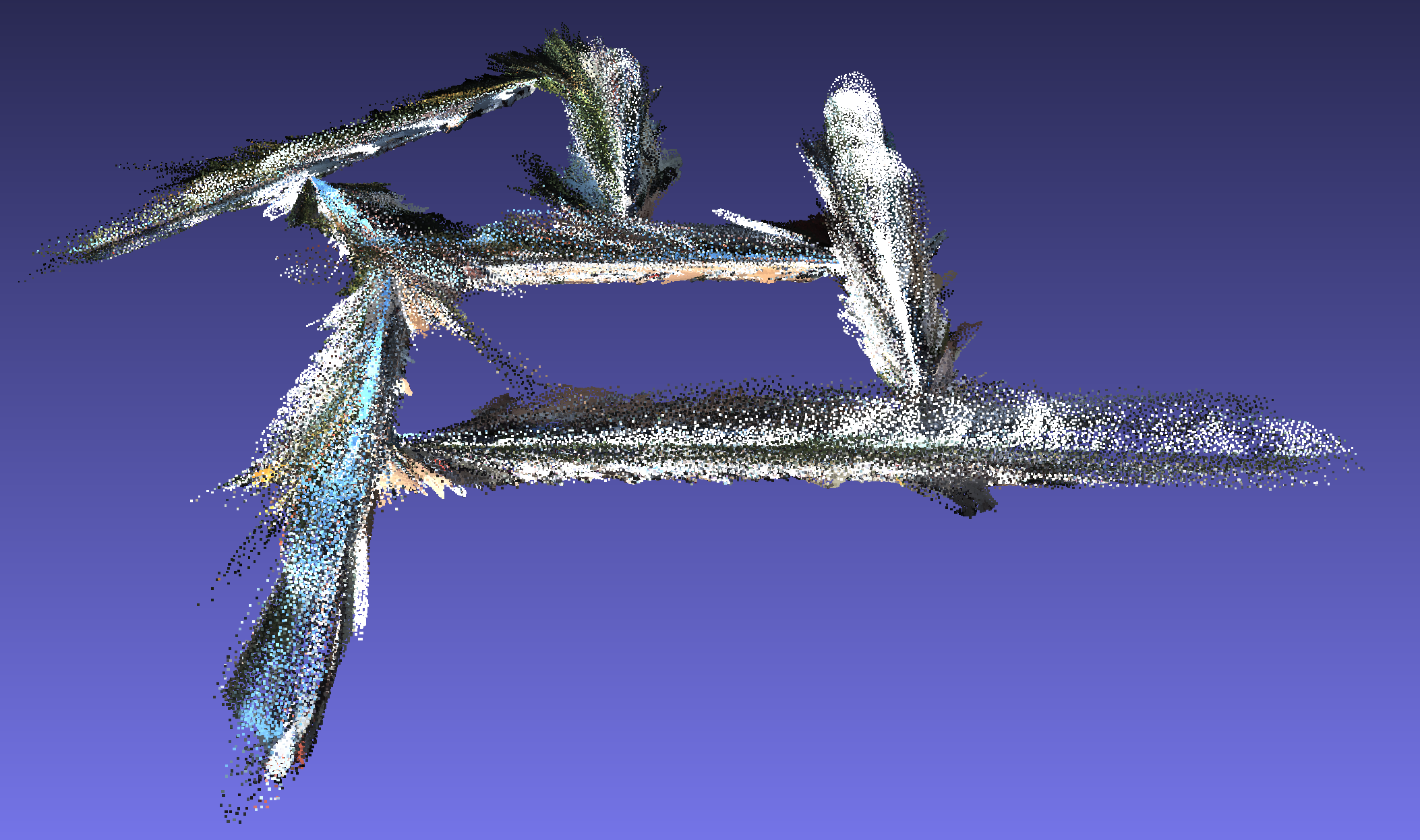

We applied RidgeSfM to a short video taken using a mobile phone camera. There is no ground truth pose, so the bottom right hand corner of the video is blank.

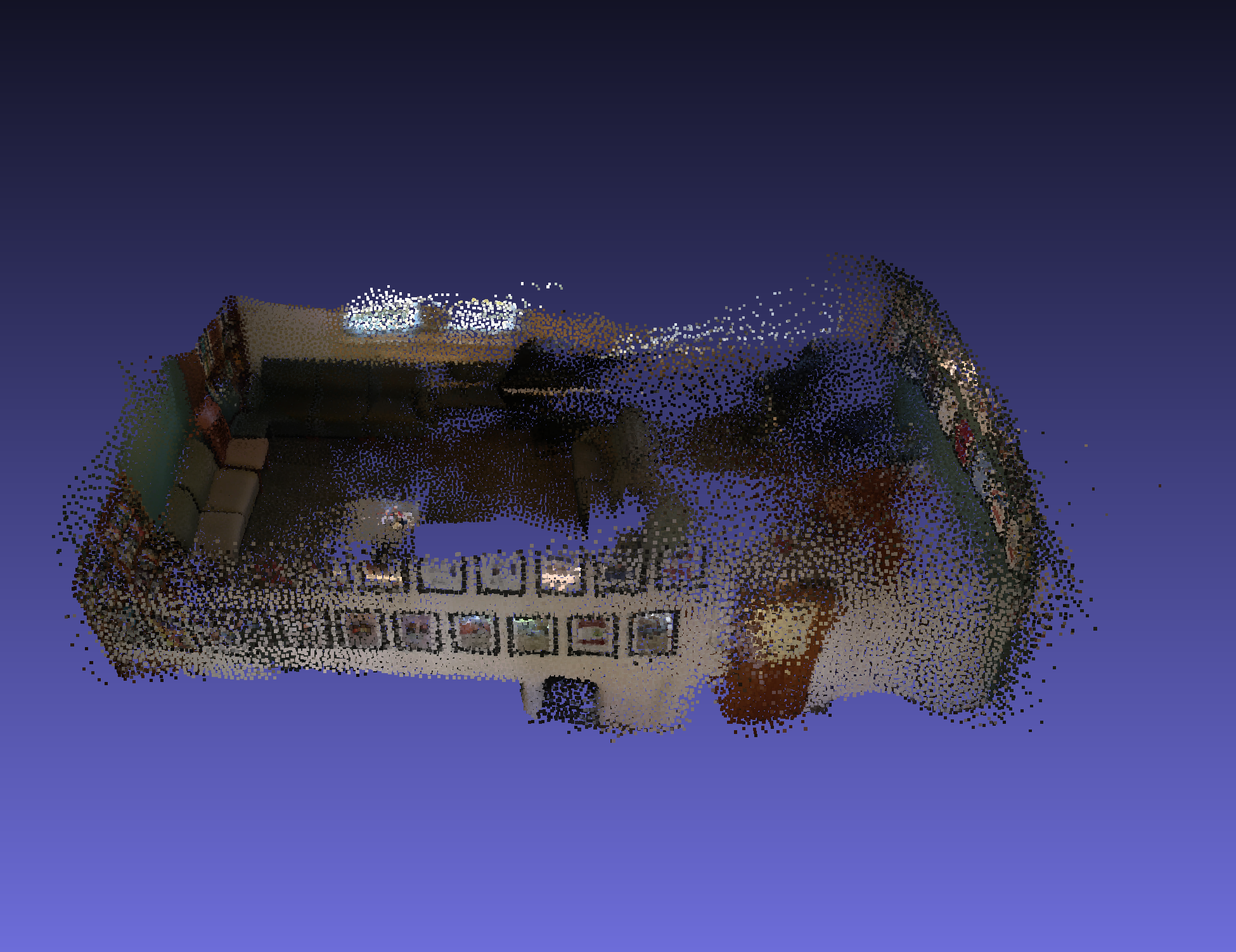

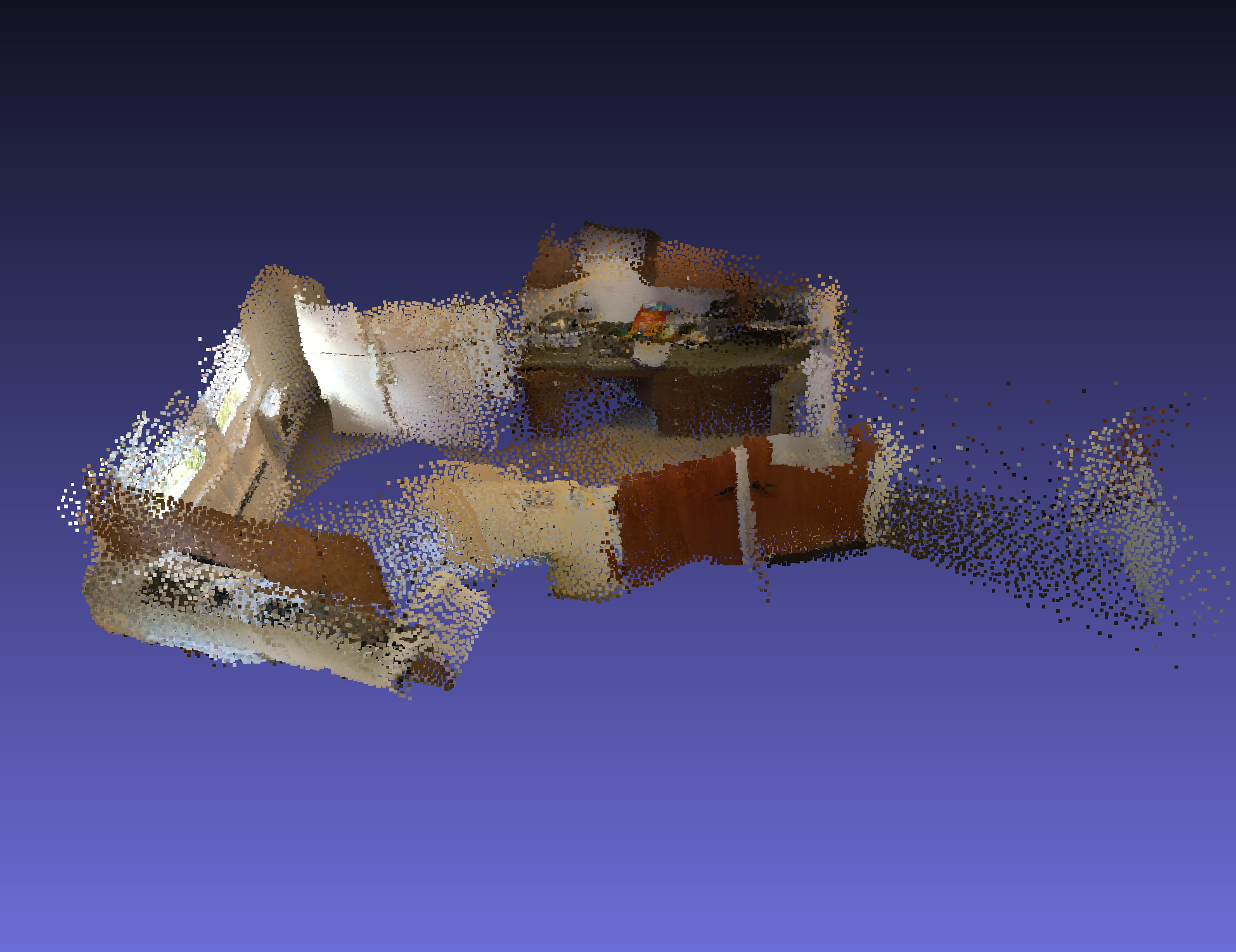

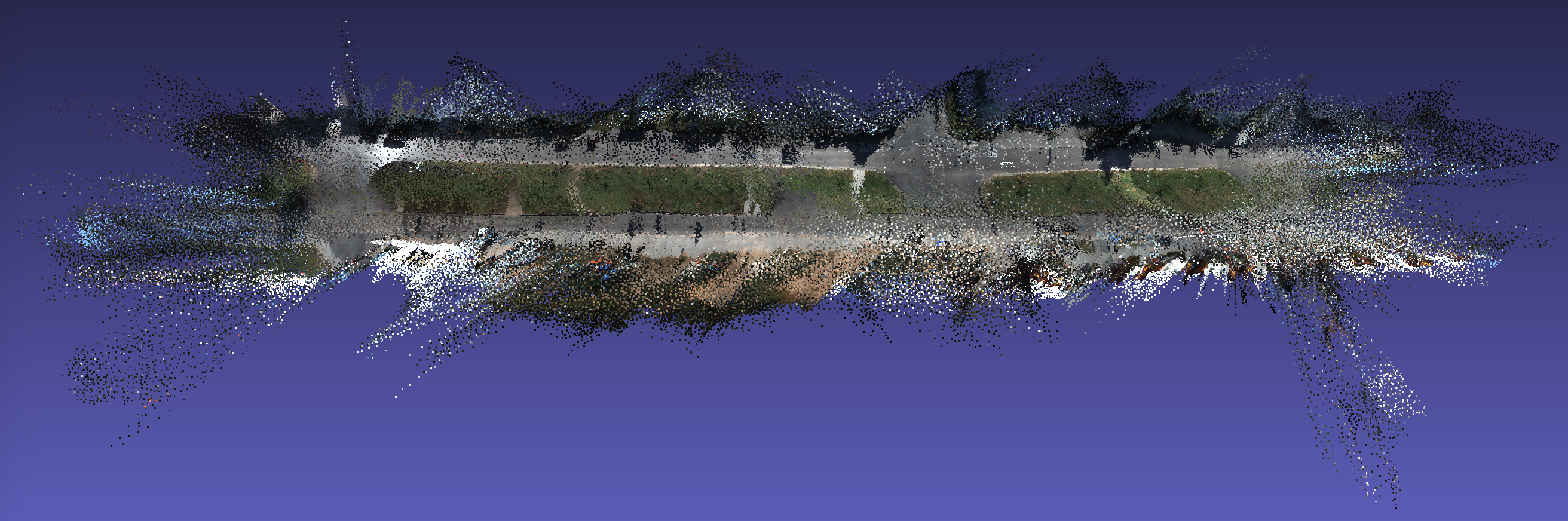

We trained a depth prediction network on the KITTI depth prediction training set. We then processed videos from the KITTI Visual Odometry dataset. We used the 'camera 2' image sequences, cropping the input to RGB images of size 1216x320. We used R2D2 as the keypoint detector. We used a frame skip rate of k=3. The scenes are larger spatially, so for visualization we increased the number of K-Means centroids to one million.

- Download the ScanNet dataset to

ridgesfm/data/scannet_sens/[train|test]/ - Download SensReader to

ridgesfm/data - Download SuperPoint to ridgesfm/

wget https://github.com/magicleap/SuperGluePretrainedNetwork/blob/master/models/weights/superpoint_v1.pth?raw=true -O ridgesfm/weights/superpoint_v1.pth

wget https://raw.githubusercontent.com/magicleap/SuperGluePretrainedNetwork/master/models/superpoint.py -O ridgesfm/superpoint.py

- Run

bash prepare_scannet.shinridgesfm/data/ - Run

python ridgesfm.py scene.n=0 scene.frameskip=10

Try process your own video,

- calibrate your camera using

calibrate/calibrate.ipynb - then run

python ridgesfm.py scenes=calibrate/ scene.n=0 scene.frameskip=10

RidgeSfM is CC-BY-NC licensed, as found in the LICENSE file. Terms of use. Privacy

If you find this code useful in your research then please cite:

@InProceedings{ridgesfm2020,

author = "Benjamin Graham and David Novotny",

title = "Ridge{S}f{M}: Structure from Motion via Robust Pairwise Matching Under Depth Uncertainty",

booktitle = "International Conference on 3D Vision (3DV)",

year = "2020",

}