[Paper] [🤗 Huggingface Dataset] [🤗 Checkpoints] [Twitter] [Website]

DOVE 🕊️, a new objective for aligning LLMs that optimizes preferences over joint instruction-response pairs.

Code for the paper "Comparing Bad Apples to Good Oranges: Aligning Large Language Models via Joint Preference Optimization".

🔔 If you have any questions or suggestions, please don't hesitate to let us know. You can comment on Twitter or post an issue on this repository.

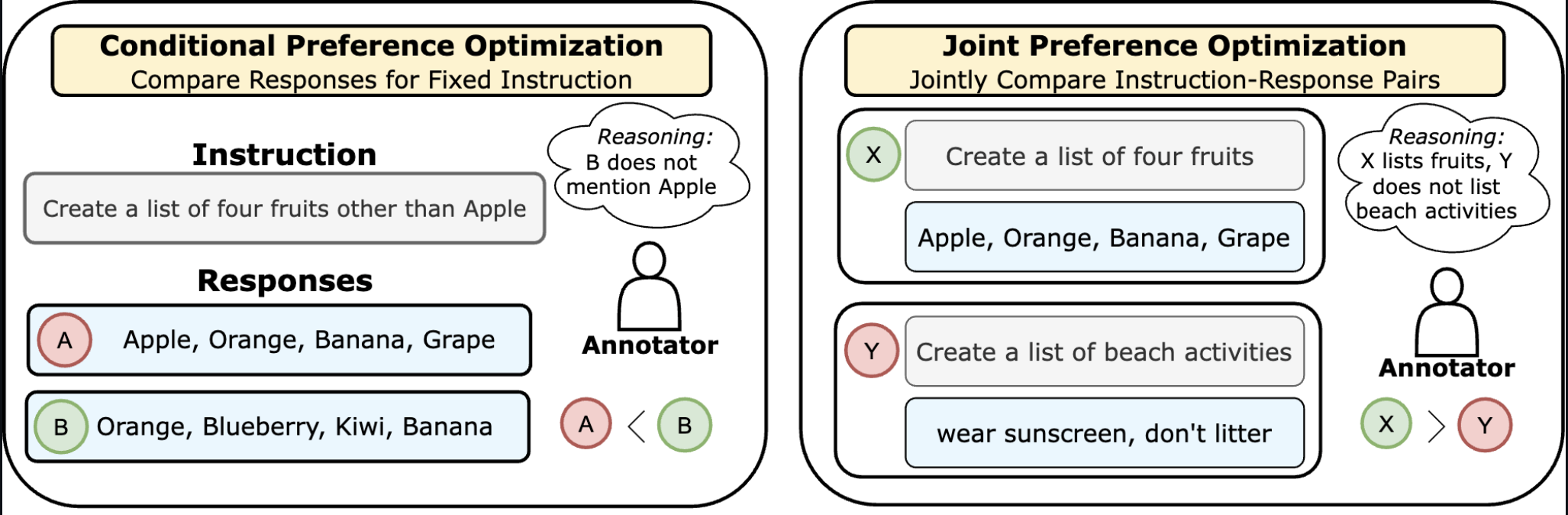

Traditional conditional feedback approaches are limited in capturing the complex, multifaceted nature of human preferences! Hence, we collect human and AI preferences jointly over instruction-response pairs i.e., (I1,R1) vs (I2, R2). Joint preferences subsume conditional preferences when I1=I2. To learn from joint preferences, we introduce a new preference optimization objective. Intuitively, it upweights the joint probability of the preferred instruction-response pair over the rejected instruction-response pair. If instructions are identical, then DOVE boils down to DPO!
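The intuition above can be sketched in a few lines of Python. This is a minimal illustration, not the repository's training code: it assumes the objective takes a DPO-style logistic form over log-probability ratios against a reference (SFT) model, and the function name `dove_loss`, its argument names, and the default `beta` are hypothetical. Each log-probability is that of a full instruction-response pair; how it is computed from the model is left to the caller.

```python
import math


def _softplus(x):
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dove_loss(policy_chosen_logp, policy_rejected_logp,
              ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Loss for one (chosen pair, rejected pair) comparison.

    Assumed form: -log sigmoid(beta * (chosen log-ratio - rejected log-ratio)),
    where each log-ratio is policy log-prob minus reference log-prob
    of an instruction-response pair.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) equals softplus(-margin).
    return _softplus(-margin)
```

When both pairs share the same instruction, the inputs are log-probabilities of two responses to one instruction, and the expression is the familiar DPO loss.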

Basic installation:

`conda create -n dove python=3.10`

`conda activate dove`

`pip install -r requirements.txt`

- We upload the sample data used for the project in the data folder of this repo.
  - SFT data: `train_sft.jsonl`/`val_sft.jsonl` files for openai_tldr and anthropic_helpful.
  - Conditional rankings data: `train_conditional_pref.jsonl`/`val_conditional_pref.jsonl`, from ChatGPT-0125 for openai_tldr and anthropic_helpful.
  - Joint rankings data over non-identical instructions: `train_joint_pref.jsonl`, from ChatGPT-0125 for openai_tldr and anthropic_helpful.

- By default, the DOVE approach can work on both the conditional and joint rankings. Hence, we train our models on the combination of the conditional and joint rankings.

🤗 Data is uploaded on Huggingface: Link

We upload the trained SFT and DOVE checkpoints to huggingface 🤗.

| Model | Link |
|---|---|
| SFT (TLDR) | Link |
| SFT (Anthropic-Helpful) | Link |
| DOVE-LORA (TLDR) | Link |
| DOVE-LORA (Anthropic-Helpful) | Link |

Note: The LORA checkpoints contain only the adapter parameters. You need to set the `base_model_name_or_path` argument in the `adapter_config.json` inside each LORA folder to the path of the SFT model.
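The edit described in the note can also be scripted. The helper below is a small sketch: the function name `set_base_model_path` and the example paths are hypothetical, and it assumes `adapter_config.json` is a plain JSON object, which is the only thing it relies on.

```python
import json
from pathlib import Path


def set_base_model_path(adapter_dir, sft_model_path):
    """Point a downloaded LORA adapter at a local SFT checkpoint.

    Rewrites only the `base_model_name_or_path` key and leaves every
    other field of adapter_config.json untouched.
    """
    config_path = Path(adapter_dir) / "adapter_config.json"
    config = json.loads(config_path.read_text())
    config["base_model_name_or_path"] = str(sft_model_path)
    config_path.write_text(json.dumps(config, indent=2))
    return config


# Example (hypothetical paths):
# set_base_model_path("checkpoints/dove_lora_tldr", "checkpoints/sft_tldr")
```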

We provide the steps to acquire conditional rankings and joint rankings over instruction-response pairs.

- Note that the joint rankings are acquired over non-identical instructions.

- For conditional rankings, assume that you have a jsonl file where each line is of the form:

`{'instruction': [instruction], 'response_0': [response_0], 'response_1': [response_1]}`

- Run the following python command:

`OPENAI_API_KEY=[OAI KEY] python dove/ai_feedback.py --mode single --input_data <data_file.jsonl> --output_data <output_data_file.jsonl>`

- For joint rankings, assume that you have a jsonl file where each line is of the form:

`{'instruction_0': [instruction_0], 'response_0': [response_0], 'instruction_1': [instruction_1], 'response_1': [response_1]}`

- Run the following python command:

`OPENAI_API_KEY=[OAI KEY] python dove/ai_feedback.py --mode pair --input_data <data_file.jsonl> --output_data <output_data_file.jsonl>`

- In the output file, our AI feedback appends a `feedback` attribute to each instance. Here, `feedback = 0` indicates that the first response is preferred, `feedback = 1` indicates that the second response is preferred, and `feedback = 2` implies that both responses are equally good/bad.

- Once the output data files are ready, you can process them into the following form (say `pref.jsonl`):

`{'i_chosen': [chosen instruction], 'r_chosen': [chosen response], 'i_reject': [rejected instruction], 'r_reject': [rejected response]}`

Remove the instances whose responses are considered equal (`feedback = 2`) while creating this file.
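The last step can be sketched as follows. This is not a script shipped with the repository: the function names are hypothetical, and the sketch assumes each line of the feedback output is valid JSON and that `feedback` is stored as an integer (or a string that parses to one). It handles both input shapes described above by falling back to the shared `instruction` field when the per-response instruction fields are absent.

```python
import json


def to_preference(record):
    """Convert one AI-feedback record to chosen/rejected form.

    Returns None for ties (feedback == 2), which are dropped.
    """
    feedback = int(record["feedback"])
    if feedback not in (0, 1):
        return None
    # Conditional records share one 'instruction'; joint records carry two.
    shared = record.get("instruction")
    instructions = [record.get("instruction_0", shared),
                    record.get("instruction_1", shared)]
    responses = [record["response_0"], record["response_1"]]
    win, lose = feedback, 1 - feedback
    return {
        "i_chosen": instructions[win],
        "r_chosen": responses[win],
        "i_reject": instructions[lose],
        "r_reject": responses[lose],
    }


def convert_file(feedback_path, pref_path):
    """Write the preference file and return how many records were kept."""
    kept = 0
    with open(feedback_path) as src, open(pref_path, "w") as dst:
        for line in src:
            if not line.strip():
                continue
            pref = to_preference(json.loads(line))
            if pref is not None:
                dst.write(json.dumps(pref) + "\n")
                kept += 1
    return kept
```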

- Download the base Mistral-7B model from Huggingface https://huggingface.co/mistralai/Mistral-7B-v0.1.

- We present the sample data for SFT in the data folder for the openai TL;DR and anthropic-helpful datasets. The file names will be `train_sft.jsonl` or `val_sft.jsonl`.

- Follow the steps mentioned in this sft readme to install the relevant dependencies and perform full-finetuning of the Mistral-7B model.

- While we perform full-finetuning, huggingface TRL (here) supports finetuning 7B models with low-rank adaptation. Feel free to check them out.

- We provide the SFT models for the TLDR and anthropic-helpful datasets in the ModelZOO.

- Once you have an SFT model, you can use our inference.py to sample from it.

- We provide the test data files for tldr and helpful datasets. Specifically, these files are of the form:

`{'instruction': [instruction], 'response': [response]}`

- Run the following python command:

`CUDA_VISIBLE_DEVICES=1 python dove/inference.py --model_path <path to sft model> --test_file [path to test file] --output_file [output file name] --temp 0.001`

Here, 0.001 is the default sampling temperature.

Note: We explicitly add the summarization format used to finetune SFT in the above script. Feel free to edit it.

- The above code will generate a file of the form:

`{'instruction': [instruction], 'outputs': [outputs]}`

- We provide the sample data for conditional rankings (two responses, identical instruction) and joint rankings over non-identical instructions for both datasets.

- For example, conditional rankings data for anthropic-helpful is train_pref and val_pref.

- Joint rankings over non-identical instructions are present in train_joint_pref file.

- As conditional rankings are a special case of joint rankings in which the instructions are identical, DOVE can utilize this diverse set of preferences.

- Hence, the user can train on just the conditional rankings, on just the joint rankings (non-identical instructions), or on the merge of both (the setup proposed in the paper).

- We will start with the SFT model and align it with the DOVE algorithm. Run the following for alignment:

`CUDA_VISIBLE_DEVICES=1 python train.py --model_name_or_path <path to sft model> --dataset_name <path to train_pref file> --eval_dataset_name <path to val_pref file> --per_device_train_batch_size 8 --gradient_accumulation_steps 4 --output_dir <location where you want to save the model ckpt> --joint_distribution True --max_steps 1000`

- Note 1: You can use the same command to align the LLM with DPO instead of DOVE by removing the `--joint_distribution True` flag.

- Note 2: We recommend increasing the number of steps when you merge the conditional and joint rankings data to keep the number of epochs the same. On a single GPU, the number of steps per epoch = dataset size / (batch_size * gradient_accumulation_steps), so the number of epochs = max_steps / steps per epoch.

- This code will save the LORA adapters (instead of the complete model) in the `output_dir`.
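The merge and the step arithmetic from Note 2 can be sketched as below. The helper names are hypothetical, the dataset sizes in the usage note are made up for illustration, and the sketch assumes the conditional and joint files share the same jsonl schema. Shuffling the merged file is an optional choice made here, not something the training script is known to require.

```python
import json
import math
import random


def merge_jsonl(paths, out_path, seed=0):
    """Concatenate several jsonl files, shuffle, and write one training file."""
    records = []
    for path in paths:
        with open(path) as f:
            records.extend(json.loads(line) for line in f if line.strip())
    random.Random(seed).shuffle(records)
    with open(out_path, "w") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
    return len(records)


def steps_per_epoch(dataset_size, batch_size=8, grad_accum=4):
    """Optimizer steps needed to see every example once on a single GPU."""
    return math.ceil(dataset_size / (batch_size * grad_accum))


def max_steps_to_match(old_steps, old_size, new_size):
    """Scale max_steps so the epoch count stays the same on a larger dataset."""
    return math.ceil(old_steps * new_size / old_size)
```

For instance, with the batch size of 8 and 4 accumulation steps from the command above, a hypothetical 16,000-example file takes 500 steps per epoch, so 1000 steps is 2 epochs. Doubling the data by merging in another 16,000 examples would call for `--max_steps 2000` to keep 2 epochs.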

- Once you have a DOVE model, you can use our inference.py to sample from it.

- We provide the test data files for tldr and helpful datasets. Specifically, these files are of the form:

`{'instruction': [instruction], 'response': [response]}`

- Run the following python command:

`CUDA_VISIBLE_DEVICES=1 python dove/inference.py --model_path <path to DOVE model (path to output dir)> --test_file [path to test file] --output_file [output file name] --temp 0.001`

Here, 0.001 is the default sampling temperature.

Note: The trained LORA checkpoint for DOVE automatically picks up the path to the SFT model, as it is specified in its `adapter_config.json`.

Note: We explicitly add the summarization format used to finetune SFT in the above script. Feel free to edit it.

- The above code will generate a file of the form:

`{'instruction': [instruction], 'outputs': [outputs]}`

- Sample outputs are presented in outputs.

- We aim to assess the win-rate of the model generations when compared against the gold responses.

- The code is mostly the same as AI feedback generation.

- Run the following command:

`OPENAI_API_KEY=[OAI KEY] python dove/auto_eval.py --input_data <generations file from previous section> --model_name <model_name> --test_data <test file with gold responses> --master_data <output file name (say master.json)>`
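Once per-example judgments are available, the win-rate itself is a simple ratio. The sketch below does not read the format written by `auto_eval.py`, which is not described here. It assumes you have already reduced each judgment to one of three hypothetical labels: `"model"` (generation preferred), `"gold"` (gold response preferred), or `"tie"`. How ties are counted is a convention, so it is exposed as a parameter.

```python
def win_rate(verdicts, tie_weight=0.0):
    """Fraction of comparisons won by the model generation.

    verdicts: iterable of "model", "gold", or "tie" (hypothetical labels).
    tie_weight: credit given to a tie, e.g. 0.0 (ignore) or 0.5 (half a win).
    """
    verdicts = list(verdicts)
    if not verdicts:
        raise ValueError("no verdicts to score")
    score = 0.0
    for verdict in verdicts:
        if verdict == "model":
            score += 1.0
        elif verdict == "tie":
            score += tie_weight
        elif verdict != "gold":
            raise ValueError(f"unknown verdict: {verdict!r}")
    return score / len(verdicts)
```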