YOLOv5 Runtime Stack


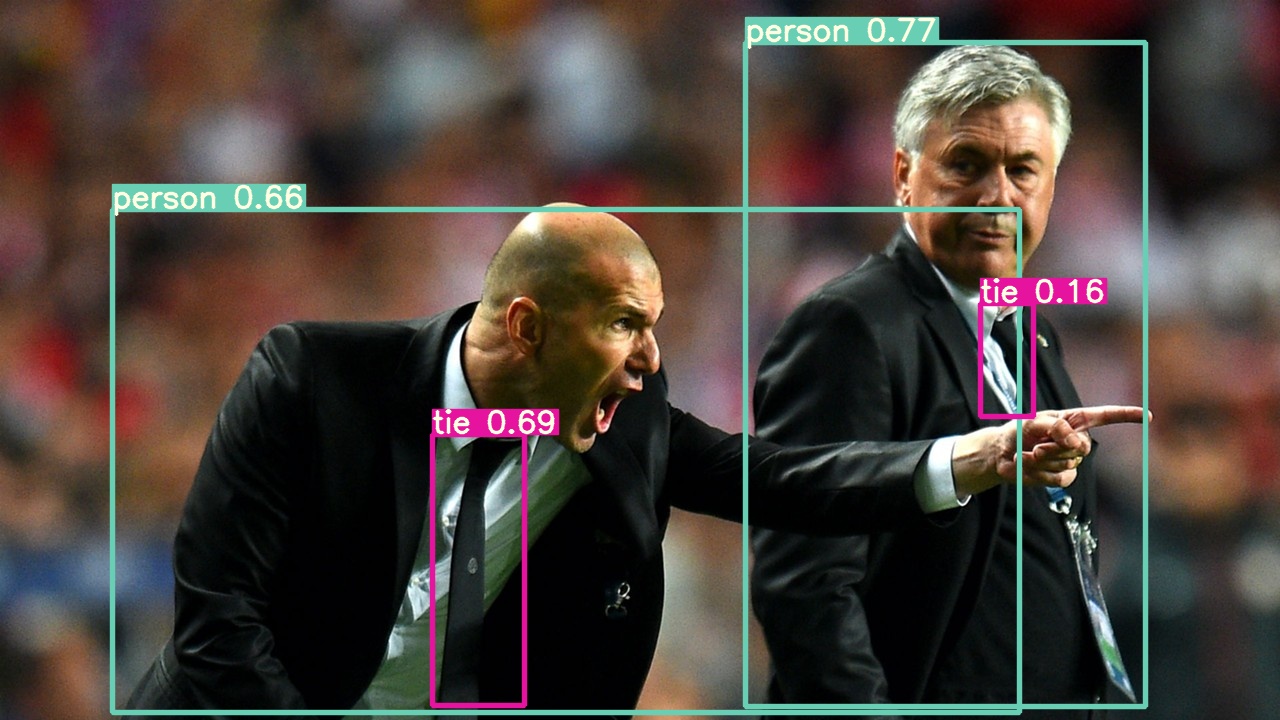

What it is. Yet another implementation of Ultralytics' YOLOv5. yolort aims to integrate training and inference for object detection more seamlessly. It adopts the same model structure as the official YOLOv5. The significant difference is that yolort uses a dynamic shape mechanism, which allows both pre-processing (letterbox) and post-processing (NMS) to be embedded into the model graph and simplifies the deployment strategy. As a result, yolort is easier to deploy on LibTorch, ONNXRuntime, TVM and so on.
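To make the letterbox step mentioned above concrete, here is a minimal pure-Python sketch of the geometry involved: resize an image so it fits a square target while keeping its aspect ratio, pad the remainder, and map a box from the padded frame back to the original image. The function names, the 640 target size and the symmetric padding are assumptions chosen for illustration; this is not yolort's implementation, which may differ (for example in stride alignment or rounding).

```python
def letterbox_geometry(height, width, target=640):
    """Compute resize and padding for fitting an image into a target x target square.

    Returns (scale, (new_height, new_width), (top, bottom, left, right)).
    Illustrative helper only; not part of yolort.
    """
    # One scale for both axes, chosen so the longer side just fits the target.
    scale = min(target / height, target / width)
    new_height, new_width = round(height * scale), round(width * scale)
    # Split the leftover space between the two sides of each axis.
    pad_h, pad_w = target - new_height, target - new_width
    top, left = pad_h // 2, pad_w // 2
    return scale, (new_height, new_width), (top, pad_h - top, left, pad_w - left)


def unletterbox_box(box, scale, top, left):
    """Map an (x1, y1, x2, y2) box from the padded frame back to the original image."""
    x1, y1, x2, y2 = box
    return ((x1 - left) / scale, (y1 - top) / scale,
            (x2 - left) / scale, (y2 - top) / scale)


if __name__ == "__main__":
    # A portrait image, 1080 pixels high and 810 pixels wide.
    scale, new_size, pads = letterbox_geometry(1080, 810)
    print(new_size, pads)
    # The full resized image region maps back to the full original image.
    print(unletterbox_box((80, 0, 560, 640), scale, pads[0], pads[2]))
```

Because both directions of this mapping depend only on the input shape, they are the kind of computation that can live inside the exported graph instead of in separate client code.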

About the code. yolort follows the design principle of DETR:

object detection should not be more difficult than classification, and should not require complex libraries for training and inference.

yolort is very simple to implement and experiment with. You like the implementation of torchvision's faster-rcnn, retinanet or detr? You like yolov5? You love yolort!

- Dec. 27, 2021. Add `TensorRT` C++ interface example. Thanks to Shiquan.
- Dec. 25, 2021. Support exporting to `TensorRT`, and inferencing with `TensorRT` Python interface.
- Sep. 24, 2021. Add `ONNXRuntime` C++ interface example. Thanks to Fidan.
- Feb. 5, 2021. Add `TVM` compile and inference notebooks.
- Nov. 21, 2020. Add graph visualization tools.
- Nov. 17, 2020. Support exporting to `ONNX`, and inferencing with `ONNXRuntime` Python interface.
- Nov. 16, 2020. Refactor YOLO modules and support dynamic shape/batch inference.
- Nov. 4, 2020. Add `LibTorch` C++ inference example.
- Oct. 8, 2020. Support exporting to `TorchScript` model.

There are no extra compiled components in yolort and package dependencies are minimal, so the code is very simple to use.
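The installation steps require PyTorch 1.7.0+ and torchvision 0.8.1+. A minimal sketch for checking an existing environment against those minimums, using only the standard library, could look like the following. The helper names are hypothetical, and the version parsing is deliberately simple: local build tags such as `+cu113` are ignored and pre-releases are treated like the matching release.

```python
import re
from importlib import metadata

# Minimum versions taken from the installation notes; keys are PyPI distribution names.
MINIMUM_VERSIONS = {"torch": "1.7.0", "torchvision": "0.8.1"}


def release_tuple(version):
    """Turn '1.10.0+cu113' into (1, 10, 0), padding short versions with zeros."""
    public = version.split("+", 1)[0]  # drop a local build tag such as '+cu113'
    parts = []
    for piece in public.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    parts += [0] * (3 - len(parts))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True when the installed version is at least the minimum."""
    return release_tuple(installed) >= release_tuple(minimum)


def check_environment(minimums=MINIMUM_VERSIONS):
    """Return a dict mapping each package name to a short status string."""
    report = {}
    for name, minimum in minimums.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "not installed"
            continue
        status = "ok" if meets_minimum(installed, minimum) else "too old"
        report[name] = f"{installed} ({status}, needs >= {minimum})"
    return report


if __name__ == "__main__":
    for name, line in check_environment().items():
        print(f"{name}: {line}")
```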

- Above all, follow the official instructions to install PyTorch 1.7.0+ and torchvision 0.8.1+

- Installation via Pip

  Simple installation from PyPI

      pip install -U yolort

  Or from Source

      # clone yolort repository locally
      git clone https://github.com/zhiqwang/yolov5-rt-stack.git
      cd yolov5-rt-stack
      # install in editable mode
      pip install -e .

- Install pycocotools (for evaluation on COCO):

      pip install -U 'git+https://github.com/ppwwyyxx/cocoapi.git#subdirectory=PythonAPI'

- To read a source of image(s) and detect its objects 🔥

      from yolort.models import yolov5s

      # Load model
      model = yolov5s(pretrained=True, score_thresh=0.45)
      model.eval()

      # Perform inference on an image file
      predictions = model.predict("bus.jpg")
      # Perform inference on a list of image files
      predictions = model.predict(["bus.jpg", "zidane.jpg"])
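The `score_thresh` argument in the inference example sets a confidence cut-off used during post-processing. As a rough illustration of what that stage does, the sketch below drops low-scoring boxes and then applies greedy non-maximum suppression in plain Python. It is class-agnostic for brevity, the `iou_thresh` parameter and both function names are assumptions made for this example, and it is not the code yolort runs inside the model graph.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_and_nms(boxes, scores, score_thresh=0.45, iou_thresh=0.5):
    """Return indices of boxes that survive score filtering and greedy NMS.

    iou_thresh is a hypothetical parameter chosen for this sketch.
    """
    # Keep only confident detections, highest score first.
    order = sorted(
        (i for i, score in enumerate(scores) if score > score_thresh),
        key=lambda i: scores[i],
        reverse=True,
    )
    keep = []
    for i in order:
        # Accept a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 10, 10)]
    scores = [0.9, 0.8, 0.7, 0.3]
    # Box 1 overlaps box 0 heavily and box 3 is below the score threshold.
    print(filter_and_nms(boxes, scores))  # [0, 2]
```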

The models are also available via torch hub. To load yolov5s with pretrained weights, simply do:

    import torch

    model = torch.hub.load("zhiqwang/yolov5-rt-stack:main", "yolov5s", pretrained=True)

The following is the interface for loading the checkpoint weights trained with ultralytics/yolov5. See our how-to-align-with-ultralytics-yolov5 notebook for more details.

    from yolort.models import YOLOv5

    # 'yolov5s.pt' is downloaded from https://github.com/ultralytics/yolov5/releases/download/v5.0/yolov5s.pt
    ckpt_path_from_ultralytics = "yolov5s.pt"
    model = YOLOv5.load_from_yolov5(ckpt_path_from_ultralytics, score_thresh=0.25)

    model.eval()
    img_path = "test/assets/bus.jpg"
    predictions = model.predict(img_path)

We provide a notebook to demonstrate how the model is transformed into TorchScript, and a C++ example of how to run inference with the transformed TorchScript model. For details see the GitHub Actions.

On the ONNXRuntime front you can use the C++ example, and we also provide a tutorial, export-onnx-inference-onnxruntime, for using ONNXRuntime.

On the TensorRT front you can use the C++ example, and we also provide a tutorial, onnx-graphsurgeon-inference-tensorrt, for using TensorRT.

yolort can now draw the model graph directly. Check out our model-graph-visualization notebook to see how to use it and visualize the model graph.

- The implementation of `yolov5` borrows the code from ultralytics.
- This repo borrows the architecture design and part of the code from torchvision.

If you use yolort in your publication, please cite it by using the following BibTeX entry.

    @Misc{yolort2021,
      author = {Zhiqiang Wang and Shiquan Yu and Fidan Kharrasov},
      title = {yolort: A runtime stack for object detection on specialized accelerators},
      howpublished = {\url{https://github.com/zhiqwang/yolov5-rt-stack}},
      year = {2021}
    }

See the CONTRIBUTING file for how to help out. BTW, leave a 🌟 if you liked it; this is the easiest way to support us :)