The goal of this project is to build a multilayer perceptron from scratch to create a model that predicts the outcome of a breast cancer diagnosis.

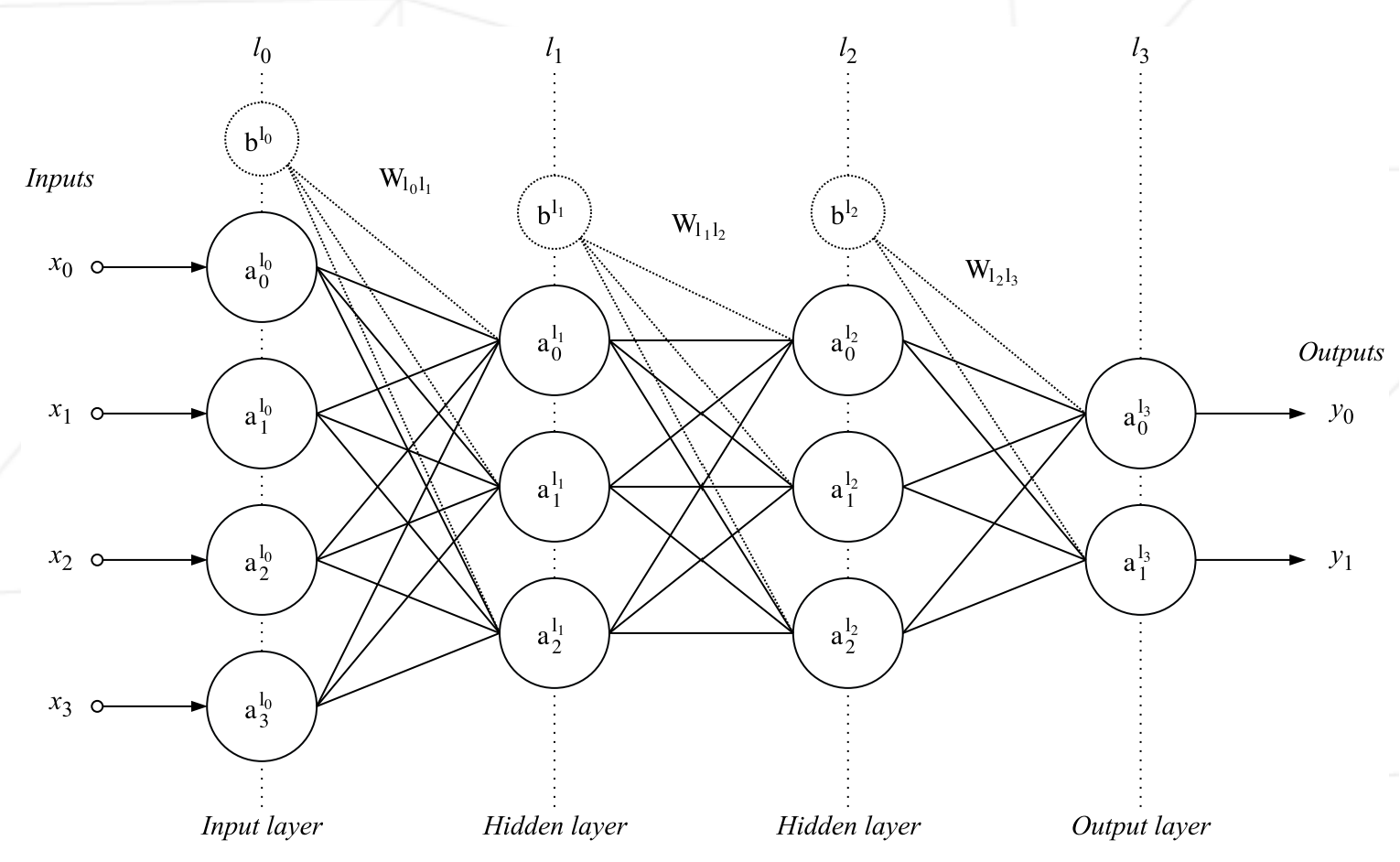

The multilayer perceptron is a feedforward network (the data flows from the input layer to the output layer) defined by one or more hidden layers and by full connectivity between consecutive layers: every neuron of one layer is connected to every neuron of the next.

The diagram above represents a network containing 4 dense layers (also called fully connected layers). Its input layer has 4 neurons and its output layer has 2 (suitable for binary classification). The weights from one layer to the next are represented by two-dimensional matrices, noted:

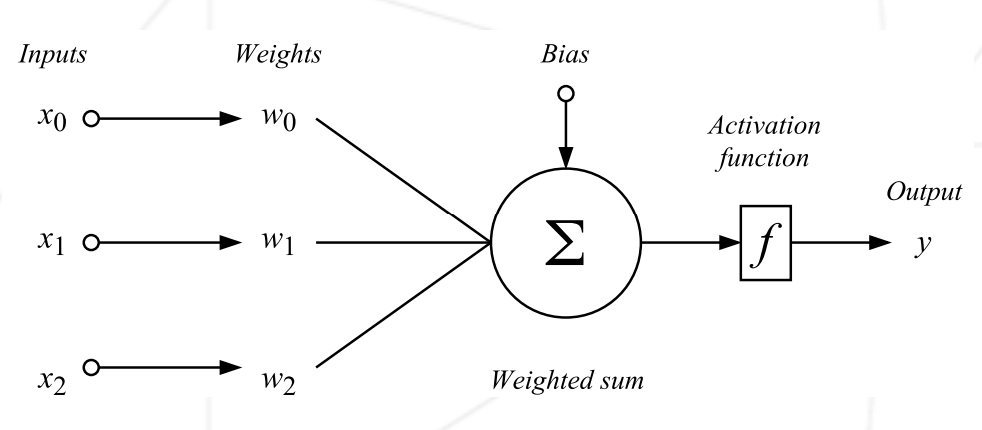

The perceptron is the type of neuron that the multilayer perceptron is composed of. It is defined by one or more input connections, an activation function and a single output. Each connection carries a weight (also called a parameter) that is learned during the training phase.

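Two steps are necessary to get the output of a neuron. The first one computes the weighted sum of the outputs of the previous layer with the weights of the neuron's input connections, which gives $\text{weighted sum} = \sum_{k=0}^{N-1} x_k w_k + b$, where $x_k$ are the outputs of the previous layer, $w_k$ the corresponding weights, $N$ the number of input connections and $b$ the bias of the neuron.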

The second step applies an activation function to this weighted sum. The output of this function is the output of the perceptron, and the activation function can be understood as the threshold above which the neuron is activated.

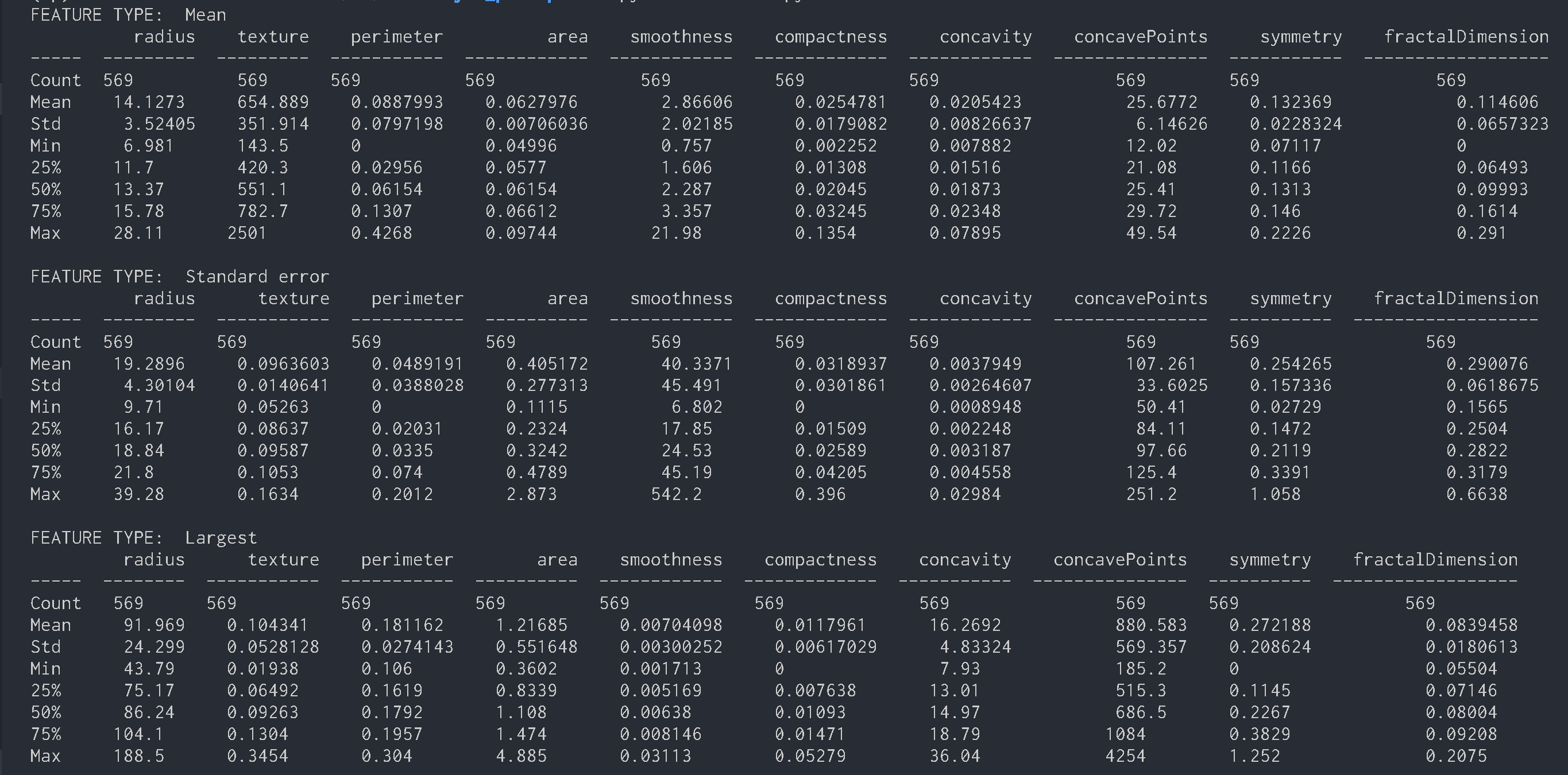
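The two steps can be sketched for a single neuron as below, assuming the sigmoid activation used later in the network. The function name `perceptron_output` and the input values are illustrative only.

```python
import numpy as np


def perceptron_output(x, w, b):
    """Output of a single perceptron: weighted sum, then sigmoid activation."""
    # Step 1: weighted sum of the previous layer's outputs, plus the bias
    z = np.dot(x, w) + b
    # Step 2: the sigmoid activation maps z into (0, 1)
    return 1.0 / (1.0 + np.exp(-z))


x = np.array([1.0, 2.0, 3.0])    # outputs of the previous layer
w = np.array([0.5, -1.0, 0.25])  # weights of the input connections
b = 0.5                          # bias
output = perceptron_output(x, w, b)  # z = -0.25, so output = sigmoid(-0.25), about 0.4378
```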

For training, we use a dataset describing the characteristics of cell nuclei in breast mass samples obtained by fine-needle aspiration. It is a CSV file with 32 columns; the diagnosis column is the label we want to learn from all the other features of an example, and its value is either M (malignant) or B (benign).

Each column is described as follows.

- Column 1 -> ID number
- Column 2 -> Diagnosis (M = malignant, B = benign)
- Columns 3 to 32 -> Ten real-valued features are computed for each cell nucleus:

a) radius (mean of distances from center to points on the perimeter)

b) texture (standard deviation of gray-scale values)

c) perimeter

d) area

e) smoothness (local variation in radius lengths)

f) compactness (perimeter^2 / area - 1.0)

g) concavity (severity of concave portions of the contour)

h) concave points (number of concave portions of the contour)

i) symmetry

j) fractal dimension ("coastline approximation" - 1)

The mean, standard error, and "worst" or largest (mean of the three largest values) of these features were computed for each image, resulting in 30 features. For instance, field 3 is Mean Radius, field 13 is Radius SE, field 23 is Worst Radius.
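Given this layout, the file can be split into a feature matrix and a label vector. This is a minimal sketch that assumes the CSV has no header row and encodes M as 1 and B as 0; the function name `load_wdbc` and that encoding are hypothetical choices, not necessarily what the project's scripts do.

```python
import csv
import io

import numpy as np


def load_wdbc(file_obj):
    """Split WDBC rows into a (n, 30) feature matrix and a 0/1 label vector.

    Assumes no header row: column 1 is the ID, column 2 the diagnosis (M/B),
    columns 3 to 32 the 30 real-valued features.
    """
    features, labels = [], []
    for row in csv.reader(file_obj):
        if not row:
            continue  # skip blank lines
        labels.append(1 if row[1] == "M" else 0)  # malignant -> 1, benign -> 0
        features.append([float(v) for v in row[2:32]])
    return np.array(features), np.array(labels)


# Tiny placeholder sample with the same column layout (values are not real data)
sample = "\n".join([
    "1001,M," + ",".join(["1.0"] * 30),
    "1002,B," + ",".join(["2.0"] * 30),
])
x, y = load_wdbc(io.StringIO(sample))
print(x.shape, y)  # (2, 30) [1 0]
```

On the real file, the same function would be called with an open file, e.g. `with open("dataset/wdbc.csv") as f: x, y = load_wdbc(f)`.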

For more information, see the `dataset/wdbc.names` file.

The following command displays statistical information about the data.

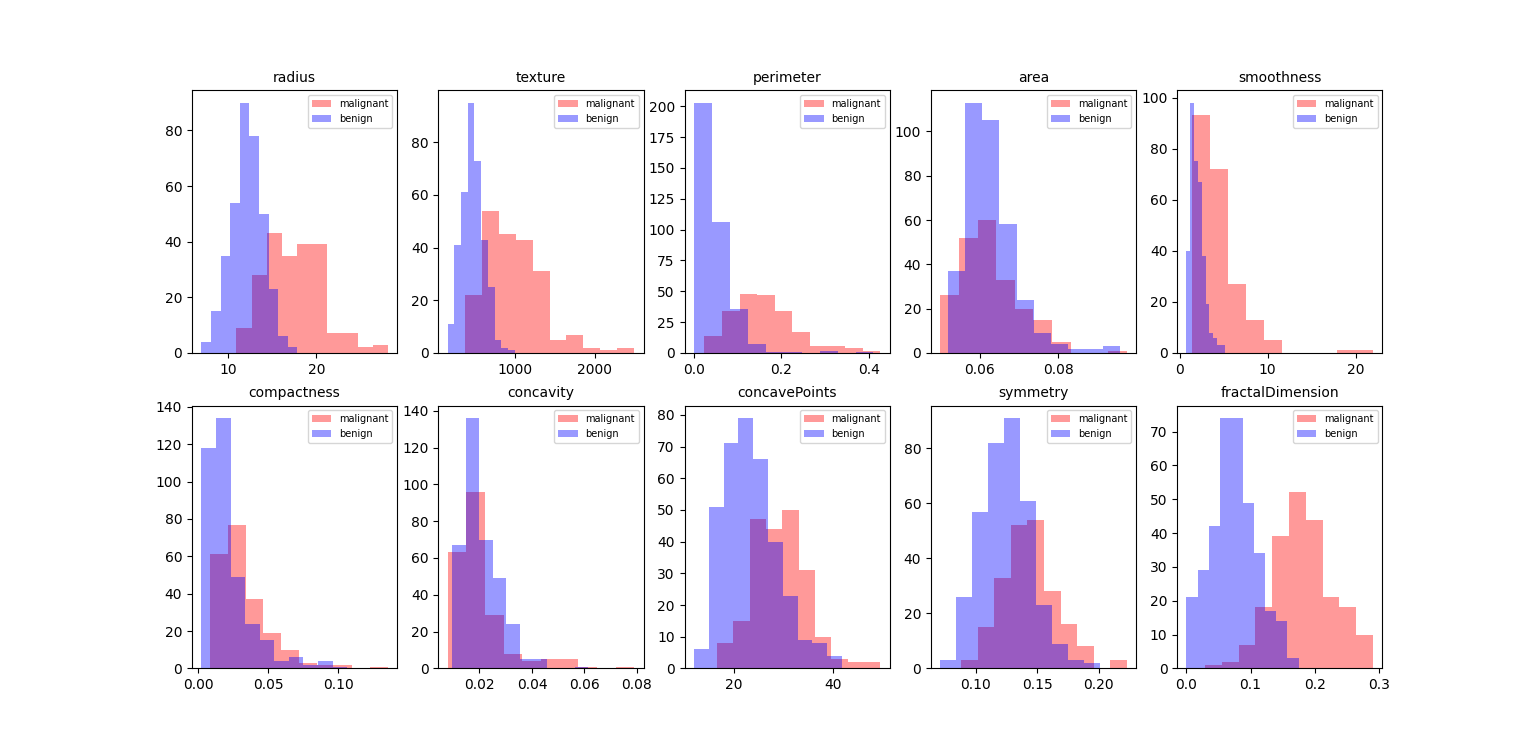

```shell
python3 srcs/describe.py dataset/wdbc.csv
```

The following command plots a histogram of the data.

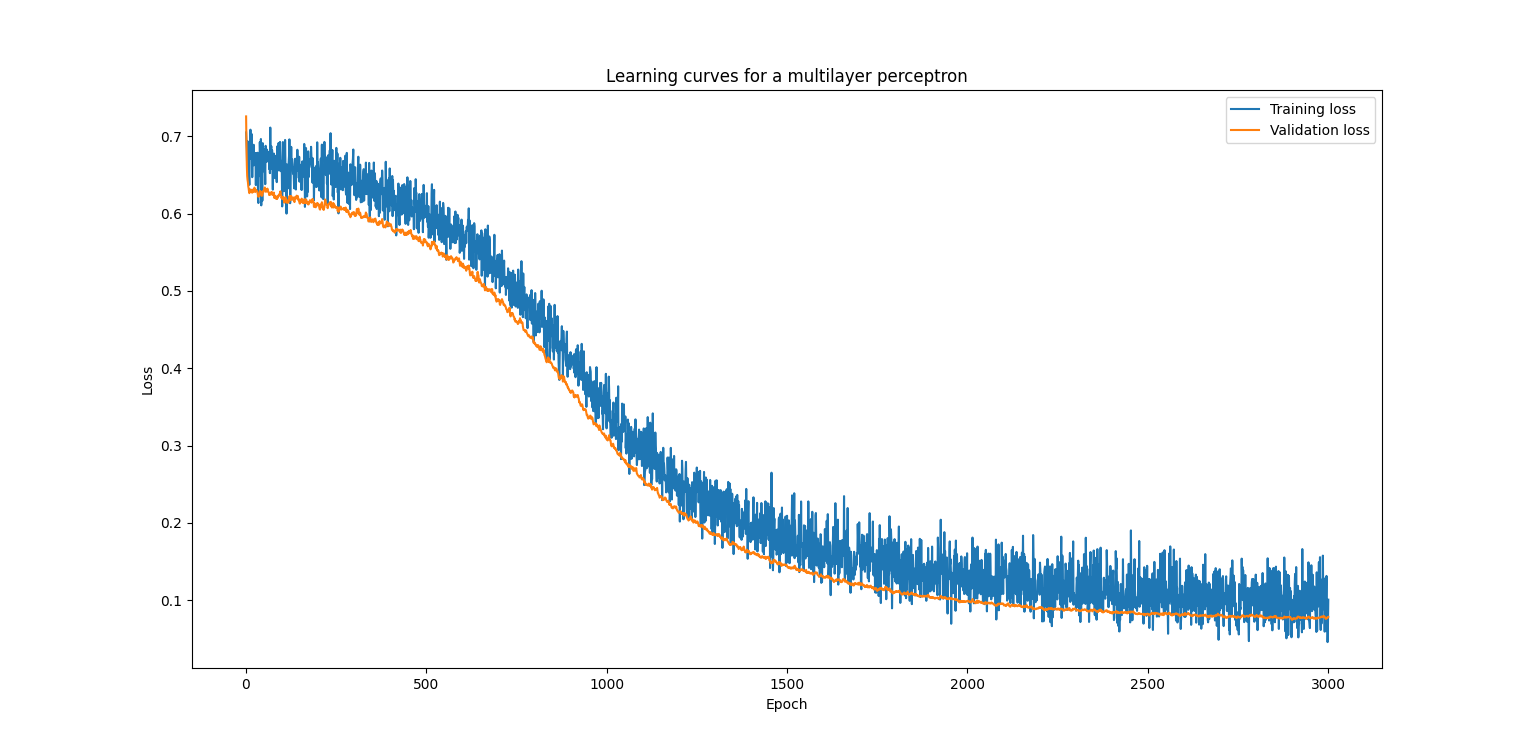

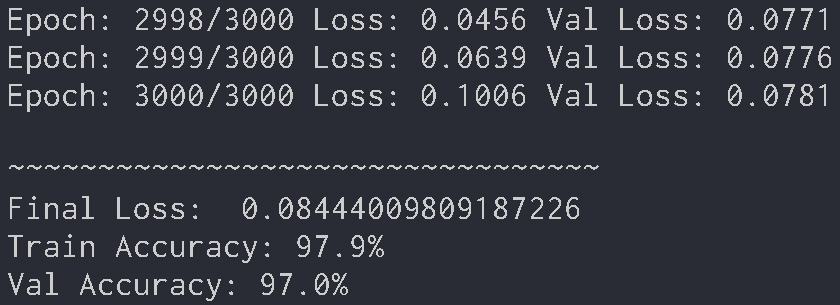

```shell
python3 srcs/histogram.py dataset/wdbc.csv
```

The network used for training has two hidden layers, and its loss is computed with binary cross-entropy.

The gradients are obtained by backpropagation, and the parameters are then updated by gradient descent, with the step size set by the learning rate.
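The loss and the update rule can be sketched as follows: binary cross-entropy $E = -\frac{1}{N}\sum_{n}\left[y_n \log p_n + (1 - y_n)\log(1 - p_n)\right]$ on predicted probabilities $p$ of the positive class, and a plain gradient-descent step $\theta \leftarrow \theta - \eta \, \partial E / \partial \theta$. The function names, the clipping constant and the dictionary layout of the parameters are assumptions for illustration.

```python
import numpy as np


def binary_cross_entropy(p, y, eps=1e-15):
    """Mean binary cross-entropy between probabilities p and 0/1 labels y."""
    p = np.clip(p, eps, 1 - eps)  # keep log() away from 0
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))


def gradient_descent_step(params, grads, learning_rate):
    """Move every parameter one step against its gradient (in place)."""
    for name in params:
        params[name] -= learning_rate * grads[name]


# Illustrative values
p = np.array([0.9, 0.2])
y = np.array([1.0, 0.0])
loss = binary_cross_entropy(p, y)  # -(log 0.9 + log 0.8) / 2, about 0.1643

params = {"W1": np.array([[1.0, -1.0]])}
grads = {"W1": np.array([[0.5, 0.5]])}
gradient_descent_step(params, grads, learning_rate=0.1)
# params["W1"] is now [[0.95, -1.05]]
```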

The network used for training consists of the following layers.

- Affine

- Sigmoid

- Affine

- Sigmoid

- Affine

- Softmax

- BinaryCrossEntropy
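The three Affine layers each need a weight matrix and a bias vector. A minimal sketch of their shapes, assuming 30 input features (columns 3 to 32), the hidden layer size of 50 from the training conditions, and 2 outputs; the Xavier-style scaling and zero biases are assumptions, not necessarily the project's initialization.

```python
import numpy as np


def init_params(input_size=30, hidden_size=50, output_size=2, seed=0):
    """Weights and biases for Affine -> Sigmoid -> Affine -> Sigmoid -> Affine.

    Assumption: weights drawn from N(0, 1) scaled by sqrt(1 / fan_in), zero biases.
    """
    rng = np.random.default_rng(seed)
    sizes = [input_size, hidden_size, hidden_size, output_size]
    params = {}
    for i, (n_in, n_out) in enumerate(zip(sizes[:-1], sizes[1:]), start=1):
        # W_i connects a layer of n_in neurons to a layer of n_out neurons
        params[f"W{i}"] = rng.standard_normal((n_in, n_out)) * np.sqrt(1.0 / n_in)
        params[f"b{i}"] = np.zeros(n_out)
    return params


for name, value in init_params().items():
    print(name, value.shape)
```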

Organizing each layer as a class with its own `forward` and `backward` methods makes the network more flexible. For example, the Sigmoid layer:

```python
import numpy as np


class Sigmoid:
    def __init__(self):
        self.out = None  # forward output, cached for the backward pass

    def forward(self, x, is_train):
        res = 1 / (1 + np.exp(-x))
        if is_train:
            self.out = res
        return res

    def backward(self, dx):
        # derivative of the sigmoid: out * (1 - out)
        res = dx * (1 - self.out) * self.out
        return res
```

The forward and backward passes through all the layers can then be computed with `for` loops.

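```python
# Methods of the network class (the class itself is not shown)
def predict(self, x, layers, is_train):
    # Forward pass: each layer's output is the next layer's input
    res = x
    for layer in layers:
        res = layer.forward(res, is_train)
    return res


def backward(self, label, layers):
    # Backward pass: the last (loss) layer receives the label, and each
    # layer passes the gradient with respect to its input to the layer before it
    dx = label
    for layer in reversed(layers):
        dx = layer.backward(dx)
    return dx
```

If the input value is $x$, the output is $y$ and the loss is $L$, each layer computes the following forward and backward passes.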

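- Affine layer

$$y = xw + b$$

$$\frac{\partial L}{\partial x} = \frac{\partial L}{\partial y} w^{T}, \qquad \frac{\partial L}{\partial w} = x^{T} \frac{\partial L}{\partial y}, \qquad \frac{\partial L}{\partial b} = \sum_{n} \left(\frac{\partial L}{\partial y}\right)_{n}$$

$w$ is a weight, $b$ is a bias, and the sum runs over the examples $n$ of the batch.

- Sigmoid layer

$$y = \frac{1}{1 + e^{-x}}, \qquad \frac{\partial L}{\partial x} = \frac{\partial L}{\partial y} \, y \, (1 - y)$$

- Softmax with loss layer

$$y_k = \frac{e^{x_k}}{\sum_{i} e^{x_i}}, \qquad L = -\sum_{k} t_k \log y_k, \qquad \frac{\partial L}{\partial x_k} = y_k - t_k$$

$t$ is a (one-hot) label. With two output classes, this cross-entropy is the binary cross-entropy used for training.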
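Following the same interface as the `Sigmoid` class (`forward(x, is_train)` and `backward(dx)`), the Affine and Softmax-with-loss formulas can be sketched as classes. This is a minimal sketch: the attribute names `dw` and `db`, the `loss` method, and averaging the loss over the batch (hence the division by the batch size in `backward`) are assumptions rather than the project's exact code.

```python
import numpy as np


class Affine:
    """Fully connected layer: y = x w + b."""

    def __init__(self, w, b):
        self.w, self.b = w, b
        self.x = None
        self.dw, self.db = None, None  # gradients, filled in by backward

    def forward(self, x, is_train):
        if is_train:
            self.x = x  # cache the input for the backward pass
        return x @ self.w + self.b

    def backward(self, dy):
        self.dw = self.x.T @ dy   # dL/dw = x^T dL/dy
        self.db = dy.sum(axis=0)  # dL/db = dL/dy summed over the batch
        return dy @ self.w.T      # dL/dx = dL/dy w^T


class SoftmaxWithLoss:
    """Softmax output followed by the cross-entropy averaged over the batch."""

    def __init__(self):
        self.y = None

    def forward(self, x, is_train):
        # Subtracting the row maximum avoids overflow without changing the softmax
        e = np.exp(x - x.max(axis=1, keepdims=True))
        y = e / e.sum(axis=1, keepdims=True)
        if is_train:
            self.y = y
        return y

    def loss(self, t):
        # Mean cross-entropy; with two classes this is the binary cross-entropy
        return -np.sum(t * np.log(self.y + 1e-15)) / t.shape[0]

    def backward(self, t):
        # Receives the one-hot label t (the backward loop starts with dx = label);
        # dividing by the batch size matches the mean taken in loss()
        return (self.y - t) / t.shape[0]


# Usage on a random batch of 4 examples with 3 features and 2 classes
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3))
t = np.eye(2)[[0, 1, 1, 0]]  # one-hot labels
affine = Affine(rng.standard_normal((3, 2)), np.zeros(2))
head = SoftmaxWithLoss()
head.forward(affine.forward(x, True), True)
dx = affine.backward(head.backward(t))  # affine.dw and affine.db are now set
```

Comparing `affine.dw` against a finite-difference estimate of the loss is a quick way to check that the backward formulas are implemented consistently with the forward pass.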

The training was conducted under the following conditions.

- Iterations -> 15000
- Batch size -> 100
- Learning rate -> 0.0001
- Hidden layer size -> 50
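With a batch size of 100, each iteration works on a random subset of the training examples. A minimal sketch of drawing one mini-batch, assuming sampling without replacement within a batch via `numpy.random.Generator.choice` (the project may instead shuffle once per epoch); `sample_batch` is a hypothetical name and the data below is random placeholder data, not the real dataset.

```python
import numpy as np

BATCH_SIZE = 100  # from the training conditions


def sample_batch(x, t, batch_size, rng):
    """Draw a random mini-batch; no example appears twice within one batch."""
    idx = rng.choice(x.shape[0], size=batch_size, replace=False)
    return x[idx], t[idx]


# Placeholder data: 400 random examples with 30 features and one-hot labels
rng = np.random.default_rng(0)
x_train = rng.standard_normal((400, 30))
t_train = np.eye(2)[rng.integers(0, 2, size=400)]
x_batch, t_batch = sample_batch(x_train, t_train, BATCH_SIZE, rng)
print(x_batch.shape, t_batch.shape)  # (100, 30) (100, 2)
```

In training, a fresh batch would be drawn on each of the 15000 iterations, followed by the forward pass, backpropagation and the gradient-descent update.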

You can train a model on the data by running the command below.

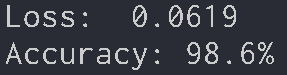

```shell
python3 srcs/train.py --train_data_path dataset/wdbc.csv --output_param_path model/param.json
```

You can make predictions on the test data and evaluate your model by running the command below.

```shell
python3 srcs/predict.py --test_data_path dataset/wdbc_test.csv --param_path model/param.json
```
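Saving the parameters to JSON and scoring predictions can be sketched as follows. This assumes the parameters are a dictionary of NumPy arrays, that the model outputs one probability per class, and that labels are class indices; the helper names and the JSON layout are hypothetical, since the format actually written by `train.py` is not described here.

```python
import json

import numpy as np


def params_to_json(params):
    # NumPy arrays are not JSON-serializable, so store them as nested lists
    return json.dumps({name: value.tolist() for name, value in params.items()})


def params_from_json(text):
    return {name: np.array(value) for name, value in json.loads(text).items()}


def accuracy(probs, labels):
    """Fraction of examples whose most probable class equals the label index."""
    return float(np.mean(np.argmax(probs, axis=1) == labels))


params = {"W1": np.array([[0.1, -0.2]]), "b1": np.zeros(2)}
restored = params_from_json(params_to_json(params))

probs = np.array([[0.2, 0.8], [0.9, 0.1], [0.4, 0.6]])
labels = np.array([1, 0, 0])
print(accuracy(probs, labels))  # 2 of 3 predictions correct, about 0.667
```

To persist the parameters, the JSON string would be written to the output file, e.g. `with open("model/param.json", "w") as f: f.write(params_to_json(params))`.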