
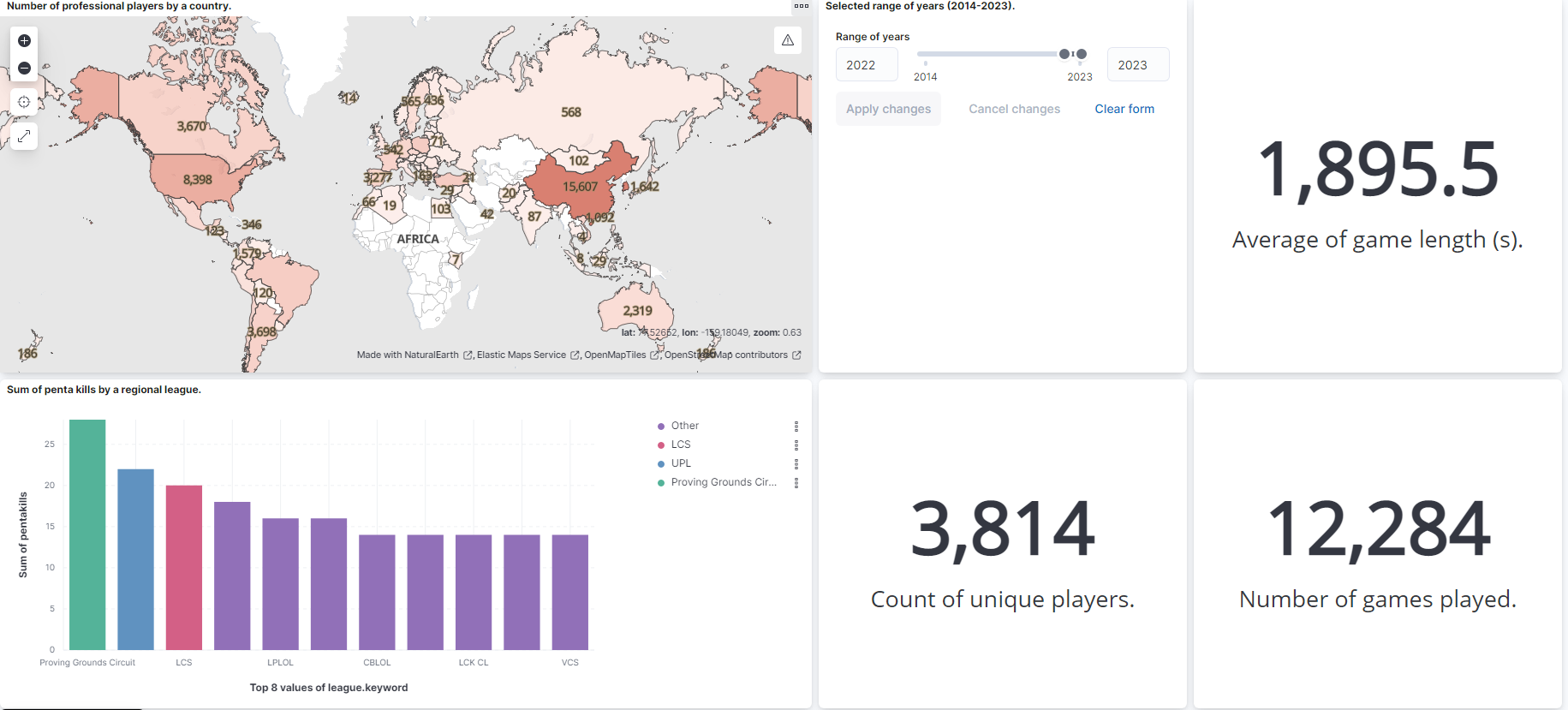

This project aims to perform ETL on professional League of Legends e-sport match data and prepare it for further analysis.


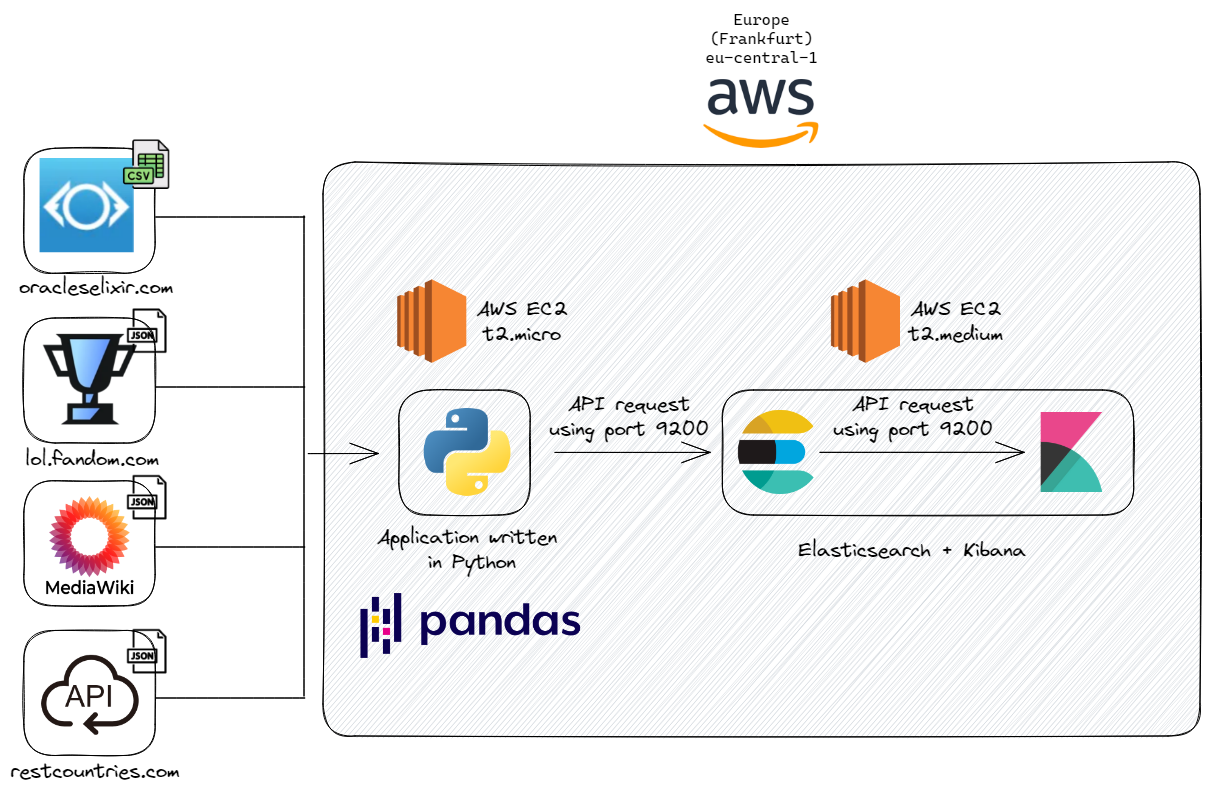

The primary data source is Oracle's Elixir, which provides professional League of Legends e-sport match data from 2014 to the present. It is updated daily, and the matches from each calendar year are stored in a separate .csv file. Definitions for the data in these files can be found on, or inferred from, the Definitions page. To better understand the dataset, note that each game consists of twelve rows: ten player rows (five players on each of the two teams) and two team rows.
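The twelve-row layout can be checked with a quick group-by. The sketch below uses a tiny hand-made frame rather than real Oracle's Elixir data. It relies on the gameid and participantid columns mentioned later in this README, and it assumes that participant ids 1 to 10 denote players while 100 and 200 denote the two team rows; that numbering is an assumption about the file layout, not something stated here.

```python
import pandas as pd

# Toy stand-in for a single game; the values are illustrative only.
# Assumption: participantid 1-10 are players, 100 and 200 are the team rows.
rows = [
    {"gameid": "GAME_1", "participantid": pid}
    for pid in [*range(1, 11), 100, 200]
]
df = pd.DataFrame(rows)

# Number of rows recorded for each game.
rows_per_game = df.groupby("gameid").size()

# Split the rows into player-level and team-level records.
player_rows = df[df["participantid"] <= 10]
team_rows = df[df["participantid"] >= 100]

print(rows_per_game.to_dict())
print(len(player_rows), len(team_rows))
```

Separating player rows from team rows early helps avoid double counting when aggregating statistics per game.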


Additional e-sport player data is extracted from the Leaguepedia API. This step makes it easy to enrich the data further by fetching other external resources. For example, geographic data enrichment adds a country code or latitude and longitude to an existing dataset, enabling Kibana's Maps to visualize and explore the data.


For the purpose of the project, it's important to mention that game and match have different meanings. A game is a single contest that is won by destroying the enemy's Nexus, whereas a match comprises one or more games. It can take multiple games to win the entire match. Usually, LoL e-sport matches follow the Best-of-N format (where N is the maximum number of games), in which a team needs to win more than half of the N games. For example, 3-0 and 2-3 are both valid final scores for a Bo5 match.
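The Best-of-N rule can be expressed as a small check. This is a minimal sketch, assuming N is odd; the function name is_valid_score is hypothetical and not part of the project.

```python
def is_valid_score(best_of: int, score_a: int, score_b: int) -> bool:
    """Return True if score_a-score_b is a finished Best-of-N result."""
    if best_of < 1 or best_of % 2 == 0:
        # Assumption: only odd series lengths (Bo1, Bo3, Bo5) are considered.
        raise ValueError("best_of must be a positive odd number")
    wins_needed = best_of // 2 + 1  # more than half of the games
    winner, loser = max(score_a, score_b), min(score_a, score_b)
    # The match ends as soon as one side reaches wins_needed,
    # so the losing side always has fewer wins than that.
    return winner == wins_needed and 0 <= loser < wins_needed


print(is_valid_score(5, 3, 0))
print(is_valid_score(5, 2, 3))
print(is_valid_score(5, 2, 2))
```

A score such as 2-2 in a Bo5 is rejected because neither team has reached the three wins required.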

Diagram created using Excalidraw.


- Extract the match data from Oracle's Elixir.

- Enrich by extracting and transforming the data:
  - players' information using the Leaguepedia API,
  - country geographic coordinates from GeoData MediaWiki,
  - numeric ISO 3166-1 country codes using Rest Countries.

- Deploy the application and database on AWS EC2 instances.

- Load the data to Elasticsearch.

- Explore the data and create a dashboard using Kibana.

Please contact me directly to gain access to the database and related dashboards (Viewer role).

    📦Esports_data_pipeline
    ┣ 📂Elasticsearch
    ┃ ┣ 📜dashboards.txt # - Backup for dashboards created in Kibana.
    ┃ ┣ 📜index_template.txt # - Template settings and mappings for indices.
    ┃ ┣ 📜mapping.txt
    ┃ ┗ 📜visualizations.txt
    ┣ 📂images
    ┃ ┣ 📜architecture_diagram_v2.png
    ┃ ┣ 📜diagram_architecture.jpg
    ┃ ┗ 📜general_info_dashboard.png
    ┣ 📂src
    ┃ ┣ 📜data_enricher.py # - Data enrichment with additional extractions and data transformations.
    ┃ ┣ 📜data_extractor.py # - Extraction of main data.
    ┃ ┣ 📜elasticsearch_connector.py # - Connection between Python and Elasticsearch and data loading.
    ┃ ┗ 📜utils.py # - Utility functions related to the project.
    ┣ 📜.env
    ┣ 📜.gitignore
    ┣ 📜READme.md
    ┣ 📜config.py # - Configuration file that contains constant variables.
    ┣ 📜main.py
    ┗ 📜requirements.txt

The core of the code is located in the main.py file.

    if __name__ == "__main__":
        elasticsearch_connector = ElasticsearchConnector(local=False)
        data = GetData(year=["2022"])
        data_enricher = DataEnricher(data)
        enriched_data = data_enricher.enrich_data(data.df_matches)
        elasticsearch_connector.send_data(message_list=enriched_data, batch_size=5000)

All relevant classes and functions designed for the project are described below.

elasticsearch_connector = ElasticsearchConnector()

ElasticsearchConnector class - sets up and manages the connection between Python and Elasticsearch. The connection is made more convenient by the Python Elasticsearch Client, a wrapper around Elasticsearch's REST API. The client also contains a set of helpers for tasks like bulk indexing, which speeds up loading the data into the database. To connect to Elasticsearch on localhost, set the argument local=True. By default, the constructor method __init__(self, local: bool = False) connects to the URL provided inside config.py.

data = GetData(year=[2023], limits=None)

GetData class - downloads the .csv files with e-sport matches data:

- year - the list of years to download, from 2014 to 2023 (inclusive). Defaults to the current year.
- limits - narrows down the number of rows extracted from a data frame. Defaults to None, in which case the entire data frame is processed.

The e-sport match data is downloaded and stored as a pandas.DataFrame object.

Next, the provided list of years is matched against the URL links stored inside config.py. Each .csv file is stored on Google Drive and has a unique id.

The helper function convert_years_to_url(years: list, current_year: int) -> list performs this conversion and returns the unique list of URLs. If the provided year is not inside CSV_FILES dictionary.

Afterwards, the auxiliary function merge_csv_files concatenates the files and stores them as a single data frame. The method get_player_name(self) -> list returns a unique, non-empty list of players from the downloaded match data.
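The year-to-URL step described above might look roughly like the sketch below. It is not the project's implementation: the file ids are placeholders, the Google Drive URL pattern is an assumed direct-download form, and skipping years that are missing from CSV_FILES is an assumption, since the behaviour for unknown years is not specified here.

```python
# Hypothetical stand-in for the CSV_FILES dictionary in config.py:
# year -> Google Drive file id (the ids below are placeholders).
CSV_FILES = {2022: "FILE_ID_2022", 2023: "FILE_ID_2023"}

# Assumed direct-download URL pattern; the project may build links differently.
BASE_URL = "https://drive.google.com/uc?id={file_id}"


def convert_years_to_url(years: list, current_year: int) -> list:
    """Map years to unique download URLs, keeping first-seen order."""
    urls = []
    # Fall back to the current year when no years are given.
    for year in years or [current_year]:
        # Years may arrive as strings ("2022") or integers (2023).
        file_id = CSV_FILES.get(int(year))
        if file_id is None:
            # Assumption: unknown years are skipped rather than raising.
            continue
        url = BASE_URL.format(file_id=file_id)
        if url not in urls:
            urls.append(url)
    return urls


print(convert_years_to_url(["2022", 2022, 2023], current_year=2023))
```

Normalising each year with int() lets the helper accept both year=["2022"] and year=[2023], the two forms used in this README.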

data_enricher = DataEnricher(data)

enriched_data = data_enricher.enrich_data(data.df_matches)

DataEnricher class - enriches the data by handling additional extractions and data transformations. enrich_data() is the main method; it transforms and prepares the data for loading into the database. Most of the data extractions are done in the class constructor.

extract_players(self) -> list: - Extracts player data from Leaguepedia API.

get_country_coordinates(self) -> dict: - Collects geographical coordinates as a GeoPoint(longitude, latitude) for a unique list of countries using the MediaWiki API.

When the coordinates are not found for a specific country, get_country_coordinates(self) -> dict backfills the data by sending a GET request to the Rest Countries API. Finally, the coordinates are appended to the main data frame in the function append_coordinates_to_country().

get_country_codes(self) -> dict: - Fetches country codes in the ISO 3166-1 numeric encoding system from the Rest Countries API. This makes it possible to visualize the geo-data in Kibana later.

    def enrich_data(self, main_df: pd.DataFrame) -> list:
        append_player_info(main_df, self.complementary_dict)
        append_country_to_player(main_df, self.complementary_dict)
        self.append_geo_coordinates(main_df)
        self.append_country_codes(main_df)
        append_id(main_df)
        return convert_to_json(main_df)

append_player_info(df: pd.DataFrame, players_dict: dict): - Adds a column with the player info dictionary for each player occurring in the 'playername' column.

append_country_to_player(df: pd.DataFrame, player_dict: dict) -> pd.DataFrame: - Adds a new column with a country name for a player.

append_geo_coordinates(self, df_match: pd.DataFrame) -> pd.DataFrame: - Adds a new column with geographic coordinates (longitude and latitude).

append_country_codes(self, df_match: pd.DataFrame) -> pd.DataFrame: - Adds a new column with country codes in ISO 3166-1 numeric encoding system.

add_id_column() - ensures a unique id for each row of the data by concatenating gameid and participantid for a given game.

convert_to_json(df: pd.DataFrame) -> list: - Converts a data frame to JSON. It prepares the data for loading into Elasticsearch by calling pandas.DataFrame.to_json with the table value for the orient argument, which produces a dictionary-like schema.
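The last two steps, building a unique id and converting the frame to JSON records, can be sketched as follows. The rows are made up, the underscore separator is an assumption, and index=False is an illustrative choice; the project's own helpers may differ in all three respects.

```python
import json

import pandas as pd

# Illustrative rows only; the column names come from this README,
# the values are made up.
df = pd.DataFrame(
    {
        "gameid": ["GAME_1", "GAME_1", "GAME_2"],
        "participantid": [1, 2, 1],
        "playername": ["PlayerA", "PlayerB", "PlayerA"],
    }
)

# Assumption: an underscore separator between gameid and participantid.
df["id"] = df["gameid"].astype(str) + "_" + df["participantid"].astype(str)

# orient="table" yields {"schema": {...}, "data": [...]};
# index=False keeps the row index out of each record.
payload = json.loads(df.to_json(orient="table", index=False))
records = payload["data"]  # list of dictionaries, one per row

print(records[0])
```

Each entry in records is a plain dictionary, which matches the list-of-dictionaries shape expected by message_list in send_data.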

send_data(message_list=enriched_data, batch_size=5000, index: str = f"esports-data-{TODAY}")

Takes three keyword arguments:

- message_list - list of dictionaries containing the data.

- batch_size - splits the list of items into batches of the specified size.

  Both of these arguments are passed to create_msg_batches(messages: list, batch_size: int) -> list, which utilizes the bulk helper function to optimize the speed of loading the data into Elasticsearch.

- index - specifies the name of the index where the data is loaded. It's recommended to provide an index name matching the pattern "esports-data*", so that the created template settings and mappings for indices are applied. This ensures consistent data types for the provided mappings when loading into Elasticsearch.
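The batching idea can be illustrated without a running cluster. The sketch below is an assumption about how such a helper might be written, not the project's code: it keeps the batching pure and uses a hypothetical to_actions function to wrap documents in the action dictionaries (_index, _id, _source) accepted by the Python client's bulk helper. It also assumes every document carries an id field.

```python
from typing import Iterator


def create_msg_batches(messages: list, batch_size: int) -> list:
    """Split messages into consecutive batches of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [
        messages[start:start + batch_size]
        for start in range(0, len(messages), batch_size)
    ]


def to_actions(batch: list, index: str) -> Iterator[dict]:
    """Wrap each document in a bulk action (hypothetical helper)."""
    for doc in batch:
        # Assumption: every document has an "id" field to use as _id.
        yield {"_index": index, "_id": doc["id"], "_source": doc}


docs = [{"id": f"GAME_1_{n}", "kills": n} for n in range(1, 6)]
batches = create_msg_batches(docs, batch_size=2)
actions = list(to_actions(batches[0], index="esports-data-example"))

# With a live client, each batch of actions could then be sent with
# elasticsearch.helpers.bulk(client, actions).
print(len(batches), actions[0]["_id"])
```

Using the row id as _id means that re-running the load overwrites existing documents instead of duplicating them.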

AWS EC2 is the Amazon Web Services compute service that lets you create and run virtual machines in the cloud. You can follow the official installation guide to set up EC2. For Elasticsearch and Kibana to perform well, a machine with at least 4 GB of RAM and two virtual CPU cores is recommended. Choosing the t2.medium instance type during setup meets these requirements.

To connect to a Linux EC2 instance, you can use SSH. On Unix-like operating systems, you can use the terminal. On Windows, follow one of these guides: OpenSSH or PuTTY.

To reduce the costs of a running instance, make sure to stop it after working with the application. Keeping a t2.medium instance running for the entire month will exceed the AWS free tier limits.

Install Elasticsearch using a suitable installation guide; if you follow this setup guide, you should install Elasticsearch with RPM. The same goes for Kibana installation. Finally, create the enrollment token to configure Kibana instances to communicate with an existing Elasticsearch cluster using this installation guide.

To load the data into Elasticsearch, create a .env file in the main directory, as shown in the project structure. An example .env file with Elasticsearch credentials looks like this:

    URL = "X.XX.XXX.XXX"
    PORT = 9200
    ELASTIC_USERNAME = "XXXXXXX"
    ELASTIC_PASSWORD = "XXXXXXX"

To ensure a proper setup, you need to keep quotation marks " " for those values.

URL = "X.XX.XXX.XXX" - The IP address of your Elasticsearch host, for example, the address of the AWS EC2 instance with Elasticsearch set up. It can be omitted when running the script in a local environment with Elasticsearch installed; in that case, use ElasticsearchConnector(local=True) inside main.py.

PORT = 9200 - The default port for communicating with Elasticsearch. It is used for all API calls over HTTP, including search, aggregations, and anything else that uses an HTTP request.

ELASTIC_USERNAME = "XXXXXXX" - default username.

ELASTIC_PASSWORD = "XXXXXXX" - password provided during Elasticsearch installation.
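One way the values above could be gathered into connection settings is sketched below. The function name elasticsearch_settings is hypothetical and not taken from the project. The sketch assumes the .env file has already been loaded into environment variables, for example by a loader such as python-dotenv, which is an assumption about the project's dependencies.

```python
import os


def elasticsearch_settings(env=os.environ, local: bool = False) -> dict:
    """Collect connection settings from environment-style variables."""
    # URL may be omitted when connecting to a local Elasticsearch.
    host = "localhost" if local else env["URL"]
    return {
        "host": host,
        # Fall back to the default Elasticsearch HTTP port.
        "port": int(env.get("PORT", 9200)),
        "username": env["ELASTIC_USERNAME"],
        "password": env["ELASTIC_PASSWORD"],
    }


# Example with a plain dictionary standing in for the environment.
example_env = {
    "URL": "X.XX.XXX.XXX",
    "PORT": "9200",
    "ELASTIC_USERNAME": "XXXXXXX",
    "ELASTIC_PASSWORD": "XXXXXXX",
}
print(elasticsearch_settings(example_env)["port"])
```

Passing the environment as an argument keeps the function easy to test without touching real credentials.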

To download and run the project on your local machine, follow these steps:

git clone https://github.com/HerrKurz/Esports_Data_Pipeline.git

Creates a copy of the repository.

cd Esports_Data_Pipeline/

Moves into the project directory.

python3 -m venv venv

Creates a virtual environment that allows you to manage separate package installations for this project.

source venv/bin/activate

Activates the virtual environment, which puts the environment-specific python and pip executables into your shell's PATH.

pip install -r requirements.txt

Installs the packages at the versions specified in requirements.txt.

python3 main.py

Finally, runs the script.

Note that it might be necessary to execute python instead of python3, depending on your Unix-like distribution.

- Use a data orchestration tool, such as Apache Airflow or Prefect, to improve control over the data flow. Improve logging and automate the script to run the pipeline daily.

- Update the setup process using Terraform to provision and reuse infrastructure as code.

- Add teams' data from Leaguepedia API.

- Backfill missing players' data using Liquipedia API.

- Use social media links associated with a player/team to gain insight into their social media reach, following etc.

- Calculate the relative skill levels of players using the Elo rating system.

- Create a classification model that predicts whether a given team will win the upcoming match.