[2024-03-12] 🔥🔥🔥 The evaluation code has been released! Feel free to evaluate your own Video LLMs.

- TempCompass encompasses a diverse set of temporal aspects (left) and task formats (right) to comprehensively evaluate the temporal perception capability of Video LLMs.


- We construct conflicting videos to prevent the models from taking advantage of single-frame bias and language priors.
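As a rough illustration of why conflicting videos defeat single-frame shortcuts, here is a minimal sketch that treats a video as a plain Python list of frames. The helpers `reverse_video`, `speed_up` and `concat_videos` are hypothetical and are not taken from utils/process_videos.py; they only show transformations that leave the frame content largely unchanged while changing what happens over time.

```python
from typing import List, Sequence, TypeVar

# Hypothetical frame type: in practice a frame would be an image array.
Frame = TypeVar("Frame")


def reverse_video(frames: Sequence[Frame]) -> List[Frame]:
    """Play the clip backwards: identical frames, opposite temporal direction."""
    return list(reversed(frames))


def speed_up(frames: Sequence[Frame], factor: int) -> List[Frame]:
    """Keep every `factor`-th frame, so the clip plays `factor` times faster."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    return list(frames[::factor])


def concat_videos(first: Sequence[Frame], second: Sequence[Frame]) -> List[Frame]:
    """Join two clips; swapping the arguments swaps the order of events."""
    return list(first) + list(second)


if __name__ == "__main__":
    clip = ["f0", "f1", "f2", "f3"]
    print(reverse_video(clip))  # same frames, reversed order
    print(speed_up(clip, 2))
    print(concat_videos(["pick_up"], ["drop"]), concat_videos(["drop"], ["pick_up"]))
```

A reversed clip contains exactly the same frames as the original, so a model that answers from any single frame (or from which answer sounds most plausible) cannot tell the two apart, while the correct answer about direction or order flips.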


🤔 Can your Video LLM correctly answer the following question for both videos?

What is happening in the video?

A. A person drops down the pineapple

B. A person pushes forward the pineapple

C. A person rotates the pineapple

D. A person picks up the pineapple

To begin with, clone this repository and install the required packages:

git clone https://github.com/llyx97/TempCompass.git

cd TempCompass

pip install -r requirements.txt

1. Task Instructions

The task instructions can be found in questions/.

2. Videos

Run the following commands. The videos will be saved to videos/.

cd utils

python download_video.py # Download raw videos

python process_videos.py # Construct conflicting videos

We use Video-LLaVA as an example to illustrate how to conduct MLLM inference on our benchmark.

Run the following commands. The prediction results will be saved to predictions/video-llava/<task_type>.

cd run_video_llava

python inference_dataset.py --task_type <task_type> # select <task_type> from multi-choice, yes_no, caption_matching, captioning

After obtaining the MLLM predictions, run the following commands to conduct automatic evaluation. Remember to set your own $OPENAI_API_KEY in utils/eval_utils.py.


Multi-Choice QA

python eval_multi_choice.py --video_llm video-llava

Yes/No QA

python eval_yes_no.py --video_llm video-llava

Caption Matching

python eval_caption_matching.py --video_llm video-llava

Caption Generation

python eval_captioning.py --video_llm video-llava
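If you want to run inference and evaluation for all four task types in one go, the commands above can be chained from a small driver script. This is a minimal sketch, not part of the repository: `build_commands` and `run_all` are hypothetical names, and the script assumes the eval_*.py scripts are run from the repository root (the README does not state their working directory). It only reuses the script names and the `--task_type` / `--video_llm` flags shown above, and $OPENAI_API_KEY must still be set in utils/eval_utils.py.

```python
import subprocess
import sys
from pathlib import Path
from typing import List, Tuple

# Task types accepted by run_video_llava/inference_dataset.py, as listed in the README.
TASK_TYPES = ["multi-choice", "yes_no", "caption_matching", "captioning"]

# Evaluation script for each task type, as listed in the README.
EVAL_SCRIPTS = {
    "multi-choice": "eval_multi_choice.py",
    "yes_no": "eval_yes_no.py",
    "caption_matching": "eval_caption_matching.py",
    "captioning": "eval_captioning.py",
}


def build_commands(repo_root: Path, video_llm: str = "video-llava") -> List[Tuple[Path, List[str]]]:
    """Return (working directory, argv) pairs: every inference run first, then every evaluation.

    Assumption: the eval_*.py scripts live in, and are run from, the repository root.
    """
    commands: List[Tuple[Path, List[str]]] = []
    for task in TASK_TYPES:
        commands.append((repo_root / "run_video_llava",
                         [sys.executable, "inference_dataset.py", "--task_type", task]))
    for task in TASK_TYPES:
        commands.append((repo_root,
                         [sys.executable, EVAL_SCRIPTS[task], "--video_llm", video_llm]))
    return commands


def run_all(repo_root: Path, dry_run: bool = True) -> None:
    """Print each command; actually execute it only when dry_run is False."""
    for cwd, argv in build_commands(repo_root):
        print(f"[{cwd}] {' '.join(argv)}")
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(argv, cwd=cwd, check=True)


if __name__ == "__main__":
    run_all(Path("."), dry_run=True)
```

The dry-run default prints the planned commands so you can check the working directories against your checkout before spending API calls on evaluation.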

The results of each data point will be saved to auto_eval_results/video-llava/<task_type>.json and the overall results on each temporal aspect will be printed out as follows:

{'action': 70.4, 'direction': 32.2, 'speed': 38.2, 'order': 41.4, 'attribute_change': 39.9, 'avg': 44.7}

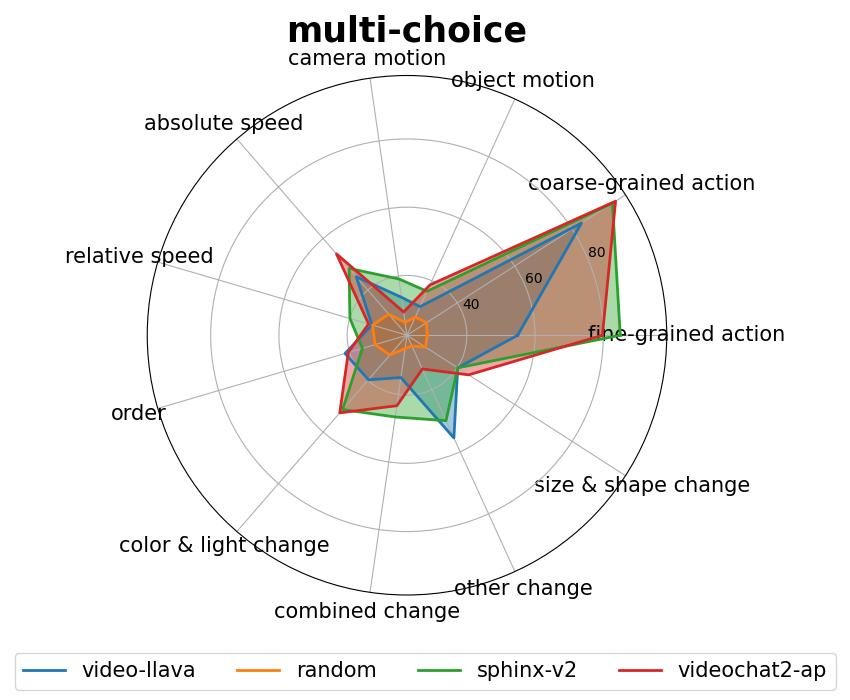

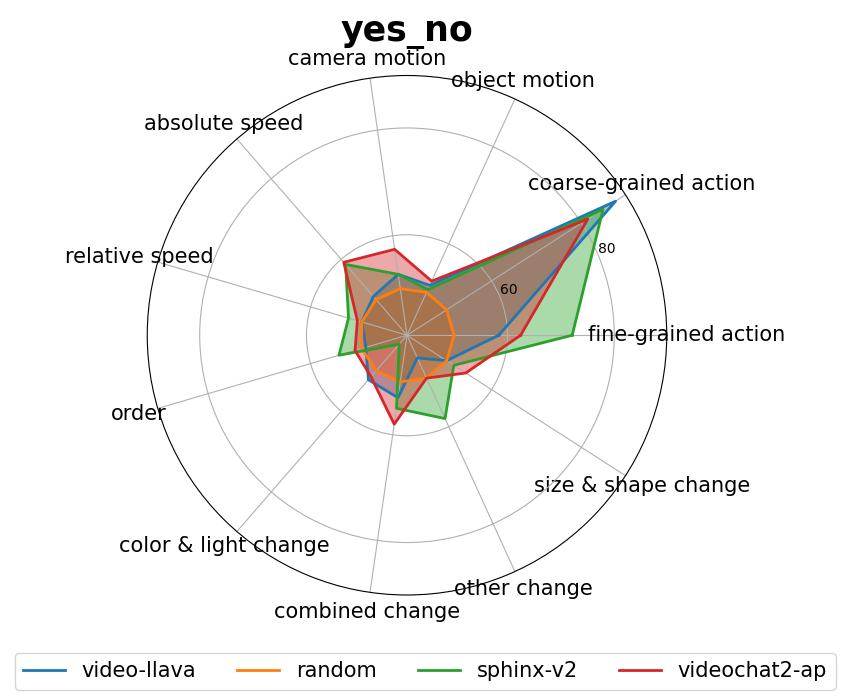

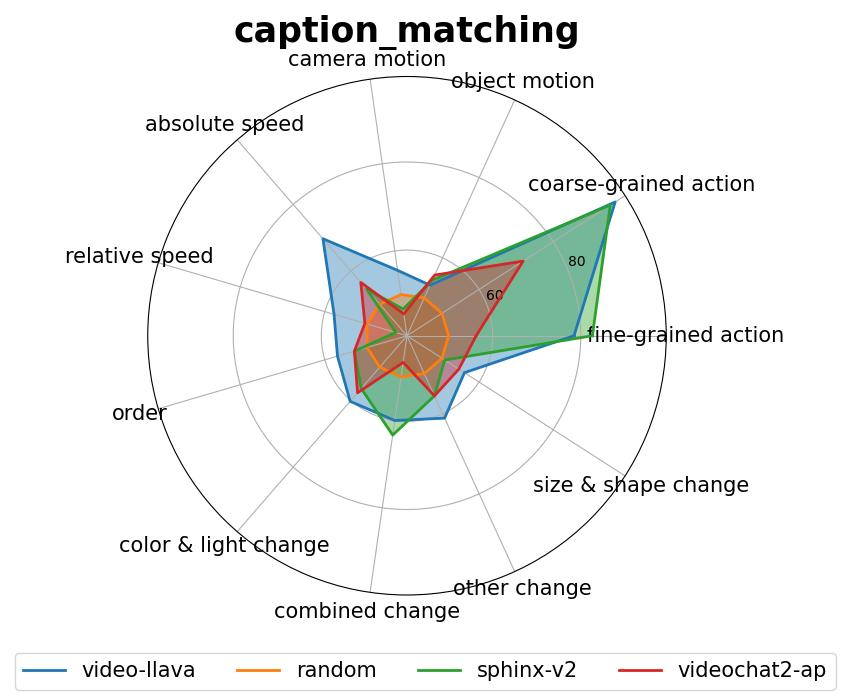

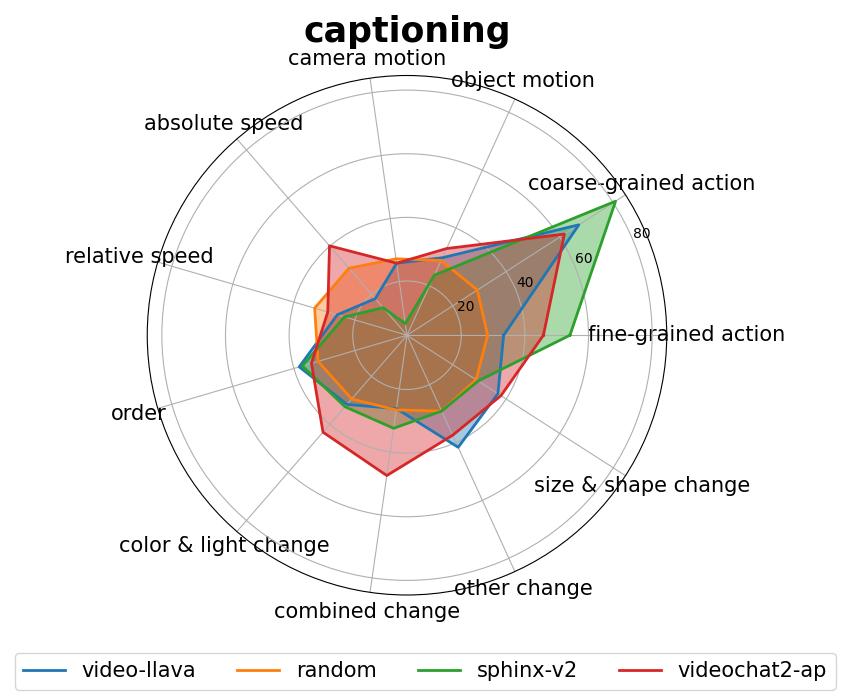

{'fine-grained action': 54.9, 'coarse-grained action': 83.2, 'object motion': 31.7, 'camera motion': 33.7, 'absolute speed': 46.0, 'relative speed': 33.2, 'order': 41.4, 'color & light change': 39.7, 'size & shape change': 40.2, 'combined change': 35.0, 'other change': 55.6}

Match Success Rate=37.9
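To collect these printed numbers into a table across several models, the output can be parsed rather than copied by hand. A minimal sketch, assuming the output format shown above (two dict literals followed by a `Match Success Rate=` line); `parse_eval_output` is a hypothetical helper, not a function in the repository.

```python
import ast
from typing import Dict, List, Optional


def parse_eval_output(lines: List[str]) -> Dict[str, object]:
    """Collect the scores printed by an eval_*.py run.

    Assumes the format shown in the README: a dict of per-aspect scores,
    a dict of per-sub-aspect scores, and a 'Match Success Rate=' line.
    """
    dicts: List[dict] = []
    match_rate: Optional[float] = None
    for raw in lines:
        line = raw.strip()
        if line.startswith("{"):
            # The dicts are printed as Python literals, so literal_eval parses them safely.
            dicts.append(ast.literal_eval(line))
        elif line.startswith("Match Success Rate="):
            match_rate = float(line.split("=", 1)[1])
    return {
        "aspects": dicts[0] if dicts else {},
        "sub_aspects": dicts[1] if len(dicts) > 1 else {},
        "match_success_rate": match_rate,
    }
```

Note that 'avg' in the sample output is not the unweighted mean of the five aspect scores (that would be 44.42, not 44.7), so it is presumably averaged over individual questions; keep the printed value rather than recomputing it from the dict.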

The following figures present the results of Video-LLaVA, VideoChat2, SPHINX-v2 and the random baseline. Results of more Video LLMs and Image LLMs can be found in our paper.

- Upload scripts to collect and process videos.

- Upload the code for automatic evaluation.

- Upload the code for task instruction generation.

@article{liu2024tempcompass,
  title   = {TempCompass: Do Video LLMs Really Understand Videos?},
  author  = {Yuanxin Liu and Shicheng Li and Yi Liu and Yuxiang Wang and Shuhuai Ren and Lei Li and Sishuo Chen and Xu Sun and Lu Hou},
  year    = {2024},
  journal = {arXiv preprint arXiv:2403.00476}
}