🚀 DUET: A Tuning-Free Device-Cloud Collaborative Parameters Generation Framework for Efficient Device Model Generalization (WWW 2023)

PyTorch implementation of DUET: A Tuning-Free Device-Cloud Collaborative Parameters Generation Framework for Efficient Device Model Generalization (Zheqi Lv et al., WWW 2023) for the sequential recommendation task, with DIN, GRU4Rec, and SASRec as base models.

- Citation

- Description

- Abstract

- Introduction

- Method

- Implementation

- Environment

- Folder Structure

- Data Preprocessing

- Train & Inference

Please cite this repository and the paper if you use any of the code or diagrams here. Thanks! 📢📢📢

To cite, please use the following BibTeX entries:

@inproceedings{lv2023duet,

title={DUET: A Tuning-Free Device-Cloud Collaborative Parameters Generation Framework for Efficient Device Model Generalization},

author={Lv, Zheqi and Zhang, Wenqiao and Zhang, Shengyu and Kuang, Kun and Wang, Feng and Wang, Yongwei and Chen, Zhengyu and Shen, Tao and Yang, Hongxia and Ooi, Beng Chin and others},

booktitle={Proceedings of the ACM Web Conference 2023},

pages={3077--3085},

year={2023}

}@article{lv2023duetgithub,

title={DUET: A Tuning-Free Device-Cloud Collaborative Parameters Generation Framework for Efficient Device Model Generalization(Github)},

author={{Lv}, Z.}

howpublished = {https://github.com/HelloZicky/DUET},

year={2023}

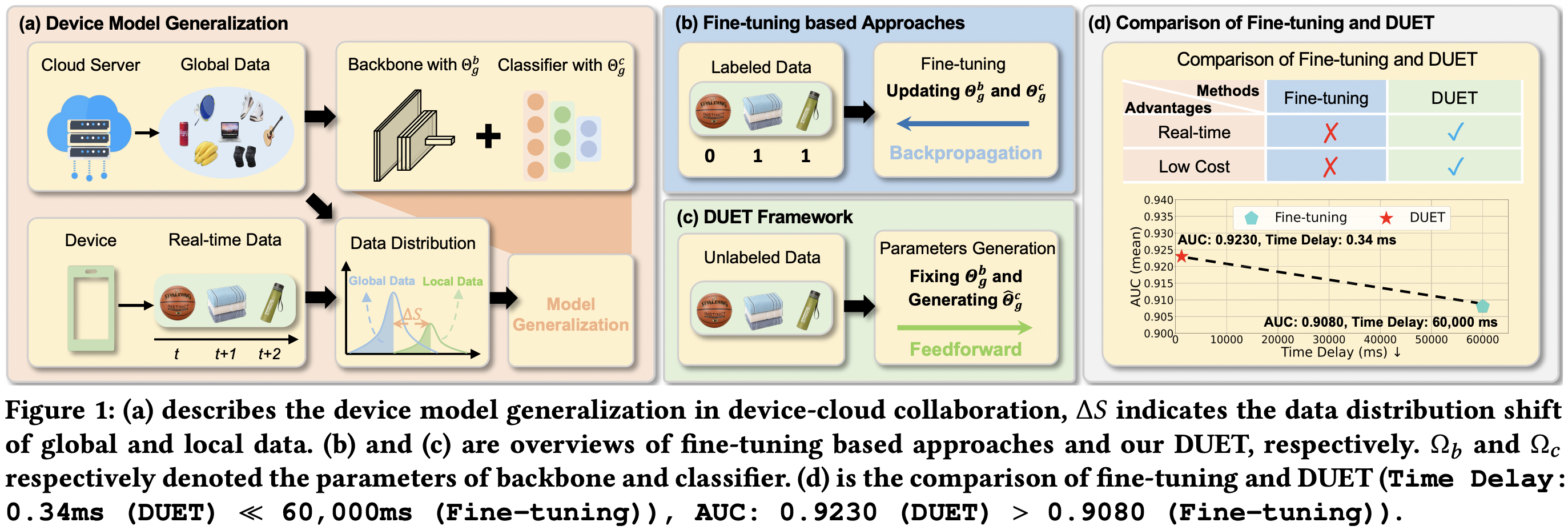

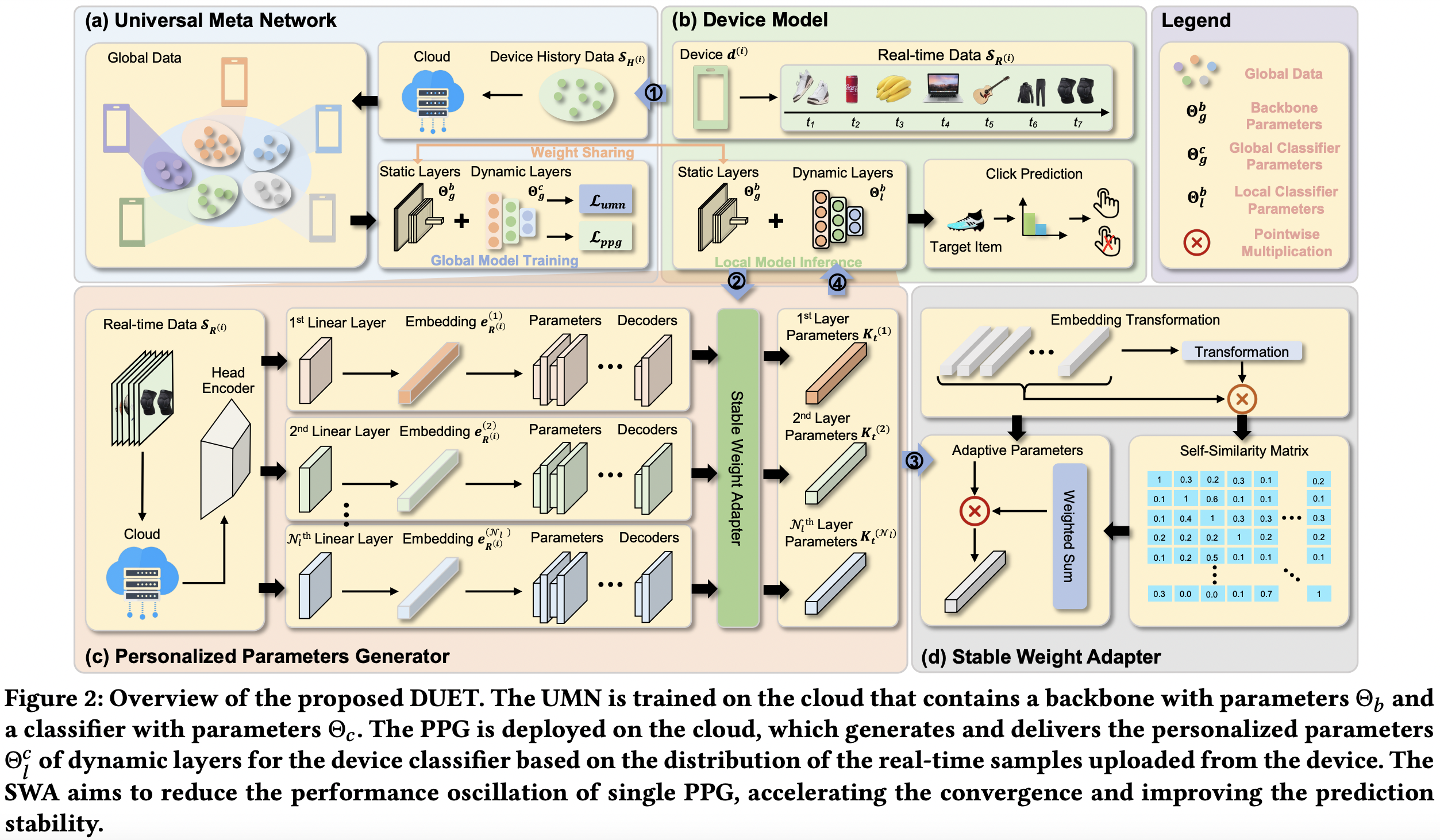

}Device Model Generalization (DMG) is a practical yet underinvestigated research topic for on-device machine learning applications. It aims to improve the generalization ability of pre-trained models when deployed on resource-constrained devices, such as improving the performance of pre-trained cloud models on smart mobiles. While quite a lot of works have investigated the data distribution shift across clouds and devices, most of them focus on model fine-tuning on personalized data for individual devices to facilitate DMG. Despite their promising, these approaches require on-device re-training, which is practically infeasible due to the overfitting problem and high time delay when performing gradient calculation on real-time data. In this paper, we argue that the computational cost brought by fine-tuning can be rather unnecessary. We consequently present a novel perspective to improving DMG without increasing computational cost, i.e., device-specific parameter generation which directly maps data distribution to parameters. Specifically, we propose an efficient Device-cloUd collaborative parametErs generaTion framework (DUET). DUET is deployed on a powerful cloud server that only requires the low cost of forwarding propagation and low time delay of data transmission between the device and the cloud. By doing so, DUET can rehearse the devicespecific model weight realizations conditioned on the personalized real-time data for an individual device. Importantly, our DUET elegantly connects the cloud and device as a “duet” collaboration, frees the DMG from fine-tuning, and enables a faster and more accurate DMG paradigm. We conduct an extensive experimental study of DUET on three public datasets, and the experimental results confirm our framework’s effectiveness and generalisability for different DMG tasks.
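The idea of mapping a device's real-time data directly to model parameters can be illustrated with a small, dependency-free sketch. This is not the repository's model code: `CloudGenerator`, `device_forward`, the mean-pooling summary, and the single random linear map that stands in for a trained generator are all hypothetical simplifications chosen for illustration. It only shows the division of labor described above, where the cloud produces weights with a forward pass and the device uses them without any gradient computation.

```python
import random


def mean_pool(sequence):
    """Average a list of equal-length embedding vectors into one summary vector."""
    count = len(sequence)
    return [sum(column) / count for column in zip(*sequence)]


def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class CloudGenerator:
    """Hypothetical cloud-side generator: behavior summary -> device layer weights."""

    def __init__(self, embed_dim, out_dim, seed=0):
        rng = random.Random(seed)
        self.embed_dim = embed_dim
        self.out_dim = out_dim
        # Assumption: a single random linear map stands in for a trained generator.
        self.hyper = [
            [rng.uniform(-0.1, 0.1) for _ in range(embed_dim)]
            for _ in range(out_dim * embed_dim)
        ]

    def generate(self, sequence):
        """Return an out_dim x embed_dim weight matrix conditioned on `sequence`."""
        summary = mean_pool(sequence)
        flat = matvec(self.hyper, summary)
        return [
            flat[row * self.embed_dim:(row + 1) * self.embed_dim]
            for row in range(self.out_dim)
        ]


def device_forward(weights, features):
    """Device-side step: one forward pass with the received weights, no gradients."""
    return matvec(weights, features)


if __name__ == "__main__":
    generator = CloudGenerator(embed_dim=3, out_dim=2)
    recent_items = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # toy item embeddings
    weights = generator.generate(recent_items)          # computed on the cloud
    print(device_forward(weights, [0.2, 0.4, 0.6]))     # computed on the device
```

Two devices with different recent sequences receive different weight matrices from the same generator, which is the sense in which the parameters are device-specific in this sketch.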

    DUET_Repository
    ├── data
    │   └── {dataset_name}
    │       ├── ood_generate_dataset_tiny_10_30u30i
    │       │   └── generate_dataset.py
    │       └── raw_data_file
    └── code
        └── DUET
            ├── loader
            ├── main
            ├── model
            ├── module
            ├── scripts
            ├── util
            └── README.md

Install the dependencies:

    pip install -r requirement.txt

You can choose to download Preprocessed Data or process it yourself.

    cd data/{dataset_name}/ood_generate_dataset_tiny_10_30u30i
    python generate_dataset.py

Code for parallel training and inference is aggregated in the repository.

    cd code/DUET/scripts
    bash _0_0_train.sh
    bash _0_0_movielens_train.sh

To train and run inference with a specific generalization method (${type}), a specific dataset (${dataset}), and a specific base model (${model}):

    cd code/DUET/scripts
    bash ${type}.sh ${dataset} ${model} ${cuda_num}

For example:

    cd code/DUET/scripts
    bash _0_func_base_train.sh amazon_beauty sasrec 0
    bash _0_func_finetune_train.sh amazon_beauty sasrec 0
    bash _0_func_duet_train.sh amazon_beauty sasrec 0
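When comparing several methods, datasets, or base models, it can help to generate these command lines programmatically instead of typing them by hand. The sketch below is a hypothetical helper, not part of the repository: `build_command` and `build_sweep` are illustrative names, and the script list is copied from the examples above. It only builds and prints the commands following the `bash ${type}.sh ${dataset} ${model} ${cuda_num}` pattern; it does not launch anything.

```python
import itertools
import shlex

# Script names copied from the examples above; extend the list as needed.
SCRIPTS = [
    "_0_func_base_train.sh",
    "_0_func_finetune_train.sh",
    "_0_func_duet_train.sh",
]


def build_command(script, dataset, model, cuda_num):
    """Return one command as an argument list: bash <script> <dataset> <model> <cuda_num>."""
    return ["bash", script, dataset, model, str(cuda_num)]


def build_sweep(datasets, models, cuda_num=0, scripts=SCRIPTS):
    """Return the commands for every (dataset, model, script) combination."""
    return [
        build_command(script, dataset, model, cuda_num)
        for dataset, model, script in itertools.product(datasets, models, scripts)
    ]


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for command in build_sweep(["amazon_beauty"], ["sasrec"]):
        print(shlex.join(command))
```

To actually run a command, each argument list could be passed to `subprocess.run(command, cwd="code/DUET/scripts", check=True)`, assuming the working directory layout shown in the folder structure.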