Categorical Inference Poisoning: Verifiable Defense Against Black-Box DNN Model Stealing Without Constraining Surrogate Data and Query Times

PyTorch implementation of the categorical inference poisoning (CIP) framework proposed in the paper.

"Victim model" is equivalent to "to-be-protected model" or "original model" that has been trained using the original training data for the targeted functionality (i.e., classification tasks in this work).

cd model_train

python main.py

The dataset should be prepared with the following structure:

dataset
|----MNIST
|    |----train
|    |    |----1.png
|    |    |----2.png
|    |    |----...
|    |----test
|----MNIST_half
|    |----train
|    |    |----1.png
|    |    |----2.png
|    |    |----...
|    |----test
|----MNIST_surrogate
|    |----train
|    |    |----1.png
|    |    |----2.png
|    |    |----...
|    |----test
|----Cifar100
|...
|----FOOD101

MNIST_half contains half of the full MNIST dataset (each category in MNIST_half has half as many images as in MNIST) and is used to test IDA and DQA. Note that the attack type must be set before testing:

parser.add_argument('--attack',type=str,default='Datafree', choices=['Knockoff','Datafree','IDA'], help='choosing the attack forms')

The final .pt file will be saved in:

trained
|----Datafree
|    |----without_fine-tuning.pt
|----Knockoff
|----IDA

cd ood_detection

python detection_train.py

Before fine-tuning, prepare the TinyImages dataset (tiny_images.bin) and the OOD test datasets:

dataset
|----Open-set test
|    |----DTD
|    |    |----1.png
|    |    |...
|    |----Place365
|----tiny_images.bin

The tiny_images paths are set on lines 13 and 28 of CIP/Utils/tinyimages_80mn_loader.py.
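To make the expected contents of tiny_images.bin concrete, here is a minimal sketch of reading one image from it. It assumes the layout commonly used by 80M TinyImages loaders: 32x32 RGB images stored back to back as 3072 raw uint8 bytes each, in column-major order over (row, column, channel). The helper read_tiny_image is hypothetical and is not the code in CIP/Utils/tinyimages_80mn_loader.py; check that loader before relying on this layout.

```python
# Assumption: 80M TinyImages layout of 32x32x3 uint8 images, 3072 bytes each,
# stored column-major (row index varies fastest, then column, then channel).
IMG_SIDE = 32
CHANNELS = 3
IMG_BYTES = IMG_SIDE * IMG_SIDE * CHANNELS  # 3072


def read_tiny_image(bin_path, idx):
    """Hypothetical helper: return image `idx` as a nested list [row][col][channel]."""
    with open(bin_path, "rb") as f:
        f.seek(idx * IMG_BYTES)  # jump straight to the idx-th record
        raw = f.read(IMG_BYTES)
    if len(raw) != IMG_BYTES:
        raise IndexError(f"image {idx} is beyond the end of {bin_path}")
    # Column-major flat index: row + 32 * col + 32 * 32 * channel.
    return [
        [
            [raw[r + IMG_SIDE * c + IMG_SIDE * IMG_SIDE * ch] for ch in range(CHANNELS)]
            for c in range(IMG_SIDE)
        ]
        for r in range(IMG_SIDE)
    ]
```

Seeking to idx * 3072 avoids loading the whole (very large) file into memory, which is why random access by index is practical for this dataset.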

Note that OOD datasets such as DTD are only used to evaluate the OOD detector; any other OOD dataset, even noise images, can be used instead. The final .pt file will be saved in:

trained
|----Datafree
|    |----after_fine-tuning.pt
|----Knockoff
|----IDA

python Detection_test.py

An Excel file recording the energy threshold values will be generated automatically:

|   | dataset | FPR_energy | open-set_energy |
|---|---|---|---|
| 0 | Mnist | E1 | E2 |
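The thresholds in this file are energy values. As a sketch of how such a threshold can be derived, assume the standard energy score from energy-based OOD detection, E(x) = -T * log(sum_i exp(f_i(x) / T)), where lower energy indicates an in-distribution input, and a threshold chosen so that 95% of in-distribution samples are accepted (a common convention when reporting FPR). The repository's exact rule may differ, and energy_score, threshold_at_tpr, and is_ood are hypothetical names.

```python
import math


def energy_score(logits, temperature=1.0):
    """E(x) = -T * logsumexp(f(x) / T); lower energy suggests in-distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max so exp() cannot overflow
    lse = m + math.log(sum(math.exp(s - m) for s in scaled))
    return -temperature * lse


def threshold_at_tpr(in_dist_energies, tpr=0.95):
    """Smallest threshold that accepts at least `tpr` of in-distribution samples."""
    ordered = sorted(in_dist_energies)
    k = max(1, math.ceil(tpr * len(ordered)))
    return ordered[k - 1]


def is_ood(logits, threshold, temperature=1.0):
    # Assumption: queries whose energy exceeds the threshold are treated as OOD.
    return energy_score(logits, temperature) > threshold
```

In this sketch the threshold would be computed once on held-out in-distribution data (e.g., MNIST test images) and then applied to every incoming query.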

cd Knockoff

python main.py

Data preparation for KnockoffNets:

dataset
|----Imagenet_100K
|    |----1.png
|    |----2.png
|    |...
|    |----100000.png

To test KnockoffNets, 100K ImageNet images need to be collected.

Note that for each attack, you should specify whether to apply poisoning and which poisoning method to use:

parser.add_argument('--poison', type=bool, default=True, help='whether poisoning')

parser.add_argument('--method', type=str, default='CIP',choices=['CIP','DP','DAWN'])

cd DF

python main.py

Before testing, set the path of the victim model's .pt file:

parser.add_argument('--pt_file',type=str,default='../Victim_Model_Train/Trained/Datafree/Mnist_resnet18_epoch_64_accuracy_99.61%.pt',help='setting the Victim model')

Note that when testing DP and DAWN, the victim model without OE (outlier exposure) fine-tuning should be used, whereas when testing CIP, the model after OE fine-tuning should be used.
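Two details of the configuration above can be sketched in plain Python. First, argparse with type=bool converts any non-empty string to True, so running with --poison False on the command line would still enable poisoning; a small string-to-bool converter avoids this. Second, the rule that CIP uses the OE fine-tuned model while DP and DAWN use the model without fine-tuning can be encoded in one place. The helpers str2bool, build_parser, and victim_checkpoint are hypothetical, and the checkpoint file names are taken from the directory trees in this README rather than from the repository's code.

```python
import argparse
from pathlib import Path


def str2bool(text):
    """Parse common true/false spellings (type=bool would treat "False" as True)."""
    value = text.strip().lower()
    if value in ("1", "true", "yes", "y"):
        return True
    if value in ("0", "false", "no", "n"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {text!r}")


def build_parser():
    # Hypothetical parser mirroring the flags shown in this README.
    parser = argparse.ArgumentParser(description="defense test configuration (sketch)")
    parser.add_argument("--poison", type=str2bool, default=True, help="whether poisoning")
    parser.add_argument("--method", type=str, default="CIP", choices=["CIP", "DP", "DAWN"])
    parser.add_argument("--attack", type=str, default="Datafree",
                        choices=["Knockoff", "Datafree", "IDA"])
    return parser


def victim_checkpoint(method, attack, root="trained"):
    # Assumption: file names follow the trees above. CIP needs the OE fine-tuned
    # model; DP and DAWN use the model without fine-tuning.
    name = "after_fine-tuning.pt" if method == "CIP" else "without_fine-tuning.pt"
    return Path(root) / attack / name


if __name__ == "__main__":
    args = build_parser().parse_args(["--method", "DP", "--poison", "false"])
    print(args.poison, victim_checkpoint(args.method, args.attack))
```

An unrecognized value such as --poison maybe makes argparse report an error and exit instead of silently enabling poisoning.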

cd IDA

python main.py

Data preparation for IDA:

dataset
|----Cifar10_half
|    |----1.png
|    |----2.png
|    |...
|    |----100000.png
|----Cifar100_half
|----FOOD101_half

The Cifar10_half folder used by IDA contains the images complementary to those in the corresponding folder of Victim_Model_Train.
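Both MNIST_half (half of each category) and the complementary Cifar10_half used by IDA amount to a per-class split of a dataset into two disjoint halves. A minimal sketch, assuming the labels are available as a Python list; how the repository actually selects each half is not specified here, and stratified_half_split is a hypothetical helper.

```python
import random
from collections import defaultdict


def stratified_half_split(labels, seed=0):
    """Split sample indices so each class contributes half of its samples.

    Returns (half, complement): disjoint sorted index lists that together cover
    every sample. For a class with an odd count, the extra sample goes to the
    complement.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    half, complement = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)
        cut = len(indices) // 2
        half.extend(indices[:cut])
        complement.extend(indices[cut:])
    return sorted(half), sorted(complement)


if __name__ == "__main__":
    toy_labels = [0, 0, 0, 1, 1, 2, 2, 2, 2]
    half, rest = stratified_half_split(toy_labels)
    print(len(half), len(rest))  # 4 5
```

In this sketch one half would train the victim model and the complement would serve as the attacker's data, so the two never overlap.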

cd DQA

python main.py

Because DQA and IDA use exactly the same datasets, no separate dataset needs to be set up for DQA. However, the noise images must be prepared before testing:

DQA
|----Noise
|    |----N1.png
|    |----N2.png
|    |----N3.png
|    |...
|    |----N60000.png
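This README does not say what kind of noise the DQA images contain. A minimal sketch for generating them, assuming uniform random grayscale noise at MNIST's 28x28 resolution and the N1.png ... N60000.png naming shown above; it uses only the standard library (a small PNG encoder built on zlib and struct). make_noise_images is a hypothetical helper, and victims trained on CIFAR or FOOD101 would need RGB images of the matching size instead.

```python
import random
import struct
import zlib
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def _chunk(tag, data):
    # PNG chunk: 4-byte length, 4-byte type, payload, CRC-32 over type + payload.
    body = tag + data
    return struct.pack(">I", len(data)) + body + struct.pack(">I", zlib.crc32(body) & 0xFFFFFFFF)


def write_gray_png(path, pixels, width, height):
    """Write an 8-bit grayscale PNG from a flat, row-major list of 0..255 values."""
    # Each scanline is prefixed with filter type 0 (no filtering).
    raw = b"".join(
        b"\x00" + bytes(pixels[y * width:(y + 1) * width]) for y in range(height)
    )
    header = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)  # depth 8, color type 0 = gray
    Path(path).write_bytes(
        PNG_SIGNATURE
        + _chunk(b"IHDR", header)
        + _chunk(b"IDAT", zlib.compress(raw))
        + _chunk(b"IEND", b"")
    )


def make_noise_images(out_dir, count, side=28, seed=0):
    """Assumption: uniform noise. Writes N1.png ... N{count}.png into out_dir."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    rng = random.Random(seed)
    for i in range(1, count + 1):
        pixels = [rng.randrange(256) for _ in range(side * side)]
        write_gray_png(out / f"N{i}.png", pixels, side, side)
```

For example, make_noise_images("DQA/Noise", 60000) would populate the folder above; pure Python is slow at this scale, so a NumPy-based generator may be preferable in practice.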

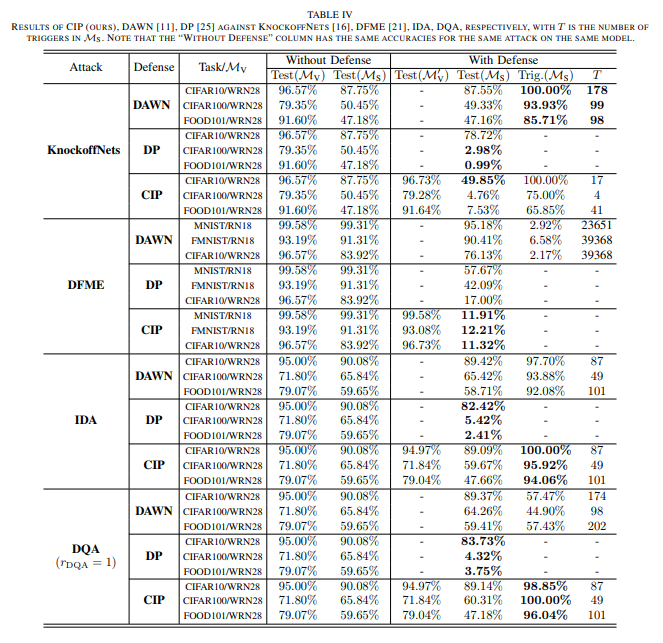

Experimental results of CIP, DAWN, and DP against KnockoffNets, DFME, IDA, and DQA:

H. Zhang, G. Hua*, X. Wang, H. Jiang, and W. Yang, “Categorical inference poisoning: Verifiable defense against black-box DNN model stealing without constraining surrogate data and query times,” IEEE Transactions on Information Forensics and Security, 2023.