Website | [Paper]

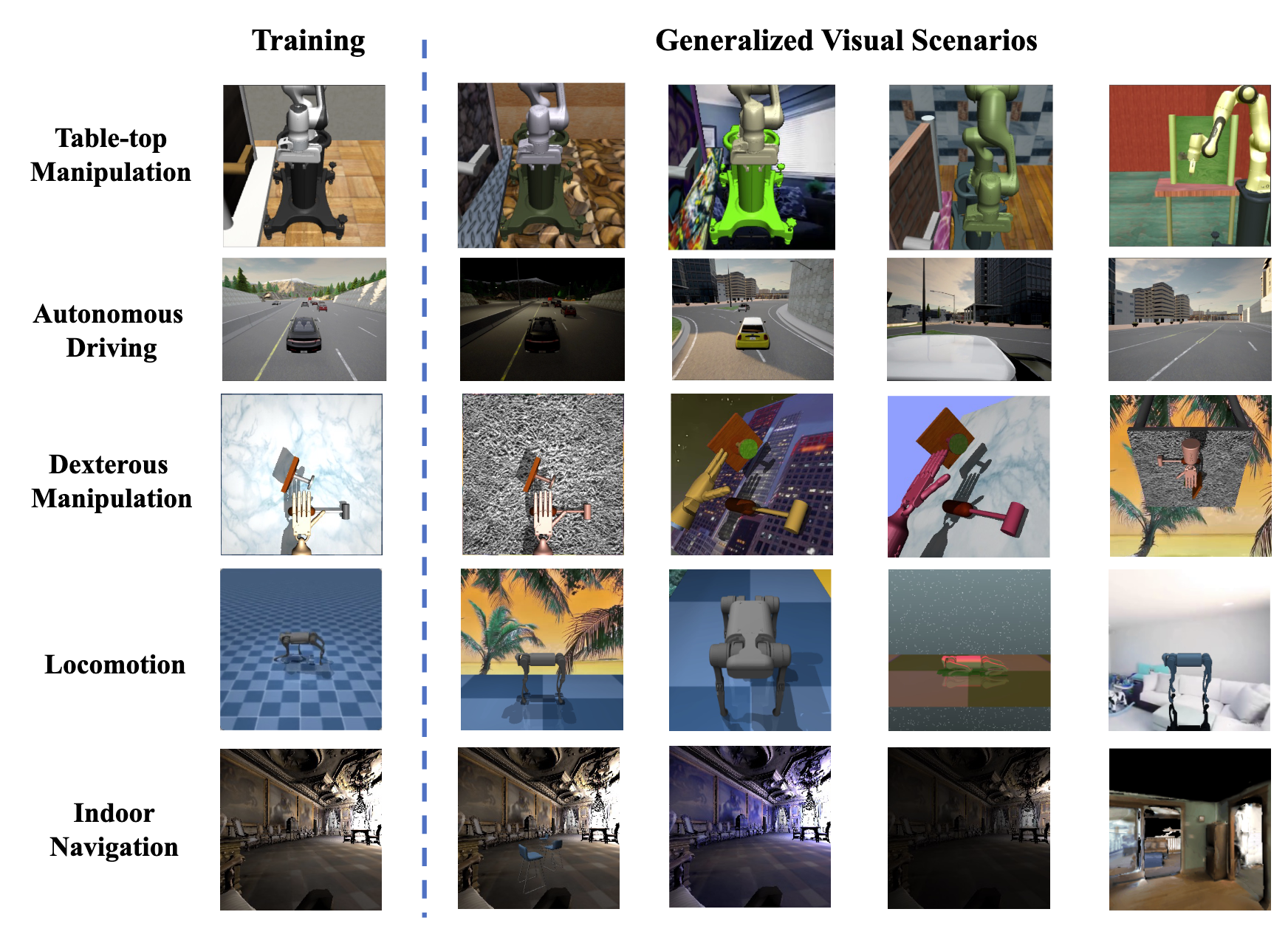

We have released RL-ViGen, a Reinforcement Learning Benchmark for Visual Generalization, designed specifically to tackle the visual generalization problem. The benchmark comprises five major task categories: manipulation, navigation, autonomous driving, locomotion, and dexterous manipulation. It also covers five types of generalization: visual appearance, camera view, lighting changes, scene structure, and cross-embodiment. Finally, RL-ViGen integrates seven widely used visual RL and generalization algorithms, which makes it broadly applicable in the field.

To install our benchmark, please follow the instructions in INSTALLATION.md.

The repository is organized as follows:

- `algos`: implementations of the different algorithms.
- `cfgs`: hyper-parameters for the different algorithms and tasks.
- `envs`: the RL-ViGen benchmark environments. Each sub-folder also contains its own `README.md` introducing the environment.
- `setup`: installation scripts for the conda environments.
- `third_party`: submodules from third parties. We do not change the code in this folder frequently.
- `wrappers`: wrappers for each environment.
- `scripts`: scripts that facilitate training and evaluation.

The algorithms use the Places dataset for data augmentation. Download it by running:

```bash
wget http://data.csail.mit.edu/places/places365/places365standard_easyformat.tar
```

After downloading and extracting the data, add your dataset directory to the datasets list in cfgs/aug_config.cfg.
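The extraction step can be wrapped in a small shell function that also prints the absolute path to add to the config. This is a minimal sketch: the function name `extract_places` and the destination directory are hypothetical, and which exact directory (the destination itself or a sub-folder created by the archive) `cfgs/aug_config.cfg` expects is an assumption you should check against the config.

```bash
# Hypothetical helper (not part of RL-ViGen): extract a downloaded Places365
# archive into a destination directory and print that directory's absolute
# path, i.e. a candidate entry for the `datasets` list in cfgs/aug_config.cfg.
extract_places() {
  local tarball="$1" dest="$2"
  if [ ! -f "$tarball" ]; then
    echo "archive not found: $tarball" >&2
    return 1
  fi
  mkdir -p "$dest"
  tar -xf "$tarball" -C "$dest"
  # Resolve to an absolute path inside a subshell so the caller's cwd is unchanged.
  (cd "$dest" && pwd)
}
```

For example, `extract_places places365standard_easyformat.tar "$HOME/datasets"` extracts the archive into `$HOME/datasets` and prints its absolute path.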

To train an agent, run:

```bash
cd RL-ViGen/
bash scripts/train.sh
```

For CARLA, use the dedicated training script:

```bash
cd RL-ViGen/
bash scripts/carlatrain.sh
```

For evaluation, first set `model_dir` to the folder containing your saved model. For Robosuite, Habitat, and CARLA, run the evaluation code as follows:

```bash
cd RL-ViGen/
bash scripts/eval.sh
```

To run a different evaluation, change `env`, `task_name`, and `test_agent` in `eval.sh`.
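If you are unsure which folder to use for `model_dir`, a small helper can pick the most recently modified run directory under a given root. This is a hypothetical sketch: the function name `latest_run_dir` and the root directory you pass it are assumptions, since where trained models are saved depends on your configuration.

```bash
# Hypothetical helper: print the most recently modified subdirectory of "$1",
# e.g. as a candidate value for model_dir. Returns 1 if there is none.
latest_run_dir() {
  local root="$1" newest="" d
  for d in "$root"/*/; do
    [ -d "$d" ] || continue            # skip the literal pattern when nothing matches
    if [ -z "$newest" ] || [ "$d" -nt "$newest" ]; then
      newest="$d"
    fi
  done
  [ -n "$newest" ] || return 1
  printf '%s\n' "${newest%/}"          # drop the trailing slash added by the glob
}
```

The loop compares modification times with the shell's `-nt` test instead of parsing `ls` output, so directory names containing spaces are handled correctly.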

For DM-Control, run the evaluation code as follows:

```bash
cd RL-ViGen/
bash scripts/locoeval.sh
```
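The training and evaluation scripts described above can be summarized as a lookup from stage and environment to script. This is an illustrative sketch only: the function name and the short environment labels (`robosuite`, `habitat`, `carla`, `dmc`) are hypothetical and are not arguments accepted by the RL-ViGen scripts, and mapping every non-CARLA environment to `train.sh` is an assumption based on CARLA being the only environment with a dedicated training script.

```bash
# Hypothetical lookup: print the script under scripts/ for a stage
# (train or eval) and an environment label, following this README.
vigen_script() {
  local stage="$1" env="$2"
  case "$stage:$env" in
    train:carla)                              echo "scripts/carlatrain.sh" ;;
    train:robosuite|train:habitat|train:dmc)  echo "scripts/train.sh" ;;
    eval:robosuite|eval:habitat|eval:carla)   echo "scripts/eval.sh" ;;
    eval:dmc)                                 echo "scripts/locoeval.sh" ;;
    *) echo "unknown stage/env: $stage/$env" >&2; return 1 ;;
  esac
}
```

For example, from the repository root, `script="$(vigen_script eval dmc)" && bash "$script"` runs the DM-Control evaluation.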

For more details, please refer to the `README.md` file of each environment in the `envs/` directory.
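To see which environment READMEs are available, you can list them from the repository root. A minimal sketch, assuming each README sits directly inside a sub-folder of `envs/` as described in the folder overview; the function name `list_env_readmes` is hypothetical.

```bash
# Hypothetical helper: print every README.md located directly inside a
# sub-folder of envs/ (or of the directory given as $1), sorted by path.
list_env_readmes() {
  local root="${1:-envs}"
  find "$root" -mindepth 2 -maxdepth 2 -name README.md | sort
}
```

Run it after `cd RL-ViGen/`; `-mindepth 2` skips a README placed at the top of `envs/` itself.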

All Adroit configurations are on the `ViGen-adroit` branch.
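Switching an existing clone to that branch could look like the following. This is a sketch: the function name is hypothetical, and the submodule update is included on the assumption that the `third_party` submodules may differ between branches.

```bash
# Hypothetical helper: switch an RL-ViGen clone (default: current directory)
# to the ViGen-adroit branch, then initialize/update its submodules.
use_adroit_branch() {
  local repo="${1:-.}"
  git -C "$repo" checkout ViGen-adroit &&
    git -C "$repo" submodule update --init --recursive
}
```

If the branch exists only on the remote, `git checkout ViGen-adroit` creates a local tracking branch for it.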

- [07-20-2023] Sped up DM-Control in the video-background setting.

- [06-01-2023] Initial code release.

Our training code is based on DrQ-v2. We also thank the authors of the VRL3, DMC-GB, SECANT, and kyoran codebases, and Siao Liu for carefully checking the code.

Most of DrQ-v2, DMC-GB, and VRL3 is licensed under the MIT license. Habitat Lab, dmc2gym, and mujoco-py are also licensed under the MIT license. However, portions of the project are available under separate license terms: DeepMind, mj_envs, and mjrl are licensed under the Apache 2.0 license. Gibson-based task datasets, the code for generating such datasets, and trained models are distributed with the Gibson Terms of Use and under the CC BY-NC-SA 3.0 US license. CARLA-specific assets are distributed under the CC-BY license. The ad-rss-lib library, compiled and linked by the RSS Integration build variant, introduces the LGPL-2.1-only license. Unreal Engine 4 follows its own license terms.