This repository contains the code and data for the paper "Leveraging Large Language Models with Multiple Loss Learners for Few-Shot Author Profiling" by Hamed Babaei Giglou, Mostafa Rahgouy, Jennifer D’Souza, Milad Molazadeh Oskuee, Hadi Bayrami Asl Tekanlou, and Cheryl D Seals. The paper was presented at the 14th International Conference of the CLEF Association (CLEF 2023).

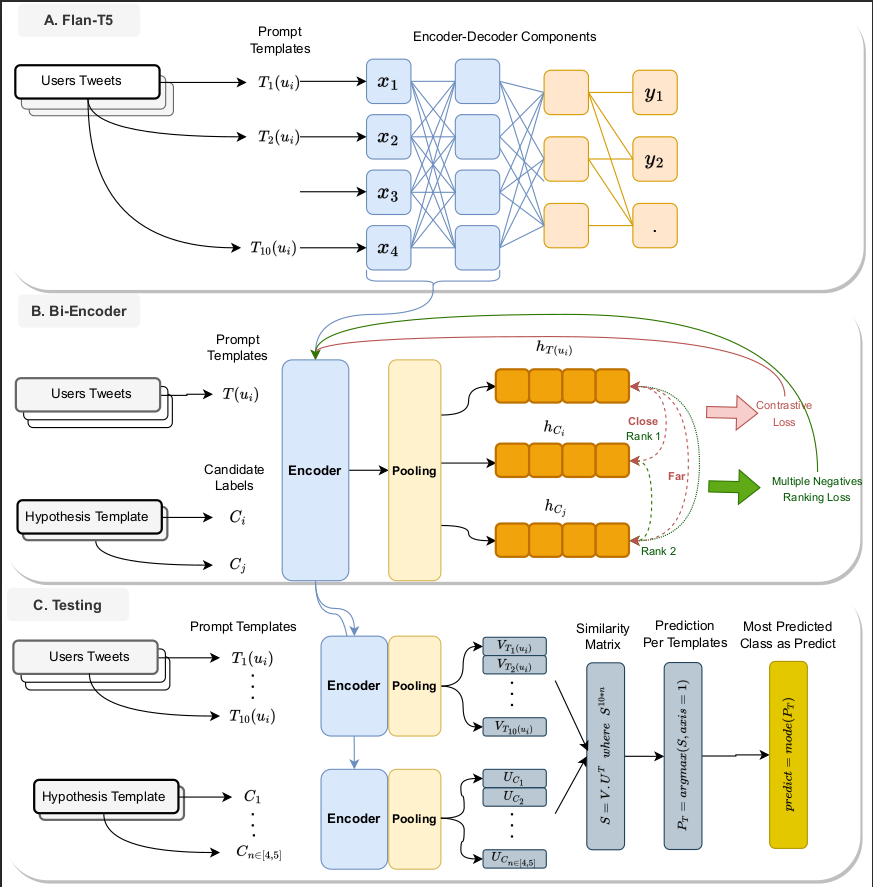

Author profiling (AP) studies the characteristics of authors by analyzing how language is shared among people. Studying these attributes is sometimes challenging because annotated data is scarce, which makes it important to approach AP from a low-resource perspective. This year, AP@PAN 2023 focused on profiling cryptocurrency influencers with few-shot learning, in order to analyze how effectively advanced approaches handle new tasks in a low-resource setting.

- `dataset/`: Contains the datasets used in the paper.

- `assets/`: Contains the model checkpoints used in the paper.

- `visualization/`: Contains the code for visualizing the experiments in the paper.

- `results/`: Contains the results of the experiments.

- Python 3.9 or higher

- PyTorch 1.9.x or higher

- Transformers 4.3.x or higher
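The minimum versions listed above can be checked before running anything. The snippet below is a minimal sketch, not part of the repository: the helper names (`version_tuple`, `meets_minimum`, `check_environment`) are hypothetical, and it assumes the packages are installed under the distribution names `torch` and `transformers`.

```python
import re
import sys
from importlib import metadata

# Minimums taken from the requirements list above.
# Assumption: the packages are installed as "torch" and "transformers".
MINIMUMS = {"torch": "1.9", "transformers": "4.3"}


def version_tuple(version):
    """Turn '1.9.0+cu111' into (1, 9, 0), ignoring non-numeric suffixes."""
    parts = []
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True if the installed version is at least the minimum."""
    return version_tuple(installed) >= version_tuple(minimum)


def check_environment():
    """Report, per requirement, whether the current environment satisfies it."""
    report = {"python": sys.version_info >= (3, 9)}
    for name, minimum in MINIMUMS.items():
        try:
            report[name] = meets_minimum(metadata.version(name), minimum)
        except metadata.PackageNotFoundError:
            # Package is not installed at all.
            report[name] = False
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'missing or too old'}")
```

A requirement reported as missing or too old can usually be resolved by reinstalling from `requirements.txt`.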

- Clone the repository:

git clone https://github.com/HamedBabaei/author-profiling-pan2023

cd author-profiling-pan2023

- Install the required packages:

pip install -r requirements.txt

- Run the experiments:

- Inference fsl

bash inference_fsl.sh

- Inference fsl (bi-encoder)

bash inference_fsl_biencoder.sh

- Baseline (random)

bash random_baseline.sh

- Baseline (Zero Shot)

bash zero_shot_baseline.sh

- Train & Test SBERT

bash train_test_runner_sbert.sh

- Train & Test Flan-T5

bash train_test_runner_flan_t5.sh
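To run several of the experiments above in one go, the shell scripts can be driven from a small Python wrapper. This is a sketch under assumptions rather than something the repository provides: the script file names are copied from the steps above, while the short labels in `SCRIPTS` and the functions `build_commands` and `run_experiments` are hypothetical. It also assumes the scripts live in the repository root.

```python
import subprocess

# Script names are copied from the steps above; the keys are hypothetical labels.
SCRIPTS = {
    "fsl": "inference_fsl.sh",
    "fsl_biencoder": "inference_fsl_biencoder.sh",
    "random": "random_baseline.sh",
    "zero_shot": "zero_shot_baseline.sh",
    "sbert": "train_test_runner_sbert.sh",
    "flan_t5": "train_test_runner_flan_t5.sh",
}


def build_commands(names):
    """Map experiment labels to the bash commands that would run them."""
    commands = []
    for name in names:
        if name not in SCRIPTS:
            raise KeyError(f"unknown experiment: {name}")
        commands.append(["bash", SCRIPTS[name]])
    return commands


def run_experiments(names, repo_root=".", dry_run=True):
    """Run the selected scripts in order; with dry_run, only print them."""
    commands = build_commands(names)
    for command in commands:
        if dry_run:
            print(" ".join(command))
        else:
            # check=True stops at the first script that exits with an error.
            subprocess.run(command, cwd=repo_root, check=True)
    return commands


if __name__ == "__main__":
    # Print the two baseline commands without executing them.
    run_experiments(["random", "zero_shot"], dry_run=True)
```

Keeping `dry_run=True` as the default makes it possible to inspect the command sequence before starting the longer training runs.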

If you use this code in your research, please cite the following paper:

@InProceedings{giglou:2023,

author = {Hamed Babaei Giglou and Mostafa Rahgouy and Jennifer D’Souza and Milad Molazadeh Oskuee and Hadi Bayrami Asl Tekanlou and Cheryl D Seals},

booktitle = {{CLEF 2023 Labs and Workshops, Notebook Papers}},

month = sep,

publisher = {CEUR-WS.org},

title = {{Leveraging Large Language Models with Multiple Loss Learners for Few-Shot Author Profiling}},

year = 2023

}