
This repository contains code supporting the CVPR 2024 paper *Parameter Efficient Self-Supervised Geospatial Domain Adaptation*.

Authors: Linus Scheibenreif, Michael Mommert, Damian Borth

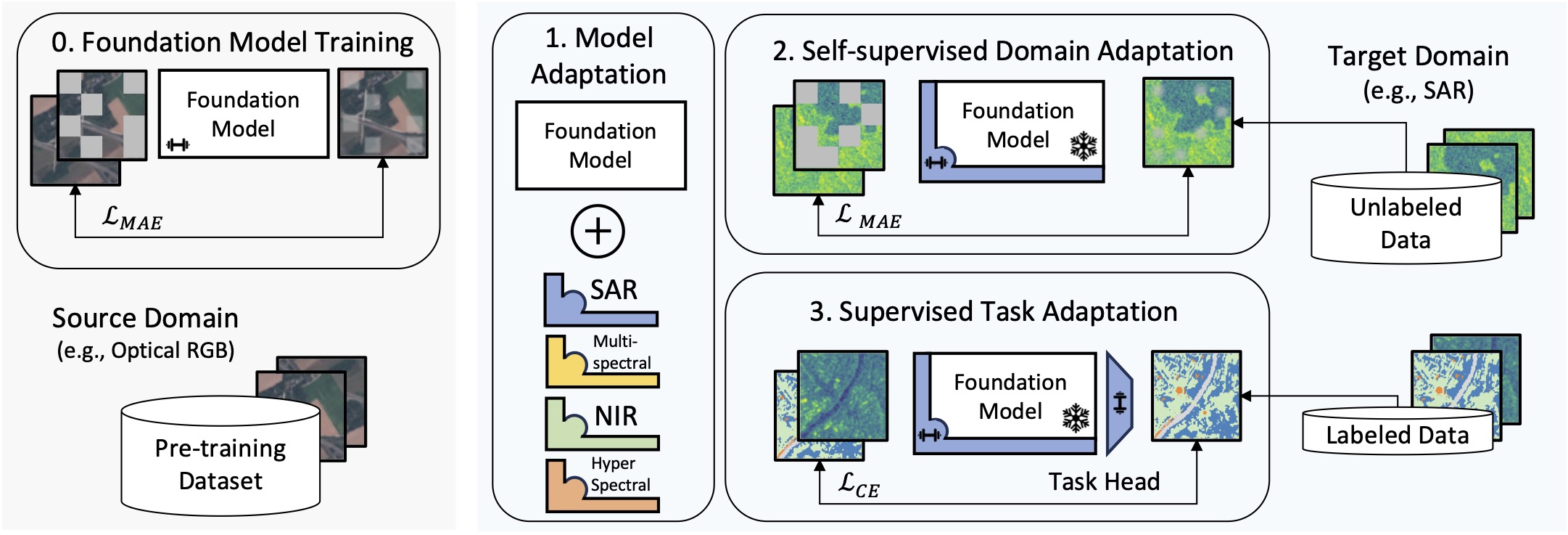

As large-scale foundation models become publicly available for different domains, efficiently adapting them to individual downstream applications and additional data modalities has turned into a central challenge. For example, foundation models for geospatial and satellite remote sensing applications are commonly trained on large optical RGB or multi-spectral datasets, although data from a wide variety of heterogeneous sensors are available in the remote sensing domain. This leads to significant discrepancies between pre-training and downstream target data distributions for many important applications. Fine-tuning large foundation models to bridge that gap incurs high computational cost and can be infeasible when target datasets are small. In this paper, we address the question of how large pre-trained foundational transformer models can be efficiently adapted to downstream remote sensing tasks involving different data modalities or limited dataset size. We present a self-supervised adaptation method that boosts the downstream linear evaluation accuracy of different foundation models by 4-6% (absolute) across 8 remote sensing datasets, while outperforming full fine-tuning when training only 1-2% of the model parameters. Our method significantly improves label efficiency and increases few-shot accuracy by 6-10% on different datasets.

This codebase provides scripts to add SLR adapters to existing pre-trained visual foundation models before fine-tuning them on different downstream tasks. To get started, make sure that the trained weights for a visual foundation model are available in the `checkpoints/` directory, and download a dataset for training. See below for the models and datasets used in the paper:
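This README does not spell out how the SLR adapters are built. As a rough illustration of why adapter-based tuning trains only a small share of the parameters, here is a minimal NumPy sketch of a generic low-rank adapter (a LoRA-style update `W x + scale * B A x`) wrapped around a frozen linear layer. It is an assumption-laden sketch and not the repository's SLR implementation: the class name `LowRankAdapterLinear`, the `rank` and `scale` arguments, and the hidden size of 768 are all hypothetical choices for illustration.

```python
import numpy as np


class LowRankAdapterLinear:
    """Frozen linear layer plus a trainable low-rank update.

    Hypothetical, generic LoRA-style sketch; NOT the repository's SLR adapter.
    """

    def __init__(self, weight: np.ndarray, bias: np.ndarray, rank: int = 4,
                 scale: float = 1.0, seed: int = 0):
        out_features, in_features = weight.shape
        rng = np.random.default_rng(seed)
        # Pre-trained parameters: kept frozen during adaptation.
        self.weight = weight
        self.bias = bias
        # Trainable low-rank factors. B starts at zero, so the adapted layer
        # initially reproduces the pre-trained layer exactly.
        self.A = rng.normal(0.0, 0.02, size=(rank, in_features))
        self.B = np.zeros((out_features, rank))
        self.scale = scale

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x has shape (batch, in_features); output is (batch, out_features).
        frozen = x @ self.weight.T + self.bias
        update = (x @ self.A.T) @ self.B.T
        return frozen + self.scale * update

    def num_trainable(self) -> int:
        return self.A.size + self.B.size

    def num_frozen(self) -> int:
        return self.weight.size + self.bias.size


if __name__ == "__main__":
    d = 768  # assumed hidden size of a ViT-Base style layer
    rng = np.random.default_rng(1)
    layer = LowRankAdapterLinear(rng.normal(size=(d, d)), np.zeros(d), rank=8)
    x = rng.normal(size=(2, d))
    # With B = 0 the output equals the frozen layer's output.
    assert np.allclose(layer(x), x @ layer.weight.T + layer.bias)
    frac = layer.num_trainable() / (layer.num_trainable() + layer.num_frozen())
    print(f"trainable fraction: {frac:.2%}")  # prints: trainable fraction: 2.04%
```

For a single 768 x 768 layer with rank 8, the adapter adds 12,288 trainable values next to 590,592 frozen ones. Only the small factors `A` and `B` would receive gradient updates, which is what keeps this kind of adaptation cheap compared with full fine-tuning.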

- MAE
- SatMAE
- Scale-MAE
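Before training, it can help to confirm that a checkpoint for each model is actually present in `checkpoints/`. The sketch below does this with the standard library only. The checkpoint file names in `EXPECTED_CHECKPOINTS` and the helper `missing_checkpoints` are hypothetical; replace the names with the files you actually downloaded.

```python
from pathlib import Path

# Hypothetical file names: replace them with the actual checkpoint files
# you downloaded for each foundation model.
EXPECTED_CHECKPOINTS = {
    "MAE": "mae_pretrain.pth",
    "SatMAE": "satmae_pretrain.pth",
    "Scale-MAE": "scalemae_pretrain.pth",
}


def missing_checkpoints(checkpoint_dir="checkpoints", expected=EXPECTED_CHECKPOINTS):
    """Return the models whose checkpoint file is not found in checkpoint_dir."""
    root = Path(checkpoint_dir)
    return [model for model, filename in expected.items()
            if not (root / filename).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoints for:", ", ".join(missing))
    else:
        print("All expected checkpoints found.")
```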

Where possible, use the torchgeo implementations of the remote sensing datasets. Please download the other datasets from these locations:

Dependencies:

- torchgeo==0.5.0
- timm==0.6.12
- torch==2.0.1
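The package versions pinned above can be compared against the active environment with the standard library's `importlib.metadata`. This is a small convenience sketch, not a script shipped with the repository; the helper name `check_versions` is hypothetical. It ignores local version suffixes such as `+cu118`, which PyTorch wheels often carry.

```python
from importlib.metadata import PackageNotFoundError, version

# Pinned versions as listed in this README.
PINNED = {"torchgeo": "0.5.0", "timm": "0.6.12", "torch": "2.0.1"}


def check_versions(pinned=PINNED):
    """Map each package to (installed_version_or_None, matches_pin)."""
    report = {}
    for package, wanted in pinned.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            installed = None
        # Drop a local suffix such as "+cu118" before comparing with the pin.
        base = installed.split("+")[0] if installed else None
        report[package] = (installed, base == wanted)
    return report


if __name__ == "__main__":
    for package, (installed, ok) in check_versions().items():
        status = "ok" if ok else f"expected {PINNED[package]}"
        print(f"{package}: {installed or 'not installed'} ({status})")
```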

If you would like to reference our work, please use the following BibTeX entry:

@InProceedings{Scheibenreif_2024_CVPR,
    author    = {Scheibenreif, Linus and Mommert, Michael and Borth, Damian},
    title     = {Parameter Efficient Self-Supervised Geospatial Domain Adaptation},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2024},
    pages     = {27841-27851}
}