- Description

- Dependencies

- Installing

- Executing Program

- Exploration Files

- Web App Pipeline Files

- Screenshots

In this project, we analyze disaster data from Figure Eight (a dataset that contains real messages and tweets that were sent during disaster events). We also build a model for an API that categorizes these messages so that they can be sent to an appropriate disaster relief agency.

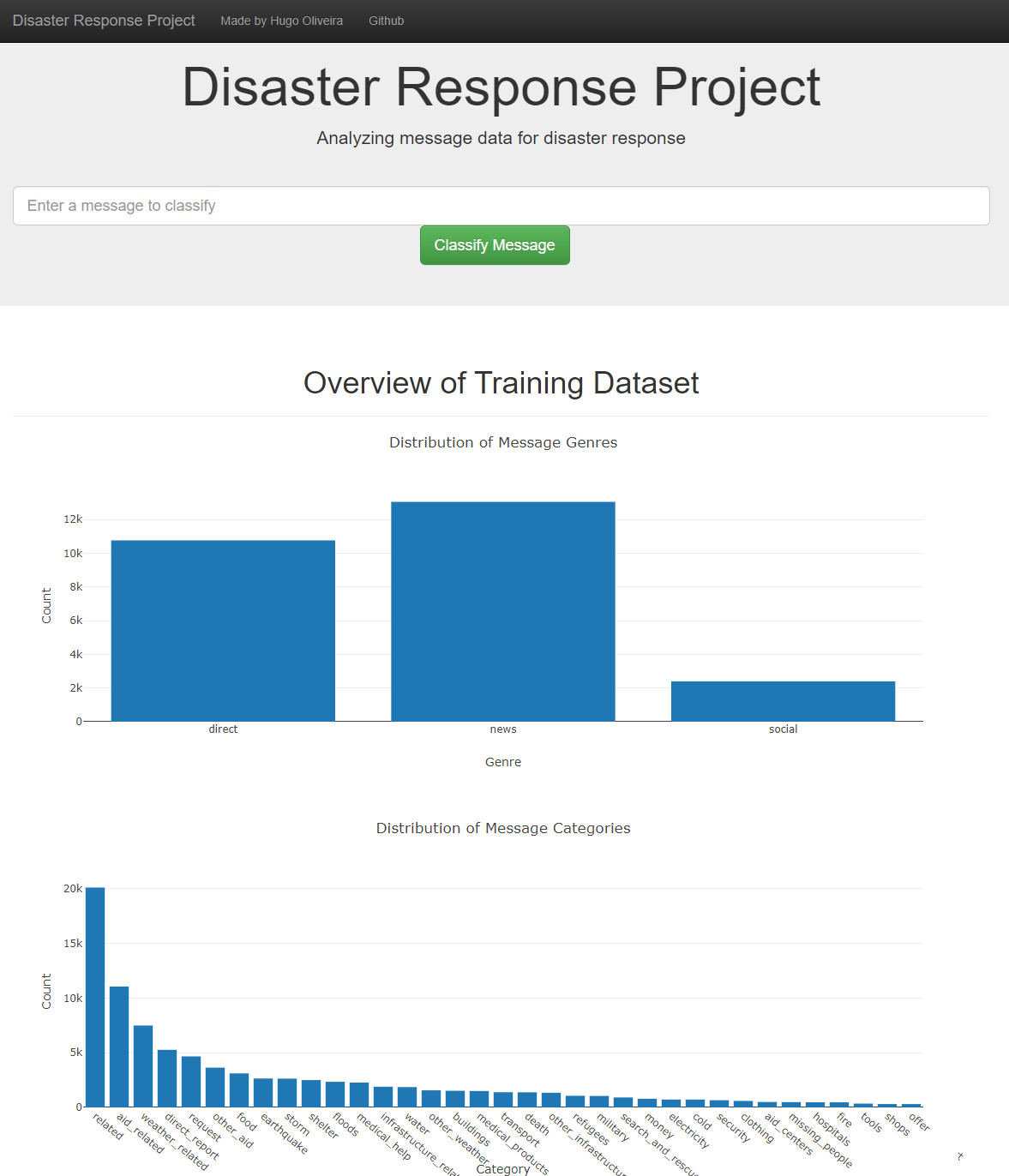

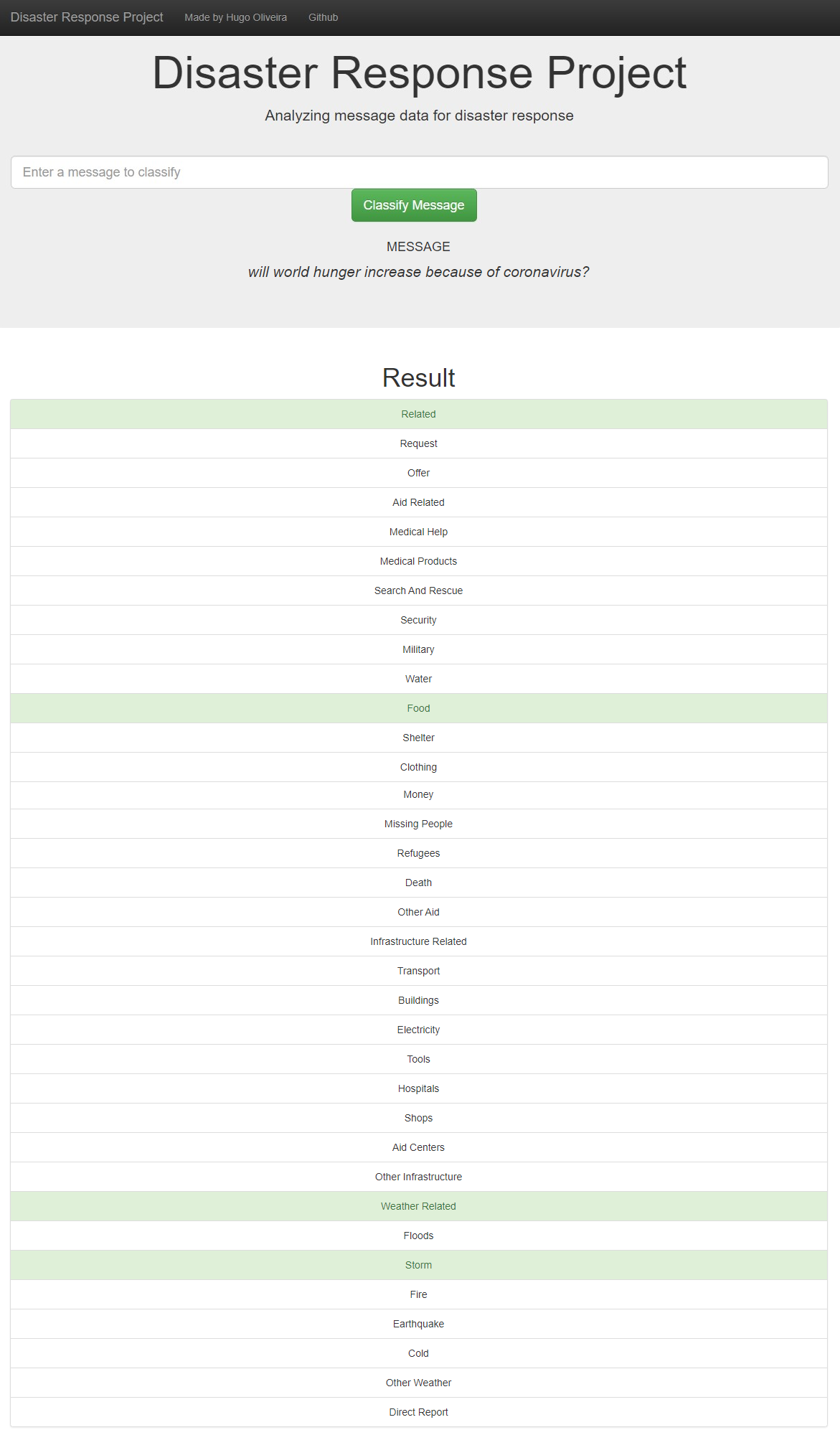

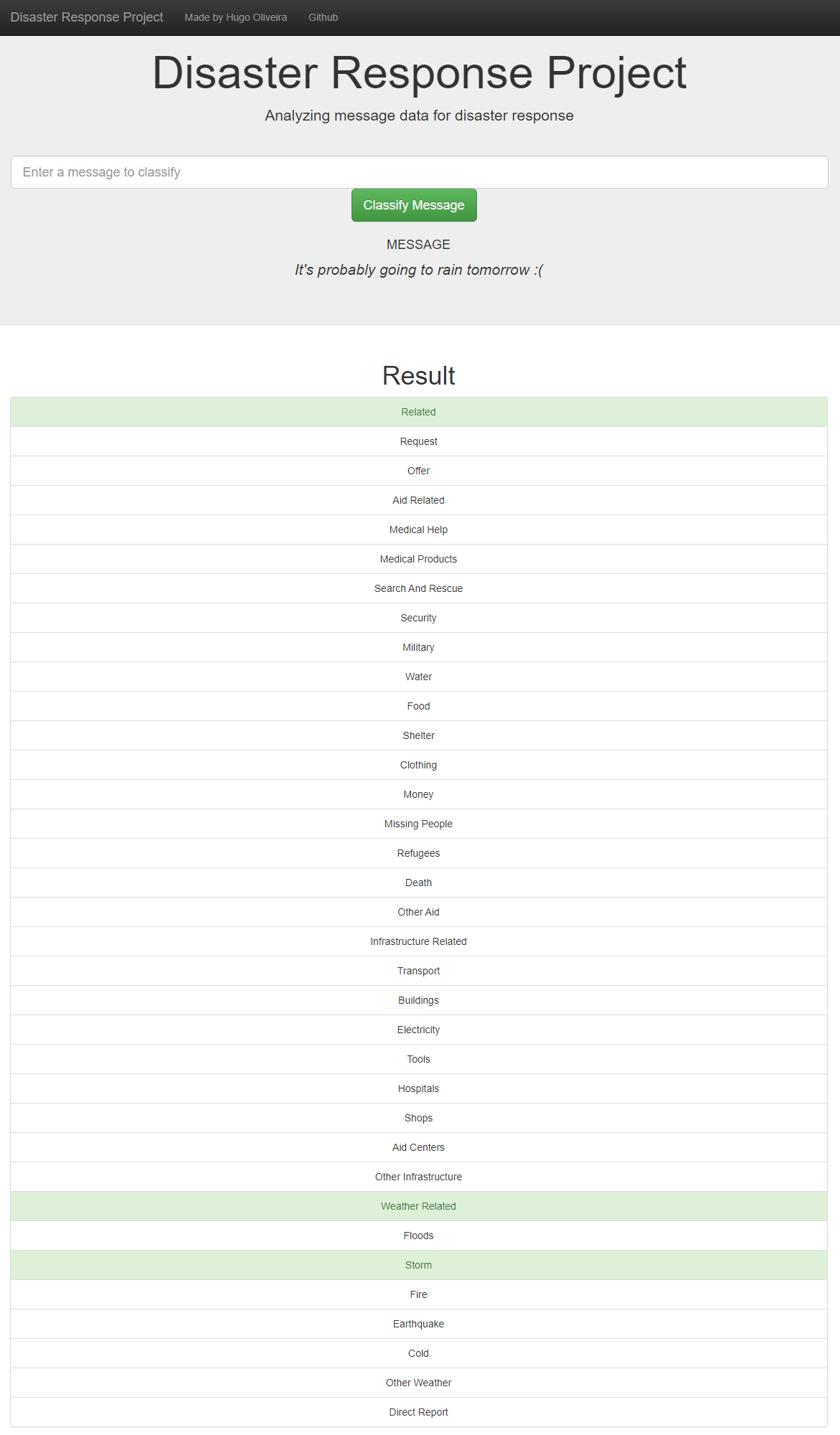

We also developed a web app where an emergency worker can input a new message, get classification results in several categories, and view visualizations of the data.

This project is divided into the following key sections:

- An ETL pipeline that loads the messages and categories datasets, merges the two datasets, cleans the data, and stores it in a SQLite database;

- A machine learning pipeline that uses NLTK, as well as scikit-learn's Pipeline and GridSearchCV to output a final model that predicts the classes for 36 different categories (multi-output classification);

- A web app that shows the classification results and visualizations for any user-provided input, in real time.
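The ETL steps in the list above (load, merge, clean, store) can be sketched roughly as follows. This is a minimal illustration, not the code in `data/process_data.py`: the column names (`id`, `message`, `water`, `floods`), the table name `messages`, and the in-memory database are all assumptions made for the example, and it uses the standard-library `sqlite3` module rather than SQLAlchemy to stay self-contained.

```python
import sqlite3

import pandas as pd

# Tiny stand-in frames; the real CSV files and their column names may differ.
messages = pd.DataFrame({
    "id": [1, 2, 2],
    "message": ["We need water", "Roads are flooded", "Roads are flooded"],
})
categories = pd.DataFrame({
    "id": [1, 2, 2],
    "water": [1, 0, 0],
    "floods": [0, 1, 1],
})

# Merge the two datasets on the shared key (assumed here to be "id").
df = messages.merge(categories, on="id", how="inner")

# Clean: drop the exact duplicate rows that repeated records produce.
df = df.drop_duplicates().reset_index(drop=True)

# Store the cleaned table in SQLite (in-memory here; a file path also works).
conn = sqlite3.connect(":memory:")
df.to_sql("messages", conn, index=False, if_exists="replace")

# Read the table back to confirm what was written.
stored = pd.read_sql("SELECT * FROM messages", conn)
print(stored)
```

Dropping duplicates after the merge matters here because repeated `id` values on both sides multiply rows during the join.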

- Python 3.5+

- Machine Learning Libraries: NumPy, Pandas, Scikit-Learn

- Natural Language Processing Libraries: NLTK

- SQLite Database Libraries: SQLAlchemy

- Model Loading and Saving Library: Pickle

- Web App and Data Visualization: Flask, Plotly

To clone the git repository:

git clone https://github.com/HROlive/disaster-response-pipeline.git

You can run the following commands in the project's directory to set up the database, train the model, and save the model.

- To run the ETL pipeline that cleans the data and stores the processed data in the database

python data/process_data.py data/disaster_messages.csv data/disaster_categories.csv data/disaster_response_db.db

- To run the ML pipeline that loads data from the database, trains the classifier, and saves the classifier as a pickle file

python models/train_classifier.py data/disaster_response_db.db models/classifier.pkl

Run the following command in the app's directory to run your web app.

python run.py

Go to http://0.0.0.0:3001/

In the data and models folders you can find two Jupyter notebooks that will help you understand how the model works step by step:

- ETL Preparation Notebook: learn everything about the implemented ETL pipeline

- ML Pipeline Preparation Notebook: look at the Machine Learning Pipeline developed with NLTK and Scikit-Learn

You can use the ML Pipeline Preparation Notebook to re-train the model or tune it through a dedicated Grid Search section.
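To give a feel for what such a grid search looks like, here is a minimal sketch of a multi-output text pipeline tuned with `GridSearchCV`. It is not the notebook's code: the toy messages, the two categories (instead of 36), the choice of `RandomForestClassifier`, and the parameter grid are all assumptions for illustration, and scikit-learn's default tokenizer stands in for the NLTK-based tokenization used in the project.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.model_selection import GridSearchCV
from sklearn.multioutput import MultiOutputClassifier
from sklearn.pipeline import Pipeline

# Toy data with two hypothetical categories: [water, floods].
# Samples are interleaved so each cross-validation fold sees both labels.
X = [
    "we need water", "the river flooded our street",
    "no drinking water here", "flood in the village",
    "please send water", "houses are flooded",
    "water supply is gone", "flooding near the bridge",
]
Y = np.array([[1, 0], [0, 1], [1, 0], [0, 1], [1, 0], [0, 1], [1, 0], [0, 1]])

# Bag-of-words counts -> TF-IDF weights -> one classifier per output column.
pipeline = Pipeline([
    ("vect", CountVectorizer()),
    ("tfidf", TfidfTransformer()),
    ("clf", MultiOutputClassifier(RandomForestClassifier(random_state=0))),
])

# Nested parameter names follow the <step>__<attribute>__<param> convention.
param_grid = {"clf__estimator__n_estimators": [5, 10]}

search = GridSearchCV(pipeline, param_grid, cv=2)
search.fit(X, Y)

print(search.best_params_)
pred = search.predict(["we have no water"])
print(pred.shape)  # one row per message, one column per category
```

Each extra value in the grid multiplies training time, so on the full dataset it is usually worth starting with a small grid.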

app/templates/*: HTML templates for the web app

data/process_data.py: Extract, Transform, Load (ETL) pipeline used for data cleaning, feature extraction, and storing data in a SQLite database

models/train_classifier.py: A machine learning pipeline that loads data, trains a model, and saves the trained model as a .pkl file for later use

app/run.py: This file can be used to launch the Flask web app used to classify disaster messages
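The hand-off between the training script and the web app relies on a pickle file. The sketch below shows only the save and load round trip with the standard-library `pickle` module. The dictionary is a stand-in for the trained model, and the temporary path is hypothetical (the project itself writes to `models/classifier.pkl`).

```python
import os
import pickle
import tempfile

# Stand-in for a trained model object; the real project pickles a fitted
# scikit-learn estimator instead of a plain dictionary.
model_artifact = {
    "categories": ["water", "floods"],
    "note": "placeholder for a fitted classifier",
}

# Hypothetical location for the example; a temp directory keeps it isolated.
path = os.path.join(tempfile.mkdtemp(), "classifier.pkl")

# Save: serialize the object to a binary file.
with open(path, "wb") as f:
    pickle.dump(model_artifact, f)

# Load: this is the step a web app would perform once at startup.
with open(path, "rb") as f:
    loaded = pickle.load(f)

print(loaded["categories"])
```

Unpickling can execute arbitrary code, so only load `.pkl` files that come from a source you trust.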

- The homepage shows some graphs about the training dataset, provided by Figure Eight

- After we input the desired message and click Classify Message, the categories that the message belongs to are highlighted in green