- [2020/03/13] Our paper has been accepted by TPAMI: Deep High-Resolution Representation Learning for Visual Recognition.

- Results on more detection frameworks are available. For example, the box mAP reaches 47.0 under the Hybrid Task Cascade framework.

- HRNet-Object-Detection has been merged into the mmdetection codebase. More results are available at model zoo and HRNet in mmdetection.

- HRNet with the anchor-free detection algorithm FCOS is available at https://github.com/HRNet/HRNet-FCOS

- Multi-scale training is available. We have added SyncBatchNorm and multi-scale training (with two implementations) to HRNetV2. Trained with multiple scales and SyncBN, the detection models achieve better performance. Code and models have been updated.

- HRNet-Object-Detection on the MaskRCNN-Benchmark codebase is available at https://github.com/HRNet/HRNet-MaskRCNN-Benchmark.

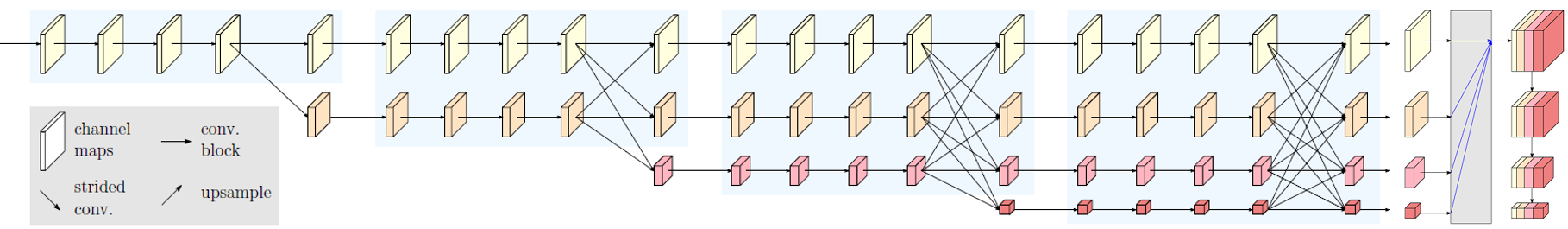

This is the official code of High-Resolution Representations for Object Detection. We extend HRNet [1] by augmenting its high-resolution representation with the (upsampled) representations from all the parallel convolutions, which leads to stronger representations. We then build a multi-level representation from the high-resolution representation and apply it to the Faster R-CNN, Mask R-CNN and Cascade R-CNN frameworks. The proposed approach achieves superior results to existing single-model networks on COCO object detection. The code is based on mmdetection.
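
To make the aggregation concrete, here is a minimal sketch that only does the shape bookkeeping; it is not the repository's implementation. It assumes HRNet's four parallel branches with widths C, 2C, 4C, 8C at strides 4, 8, 16, 32. The function name `hrnetv2p_shapes`, the 256 output channels and the five pyramid levels are illustrative assumptions, not values stated in this README.

```python
def hrnetv2p_shapes(width, input_hw, num_levels=5, out_channels=256):
    """Shape bookkeeping only: returns (concat_shape, pyramid_shapes).

    Shapes are (channels, height, width). `out_channels` and `num_levels`
    are illustrative assumptions, not values taken from this README.
    """
    height, width_px = input_hw
    # Four parallel branches: channels double as the resolution halves.
    branch_channels = [width * 2 ** i for i in range(4)]
    # Highest-resolution branch runs at stride 4.
    top_h, top_w = height // 4, width_px // 4
    # Upsample the lower-resolution branches to stride 4, then concatenate on channels.
    concat_shape = (sum(branch_channels), top_h, top_w)
    # Downsample the aggregated map step by step to get the multi-level representation.
    pyramid_shapes = [
        (out_channels, top_h // 2 ** i, top_w // 2 ** i) for i in range(num_levels)
    ]
    return concat_shape, pyramid_shapes


concat_shape, pyramid_shapes = hrnetv2p_shapes(width=18, input_hw=(800, 1216))
print(concat_shape)
for shape in pyramid_shapes:
    print(shape)
```

The sketch ignores the convolutions that mix channels after concatenation and uses integer division for the spatial sizes, so real feature maps may differ by a pixel at the coarsest levels.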

HRNetV2 ImageNet pretrained models are now available! Code and pretrained models are in HRNets for Image Classification.

All models are trained on COCO train2017 set and evaluated on COCO val2017 set. Detailed settings or configurations are in configs/hrnet.

Note: Models are trained with the newly released code, so the results differ slightly from those in the paper. Current results will be updated soon, and more models and results are coming.

Note: Pretrained HRNets can be downloaded at HRNets for Image Classification

Note: Models marked with * are implemented in the official mmdetection.

| Backbone | #Params | GFLOPs | lr sched | SyncBN | MS train | mAP | model |
|---|---|---|---|---|---|---|---|
| HRNetV2-W18 | 26.2M | 159.1 | 1x | N | N | 36.1 | OneDrive,BaiduDrive(y4hs) |
| HRNetV2-W18 | 26.2M | 159.1 | 1x | Y | N | 37.2 | OneDrive,BaiduDrive(ypnu) |
| HRNetV2-W18 | 26.2M | 159.1 | 1x | Y | Y(Default) | 37.6 | OneDrive,BaiduDrive(ekkm) |
| HRNetV2-W18 | 26.2M | 159.1 | 1x | Y | Y(ResizeCrop) | 37.6 | OneDrive,BaiduDrive(phgo) |
| HRNetV2-W18 | 26.2M | 159.1 | 2x | N | N | 38.1 | OneDrive,BaiduDrive(mz9y) |
| HRNetV2-W18 | 26.2M | 159.1 | 2x | Y | Y(Default) | 39.4 | OneDrive,BaiduDrive(ocuf) |
| HRNetV2-W18 | 26.2M | 159.1 | 2x | Y | Y(ResizeCrop) | 39.7 | |
| HRNetV2-W32 | 45.0M | 245.3 | 1x | N | N | 39.5 | OneDrive,BaiduDrive(ztwa) |
| HRNetV2-W32 | 45.0M | 245.3 | 1x | Y | Y(Default) | 41.0 | |
| HRNetV2-W32 | 45.0M | 245.3 | 2x | N | N | 40.8 | OneDrive,BaiduDrive(hmdo) |
| HRNetV2-W32 | 45.0M | 245.3 | 2x | Y | Y(Default) | 42.6 | OneDrive,BaiduDrive(k03x) |
| HRNetV2-W40 | 60.5M | 314.9 | 1x | N | N | 40.4 | OneDrive,BaiduDrive(0qda) |
| HRNetV2-W40 | 60.5M | 314.9 | 2x | N | N | 41.4 | OneDrive,BaiduDrive(xny6) |
| HRNetV2-W48* | 79.4M | 399.1 | 1x | N | N | 40.9 | model |
| HRNetV2-W48* | 79.4M | 399.1 | 2x | N | N | 41.5 | model |

| Backbone | lr sched | Mask mAP | Box mAP | model |
|---|---|---|---|---|
| HRNetV2-W18 | 1x | 34.2 | 37.3 | OneDrive,BaiduDrive(vvc1) |
| HRNetV2-W18 | 2x | 35.7 | 39.2 | OneDrive,BaiduDrive(x2m7) |
| HRNetV2-W32 | 1x | 36.8 | 40.7 | OneDrive,BaiduDrive(j2ir) |
| HRNetV2-W32 | 2x | 37.6 | 42.1 | OneDrive,BaiduDrive(tzkz) |
| HRNetV2-W48* | 1x | 38.1 | 42.4 | model |
| HRNetV2-W48* | 2x | 38.3 | 42.9 | model |

Note: We follow the original paper [2] and adopt 280k training iterations, which equals 20 epochs in mmdetection.

| Backbone | lr sched | mAP | model |
|---|---|---|---|
| HRNetV2-W18* | 20e | 41.2 | model |
| HRNetV2-W32 | 20e | 43.7 | OneDrive,BaiduDrive(ydd7) |
| HRNetV2-W48* | 20e | 44.6 | model |

| Backbone | lr sched | Mask mAP | Box mAP | model |
|---|---|---|---|---|
| HRNetV2-W18* | 20e | 36.4 | 41.9 | model |
| HRNetV2-W32* | 20e | 38.5 | 44.5 | model |
| HRNetV2-W48* | 20e | 39.5 | 46.0 | model |

| Backbone | lr sched | Mask mAP | Box mAP | model |
|---|---|---|---|---|
| HRNetV2-W18* | 20e | 37.9 | 43.1 | model |
| HRNetV2-W32* | 20e | 39.6 | 45.3 | model |
| HRNetV2-W48* | 20e | 40.7 | 46.8 | model |
| HRNetV2-W48* | 28e | 41.0 | 47.0 | model |

| Backbone | GN | MS train | lr sched | Box mAP | model |
|---|---|---|---|---|---|
| HRNetV2p-W18* | Y | N | 1x | 35.2 | model |
| HRNetV2p-W18* | Y | N | 2x | 38.2 | model |
| HRNetV2p-W32* | Y | N | 1x | 37.7 | model |
| HRNetV2p-W32* | Y | N | 2x | 40.3 | model |
| HRNetV2p-W18* | Y | Y | 2x | 38.1 | model |
| HRNetV2p-W32* | Y | Y | 2x | 41.4 | model |
| HRNetV2p-W48* | Y | Y | 2x | 42.9 | model |

Procedure (Default implementation):

- Select one scale at random from the provided scales and apply it.

- Pad all images in a GPU batch (e.g. 2 images per GPU) to the same size (see `pad_size`: 1600*1000 or 1000*1600).
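
The two steps above can be sketched on image sizes alone. This is an illustration under stated assumptions, not the repository's code: the helper names are hypothetical, the aspect-preserving resize follows the usual mmdetection convention, a scale is drawn per image, and `pad_size` is read as (width, height) for landscape images only.

```python
import random


def rescale_size(height, width, scale):
    """Size after an aspect-preserving resize that fits inside `scale`."""
    long_edge, short_edge = max(scale), min(scale)
    factor = min(long_edge / max(height, width), short_edge / min(height, width))
    return int(height * factor + 0.5), int(width * factor + 0.5)


def default_multiscale(batch_sizes, img_scales, pad_size, rng=random):
    """Return the resized (h, w) of each image and the shared padded (h, w)."""
    # Step 1: pick one of the provided scales at random (assumed: per image).
    resized = [rescale_size(h, w, rng.choice(img_scales)) for h, w in batch_sizes]
    # Step 2: pad every image in the GPU batch to the same fixed canvas.
    pad_w, pad_h = pad_size  # assumed (width, height) order, landscape only
    for h, w in resized:
        if h > pad_h or w > pad_w:
            raise ValueError("resized image does not fit into pad_size")
    return resized, (pad_h, pad_w)


rng = random.Random(0)
scales = [(1600, 1000), (1000, 600), (1333, 800)]
resized, padded = default_multiscale([(480, 640), (427, 640)], scales, (1600, 1024), rng)
print(resized, padded)
```

A portrait image would need the transposed canvas (the 1000*1600 case mentioned above). The sketch raises an error in that case rather than guess how the repository chooses the orientation.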

Code (change the lines below in the config files):

    data = dict(
        imgs_per_gpu=4,
        workers_per_gpu=8,
        pad_size=(1600, 1024),
        train=dict(
            type=dataset_type,
            ann_file=data_root + 'annotations/instances_train2017.json',
            img_prefix=data_root + 'images/train2017.zip',
            img_scale=[(1600, 1000), (1000, 600), (1333, 800)],
            img_norm_cfg=img_norm_cfg,
            size_divisor=32,
            flip_ratio=0.5,
            with_mask=False,
            with_crowd=True,
            with_label=True),

The second implementation, ResizeCrop, uses less memory and less time, so it is more efficient than the former one.

Procedure (ResizeCrop implementation):

- Select one scale at random from the provided scales and apply it.

- Randomly crop images to a fixed size if they are larger than the given size.

- Pad all images to the same size (see `pad_size`).
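
The three steps above can be sketched on image sizes alone. This is a hedged illustration, not the repository's `rand_resize_crop` code: the function below is hypothetical, it treats `size` and `pad_size` as (width, height), and it leaves out the bounding-box shifting and clipping that a real crop also needs.

```python
import random


def rand_resize_crop(height, width, scales, size, pad_size, rng=random):
    """Return the crop window (x0, y0, x1, y1) and the padded (h, w)."""
    # Step 1: pick one scale at random and resize, keeping the aspect ratio.
    scale = rng.choice(scales)
    long_edge, short_edge = max(scale), min(scale)
    factor = min(long_edge / max(height, width), short_edge / min(height, width))
    new_h, new_w = int(height * factor + 0.5), int(width * factor + 0.5)
    # Step 2: crop a random window only where the resized image exceeds `size`.
    crop_w, crop_h = size  # assumed (width, height) order
    out_w, out_h = min(new_w, crop_w), min(new_h, crop_h)
    x0 = rng.randint(0, new_w - out_w)
    y0 = rng.randint(0, new_h - out_h)
    # Step 3: every crop now fits into the same padded canvas.
    pad_w, pad_h = pad_size  # assumed (width, height) order
    assert out_w <= pad_w and out_h <= pad_h
    return (x0, y0, x0 + out_w, y0 + out_h), (pad_h, pad_w)


rng = random.Random(0)
scales = [[1400, 600], [1400, 800], [1400, 1000]]
window, padded = rand_resize_crop(480, 640, scales, [1200, 800], (1216, 800), rng)
print(window, padded)
```

With the values from the two configs, the padded canvas here is 1216*800 = 972,800 pixels, against 1600*1024 = 1,638,400 for the Default implementation, roughly 40% fewer pixels per image. That is consistent with the lower memory and time cost.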

Code (change the lines below in the config files):

    data = dict(
        imgs_per_gpu=2,
        workers_per_gpu=4,
        pad_size=(1216, 800),
        train=dict(
            type=dataset_type,
            ann_file=data_root + 'annotations/instances_train2017.json',
            img_prefix=data_root + 'train2017.zip',
            img_scale=(1200, 800),
            img_norm_cfg=img_norm_cfg,
            size_divisor=1,
            extra_aug=dict(
                rand_resize_crop=dict(
                    scales=[[1400, 600], [1400, 800], [1400, 1000]],
                    size=[1200, 800]
                )),
            flip_ratio=0.5,
            with_mask=False,
            with_crowd=True,
            with_label=True),

This code was developed using Python 3.6 and PyTorch 1.0.0 on Ubuntu 16.04 with NVIDIA GPUs. Training and testing were performed on 4 NVIDIA P100 GPUs with CUDA 9.0 and cuDNN 7.0. Other platforms and GPUs have not been fully tested.

- Install PyTorch 1.0 following the official instructions.

- Install `mmcv`:

      pip install mmcv

- Install `pycocotools`:

      git clone https://github.com/cocodataset/cocoapi.git \
       && cd cocoapi/PythonAPI \
       && python setup.py build_ext install \
       && cd ../../

- Install `NVIDIA/apex` to enable SyncBN:

      git clone https://github.com/NVIDIA/apex
      cd apex
      python setup.py install --cuda_ext

- Install `HRNet-Object-Detection`:

      git clone https://github.com/HRNet/HRNet-Object-Detection.git
      cd HRNet-Object-Detection
      # compile CUDA extensions.
      chmod +x compile.sh
      ./compile.sh
      # run setup
      python setup.py install
      # or install locally
      python setup.py install --user

For more details, see INSTALL.md.

    cd HRNet-Object-Detection
    # Download pretrained models into this folder
    mkdir hrnetv2_pretrained

Please download the COCO dataset from cocodataset. If you use the zip format, specify `CocoZipDataset` in the config files; if you unzip the downloaded dataset, specify `CocoDataset`.
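
The dataset choice above can be captured in a small helper. This is a minimal sketch: `coco_train_settings` is a hypothetical name, the `data/coco/` root is only an example, and the unzipped folder name `train2017/` is an assumption that mirrors the zip file name used in the configs.

```python
def coco_train_settings(data_root, zipped):
    """Return the dataset-related config entries for zipped or unzipped COCO images."""
    return dict(
        # CocoZipDataset reads images from the zip file, CocoDataset from a folder.
        type='CocoZipDataset' if zipped else 'CocoDataset',
        ann_file=data_root + 'annotations/instances_train2017.json',
        # The unzipped folder name is an assumption, not taken from this README.
        img_prefix=data_root + ('train2017.zip' if zipped else 'train2017/'),
    )


print(coco_train_settings('data/coco/', zipped=True))
print(coco_train_settings('data/coco/', zipped=False))
```

The returned entries correspond to the `type`, `ann_file` and `img_prefix` keys of the `train=dict(...)` section in the configs. Adjust the paths to your own data layout.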

Please specify the configuration file in `configs` (the learning rate should be adjusted when the number of GPUs changes).

    python -m torch.distributed.launch --nproc_per_node <GPUS NUM> tools/train.py <CONFIG-FILE> --launcher pytorch
    # example:
    python -m torch.distributed.launch --nproc_per_node 4 tools/train.py configs/hrnet/faster_rcnn_hrnetv2p_w18_1x.py --launcher pytorch

To evaluate a trained model:

    python tools/test.py <CONFIG-FILE> <MODEL WEIGHT> --gpus <GPUS NUM> --eval bbox --out result.pkl
    # example:
    python tools/test.py configs/hrnet/faster_rcnn_hrnetv2p_w18_1x.py work_dirs/faster_rcnn_hrnetv2p_w18_1x/model_final.pth --gpus 4 --eval bbox --out result.pkl

NOTE: If you run into problems, you may find a solution in the issues of the official mmdetection repo, or you can submit a new issue in our repo.
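
On adjusting the learning rate when the number of GPUs changes: a common heuristic is the linear scaling rule, which keeps the ratio of learning rate to total batch size constant. The sketch below assumes that rule. The reference point (lr 0.02 for 16 images per iteration) is a hypothetical example, so check the actual values in your config file.

```python
def scaled_lr(base_lr, base_batch_size, num_gpus, imgs_per_gpu):
    """Linear scaling rule: keep lr / total batch size constant."""
    total_batch_size = num_gpus * imgs_per_gpu
    return base_lr * total_batch_size / base_batch_size


# Hypothetical reference point: lr=0.02 tuned for 16 images per iteration.
print(scaled_lr(0.02, 16, num_gpus=4, imgs_per_gpu=2))
print(scaled_lr(0.02, 16, num_gpus=2, imgs_per_gpu=2))
```

Halving the total batch size halves the learning rate under this rule. It is a starting point rather than a guarantee, and very small or very large batches may need further tuning.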

If you find this work or code helpful in your research, please cite:

    @inproceedings{SunXLW19,
      title={Deep High-Resolution Representation Learning for Human Pose Estimation},
      author={Ke Sun and Bin Xiao and Dong Liu and Jingdong Wang},
      booktitle={CVPR},
      year={2019}
    }

    @article{WangSCJDZLMTWLX19,
      title={Deep High-Resolution Representation Learning for Visual Recognition},
      author={Jingdong Wang and Ke Sun and Tianheng Cheng and
              Borui Jiang and Chaorui Deng and Yang Zhao and Dong Liu and Yadong Mu and
              Mingkui Tan and Xinggang Wang and Wenyu Liu and Bin Xiao},
      journal={TPAMI},
      year={2019}
    }

[1] Deep High-Resolution Representation Learning for Visual Recognition. Jingdong Wang, Ke Sun, Tianheng Cheng, Borui Jiang, Chaorui Deng, Yang Zhao, Dong Liu, Yadong Mu, Mingkui Tan, Xinggang Wang, Wenyu Liu, Bin Xiao. Accepted by TPAMI.

Thanks to @open-mmlab for providing the easy-to-use code and kind help!