This is the official repo for the paper "SRFormer: Empowering Regression-Based Text Detection Transformer with Segmentation".

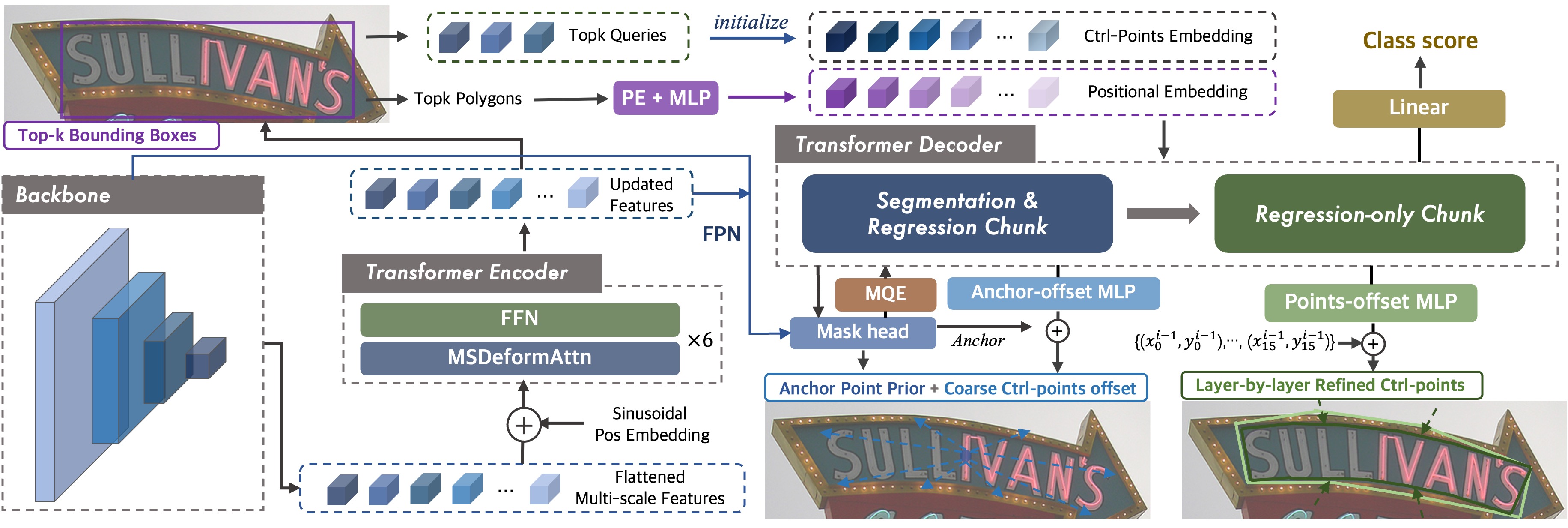

Abstract. Existing techniques for text detection can be broadly classified into two primary groups: segmentation-based methods and regression-based methods. Segmentation models offer enhanced robustness to font variations but require intricate post-processing, leading to high computational overhead. Regression-based methods undertake instance-aware prediction but face limitations in robustness and data efficiency due to their reliance on high-level representations. In our academic pursuit, we propose SRFormer, a unified DETR-based model with amalgamated Segmentation and Regression, aiming at the synergistic harnessing of the inherent robustness in segmentation representations, along with the straightforward post-processing of instance-level regression. Our empirical analysis indicates that favorable segmentation predictions can be obtained in the initial decoder layers. In light of this, we constrain the incorporation of segmentation branches to the first few decoder layers and employ progressive regression refinement in subsequent layers, achieving performance gains while minimizing additional computational load from the mask. Furthermore, we propose a Mask-informed Query Enhancement module, where we take the segmentation result as a natural soft-ROI to pool and extract robust pixel representations to diversify and enhance instance queries. Extensive experimentation across multiple benchmarks has yielded compelling findings, highlighting our method's exceptional robustness, superior training and data efficiency, as well as its state-of-the-art performance.
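The Mask-informed Query Enhancement idea described above (using the segmentation result as a soft-ROI to pool pixel features) can be illustrated with a minimal, dependency-free sketch. This is not the repository's implementation: the function names `mask_pool` and `enhance_query`, the nested-list tensor layout, and the additive fusion step are all assumptions made for illustration.

```python
def mask_pool(features, mask_probs, eps=1e-6):
    """Soft-ROI pooling: average per-pixel features, weighted by mask probability.

    features:   H x W x C nested lists of floats (pixel feature map)
    mask_probs: H x W nested lists of floats in [0, 1] (predicted text mask)
    """
    channels = len(features[0][0])
    pooled = [0.0] * channels
    total = 0.0
    for feat_row, mask_row in zip(features, mask_probs):
        for feat, weight in zip(feat_row, mask_row):
            total += weight
            for c in range(channels):
                pooled[c] += weight * feat[c]
    # eps avoids division by zero when the mask is empty
    return [value / (total + eps) for value in pooled]


def enhance_query(query, pooled):
    """Hypothetical fusion: add the pooled pixel feature to the instance query."""
    return [q + p for q, p in zip(query, pooled)]


if __name__ == "__main__":
    features = [[[1.0, 2.0], [10.0, 20.0]],
                [[100.0, 200.0], [3.0, 4.0]]]
    mask = [[1.0, 0.0],
            [0.0, 1.0]]  # only the two diagonal pixels belong to the instance
    pooled = mask_pool(features, mask)
    print(enhance_query([0.5, 0.5], pooled))
```

Pixels with low mask probability contribute little to the pooled vector, which is what lets the mask act as a soft region of interest rather than a hard crop.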

08/21/2023: Core code & checkpoints uploaded

08/28/2023: Update data preparation

| Benchmark | Backbone | Precision | Recall | F-measure | Pre-trained Model | Fine-tuned Model |
|---|---|---|---|---|---|---|
| Total-Text | Res50 | 92.2 | 87.9 | 90.0 | OneDrive | Seg#1; Seg#2; Seg#3 |
| CTW1500 | Res50 | 91.6 | 87.7 | 89.6 | Same as above | Seg#1; Seg#3 |
| ICDAR19 ArT | Res50 | 86.2 | 73.4 | 79.3 | OneDrive | Seg#1 |
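As a quick sanity check on the table, the F-measure column can be recomputed from precision and recall. The sketch below assumes the F-measure is the usual harmonic mean of the two; the helper name `f_measure` is just for illustration.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the results table
results = {
    "Total-Text": (92.2, 87.9),
    "CTW1500": (91.6, 87.7),
    "ICDAR19 ArT": (86.2, 73.4),
}

for name, (p, r) in results.items():
    print(f"{name}: F-measure = {f_measure(p, r):.1f}")
```

Under that assumption the recomputed values round to 90.0, 89.6 and 79.3, matching the reported column.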

It's recommended to configure the environment using Anaconda. Python 3.8 + PyTorch 1.9.1 + CUDA 11.1 + Detectron2 are suggested.

conda create -n SRFormer python=3.8 -y

conda activate SRFormer

pip install torch==1.9.1+cu111 torchvision==0.10.1+cu111 -f https://download.pytorch.org/whl/torch_stable.html

pip install opencv-python scipy timm shapely albumentations Polygon3

python -m pip install detectron2

pip install setuptools==59.5.0

cd SRFormer-Text-Detection

python setup.py build develop
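After installation, it can help to confirm which package versions actually ended up in the environment. The following is a small optional sketch using only the standard library; the helper name `installed_versions` and the list of packages checked are illustrative choices, not part of this repo.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Return {package: version string, or None if the package is not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    to_check = ["torch", "torchvision", "detectron2", "setuptools"]
    for name, ver in installed_versions(to_check).items():
        print(f"{name}: {ver or 'not installed'}")
```

Run it inside the activated `SRFormer` environment and compare the output with the versions pinned in the commands above.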

SynthText-150K & MLT & LSVT (images): Source

Total-Text (including rotated images): OneDrive

CTW1500 (including rotated images): OneDrive

ICDAR19 ArT (including rotated images): OneDrive

Annotations for training and evaluation: OneDrive

Organize your data as follows:

|- datasets

|- syntext1

| |- train_images

| └─ train_poly_pos.json

|- syntext2

| |- train_images

| └─ train_poly_pos.json

|- mlt

| |- train_images

| └─ train_poly_pos.json

|- valid_mlt

| |- All

| |- Arabic

| |- Bangla

| |- Chinese

| |- Japanese

| |- Korean

| |- Latin

| |- Arabic_test.json

| |- Bangla_test.json

| |- Chinese_test.json

| |- Japanese_test.json

| |- Korean_test.json

| |- Latin_test.json

| └─ mlt_valid_test.json

|- totaltext

| |- test_images_rotate

| |- train_images_rotate

| |- test_poly.json

| |─ train_poly_pos.json

| └─ train_poly_rotate_pos.json

|- ctw1500

| |- test_images

| |- train_images_rotate

| |- test_poly.json

| └─ train_poly_rotate_pos.json

|- lsvt

| |- train_images

| └─ train_poly_pos.json

|- art

| |- test_images

| |- train_images_rotate

| |- test_poly.json

| |─ train_poly_pos.json

| └─ train_poly_rotate_pos.json

|- evaluation

| |- *.zip
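Before training, the layout above can be checked with a short script. This is a sketch, not a tool shipped with the repo: `EXPECTED` covers only the three fine-tuning benchmarks, `missing_entries` is a hypothetical helper, and it assumes each entry sits inside the dataset folder listed just before it, under a `datasets/` root.

```python
from pathlib import Path

# Subset of the layout listed above, relative to the datasets/ root.
# Assumption: every entry is nested inside the dataset folder that precedes it.
EXPECTED = {
    "totaltext": [
        "test_images_rotate", "train_images_rotate", "test_poly.json",
        "train_poly_pos.json", "train_poly_rotate_pos.json",
    ],
    "ctw1500": [
        "test_images", "train_images_rotate", "test_poly.json",
        "train_poly_rotate_pos.json",
    ],
    "art": [
        "test_images", "train_images_rotate", "test_poly.json",
        "train_poly_pos.json", "train_poly_rotate_pos.json",
    ],
}


def missing_entries(root, expected=EXPECTED):
    """Return the expected files/folders that do not exist under root."""
    root = Path(root)
    return [
        f"{dataset}/{entry}"
        for dataset, entries in expected.items()
        for entry in entries
        if not (root / dataset / entry).exists()
    ]


if __name__ == "__main__":
    for path in missing_entries("datasets"):
        print("missing:", path)
```

An empty output means every listed entry was found; extend `EXPECTED` with the pre-training datasets if you plan to pre-train from scratch.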

1. Pre-train:

To pre-train the model for Total-Text and CTW1500, use the config file configs/SRFormer/Pretrain/R_50_poly.yaml. For ICDAR19 ArT, use configs/SRFormer/Pretrain_ArT/R_50_poly.yaml. Adjust the number of GPUs to match your hardware.

python tools/train_net.py --config-file ${CONFIG_FILE} --num-gpus 8

2. Fine-tune: With the pre-trained model, use the following command to fine-tune it on the target benchmark. The pre-trained models are also provided. For example:

python tools/train_net.py --config-file configs/SRFormer/TotalText/R_50_poly.yaml --num-gpus 8

python tools/train_net.py --config-file ${CONFIG_FILE} --num-gpus ${NUM_GPUS} --eval-only

For ICDAR19 ArT, a file named art_submit.json will be saved in output/r_50_poly/art/finetune/inference/. This JSON file can be submitted directly to the ICDAR19-ArT website for evaluation.
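The pre-training, fine-tuning, and evaluation commands above differ only in the config file and flags, so they can be assembled by a small wrapper when scripting several runs. The sketch below is an assumption-laden convenience, not part of the repo: `train_net_command` and `PRETRAIN_CONFIGS` are hypothetical names, and only the flags shown in the commands above are used.

```python
import shlex

# Pre-training configs named in this README.
# Path casing is assumed to be "SRFormer", as in the fine-tune command.
PRETRAIN_CONFIGS = {
    "Total-Text": "configs/SRFormer/Pretrain/R_50_poly.yaml",
    "CTW1500": "configs/SRFormer/Pretrain/R_50_poly.yaml",
    "ICDAR19 ArT": "configs/SRFormer/Pretrain_ArT/R_50_poly.yaml",
}


def train_net_command(config_file, num_gpus=8, eval_only=False):
    """Build the argument list for tools/train_net.py."""
    cmd = [
        "python", "tools/train_net.py",
        "--config-file", config_file,
        "--num-gpus", str(num_gpus),
    ]
    if eval_only:
        cmd.append("--eval-only")
    return cmd


if __name__ == "__main__":
    # Print the commands instead of running them, so they can be reviewed first.
    print(shlex.join(train_net_command(PRETRAIN_CONFIGS["ICDAR19 ArT"], num_gpus=4)))
    print(shlex.join(train_net_command(
        "configs/SRFormer/TotalText/R_50_poly.yaml", num_gpus=1, eval_only=True)))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root once the printed command looks right.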

python demo/demo.py --config-file ${CONFIG_FILE} --input ${IMAGES_FOLDER_OR_ONE_IMAGE_PATH} --output ${OUTPUT_PATH} --opts MODEL.WEIGHTS <MODEL_PATH>

SRFormer is inspired a lot by TESTR and DPText-DETR. Thanks for their great work!