This code builds upon the 🤗 Transformers library to evaluate curriculum learning strategies for information retrieval. Specifically, it can be used to fine-tune BERT for conversation response ranking, with the option of employing curriculum learning. We adapted the script 'run_glue.py' to accept two main additional parameters:

--pacing_function PACING_FUNCTION: str with one of the predefined pacing functions: [linear, root_2, root_5, root_10, quadratic, geom_progression, cubic, step, standard_training]. You can also modify pacing_functions.py to add your own.
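To give a feel for what a pacing function computes, here is a minimal sketch. It is not the code in pacing_functions.py: it assumes the root-p form from the competence-based curriculum learning literature, where `delta` is the fraction of the training data available at step 0 and `total_steps` is the step at which all data becomes available. The function names, signatures, and the default `delta=0.33` are illustrative assumptions.

```python
def root_pacing(step, total_steps, p=2, delta=0.33):
    """Fraction of the easiest-first training set usable at `step`.

    Assumed form: (step * (1 - delta**p) / total_steps + delta**p) ** (1/p),
    capped at 1.0. This is a sketch, not the repository's implementation.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0 < delta <= 1:
        raise ValueError("delta must be in (0, 1]")
    value = (step * (1 - delta ** p) / total_steps + delta ** p) ** (1 / p)
    return min(1.0, value)


def linear_pacing(step, total_steps, delta=0.33):
    """Linear growth from delta to 1.0 (the root form with p=1)."""
    return root_pacing(step, total_steps, p=1, delta=delta)


def standard_training(step, total_steps, delta=0.33):
    """No curriculum: the whole training set is available from the start."""
    return 1.0


if __name__ == "__main__":
    for step in (0, 250, 500, 1000):
        print(step,
              round(linear_pacing(step, 1000), 3),
              round(root_pacing(step, 1000, p=2), 3))
```

Under this assumed form, a larger `p` exposes the model to difficult instances earlier, since the available fraction grows faster at the start of training.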

--scoring_function SCORING_FUNCTION_FILE: str with the path of a file with the difficulty score for each instance in the train set. Each line must have just a float score for the training instance at that index in the training file.
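The file format described above (one float per line, aligned by index with the training file) can be produced and checked with a few lines of Python. This is a sketch only: `write_scores` and `read_scores` are hypothetical helpers, not functions from this repository.

```python
def write_scores(path, scores):
    """Write one difficulty score per line, in training-file order."""
    with open(path, "w", encoding="utf-8") as f:
        for score in scores:
            f.write(f"{float(score)}\n")


def read_scores(path, expected_count=None):
    """Read scores back, optionally checking they match the training set size."""
    scores = []
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            text = line.strip()
            if not text:
                raise ValueError(f"line {line_no}: expected a float, found an empty line")
            scores.append(float(text))
    if expected_count is not None and len(scores) != expected_count:
        raise ValueError(
            f"found {len(scores)} scores but expected {expected_count}"
        )
    return scores
```

Checking the count against the number of training instances catches a misaligned score file before training starts.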

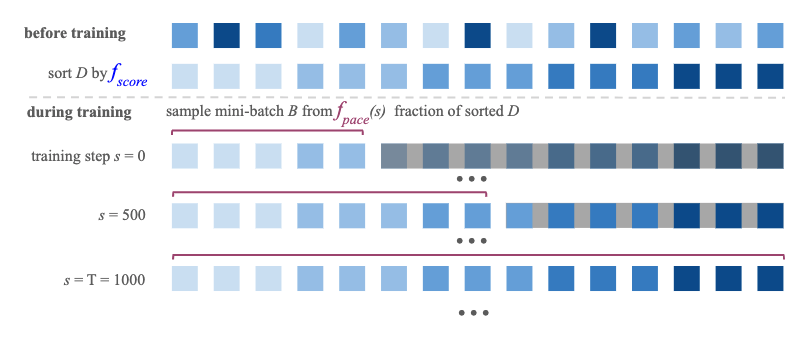

The framework is based on previous work on curriculum learning and has two main components: scoring functions and pacing functions. A scoring function measures the difficulty of an instance; in our case an instance consists of a query (a dialogue context, i.e. the set of previous utterances) and documents (a set of candidate responses). The pacing function determines how quickly training moves from easy to difficult instances.
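The interaction between the two components can be sketched as follows: instances are ranked by difficulty score, and at each training step only the easiest fraction (as given by the pacing function) is eligible for sampling. The sketch assumes that a lower score means an easier instance; `curriculum_subset` and `sample_batch` are hypothetical names, not part of this repository.

```python
import random


def curriculum_subset(scores, fraction):
    """Indices of the easiest `fraction` of instances (lower score = easier)."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    # Rank instance indices from easiest to most difficult.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i])
    # Always keep at least one instance available.
    keep = max(1, int(fraction * len(scores)))
    return ranked[:keep]


def sample_batch(scores, fraction, batch_size, rng=random):
    """Sample a batch of indices uniformly from the currently available subset."""
    available = curriculum_subset(scores, fraction)
    return [rng.choice(available) for _ in range(batch_size)]


if __name__ == "__main__":
    difficulty = [0.9, 0.1, 0.5, 0.3]
    print(curriculum_subset(difficulty, 0.5))
    print(sample_batch(difficulty, 0.5, batch_size=3))
```

As the fraction returned by the pacing function approaches 1.0, the available subset grows to the full training set and sampling becomes equivalent to standard training.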

This is the code used for the ECIR'20 paper "Curriculum Learning Strategies for IR: An Empirical Study on Conversation Response Ranking" [preprint]. To reproduce the results of the paper, download the MANtIS and MSDialog datasets, compute the scoring functions for each training set with the 'calculate_scoring_function.py' script, and pass the resulting difficulty scores as input to the BERT script ('run_glue.py'). To compute the scoring functions based on BERT, run 'run_glue.py' with the --save_aps parameter and use the output files 'losses_' and 'preds_', respectively.

If you have any questions, feel free to send me an email.

@InProceedings{10.1007/978-3-030-45439-5_46,
author="Penha, Gustavo and Hauff, Claudia",
editor="Jose, Joemon M. and Yilmaz, Emine and Magalh{\~a}es, Jo{\~a}o and Castells, Pablo and Ferro, Nicola and Silva, M{\'a}rio J. and Martins, Fl{\'a}vio",
title="Curriculum Learning Strategies for IR",
booktitle="Advances in Information Retrieval",
year="2020",
publisher="Springer International Publishing",
address="Cham",
pages="699--713",
isbn="978-3-030-45439-5"
}