- "Perceptual losses for real-time style transfer and super-resolution"

- "A Learned Representation For Artistic Style"

- "Exploring the Neural Algorithm of Artistic Style"

- "Feed-forward neural doodle" blog

- "Semantic Style Transfer and Turning Two-Bit Doodles into Fine Artworks"

- "Texture Networks - Feed-forward Synthesis of Textures and Stylized Images"

- "A Neural Algorithm of Artistic Style"

- "Combining Markov Random Fields and Convolutional Neural Networks for Image Synthesis"

- "Instance Normalization - The Missing Ingredient for Fast Stylization"

This repository is my effort to learn about CNNs and neural style transfer. As a result, some parts of the code may be poorly named, uncommented, or even buggy. It is also my first attempt to publish a project unrelated to coursework on GitHub, so please bear with me (and my code).

Note that some of the code and images were taken and adapted from other neural-style-related Git repositories online. I did my best to cite every source I used, but if I missed anything, or if you are one of the authors and would rather your code not be used in this repo, please let me know.

The following examples come from stylize_example.py in the repo.

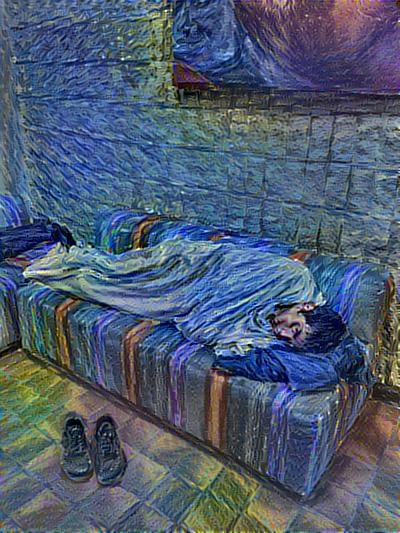

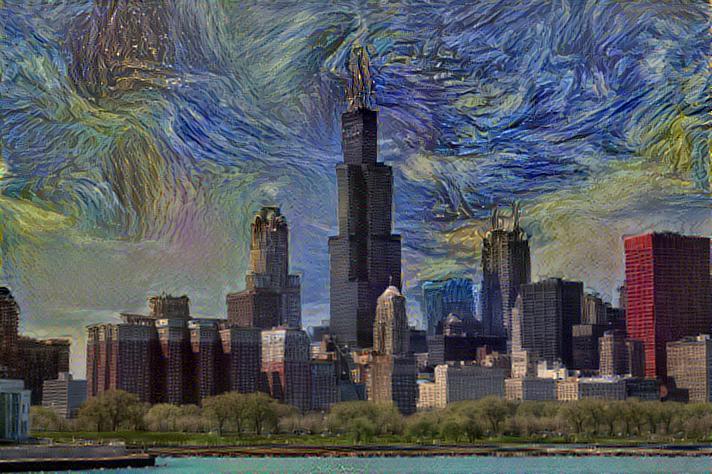

This is the output of the classic neural style algorithm described in "A Neural Algorithm of Artistic Style". It takes one content image and one style image and merges the two, so that the result depicts the same scene in a different artistic "style".
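As a rough illustration of how content and style are traded off, here is a minimal NumPy sketch of the two loss terms. It is not the code from this repo: the function names, the `H*W*C` normalization, and the default weights are assumptions, and the feature maps would normally come from several layers of a pretrained CNN rather than from a single array.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations of a (H, W, C) feature map."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    # Normalizing by H*W*C is an assumption; implementations differ here.
    return np.dot(flat.T, flat) / float(h * w * c)

def content_loss(gen_feats, content_feats):
    """Mean squared difference between feature maps of the same shape."""
    return float(np.mean((gen_feats - content_feats) ** 2))

def style_loss(gen_feats, style_feats):
    """Squared difference between Gram matrices; spatial layout is ignored."""
    diff = gram_matrix(gen_feats) - gram_matrix(style_feats)
    return float(np.sum(diff ** 2))

def total_loss(gen_feats, content_feats, style_feats,
               content_weight=1.0, style_weight=1.0):
    """Weighted sum that the generated image is optimized to minimize."""
    return (content_weight * content_loss(gen_feats, content_feats)
            + style_weight * style_loss(gen_feats, style_feats))
```

Because the Gram matrix sums over all spatial positions, the style image does not need to have the same size as the content image, and rearranging the pixels of a feature map leaves its style term unchanged.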

This uses essentially the same algorithm as "A Neural Algorithm of Artistic Style" but without a content image. As a result, the output shows only the texture features of the style image.

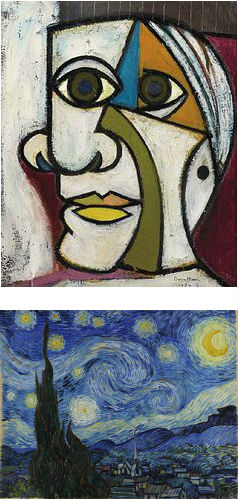

This output was made by weighting Van Gogh's "starry sky" style at 70% and Picasso's "mind, spirit and emotion" at 30%.
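One plausible way to combine several styles is to take a weighted sum of the per-style Gram losses. The sketch below assumes exactly that; `blended_style_loss` is a hypothetical name, and the repo may blend styles differently (for example by mixing the Gram matrices themselves).

```python
import numpy as np

def gram_matrix(features):
    """Channel correlations of a (H, W, C) feature map."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    return np.dot(flat.T, flat) / float(h * w * c)

def blended_style_loss(gen_feats, style_feats_list, weights):
    """Weighted sum of Gram losses, one term per style image.

    Weights are rescaled to sum to 1, so (0.7, 0.3) and (7, 3) are equivalent.
    """
    total = float(sum(weights))
    gen_gram = gram_matrix(gen_feats)
    loss = 0.0
    for style_feats, weight in zip(style_feats_list, weights):
        diff = gen_gram - gram_matrix(style_feats)
        loss += (weight / total) * float(np.sum(diff ** 2))
    return loss
```

With this formulation, a 70/30 blend would be requested as `blended_style_loss(gen, [starry_sky, picasso], [0.7, 0.3])`, where both style arrays are placeholder feature maps.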

This one was achieved by applying the MRF loss mentioned in this paper to two images with similar content but different "style". The result turns the original daytime view of Tokyo into the same scene at sunset.
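For readers unfamiliar with the MRF loss, here is a minimal NumPy sketch of the usual formulation (as in the "Combining Markov Random Fields and Convolutional Neural Networks for Image Synthesis" paper listed above): each patch of the generated feature map is matched to its most similar style patch by normalized cross-correlation, and the loss is the summed squared distance to those matches. The function names and the 3x3 patch size are assumptions, not this repo's code.

```python
import numpy as np

def extract_patches(features, size=3):
    """All size x size patches of a (H, W, C) map, flattened to rows."""
    h, w, c = features.shape
    patches = [features[i:i + size, j:j + size, :].ravel()
               for i in range(h - size + 1)
               for j in range(w - size + 1)]
    return np.array(patches)

def mrf_loss(gen_feats, style_feats, size=3):
    """Sum of squared distances from each generated patch to its best match."""
    gen_patches = extract_patches(gen_feats, size)
    style_patches = extract_patches(style_feats, size)
    eps = 1e-8  # avoids division by zero for all-zero patches
    gen_unit = gen_patches / (
        np.linalg.norm(gen_patches, axis=1, keepdims=True) + eps)
    style_unit = style_patches / (
        np.linalg.norm(style_patches, axis=1, keepdims=True) + eps)
    # Normalized cross-correlation between every pair of patches.
    similarity = np.dot(gen_unit, style_unit.T)
    nearest = np.argmax(similarity, axis=1)
    return float(np.sum((gen_patches - style_patches[nearest]) ** 2))
```

Unlike the Gram loss, this term keeps local spatial structure, which is why it suits pairs of images with similar content.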

In the same paper, the authors also make use of semantically labeled images for style transfer and obtain some interesting results, which are reproduced here.

This one is a simple improvement I made to neural style. When using the program, I found that it sometimes stylizes one part of the image a little more than I want and other parts a little less. With the help of what I call a 'style weight mask' (in black and white), one can control the degree of stylization for every single pixel.

The images from left to right are: content image, style weight mask, output, style image.
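The exact mechanics of the style weight mask are not spelled out here, so the following is only one possible way to realize the idea, not necessarily what this repo does. It assumes the mask has already been resized to the feature map's resolution, with values in [0, 1] where 1 means full stylization, and it weights each pixel's contribution to the Gram matrix by the mask. All names are hypothetical.

```python
import numpy as np

def masked_gram_matrix(features, mask):
    """Gram matrix where each pixel's contribution is scaled by the mask.

    features: (H, W, C) feature map; mask: (H, W) values in [0, 1].
    """
    h, w, c = features.shape
    weighted = features * mask[:, :, np.newaxis]
    flat = weighted.reshape(h * w, c)
    # Normalize by the effective number of pixels so an all-ones mask
    # reduces to the ordinary Gram matrix (assumed normalization).
    norm = np.sum(mask ** 2) * c + 1e-8
    return np.dot(flat.T, flat) / norm

def masked_style_loss(gen_feats, style_gram, mask):
    """Squared distance between the masked Gram matrix and a target Gram."""
    diff = masked_gram_matrix(gen_feats, mask) - style_gram
    return float(np.sum(diff ** 2))
```

In this sketch, pixels where the mask is 0 receive no style gradient at all, and gray values give intermediate amounts of stylization.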

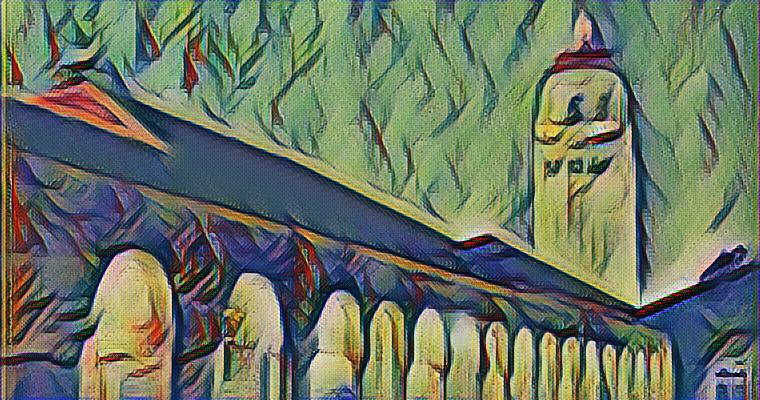

The following examples come from feed_forward_neural_style_examples.py in the repo.

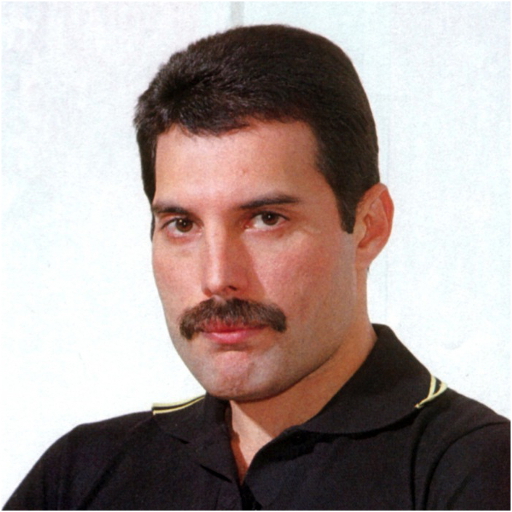

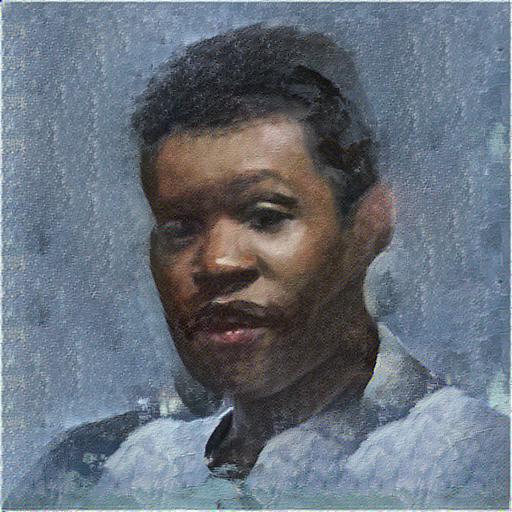

Original image:

| ModelName | Style | Samples |

|---|---|---|

| starry sky |  |

|

| mind, spirit and emotion |  |

|

| composition_v |  |

|

| feathers |  |

|

| la_muse |  |

|

| mosaic |  |

|

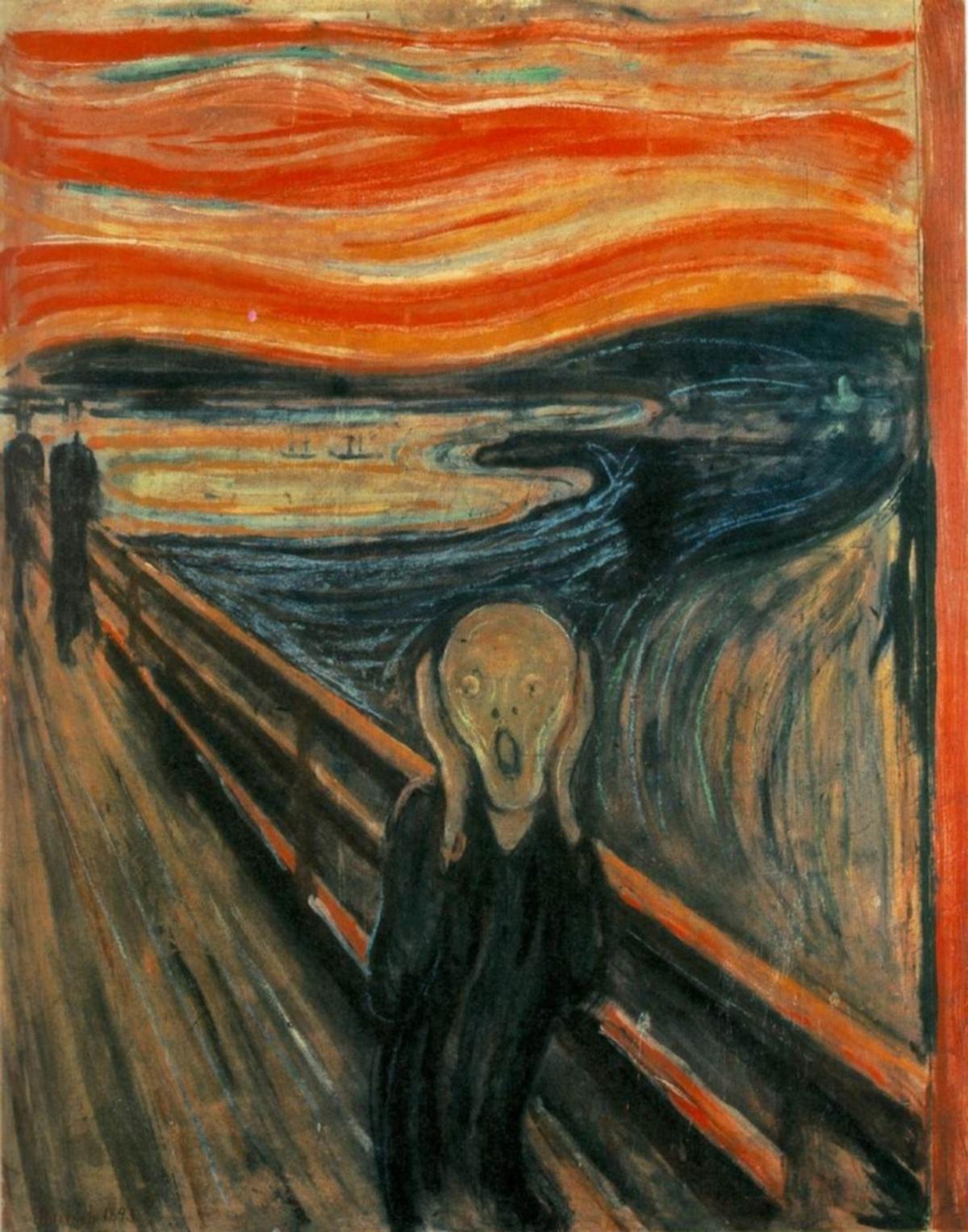

| the scream |  |

|

| udnie |  |

|

| wave |  |

|

These are just some examples. With more training time, the results can be better. (Some style images are taken from here)

TODO: IMAGE MISSING. For now check this blog for sample images.

I basically reimplemented the feed-forward version of the neural doodle, which allows anyone to paint like a real artist within minutes.

- I have yet to implement style transfer that preserves the colors of the content image.

- It would be fun to improve the neural doodle so that it can draw more semantically complicated things. So far I have only tried it on paintings of natural scenery.

- TensorFlow

- Anaconda Python 2.7

- typing

- OpenCV

- Using a CPU works but is slow; a GPU is better for training models.

- If you run into out-of-memory (OOM) errors, decrease the batch size and/or the input/output size.

- More examples can be found in stylize_examples and feed_forward_neural_style_examples
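To see why the OOM tip above works, here is a back-of-the-envelope estimate of the memory taken by a single layer's activations. The function name and the 4-bytes-per-value (float32) default are assumptions, and real usage is higher because many layers and their gradients are kept in memory at once.

```python
def activation_bytes(batch_size, height, width, channels, bytes_per_value=4):
    """Memory for one (batch, H, W, C) activation tensor, in bytes."""
    return batch_size * height * width * channels * bytes_per_value

full = activation_bytes(4, 256, 256, 64)
half_batch = activation_bytes(2, 256, 256, 64)
half_side = activation_bytes(4, 128, 128, 64)
```

Halving the batch size halves this figure, while halving the image side length cuts it to a quarter, so reducing the input/output size is usually the more effective of the two.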