EgoTV: Egocentric Task Verification from Natural Language Task Descriptions

Rishi Hazra, Brian Chen, Akshara Rai, Nitin Kamra, Ruta Desai

ICCV 2023

arxiv | bibtex | website

$ git clone https://github.com/facebookresearch/EgoTV.git

$ export GENERATE_DATA=$(pwd)/EgoTV/alfred

$ cd $GENERATE_DATA

We have built the dataset generation code on top of the ALFRED dataset [3] repository.

$ conda create -n <virtual_env> python==3.10.0

$ source activate <virtual_env>

$ bash install_requirements.sh

The EgoTV data can also be downloaded here. We also provide data generation instructions below.

$ cd $GENERATE_DATA/gen

$ python scripts/generate_trajectories.py --save_path <your save path> --split_type <split_type>

# append the following to generate with multiprocessing for faster generation

# --num_threads <num_threads> --in_parallel

The data is generated in: save_path
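The generation command above can also be assembled programmatically, for example to loop over several splits. The following is a minimal sketch: the flags and split names are the ones given in this section, while the helper name `build_generate_command` and the example save path are assumptions made for illustration and are not part of the repository.

```python
import shlex

# Split names as listed in this README.
SPLIT_TYPES = ["train", "novel_tasks", "novel_steps", "novel_scenes", "abstraction"]


def build_generate_command(save_path, split_type, num_threads=None):
    """Return the argv list for scripts/generate_trajectories.py (hypothetical helper)."""
    if split_type not in SPLIT_TYPES:
        raise ValueError(f"unknown split_type {split_type!r}; expected one of {SPLIT_TYPES}")
    cmd = [
        "python", "scripts/generate_trajectories.py",
        "--save_path", str(save_path),
        "--split_type", split_type,
    ]
    if num_threads is not None:
        # Optional multiprocessing flags described above.
        cmd += ["--num_threads", str(num_threads), "--in_parallel"]
    return cmd


if __name__ == "__main__":
    # Print one command per split; they are meant to be run from $GENERATE_DATA/gen.
    # "/tmp/egotv_data" is a placeholder save path.
    for split in SPLIT_TYPES:
        print(shlex.join(build_generate_command("/tmp/egotv_data", split, num_threads=4)))
```

The sketch only prints the commands, so they can be inspected before anything is executed.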

Here, split_type can be one of the following: ["train", "novel_tasks", "novel_steps", "novel_scenes", "abstraction"]

To generate new layouts (in addition to the pre-generated layouts in alfred/gen/layouts):

$ cd $GENERATE_DATA/gen

$ python layouts/precompute_layout_locations.py

Alternatively, the pre-built layouts can be downloaded from here and saved to the path alfred/gen/layouts/

The pddl task files can be downloaded from here and saved to the path alfred/gen/planner/domains/

- Define the goal conditions in alfred/gen/goal_library.py

- Add the list of goals in alfred/gen/constants.py

- Add the goal_variables in alfred/gen/scripts/generate_trajectories.py

- Run the following commands:

$ cd $GENERATE_DATA/gen

$ python scripts/generate_trajectories.py --save_path <your save path>

To run only the FastForward planner on a generated PDDL problem:

$ cd $GENERATE_DATA/gen

$ ff_planner/ff -o planner/domains/PutTaskExtended_domain.pddl -s 3 -f logs_gen/planner/generated_problems/problem_<num>.pddl

dataset/
├── test_splits
│   ├── abstraction
│   ├── novel scenes
│   ├── novel tasks
│   └── novel steps
└── train
    ├── heat_then_clean_then_slice
    │   └── Apple-None-None-27
    │       └── trial_T20220917_235349_019133
    │           ├── pddl_states
    │           ├── traj_data.json
    │           └── video.mp4
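The layout above can be traversed with a few lines of Python. This is a minimal sketch under stated assumptions: the function names are hypothetical, the nesting train/<task>/<object-config>/<trial> is read off the tree, and the idea that sub-tasks in a task name are joined with `_then_` is inferred from the single example shown.

```python
import os
from pathlib import Path

# Entries expected in every trial directory, per the tree above.
EXPECTED = ("pddl_states", "traj_data.json", "video.mp4")


def list_train_trials(dataset_root):
    """Yield (task, object_config, trial_dir) for each trial under <root>/train."""
    train_dir = Path(dataset_root) / "train"
    # Assumed layout: train/<task>/<object-config>/trial_*/
    for trial_dir in sorted(train_dir.glob("*/*/trial_*")):
        if trial_dir.is_dir():
            yield trial_dir.parts[-3], trial_dir.parts[-2], trial_dir


def missing_files(trial_dir):
    """Return the expected entries that are absent from a trial directory."""
    return [name for name in EXPECTED if not (Path(trial_dir) / name).exists()]


def split_task_name(task):
    """Split a task name such as 'heat_then_clean_then_slice' on '_then_'.

    Assumption: sub-tasks are joined with '_then_'; any other connector is left untouched.
    """
    return task.split("_then_")


if __name__ == "__main__":
    # Falls back to ./dataset when DATA_ROOT is not set.
    root = os.environ.get("DATA_ROOT", "dataset")
    for task, obj, trial in list_train_trials(root):
        print(split_task_name(task), obj, trial.name, missing_files(trial))
```

Running it on a partially generated dataset lists each trial together with any missing entries, which can help spot interrupted generation runs.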

$GENERATE_DATA/gen/scripts/generate_trajectories.py

Note that no two splits (train or test) have overlapping examples.

-

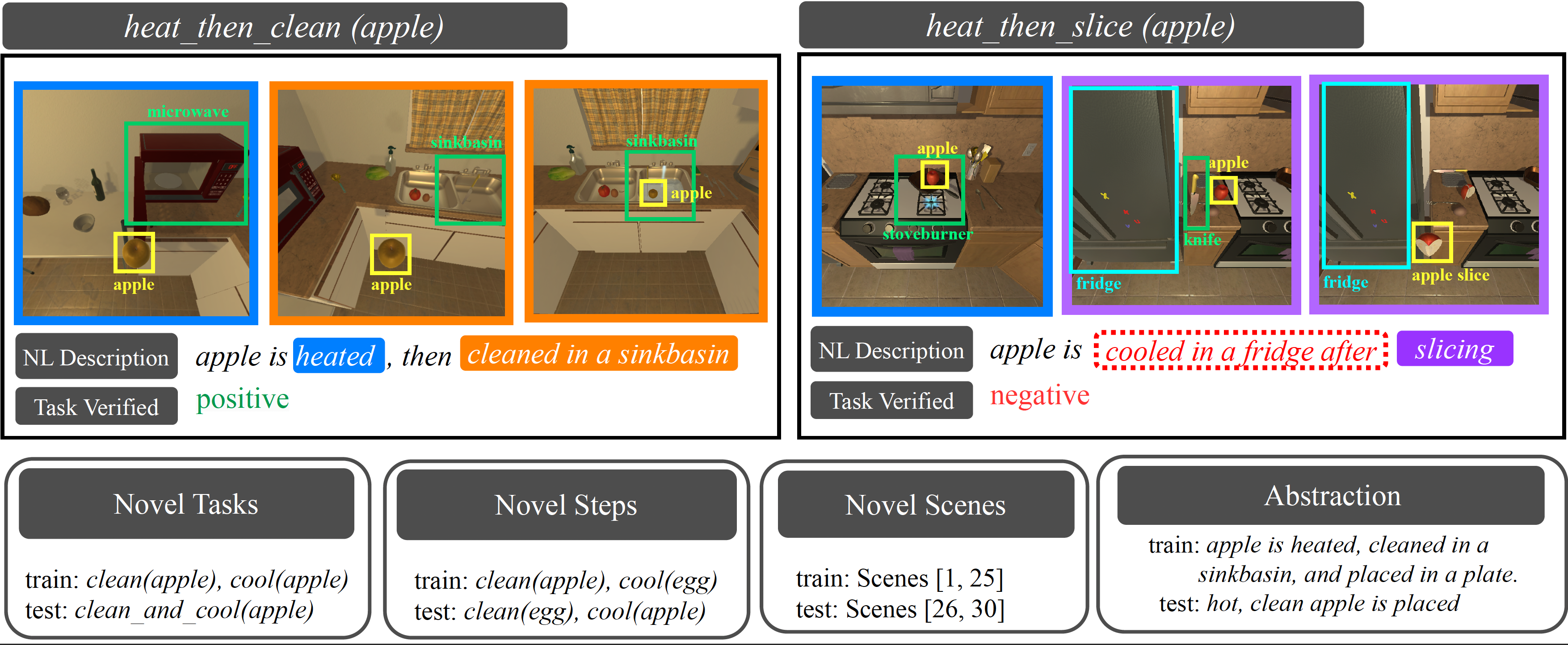

novel tasks:

- all tasks not in train

-

novel steps:

- heat(egg)

- clean(plate)

- slice(lettuce)

- place(in, shelf)

-

abstraction:

- all train tasks with highly abstracted hypothesis ($GENERATE_DATA/gen/goal_library_abstraction.py)

- for the rest of the splits ($GENERATE_DATA/gen/goal_library.py)

- novel scenes:

- all train tasks in scenes in 26-30
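As an illustration of the novel steps split, the held-out steps can be written down as a small lookup. This is only a sketch: the tuple representation, the name `has_novel_step`, and dropping the `in` relation from `place(in, shelf)` are simplifying assumptions, not the format used by the generation code.

```python
# Held-out (sub-task, target) pairs listed under "novel steps".
# Assumption: place(in, shelf) is simplified to ("place", "shelf").
NOVEL_STEPS = {
    ("heat", "egg"),
    ("clean", "plate"),
    ("slice", "lettuce"),
    ("place", "shelf"),
}


def has_novel_step(steps):
    """Return True if any (sub-task, target) pair is one of the held-out steps."""
    return any((action.lower(), target.lower()) in NOVEL_STEPS for action, target in steps)


if __name__ == "__main__":
    print(has_novel_step([("heat", "Egg"), ("place", "Fridge")]))
    print(has_novel_step([("heat", "Apple"), ("slice", "Apple")]))
```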

For details: ablations/data_analysis.ipynb

- Total hours: 168 hours

- Train: 110 hours

- Test: 58 hours

- Average video-length = 84s

- Tasks: 82

- Objects > 130 (with visual variations)

- Pickup objects: 32 (with visual variations)

- Receptacles: 24 (including movable receptacles)

- Sub-tasks: 6 (pick, place, slice, clean, heat, cool)

- Average number of sub-tasks per sample: 4.6

- Scenes: 30 (Kitchens)
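The duration figures above can be cross-checked with a short calculation. The sketch below uses only the numbers quoted in this list. The video count it derives is a back-of-the-envelope estimate from rounded inputs, not a reported statistic, so the exact number of samples in the dataset may differ.

```python
# Figures quoted in the list above.
TRAIN_HOURS = 110
TEST_HOURS = 58
TOTAL_HOURS = 168
AVG_VIDEO_SECONDS = 84

# The train and test durations should add up to the reported total.
assert TRAIN_HOURS + TEST_HOURS == TOTAL_HOURS

train_fraction = TRAIN_HOURS / TOTAL_HOURS

# Rough estimate only: total duration divided by the (rounded) average video length.
approx_videos = TOTAL_HOURS * 3600 / AVG_VIDEO_SECONDS

print(f"train fraction: {train_fraction:.1%}")
print(f"approximate number of videos: {approx_videos:.0f}")
```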

For additional details of the collected dataset trajectory, see: alfred/README.md

All baseline models are located in baselines/all_train

$ export DATA_ROOT=<path to dataset>

$ export BASELINES=$(pwd)/EgoTV/baselines

$ cd $BASELINES

$ bash install_requirements.sh

Alternatively, we provide a Dockerfile for easier setup.

- text encoders: GloVe [11], (Distil)-BERT uncased [10], CLIP [5]

- visual encoders: ResNet18, I3D [4], S3D [7], MViT [6], CLIP [5]

$ ./run_scripts/run_violin_train.sh # for train

$ ./run_scripts/run_violin_test.sh # for test

# if data split not preprocessed, specify "--preprocess" in the run instruction

# for attention-based models, specify "--attention" in the run instruction

# to resume training from a previously stored checkpoint, specify "--resume" in the run instruction

Note: to run the I3D and S3D models, download the pretrained model (rgb_imagenet.pt, S3D_kinetics400.pt) from these repositories respectively:

$ mkdir -p $BASELINES/i3d/models

$ wget -P $BASELINES/i3d/models "https://github.com/piergiaj/pytorch-i3d/raw/master/models/rgb_imagenet.pt" "https://github.com/piergiaj/pytorch-i3d/raw/master/models/rgb_charades.pt"

$ wget -P $BASELINES/s3d "https://drive.google.com/uc?export=download&id=1HJVDBOQpnTMDVUM3SsXLy0HUkf_wryGO"
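A download from a web or file-sharing URL can silently save an HTML page instead of the checkpoint, so a quick sanity check before loading the weights may be worthwhile. The following is a minimal sketch: `looks_like_html` is a hypothetical helper, and the checkpoint locations in the usage part are assumptions based on the directories and file names mentioned above.

```python
import os


def looks_like_html(path, probe_bytes=512):
    """Return True if the file starts like an HTML document rather than binary weights."""
    with open(path, "rb") as f:
        head = f.read(probe_bytes).lstrip().lower()
    return head.startswith((b"<!doctype html", b"<html"))


if __name__ == "__main__":
    base = os.environ.get("BASELINES", ".")
    # Assumed locations; adjust to wherever the files were actually saved.
    candidates = [
        os.path.join(base, "i3d", "models", "rgb_imagenet.pt"),
        os.path.join(base, "s3d", "S3D_kinetics400.pt"),
    ]
    for ckpt in candidates:
        if os.path.exists(ckpt):
            status = "HTML page, download again" if looks_like_html(ckpt) else "looks binary"
            print(ckpt, "->", status)
```

The check only inspects the first bytes of the file, so it is cheap even for large checkpoints. It does not validate that the weights themselves are intact.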

For the clip4clip (CLIP4Clip [14]), coca (CoCa [12]), text2text (Socratic [15]), and videoclip (VideoCLIP [13]) baselines, modify the corresponding scripts and run them from the root:

$ ./run_scripts/run_<baseline>_train.sh

$ ./run_scripts/run_<baseline>_test.sh

- download the CoCa model from OpenCLIP (coca_ViT-B-32 with the mscoco_finetuned_laion2B-s13B-b90k weights)

VideoCLIP has package requirements that conflict with EgoTV, so we set up a separate environment for it.

- create a new conda env, since its packages differ from the EgoTV packages

$ conda create -n videoclip python=3.8.8

$ source activate videoclip

- clone the repo and run the following installations

$ git clone https://github.com/pytorch/fairseq

$ cd fairseq

$ pip install -e . # also optionally follow fairseq README for apex installation for fp16 training.

$ export MKL_THREADING_LAYER=GNU # fairseq may need this for numpy

$ cd examples/MMPT # MMPT can be in any folder, not necessarily under fairseq/examples.

$ pip install -e .

$ pip install transformers==3.4

- download the checkpoint using

$ wget -P runs/retri/videoclip/ "https://dl.fbaipublicfiles.com/MMPT/retri/videoclip/checkpoint_best.pt"

- for more details, see baselines/proScript

$ source activate alfred_env

$ export DATA_ROOT=<path to dataset>

$ export BASELINES=$(pwd)/EgoTV/baselines

$ cd $BASELINES/proScript

# train

$ CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -m torch.distributed.launch --nproc_per_node=8 train_supervised.py --num_workers 4 --batch_size 32 --preprocess --test_split <> --run_id <> --epochs 20

# test

$ CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -m torch.distributed.launch --nproc_per_node=8 test.py --num_workers 4 --batch_size 32 --preprocess --test_split <> --run_id <>

<--output_type 'nl'> for natural language graph output; <--output_type 'dsl'> for domain-specific language graph output (default: dsl)

$ ./run_scripts/run_nsg_train.sh # for nsg train

$ ./run_scripts/run_nsg_test.sh # for nsg test

If you find this codebase helpful for your work, please cite our paper:

@InProceedings{Hazra_2023_ICCV,
    author    = {Hazra, Rishi and Chen, Brian and Rai, Akshara and Kamra, Nitin and Desai, Ruta},
    title     = {EgoTV: Egocentric Task Verification from Natural Language Task Descriptions},
    booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
    month     = {October},
    year      = {2023},
    pages     = {15417-15429}
}

[1] Jingzhou Liu, Wenhu Chen, Yu Cheng, Zhe Gan, Licheng Yu, Yiming Yang, Jingjing Liu "VIOLIN: A Large-Scale Dataset for Video-and-Language Inference" In CVPR 2020

[2] Eric Kolve, Roozbeh Mottaghi, Winson Han, Eli VanderBilt, Luca Weihs, Alvaro Herrasti, Matt Deitke, Kiana Ehsani, Daniel Gordon, Yuke Zhu, Aniruddha Kembhavi, Abhinav Gupta, Ali Farhadi "AI2-THOR: An Interactive 3D Environment for Visual AI"

[3] Mohit Shridhar, Jesse Thomason, Daniel Gordon, Yonatan Bisk, Winson Han, Roozbeh Mottaghi, Luke Zettlemoyer, Dieter Fox "ALFRED: A Benchmark for Interpreting Grounded Instructions for Everyday Tasks" In CVPR 2020

[4] Joao Carreira, Andrew Zisserman "Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset" In CVPR 2017

[5] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever "Learning Transferable Visual Models From Natural Language Supervision" In ICML 2021

[6] Haoqi Fan, Bo Xiong, Karttikeya Mangalam, Yanghao Li, Zhicheng Yan, Jitendra Malik, Christoph Feichtenhofer "Multiscale Vision Transformers" In ICCV 2021

[7] Saining Xie, Chen Sun, Jonathan Huang, Zhuowen Tu, Kevin Murphy "Rethinking Spatiotemporal Feature Learning: Speed-Accuracy Trade-offs in Video Classification" In ECCV 2018

[8] Keisuke Sakaguchi, Chandra Bhagavatula, Ronan Le Bras, Niket Tandon, Peter Clark, Yejin Choi "proScript: Partially Ordered Scripts Generation" In Findings of EMNLP 2021

[9] Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, Peter J. Liu "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer" In JMLR 2020

[10] Victor Sanh, Lysandre Debut, Julien Chaumond, Thomas Wolf "DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter"

[11] Jeffrey Pennington, Richard Socher, Christopher Manning "GloVe: Global Vectors for Word Representation" In EMNLP 2014

[12] Jiahui Yu, et al. "CoCa: Contrastive Captioners are Image-Text Foundation Models" In Transactions on Machine Learning Research 2022

[13] Hu Xu, Gargi Ghosh, Po-Yao Huang, Dmytro Okhonko, Armen Aghajanyan, Florian Metze, Luke Zettlemoyer, Christoph Feichtenhofer "VideoCLIP: Contrastive Pre-training for Zero-shot Video-Text Understanding" In EMNLP 2021

[14] Huaishao Luo, et al. "CLIP4Clip: An Empirical Study of CLIP for End to End Video Clip Retrieval and Captioning" In Neurocomputing 2022

[15] Andy Zeng, et al. "Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language" In ICLR 2023

The majority of EgoTV is licensed under CC-BY-NC; however, portions of the project are available under separate license terms: Howto100M, I3D, and HuggingFace Transformers are licensed under the Apache 2.0 license; S3D and CLIP are licensed under the MIT license; CrossTask and MViT are licensed under the BSD-3 license.