PraNet: Parallel Reverse Attention Network for Polyp Segmentation (MICCAI 2020)

Authors: Deng-Ping Fan, Ge-Peng Ji, Tao Zhou, Geng Chen, Huazhu Fu, Jianbing Shen, and Ling Shao.

1. Preface

- This repository provides code for "PraNet: Parallel Reverse Attention Network for Polyp Segmentation", MICCAI 2020. (arXiv Pre-print)

- If you have any questions about our paper, feel free to contact me. If you use PraNet or the evaluation toolbox in your research, please cite this paper (BibTeX).

1.1. 🔥 NEWS 🔥

- [2020/05/28] 💥 Upload pre-trained weights. (Updated by Ge-Peng Ji)

- [2020/06/24] 💥 Release training/testing code. (Updated by Ge-Peng Ji)

- [2020/03/24] Create repository.

2. Overview

2.1. Introduction

Colonoscopy is an effective technique for detecting colorectal polyps, which are highly related to colorectal cancer. In clinical practice, segmenting polyps from colonoscopy images is of great importance since it provides valuable information for diagnosis and surgery. However, accurate polyp segmentation is a challenging task, for two major reasons: (i) polyps of the same type vary in size, color, and texture; and (ii) the boundary between a polyp and its surrounding mucosa is not sharp.

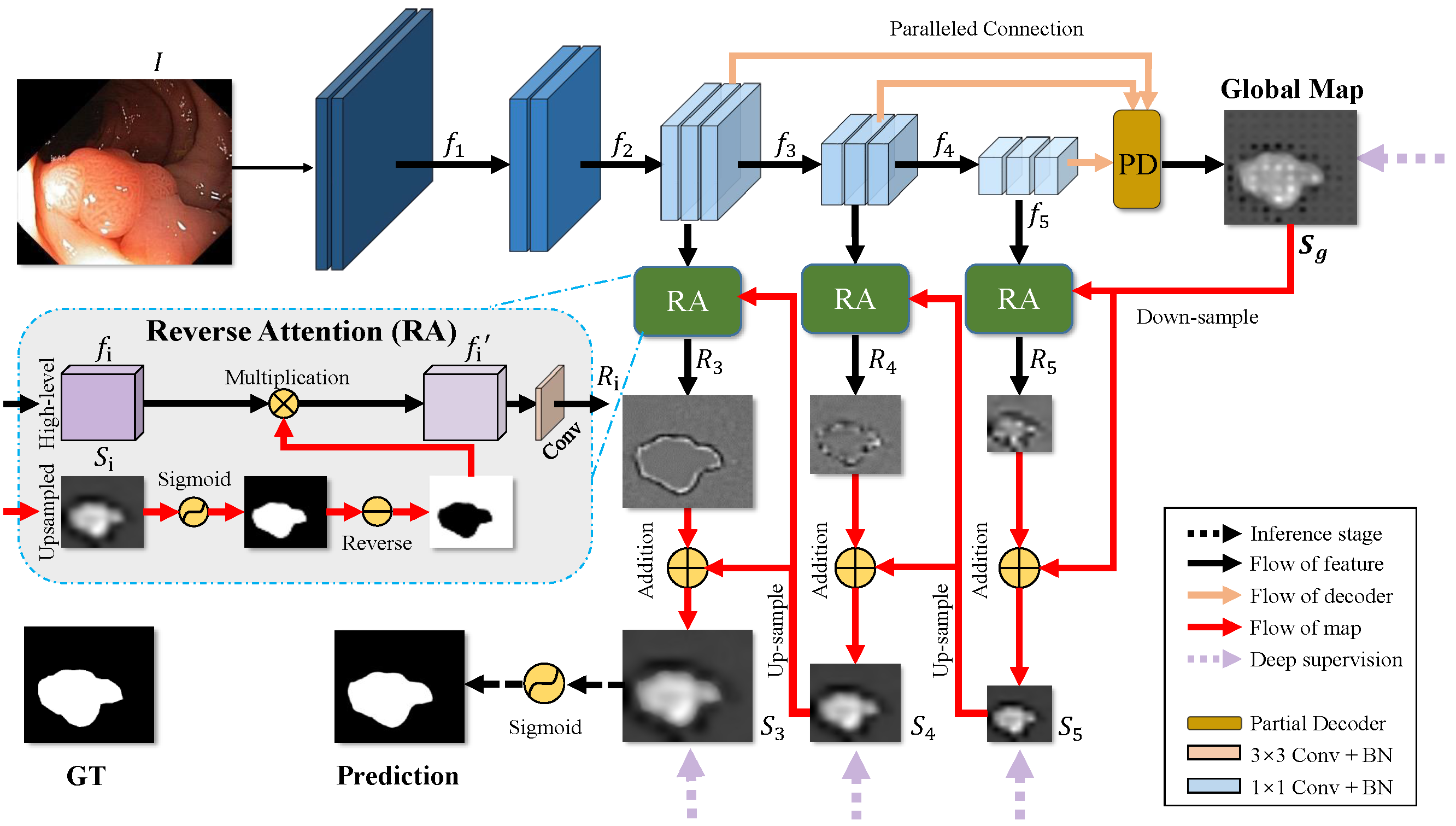

To address these challenges, we propose a parallel reverse attention network (PraNet) for accurate polyp segmentation in colonoscopy images. Specifically, we first aggregate the features in high-level layers using a parallel partial decoder (PPD). Based on the combined feature, we then generate a global map as the initial guidance area for the following components. In addition, we mine the boundary cues using a reverse attention (RA) module, which is able to establish the relationship between areas and boundary cues. Thanks to the recurrent cooperation mechanism between areas and boundaries, our PraNet is capable of calibrating any misaligned predictions, improving the segmentation accuracy.
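The reverse attention idea described above can be illustrated with a minimal, dependency-free sketch. It assumes the common "erasing" formulation, in which the attention weight is one minus the sigmoid of a coarse prediction, so confidently predicted regions are suppressed and the remaining response concentrates near uncertain areas such as boundaries. The function names are hypothetical, and the sketch handles a single-channel 2-D map without upsampling or convolutions; it is not the repository's implementation.

```python
import math


def sigmoid(x):
    """Logistic function mapping a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def reverse_attention_weights(coarse_logits):
    """Turn a 2-D grid of coarse prediction logits into weights 1 - sigmoid(logit).

    Assumption: this is the usual 'erasing' form of reverse attention.
    Confident foreground (large logit) gets a weight near 0.
    """
    return [[1.0 - sigmoid(v) for v in row] for row in coarse_logits]


def apply_reverse_attention(feature, coarse_logits):
    """Element-wise multiply a single-channel feature map by the weights."""
    weights = reverse_attention_weights(coarse_logits)
    return [
        [f * w for f, w in zip(f_row, w_row)]
        for f_row, w_row in zip(feature, weights)
    ]
```

In this sketch, a location the coarse map already marks as polyp with high confidence contributes almost nothing to the attended feature, while uncertain locations (logit near 0) keep about half of their response.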

Quantitative and qualitative evaluations on five challenging datasets across six metrics show that PraNet improves segmentation accuracy significantly and offers advantages in generalizability and real-time segmentation efficiency (∼50 fps).

2.2. Framework Overview

Figure 1: Overview of the proposed PraNet, which consists of three reverse attention modules with a parallel partial decoder connection. See § 2 in the paper for details.

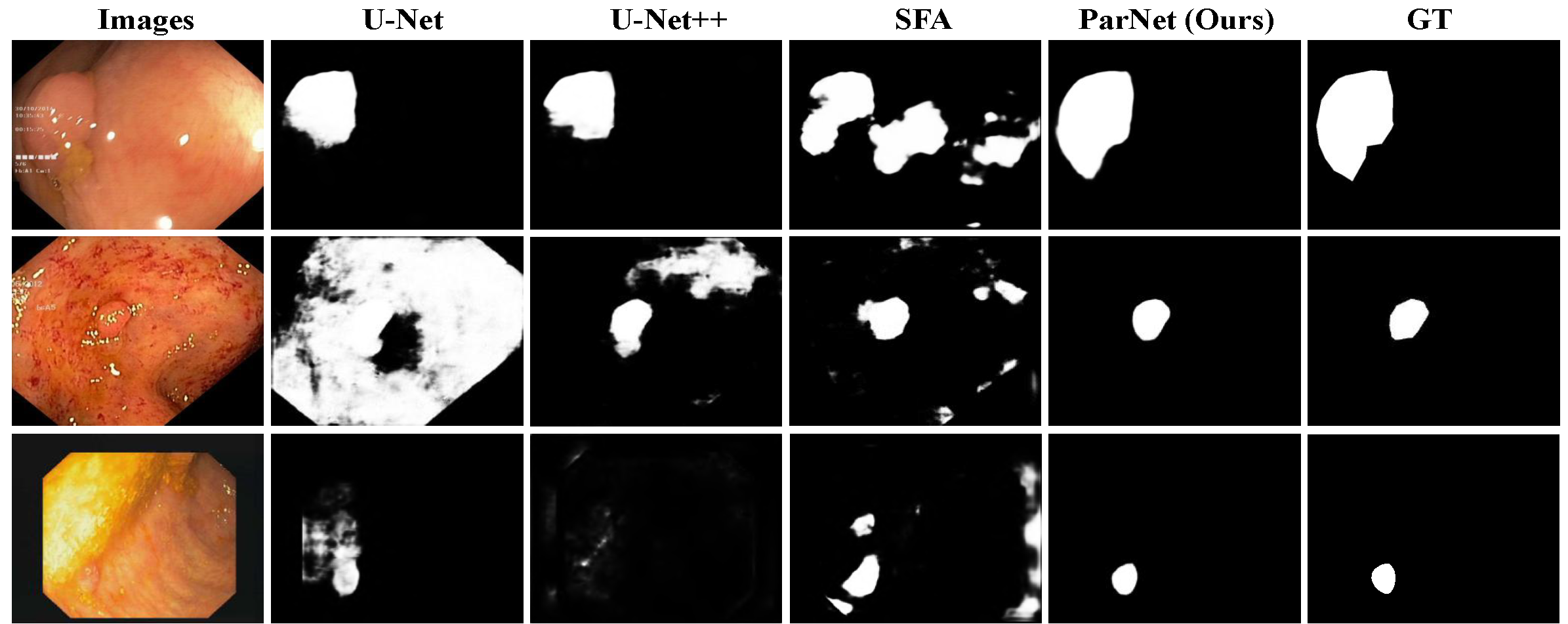

2.3. Qualitative Results

Figure 2: Qualitative Results.

3. Proposed Baseline

3.1. Training/Testing

The training and testing experiments are conducted using PyTorch with a single GeForce RTX TITAN GPU with 24 GB of memory.

Note that our model also runs on GPUs with less memory; in that case, you can lower the batch size.

- Configuring your environment (Prerequisites):

  Note that PraNet has only been tested on Ubuntu with the following environment. It may work on other operating systems as well, but we do not guarantee that it will.

  - Creating a virtual environment in terminal: conda create -n SINet python=3.6

  - Installing necessary packages: PyTorch 1.1

- Downloading necessary data:

  - Download the testing dataset and move it into ./data/TestDataset/. It can be found in this download link (Google Drive).

  - Download the training dataset and move it into ./data/TrainDataset/. It can be found in this download link (Google Drive).

  - Download the pretrained weights and move them to snapshots/PraNet_Res2Net/PraNet-19.pth. They can be found in this download link (Google Drive).

  - Download the Res2Net weights from this download link (Google Drive).
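Before training or testing, it can help to confirm that the downloads ended up where the steps above expect them. The following is a small sketch that only checks the three locations named in this README; the helper name `find_missing` is hypothetical, and the Res2Net weights are not checked because their destination is not stated here.

```python
from pathlib import Path

# Locations named in the download steps, relative to the repository root.
EXPECTED_PATHS = (
    "data/TestDataset",
    "data/TrainDataset",
    "snapshots/PraNet_Res2Net/PraNet-19.pth",
)


def find_missing(root=".", expected=EXPECTED_PATHS):
    """Return the expected paths that do not exist under root (hypothetical helper)."""
    root = Path(root)
    return [p for p in expected if not (root / p).exists()]
```

Calling `find_missing()` from the repository root returns an empty list once everything is in place; otherwise it lists the paths that still need to be downloaded or moved.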

- Training Configuration:

  - Assign your customized paths, such as --train_save and --train_path, in MyTrain.py.

  - Just enjoy it!
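For readers unfamiliar with how such flags are typically declared, here is a minimal argparse sketch. Only the flag names --train_path and --train_save come from this README; the default values, the help texts, and the function name `build_parser` are assumptions for illustration and may differ from what MyTrain.py actually defines.

```python
import argparse


def build_parser():
    """Build a parser with the two path flags mentioned in the README."""
    parser = argparse.ArgumentParser(description="Illustrative training options")
    # Defaults and help texts are placeholders, not the repository's values.
    parser.add_argument("--train_path", type=str, default="./data/TrainDataset",
                        help="location of the training dataset (assumed meaning)")
    parser.add_argument("--train_save", type=str, default="PraNet_Res2Net",
                        help="where checkpoints are saved (assumed meaning)")
    return parser
```

With flags declared this way, the paths can be overridden on the command line, for example `python MyTrain.py --train_path ./data/TrainDataset --train_save my_run`, instead of editing the script.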

- Testing Configuration:

  - After downloading the pre-trained model and the testing dataset, run MyTest.py to generate the final prediction maps. To use your own trained model, point --pth_path to it.

  - Just enjoy it!
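As a convenience, the test run can be wrapped in a short launcher that fails early when the weights file is missing. This is only a sketch: the script name MyTest.py and the --pth_path flag come from this README, while the helper names and the assumption that the script is launched from the repository root are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def build_test_command(pth_path, script="MyTest.py"):
    """Return the argument list for running the test script with the given weights."""
    return [sys.executable, script, "--pth_path", str(pth_path)]


def run_test(pth_path):
    """Check that the weights exist, then launch the test script (hypothetical helper)."""
    if not Path(pth_path).is_file():
        raise FileNotFoundError(f"weights not found: {pth_path}")
    subprocess.run(build_test_command(pth_path), check=True)
```

For example, `run_test("snapshots/PraNet_Res2Net/PraNet-19.pth")` would run the script with the pre-trained weights, assuming they were placed at the path given in the download step.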

3.2. Evaluating your trained model

One-key evaluation is written in MATLAB (revised from link). Please follow the instructions in ./eval/main.m and run it to generate the evaluation results.

Pre-computed maps can be found at the download link.
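For a quick sanity check without MATLAB, overlap measures such as Dice and IoU can be computed directly from a prediction map and a ground-truth mask. The sketch below is not the evaluation toolbox's code and does not reproduce its metric set; the function names and the 0.5 threshold are assumptions, and the maps are plain nested lists to keep the example dependency-free.

```python
def binarize(prob_map, threshold=0.5):
    """Convert a 2-D probability map into a 0/1 mask (threshold is an assumption)."""
    return [[1 if v >= threshold else 0 for v in row] for row in prob_map]


def dice_and_iou(pred_mask, gt_mask, eps=1e-8):
    """Return (Dice, IoU) for two binary masks of the same shape."""
    inter = sum(
        p & g
        for p_row, g_row in zip(pred_mask, gt_mask)
        for p, g in zip(p_row, g_row)
    )
    pred_sum = sum(sum(row) for row in pred_mask)
    gt_sum = sum(sum(row) for row in gt_mask)
    union = pred_sum + gt_sum - inter
    # eps keeps both ratios defined (and equal to 1) when both masks are empty.
    dice = (2 * inter + eps) / (pred_sum + gt_sum + eps)
    iou = (inter + eps) / (union + eps)
    return dice, iou
```

Numbers obtained this way are only a rough check; reported results should come from the MATLAB script so that they remain comparable with other work.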

4. Citation

Please cite our paper if you find the work useful:

@article{fan2020pra,
  title={PraNet: Parallel Reverse Attention Network for Polyp Segmentation},
  author={Fan, Deng-Ping and Ji, Ge-Peng and Zhou, Tao and Chen, Geng and Fu, Huazhu and Shen, Jianbing and Shao, Ling},
  journal={MICCAI},
  year={2020}
}

5. TODO LIST

If you would like to help improve usability or have any advice, please feel free to contact me directly (E-mail).

- Support NVIDIA APEX training.

- Support different backbones (VGGNet, ResNet, ResNeXt, iResNet, ResNeSt, etc.).

- Support distributed training.

- Support lightweight architectures and real-time inference, such as MobileNet and SqueezeNet.

- Add more comprehensive competitors.

6. FAQ

- If the image cannot be loaded in the page (mostly in the domestic network situations).