Released code for the paper "Visual Paraphrase Generation with Key Information Retained" (TOMM 2023).

- Install the Anaconda or Miniconda distribution (Python 3+) from its downloads site.

- Install the Python packages the code needs.

All the training, validation, and test data are in the data_sentences folder.

- All this data is preprocessed from the MSCOCO caption dataset.

- For more details about the preprocessing, see the repository
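Before training, it can help to confirm that the data_sentences folder is in place. The following is a minimal sketch, not part of the released code: the helper name `summarize_data_dir` is hypothetical, and it assumes nothing about the file format, only that the folder contains regular files.

```python
from pathlib import Path


def summarize_data_dir(data_dir="data_sentences"):
    """Return {relative file path: size in bytes} for every file under data_dir.

    Hypothetical helper for a quick sanity check; the folder name comes from
    this README, everything else here is an assumption.
    """
    root = Path(data_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"Data folder not found: {root}")
    # Walk the folder recursively and keep regular files only.
    return {
        p.relative_to(root).as_posix(): p.stat().st_size
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }
```

Calling `summarize_data_dir()` from the repository root returns one entry per data file; an empty result or a `FileNotFoundError` suggests the data is missing or the working directory is wrong.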

You can download the VisualBERT model from link

python train.py@article{xie2023visual,

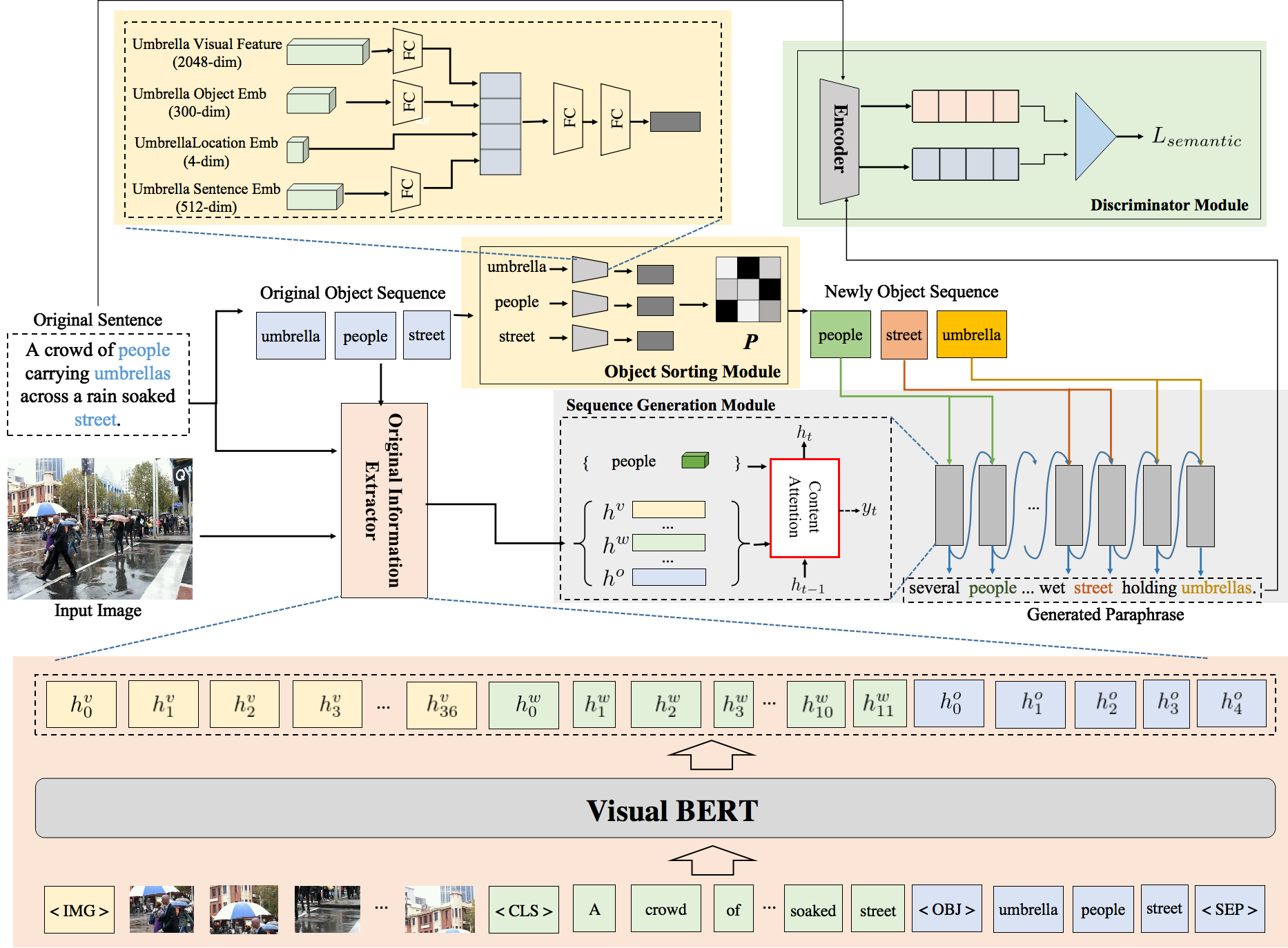

title={Visual paraphrase generation with key information retained},

author={Xie, Jiayuan and Chen, Jiali and Cai, Yi and Huang, Qingbao and Li, Qing},

journal={ACM Transactions on Multimedia Computing, Communications and Applications},

volume={19},

number={6},

pages={1--19},

year={2023},

publisher={ACM New York, NY}

}