Official code repository 📑 for the EMNLP 2022 Findings paper "SMiLE: Schema-augmented Multi-level Contrastive Learning for Knowledge Graph Link Prediction".

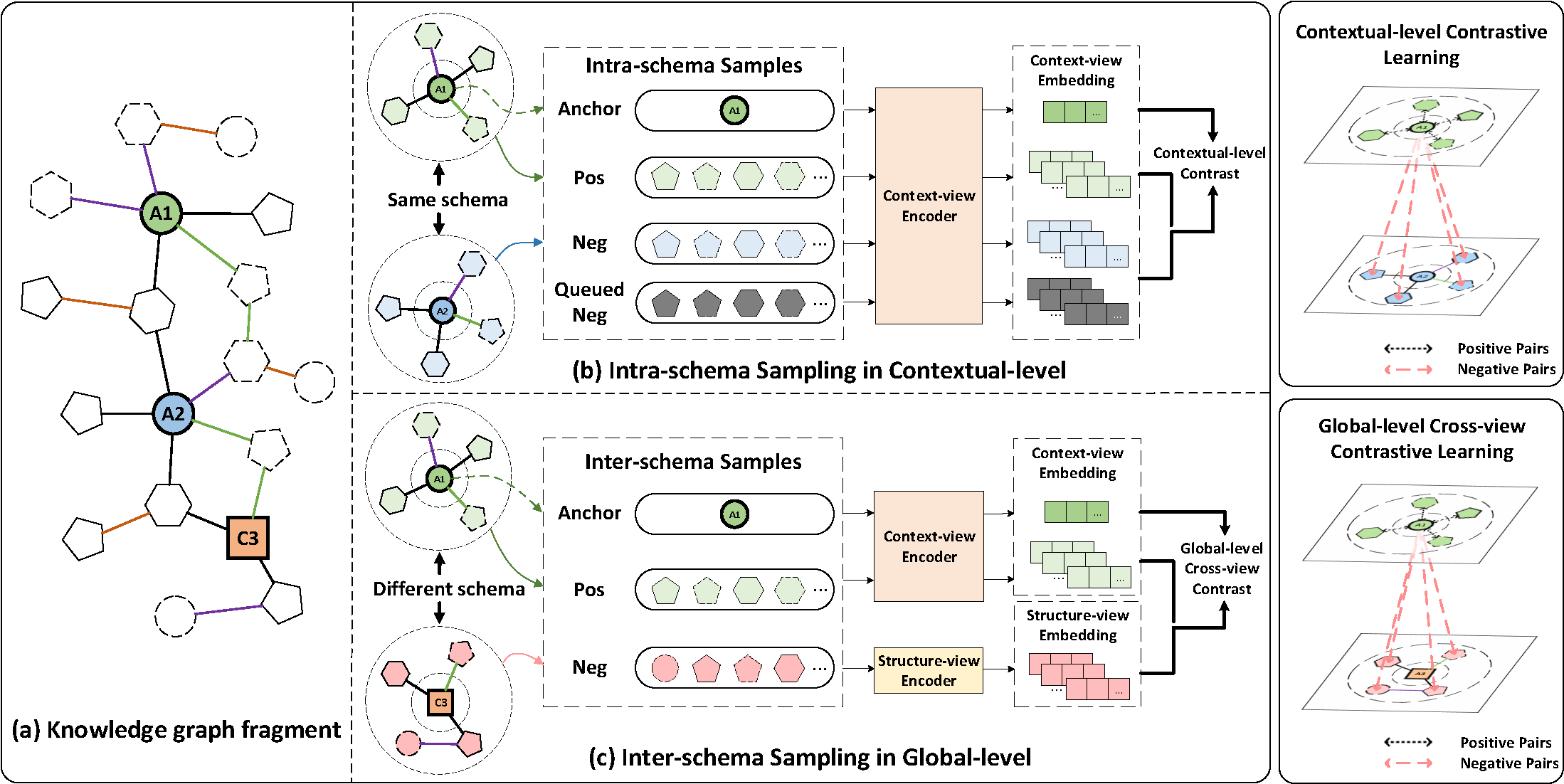

In this paper, we observe that most existing embedding-based methods fail to capture contextual information in entity neighborhoods, and that little attention has been paid to the diversity of entity representations across different contexts. We argue that the KG schema helps preserve the consistency of entities across contexts, and we propose a novel schema-augmented multi-level contrastive learning framework (SMiLE😊) for knowledge graph link prediction.

Environment settings: Linux Ubuntu 18.04.6 LTS, CUDA 10.2, NVIDIA TITAN RTX (24 GB).

Run `pip install -r requirements.txt` to install the following dependencies:

- Python 3.6.5

- numpy 1.19.5

- pandas 1.1.0

- networkx 2.4

- scikit_learn 1.1.2

- torch 1.5.0

We use four public KG datasets: FB15k, FB15k-237, JF17k and HumanWiki.

The FB15k, JF17k and HumanWiki data are taken from RETA, and the FB15k-237 data is taken from CompGCN.

The datasets used in this repository are provided in the directory `$REPO_DIR/data`; you can use them directly or download the corresponding datasets from the RETA and CompGCN sources mentioned above.

Download the pretrained node embeddings from here and put them into the corresponding dataset directory.

PS: For a new dataset, you can generate the pretrained embeddings with Node2vec or CompGCN.
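The layout of the `.emd` embedding files (e.g. `data/FB15k/FB15k.emd`, passed via `--pretrained_embeddings`) is not documented here. A minimal reader, assuming the word2vec text format that the reference Node2vec implementation writes (a header line `<num_nodes> <dim>`, then one line per node: the node ID followed by its vector), might look like this. `parse_emd` and `load_emd` are hypothetical helpers, not part of this repository.

```python
from typing import Dict, Iterable, List


def parse_emd(lines: Iterable[str]) -> Dict[str, List[float]]:
    """Parse node embeddings in word2vec text format (assumed layout):

        <num_nodes> <dim>
        <node_id> <v_1> ... <v_dim>
    """
    it = iter(lines)
    # Header: number of nodes and embedding dimension.
    num_nodes, dim = (int(x) for x in next(it).split()[:2])
    embeddings: Dict[str, List[float]] = {}
    for line in it:
        parts = line.split()
        if not parts:
            continue  # tolerate blank lines
        node_id, values = parts[0], [float(x) for x in parts[1:]]
        if len(values) != dim:
            raise ValueError(
                "node {}: expected {} values, got {}".format(node_id, dim, len(values)))
        embeddings[node_id] = values
    if len(embeddings) != num_nodes:
        raise ValueError(
            "header declares {} nodes, found {}".format(num_nodes, len(embeddings)))
    return embeddings


def load_emd(path: str) -> Dict[str, List[float]]:
    """Read an .emd file from disk (hypothetical helper, not part of SMiLE)."""
    with open(path, "r", encoding="utf-8") as f:
        return parse_emd(f)
```

Node IDs are kept as strings because this sketch does not assume whether they are integer indices or entity names; a call such as `load_emd("data/FB15k/FB15k.emd")` returns a dict from node ID to vector.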

Before training on a dataset, you must first generate its schema graph.

Run `$REPO_DIR/src/build_schema.py` with the following command:

    # Build the schema graph for $dataset with a frequency threshold of $topNfilters
    python build_schema.py --data_path "/data/$dataset" --topNfilters $topNfilters

The generated schema file `schema_ttv-$topNfilters.txt` will be added to the corresponding dataset directory.
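This README does not spell out how `build_schema.py` derives the schema graph or how `--topNfilters` is interpreted (the FB15k example passes a negative value, `-700`). As a rough illustration of the general idea only, assuming every entity carries a type label, a schema graph can be built by lifting each `(head, relation, tail)` triple to a type-level triple and keeping the type-level triples that occur at least `min_count` times. `build_schema_graph` and `min_count` are hypothetical and do not reproduce the repository's actual filtering rule.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Triple = Tuple[str, str, str]


def build_schema_graph(
    triples: Iterable[Triple],
    entity_type: Dict[str, str],
    min_count: int = 1,
) -> List[Tuple[Triple, int]]:
    """Lift entity-level triples to (head_type, relation, tail_type) and keep
    those seen at least `min_count` times, most frequent first."""
    counts = Counter()
    for head, rel, tail in triples:
        # Entities without a known type are skipped in this sketch.
        if head in entity_type and tail in entity_type:
            counts[(entity_type[head], rel, entity_type[tail])] += 1
    return [(t, c) for t, c in counts.most_common() if c >= min_count]
```

For example, with two `person --born_in--> city` facts and `min_count=2`, only `("person", "born_in", "city")` survives, while type-level triples seen once are filtered out.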

The following command shows the key parameters for running SMiLE on a dataset, using FB15k as an example:

    # Taking dataset FB15k as an example
    python main.py \
        --gpu_id 0 \
        --data_name 'FB15k' \
        --data_path 'data' \
        --outdir 'output/FB15k-1' \
        --pretrained_embeddings 'data/FB15k/FB15k.emd' \
        --n_epochs 15 \
        --batch_size 1024 \
        --checkpoint 4 \
        --schema_weight 1 \
        --n_layers 4 \
        --n_heads 4 \
        --gcn_option 'no_gcn' \
        --node_edge_composition_func 'mult' \
        --ft_input_option 'last4_cat' \
        --path_option 'shortest' \
        --ft_n_epochs 15 \
        --num_walks_per_node 1 \
        --max_length 6 \
        --beam_width 6 \
        --walk_type 'bfs' \
        --topNfilters -700 \
        --is_pre_trained \
        --use_schema

To reproduce the results of SMiLE on FB15k, FB15k-237, JF17k and HumanWiki, follow these steps:

- Choose a dataset `$dataset`.

- Copy the parameters and commands in `$REPO_DIR/config/$dataset/run_$dataset.sh` and paste them into `$REPO_DIR/run.sh`.

- Execute `sh run.sh`.
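Because a stray blank line, or a missing space before a trailing `\`, silently breaks a multi-line shell command, the copy-and-paste step above can alternatively be scripted. A minimal sketch: `render_command` and `write_run_script` are hypothetical helpers, not part of the repository. Flags mapped to `True` are emitted as bare switches (like `--is_pre_trained` and `--use_schema`), flags mapped to `False` are omitted, and every value is quoted with `shlex.quote`.

```python
import shlex
from typing import Dict, Union

Value = Union[str, int, float, bool]


def render_command(script: str, params: Dict[str, Value]) -> str:
    """Render `python <script> --flag value ...` with one flag per line."""
    lines = ["python " + shlex.quote(script)]
    for flag, value in params.items():
        if value is True:
            lines.append("--" + flag)  # bare switch, e.g. --use_schema
        elif value is False:
            continue  # switch turned off: omit it entirely
        else:
            lines.append("--{} {}".format(flag, shlex.quote(str(value))))
    # Every line except the last ends with " \" so the shell joins them.
    return " \\\n    ".join(lines) + "\n"


def write_run_script(path: str, script: str, params: Dict[str, Value]) -> None:
    """Write the rendered command to `path` (e.g. run.sh)."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(render_command(script, params))
```

For example, `write_run_script("run.sh", "main.py", {"gpu_id": 0, "data_name": "FB15k", "is_pre_trained": True})` writes a command that `sh run.sh` can execute. Keeping parameters in a dict also makes it easy to diff the settings used for different datasets.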

The trained model checkpoints and output files are saved in the directory `$REPO_DIR/output`.
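Several flags in the FB15k command, such as `--node_edge_composition_func 'mult'` and `--ft_input_option 'last4_cat'`, are not explained in this README and are likely inherited from SLiCE. Our reading, which is an assumption rather than documented behavior: `mult` combines a node embedding with a relation (edge) embedding by element-wise multiplication, as in CompGCN's `mult` composition, and `last4_cat` builds the fine-tuning input by concatenating a token's hidden states from the last four transformer layers. A pure-Python sketch of both operations, with hypothetical function names:

```python
from typing import List, Sequence

Vector = List[float]


def compose_mult(node_emb: Sequence[float], rel_emb: Sequence[float]) -> Vector:
    """Element-wise product of a node and a relation embedding (assumed 'mult')."""
    if len(node_emb) != len(rel_emb):
        raise ValueError("embeddings must have the same dimension")
    return [n * r for n, r in zip(node_emb, rel_emb)]


def last4_cat(layer_outputs: Sequence[Sequence[float]]) -> Vector:
    """Concatenate one token's hidden states from the last four layers
    (assumed 'last4_cat'); layer_outputs[i] is that token's output of layer i."""
    if len(layer_outputs) < 4:
        raise ValueError("need at least four layers")
    out: Vector = []
    for hidden in layer_outputs[-4:]:
        out.extend(hidden)
    return out
```

Under this reading, with `--n_layers 4` the `last4_cat` option would use every layer and yield a vector four times the hidden size.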

Parts of the code are adapted from SLiCE and RETA. Many thanks to their authors!