This project (based on mmdetection, mmclassification, and DeepSort) is the re-implementation of our paper.

A self-adaptive, target-region-based product detection system is proposed to address the challenges of automated retail checkout. The system has been validated in the Multi-Class Product Counting & Recognition for Automated Retail Checkout competition (2023 AI CITY Challenge Task 4), where it achieves an F1 score of 0.9787 on Task 4 testA, ranking second in the 2023 AI CITY Challenge Task 4. The main contributions of this work are as follows:

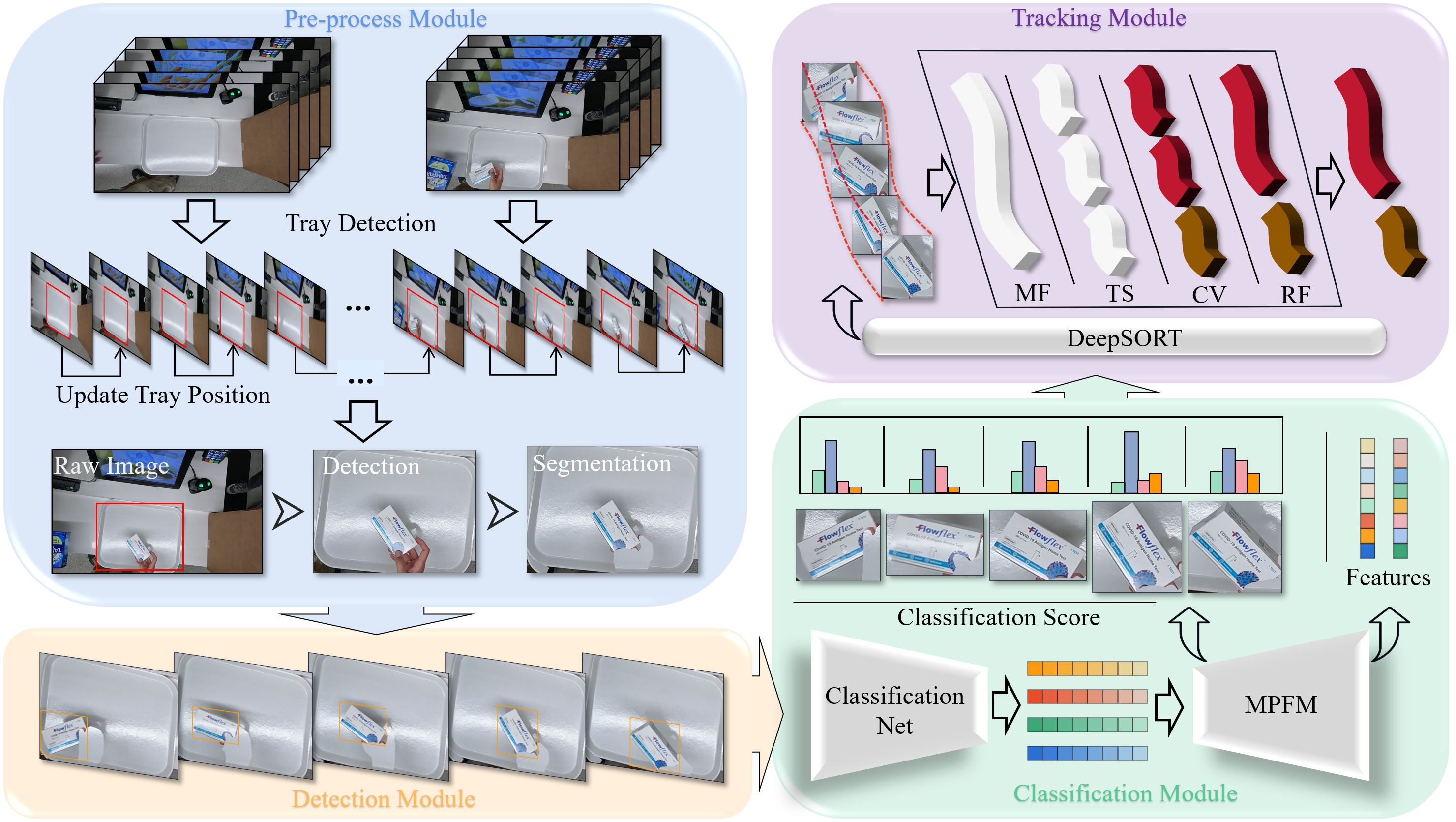

- An Adaptive Target Area Commodity Detection System is designed and verified in the 2023 AI CITY Challenge Task 4, where it achieves high accuracy in commodity detection.

- A novel data augmentation approach and targeted data synthesis methods are developed to create training data that is more representative of real-world scenarios.

- A Dual-detector Fusion Algorithm is designed for the product detection problem to produce the final detection boxes.

- A cost-effective Adaptive Target Region Algorithm is introduced to mitigate the impact of camera movement on object detection and tracking in the test videos.

- MPFM is designed to effectively fuse the feature representations from multiple classifiers and provide reliable product feature representations for tracking, leading to more stable and accurate classification and tracking results.
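The Dual-detector Fusion Algorithm is not specified in this README. As a purely illustrative stand-in (not the authors' algorithm), the sketch below shows one generic way to merge the outputs of two detectors: greedily match same-label boxes by IoU, then average matched coordinates weighted by confidence, in the spirit of weighted box fusion. The `(x1, y1, x2, y2, score, label)` tuple layout, the function names, and the 0.55 IoU threshold are all assumptions.

```python
from typing import List, Tuple

# Assumed detection layout: (x1, y1, x2, y2, score, label).
Box = Tuple[float, float, float, float, float, int]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2, ...)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse_two_detectors(dets_a: List[Box], dets_b: List[Box],
                       iou_thr: float = 0.55) -> List[Box]:
    """Generic score-weighted fusion of two detectors' boxes (illustrative only)."""
    fused: List[Box] = []
    used_b = set()
    # Visit detector A's boxes from most to least confident.
    for a in sorted(dets_a, key=lambda d: d[4], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, b in enumerate(dets_b):
            if j in used_b or b[5] != a[5]:
                continue  # already matched, or a different class
            overlap = iou(a, b)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j < 0:
            fused.append(a)  # no partner in B: keep A's box unchanged
            continue
        b = dets_b[best_j]
        used_b.add(best_j)
        # Weight coordinates by confidence; use a plain mean if both scores are 0.
        wa, wb = (a[4], b[4]) if a[4] + b[4] > 0 else (1.0, 1.0)
        x1, y1, x2, y2 = ((wa * ca + wb * cb) / (wa + wb) for ca, cb in zip(a[:4], b[:4]))
        fused.append((x1, y1, x2, y2, (a[4] + b[4]) / 2, a[5]))
    # Boxes that only detector B found are kept as they are.
    fused.extend(b for j, b in enumerate(dets_b) if j not in used_b)
    return fused
```

Averaging the scores of matched pairs and passing unmatched boxes through unchanged is only one possible design; a fusion step could instead down-weight boxes that a single detector reports.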

- Download images and annotations for detector training from GoogleDrive-det.

- Download images and annotations with masks for detector training from GoogleDrive-det-Cascade_mask_rcnn.

- Download images for classifier training from GoogleDrive-cls.

- Download images for fine-tuning from GoogleDrive-cls-fine-tune.

```
data
├── coco
│   ├── annotations
│   ├── train2017
│   └── val2017
└── cls
    ├── meta
    ├── train
    └── val
```
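Before training, it can help to confirm that the downloaded data matches this layout. The following is a minimal, hypothetical helper (not part of the repository); the directory names come from the tree above, and the default root `data` assumes the script is run from the repository root.

```python
from pathlib import Path
from typing import List

# Sub-directories taken from the data/ tree above (relative to the data root).
EXPECTED_DIRS = [
    "coco/annotations",
    "coco/train2017",
    "coco/val2017",
    "cls/meta",
    "cls/train",
    "cls/val",
]


def missing_data_dirs(root: str = "data") -> List[str]:
    """Return the expected sub-directories that do not exist under `root`."""
    base = Path(root)
    return [d for d in EXPECTED_DIRS if not (base / d).is_dir()]


if __name__ == "__main__":
    missing = missing_data_dirs()
    if missing:
        print("Missing directories:", ", ".join(missing))
    else:
        print("Data layout looks complete.")
```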

Please place the videos you want to test in the test_videos folder.

```
test_videos/
├── testA_1.mp4
├── testA_2.mp4
├── testA_3.mp4
├── testA_4.mp4
└── video_id.txt
```

- python 3.7.12

- pytorch 1.10.0

- torchvision 0.11.2

- cuda 10.2

- mmcv-full 1.4.3

- tensorflow-gpu 1.15.0
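To compare an installed environment against the versions listed above, a small script like the following may help. It is a hypothetical helper, not part of the repository. It compares only major.minor, so local build tags such as `+cu113` and patch-level differences are not reported, and it falls back to `pkg_resources` because `importlib.metadata` is only available from Python 3.8 onward.

```python
from typing import Dict, Optional

# Versions copied from the list above (PyTorch's distribution name is "torch").
EXPECTED = {
    "torch": "1.10.0",
    "torchvision": "0.11.2",
    "mmcv-full": "1.4.3",
    "tensorflow-gpu": "1.15.0",
}


def installed_version(dist: str) -> Optional[str]:
    """Return the installed version of distribution `dist`, or None if absent."""
    try:
        from importlib.metadata import version, PackageNotFoundError  # Python >= 3.8
    except ImportError:  # Python 3.7 has no importlib.metadata
        import pkg_resources
        try:
            return pkg_resources.get_distribution(dist).version
        except pkg_resources.DistributionNotFound:
            return None
    try:
        return version(dist)
    except PackageNotFoundError:
        return None


def same_minor(want: str, got: Optional[str]) -> bool:
    """True if `got` has the same major.minor as `want` (e.g. '+cu113' is ignored)."""
    if got is None:
        return False
    return got.split("+")[0].split(".")[:2] == want.split(".")[:2]


def version_mismatches(expected: Dict[str, str],
                       found: Dict[str, Optional[str]]) -> Dict[str, Optional[str]]:
    """Map each package whose major.minor differs (or is missing) to what was found."""
    return {name: found.get(name) for name, want in expected.items()
            if not same_minor(want, found.get(name))}


if __name__ == "__main__":
    found = {name: installed_version(name) for name in EXPECTED}
    print(version_mismatches(EXPECTED, found) or "All listed packages match (major.minor).")
```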

1. `git clone https://github.com/mzl163/AICITY23_Task4.git`

2. `conda create -n AdaptCD python=3.7`

3. `conda activate AdaptCD`

4. `pip install torch==1.10.1+cu113 torchvision==0.11.2+cu113 torchaudio==0.10.1 -f https://download.pytorch.org/whl/cu113/torch_stable.html`

5. `pip install mmcv-full==1.4.3 -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.10.0/index.html`

6. `cd ./AICITY23_Task4`

7. `pip install -r requirements.txt`

8. `sh ./tools1/setup.sh`

Prepare the training and test data as described in the data instructions above.

- Step 1: training.

```bash
# 1. Train the detector

# a. Single GPU
python ./mmdetection/tools/train.py ./mmdetection/configs/detectors/detectors_cascade_rcnn_r50_1x_coco.py

# b. Multiple GPUs (e.g. 2 GPUs)
bash ./mmdetection/tools/dist_train.sh ./mmdetection/configs/detectors/detectors_cascade_rcnn_r50_1x_coco.py 2

# 2. Train the classifier

# a. Single GPU
bash ./mmclassification/tools/train_single.sh

# b. Multiple GPUs (e.g. 2 GPUs)
bash ./mmclassification/tools/train_multi.sh 2

# 3. Train the MLP (after placing all classification models in new_checkpoints, as for testing)

# a. Single GPU
bash ./tools1/train_mlp.sh
```

- Step 2: testing.

Please place all models in the new_checkpoints folder. The models can be downloaded from: b2.pth, best_DTC_single_GPU.pth, cascade_mask_rcnn.pth, detectors_cascade_rcnn.pth, detectors_htc.pth, feature.pth, s50.pth, s101.pth.

```
new_checkpoints/
├── b2.pth
├── best_DTC_single_GPU.pth
├── cascade_mask_rcnn.pth
├── detectors_cascade_rcnn.pth
├── detectors_htc.pth
├── feature.pth
├── s50.pth
└── s101.pth
```
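Because testing needs all eight checkpoints, a missing file is an easy mistake to catch early. A minimal, hypothetical check (not part of the repository), with file names copied from the tree above and the folder path assumed relative to the repository root:

```python
from pathlib import Path
from typing import List

# File names taken from the new_checkpoints/ tree above.
REQUIRED_CHECKPOINTS = [
    "b2.pth",
    "best_DTC_single_GPU.pth",
    "cascade_mask_rcnn.pth",
    "detectors_cascade_rcnn.pth",
    "detectors_htc.pth",
    "feature.pth",
    "s50.pth",
    "s101.pth",
]


def missing_checkpoints(folder: str = "new_checkpoints") -> List[str]:
    """Return the required checkpoint files that are not present in `folder`."""
    base = Path(folder)
    return [name for name in REQUIRED_CHECKPOINTS if not (base / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    print("Missing:", ", ".join(missing) if missing else "none")
```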

Command line

```bash
# 1. Use the FFmpeg library to extract/count frames.
python tools1/extract_frames.py --out_folder ./frames
```
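For readers who want to inspect frames outside the provided script, the sketch below builds a plain `ffmpeg` command that writes every frame of a video as numbered JPEGs. It is an assumption-laden illustration, not what `tools1/extract_frames.py` does internally: the `ffmpeg_extract_cmd` name and the `<out_root>/<video stem>/000001.jpg` layout are hypothetical, and the provided script remains the supported way to produce the `./frames` input for AdaptCD.

```python
import subprocess
from pathlib import Path
from typing import List


def ffmpeg_extract_cmd(video: str, out_root: str = "./frames") -> List[str]:
    """Build an ffmpeg command that dumps every frame of `video` as numbered JPEGs.

    Output goes to <out_root>/<video stem>/000001.jpg, 000002.jpg, ...
    (a hypothetical layout chosen for this sketch).
    """
    out_dir = Path(out_root) / Path(video).stem
    return [
        "ffmpeg", "-i", video,
        "-q:v", "2",  # JPEG quality scale: lower values mean higher quality
        str(out_dir / "%06d.jpg"),
    ]


if __name__ == "__main__":
    for video in sorted(Path("test_videos").glob("*.mp4")):
        cmd = ffmpeg_extract_cmd(str(video))
        Path(cmd[-1]).parent.mkdir(parents=True, exist_ok=True)  # ffmpeg won't create it
        subprocess.run(cmd, check=True)
```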

```bash
# 2. AdaptCD
python tools1/test_network.py --input_folder ./frames --out_file ./results.txt
```

If you have any questions, feel free to contact Zeliang Ma (mzl@bupt.edu.cn).