Implementation of the ECCV22 paper LGV: Boosting Adversarial Example Transferability from Large Geometric Vicinity by Martin Gubri, Maxime Cordy, Mike Papadakis, Yves Le Traon from the University of Luxembourg and Koushik Sen from the University of California, Berkeley.

✅ A clean, easy-to-use implementation is available in the torchattacks library.

➡️ With this simple example:

from torchattacks import LGV, BIM

# train_loader should yield images scaled to [0, 1]. A data normalization layer should be added to the model.

atk = LGV(model, train_loader, lr=0.05, epochs=10, nb_models_epoch=4, wd=1e-4, n_grad=1, attack_class=BIM, eps=4/255, alpha=4/255/10, steps=50, verbose=True)

atk.collect_models()

atk.save_models('models/lgv/')

adv_images = atk(images, labels)

➡️ With this complete and runnable notebook based on pretrained LGV models.

➡️ With the documentation of the torchattacks.LGV class.

➡️ LGV models are available on Figshare.

We propose transferability from Large Geometric Vicinity (LGV), a new technique to increase the transferability of black-box adversarial attacks. LGV starts from a pretrained surrogate model and collects multiple weight sets from a few additional training epochs with a constant and high learning rate. LGV exploits two geometric properties that we relate to transferability. First, models that belong to a wider weight optimum are better surrogates. Second, we identify a subspace able to generate an effective surrogate ensemble among this wider optimum. Through extensive experiments, we show that LGV alone outperforms all (combinations of) four established test-time transformations by 1.8 to 59.9 percentage points. Our findings shed new light on the importance of the geometry of the weight space to explain the transferability of adversarial examples.
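The collection step described above can be sketched on a toy problem. This is a minimal NumPy illustration, not the repository's training code: a linear softmax classifier stands in for the DNN, `collect_weights` is a hypothetical helper, and the default hyperparameters simply mirror the torchattacks example (lr=0.05, 10 epochs, 4 models per epoch, wd=1e-4).

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def collect_weights(w_init, X, y, lr=0.05, epochs=10, nb_models_epoch=4,
                    wd=1e-4, batch_size=32, seed=0):
    """Run constant-learning-rate SGD from w_init and snapshot the weights
    nb_models_epoch times per epoch (toy linear softmax classifier)."""
    rng = np.random.default_rng(seed)
    w = w_init.copy()                          # shape (n_features, n_classes)
    n = X.shape[0]
    n_batches = int(np.ceil(n / batch_size))
    # 1-based batch indices after which a snapshot is taken, evenly spaced
    save_at = {int(i) for i in
               np.linspace(0, n_batches, nb_models_epoch + 1)[1:].round()}
    onehot = np.eye(w.shape[1])[y]
    collected = []
    for _ in range(epochs):
        perm = rng.permutation(n)
        for b in range(n_batches):
            idx = perm[b * batch_size:(b + 1) * batch_size]
            grad = X[idx].T @ (softmax(X[idx] @ w) - onehot[idx]) / len(idx)
            w -= lr * (grad + wd * w)          # the learning rate is never decayed
            if b + 1 in save_at:
                collected.append(w.copy())
    return collected

# Toy data; w0 stands in for the weights of a pretrained surrogate.
rng = np.random.default_rng(0)
X = rng.normal(size=(256, 5))
y = rng.integers(0, 3, size=256)
w0 = np.zeros((5, 3))
models = collect_weights(w0, X, y)
print(len(models))  # 10 epochs x 4 snapshots = 40
```

The snapshots are kept as separate weight sets rather than averaged, since the attack later uses them as an ensemble.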

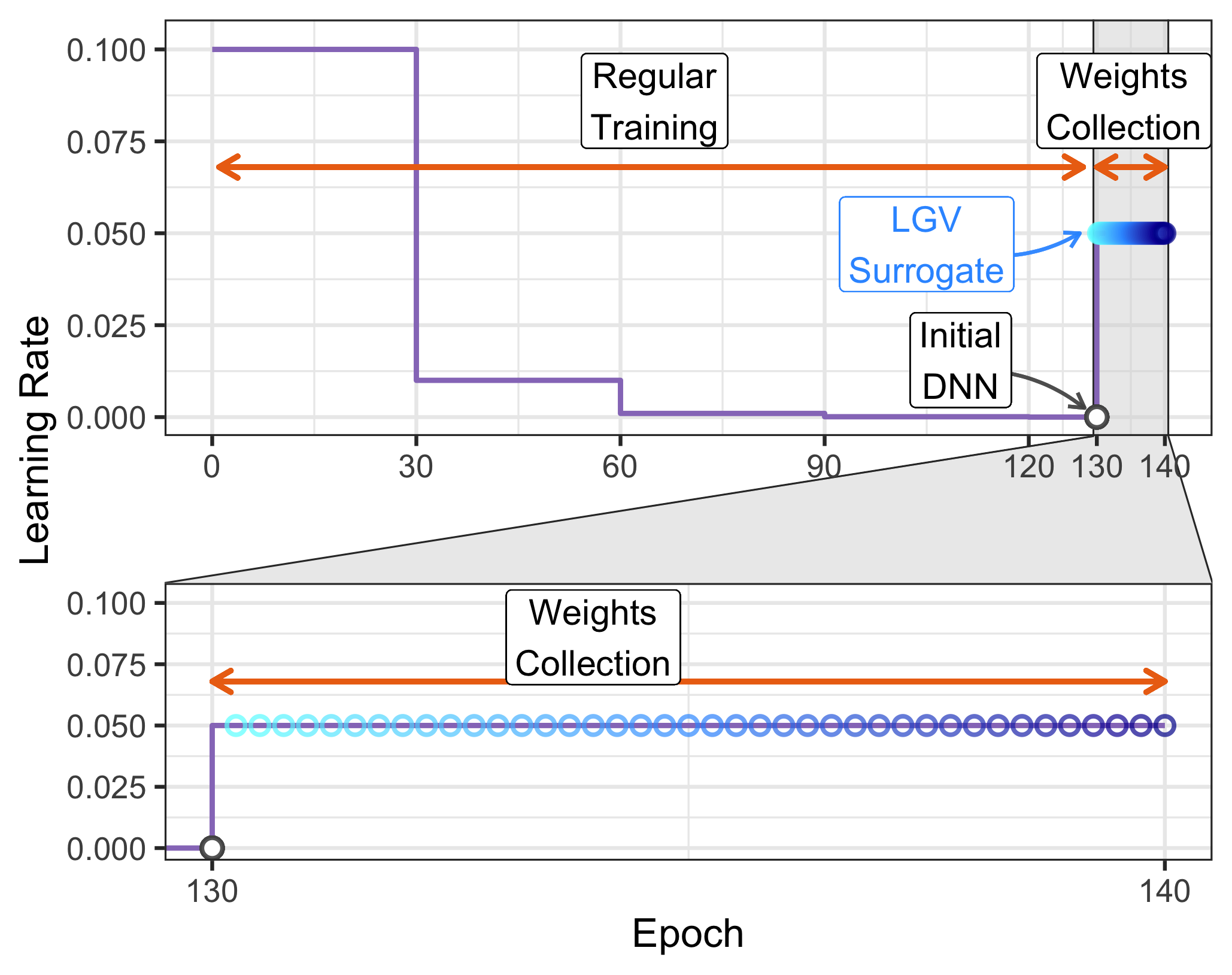

LGV collects weights along the SGD trajectory with a high learning rate during 10 epochs and starting from a regularly trained DNN.

Representation of the proposed LGV approach.

Then, regular attacks (I-FGSM, MI-FGSM, PGD, etc.) are applied on one collected model per iteration.
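To make "one collected model per iteration" concrete, here is a minimal NumPy sketch of an I-FGSM loop that uses a different surrogate at each step. It assumes toy linear softmax classifiers and a shuffled cyclic order over the models; `input_gradient` and `ifgsm_one_model_per_step` are hypothetical names, and the real implementation lives in torchattacks.

```python
import numpy as np

def input_gradient(w, x, y):
    """Gradient of the cross-entropy loss w.r.t. the input x for a linear
    softmax classifier with weights w of shape (n_features, n_classes)."""
    logits = x @ w
    p = np.exp(logits - logits.max())
    p /= p.sum()
    p[y] -= 1.0                                  # softmax(logits) - onehot(y)
    return w @ p

def ifgsm_one_model_per_step(models, x, y, eps=4/255, alpha=4/255/10,
                             steps=50, seed=0):
    """Untargeted L-inf I-FGSM where each iteration queries a single model."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(models))         # assumed: shuffled, then cycled
    x_adv = x.copy()
    for t in range(steps):
        w = models[order[t % len(models)]]       # one surrogate per iteration
        x_adv = x_adv + alpha * np.sign(input_gradient(w, x_adv, y))
        x_adv = np.clip(x_adv, x - eps, x + eps)  # project onto the L-inf ball
        x_adv = np.clip(x_adv, 0.0, 1.0)          # stay in the valid pixel range
    return x_adv

rng = np.random.default_rng(0)
models = [rng.normal(size=(8, 3)) for _ in range(4)]   # stand-ins for LGV weights
x = rng.uniform(size=8)
x_adv = ifgsm_one_model_per_step(models, x, y=1)
```

Using one model per step keeps the cost of each iteration equal to that of a single-model attack, while the perturbation is still shaped by the whole set over the course of the run.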

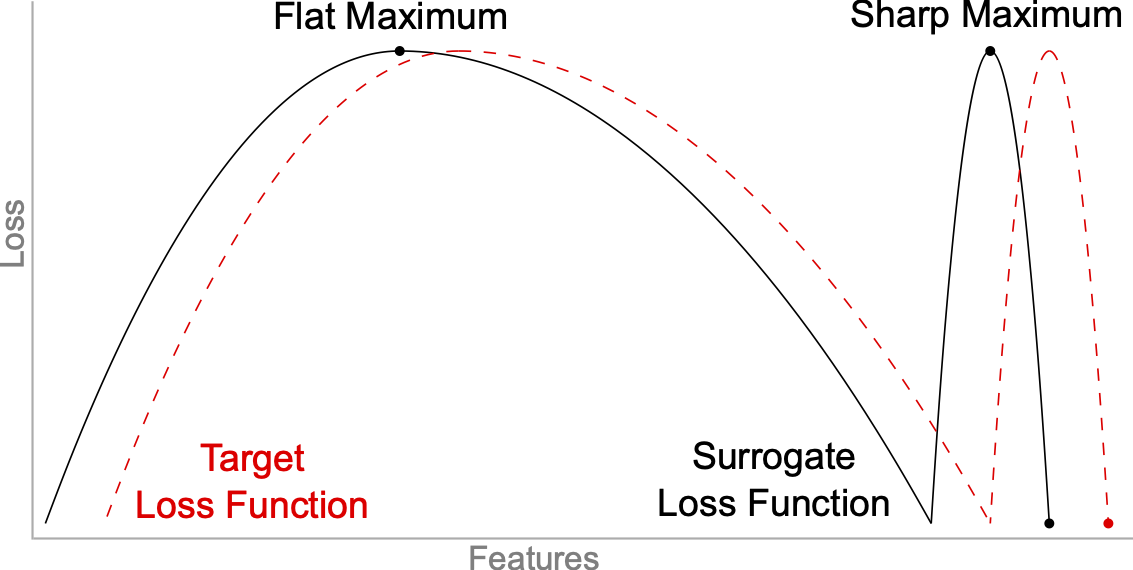

Conceptual sketch of flat and sharp adversarial examples. Adapted from Keskar N.S., et al. (2017).

LGV produces flatter adversarial examples in the feature space than the initial DNN. In the intra-architecture transferability case, the LGV and target losses have similar and shifted shapes.

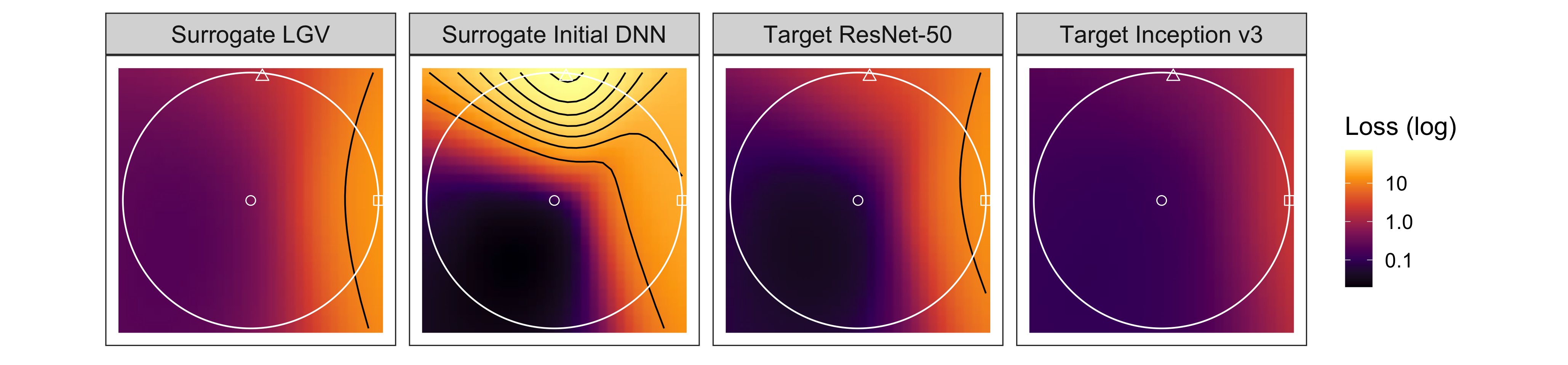

Surrogate (left) and target (right) average losses of 500 planes each containing the original example (circle), an adversarial example against LGV (square) and one against the initial DNN (triangle). Colours are in log-scale, contours in natural scale. The white circle represents the intersection of the 2-norm ball with the plane.
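The plane construction behind this kind of plot can be sketched as follows. This is an illustrative NumPy version under simple assumptions (Gram-Schmidt on the two perturbation vectors, a regular grid, a toy loss); `plane_basis` and `loss_on_disk` are hypothetical helpers, not functions of this repository.

```python
import numpy as np

def plane_basis(x, x_adv1, x_adv2):
    """Orthonormal basis (u, v) of the plane through x, x_adv1 and x_adv2,
    obtained by Gram-Schmidt (the three points must not be collinear)."""
    d1, d2 = x_adv1 - x, x_adv2 - x
    u = d1 / np.linalg.norm(d1)
    v = d2 - (d2 @ u) * u
    v = v / np.linalg.norm(v)
    return u, v

def loss_on_disk(loss_fn, x, x_adv1, x_adv2, radius, n=21):
    """Evaluate loss_fn on an n x n grid of the plane, restricted to the disk
    of the given L2 radius around x (NaN outside the disk)."""
    u, v = plane_basis(x, x_adv1, x_adv2)
    coords = np.linspace(-radius, radius, n)
    grid = np.full((n, n), np.nan)
    for i, a in enumerate(coords):
        for j, b in enumerate(coords):
            if a * a + b * b <= radius ** 2:
                grid[i, j] = loss_fn(x + a * u + b * v)
    return coords, grid

rng = np.random.default_rng(0)
x = np.zeros(6)
adv1, adv2 = rng.normal(size=6), rng.normal(size=6)
toy_loss = lambda p: float(np.sum(p ** 2))       # placeholder for a model loss
coords, grid = loss_on_disk(toy_loss, x, adv1, adv2, radius=1.0)
```

Averaging such grids over many examples, once with the surrogate loss and once with the target loss, gives the kind of paired view shown in the figure.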

Read the full paper for insights on the relation between transferability and the geometry of the weight space.

If you use our code or our method, please cite our paper:

@InProceedings{gubri_eccv22,

author="Gubri, Martin and Cordy, Maxime and Papadakis, Mike and Le Traon, Yves and Sen, Koushik",

editor="Avidan, Shai and Brostow, Gabriel and Ciss{\'e}, Moustapha and Farinella, Giovanni Maria and Hassner, Tal",

title="LGV: Boosting Adversarial Example Transferability from Large Geometric Vicinity",

booktitle="Computer Vision -- ECCV 2022",

year="2022",

publisher="Springer Nature Switzerland",

address="Cham",

pages="603--618",

abstract="We propose transferability from Large Geometric Vicinity (LGV), a new technique to increase the transferability of black-box adversarial attacks. LGV starts from a pretrained surrogate model and collects multiple weight sets from a few additional training epochs with a constant and high learning rate. LGV exploits two geometric properties that we relate to transferability. First, models that belong to a wider weight optimum are better surrogates. Second, we identify a subspace able to generate an effective surrogate ensemble among this wider optimum. Through extensive experiments, we show that LGV alone outperforms all (combinations of) four established test-time transformations by 1.8 to 59.9{\%} points. Our findings shed new light on the importance of the geometry of the weight space to explain the transferability of adversarial examples.",

isbn="978-3-031-19772-7"

}

python3 -m venv venv

. venv/bin/activate

# or conda create -n advtorch python=3.8

# conda activate advtorch

pip install --upgrade pip

pip install -r requirements2.txt -f https://download.pytorch.org/whl/torch_stable.html

cd lgv

If you wish to avoid collecting models on your own, you can retrieve our publicly available pretrained LGV models and skip the next two subsections.

wget -O lgv_models.zip https://figshare.com/ndownloader/files/36698862

unzip lgv_models.zip

We retrieve the ResNet-50 DNNs trained independently by pytorch-ensembles.

# Manual web-based download seems more reliable, but the following script is provided as a fallback if needed:

pip install wldhx.yadisk-direct

mkdir -p lgv/models/ImageNet/resnet50/SGD

cd lgv/models/ImageNet/resnet50/SGD

curl -L $(yadisk-direct https://yadi.sk/d/rdk6ylF5mK8ptw?w=1) -o deepens_imagenet.zip

unzip deepens_imagenet.zip

Collect 40 weights for 9 different initial DNNs: 3 as test for reporting, 3 as validation for hyperparameter tuning, and 3 for the shift subspace experiment.

bash lgv/imagenet/train_cSGD.sh >>lgv/log/imagenet/train_cSGD.log 2>&1

To reproduce Tables 1 and 8.

bash lgv/imagenet/attack_inter_arch.sh >>lgv/log/imagenet/attack_inter_arch.log 2>&1

Plots and analyses of all experiments are implemented in plot.R.

Create a surrogate by applying random directions in weight space from 1 DNN (RD surrogate), and compare it with random directions in feature space applied at each iteration.

generate_noisy_models.sh executes both, including HP tuning.
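The two baselines can be illustrated on flat vectors. The sketch below assumes isotropic Gaussian noise and an arbitrary standard deviation; `noisy_models` and `perturb_input` are hypothetical helpers, and the tuned noise levels are those found by the script, not the values shown here.

```python
import numpy as np

def noisy_models(w, n_models=10, std=0.01, seed=0):
    """Weight-space variant: return n_models copies of the flat weight vector
    w, each shifted along its own random Gaussian direction."""
    rng = np.random.default_rng(seed)
    return [w + std * rng.standard_normal(w.shape) for _ in range(n_models)]

def perturb_input(x_adv, std, rng):
    """Feature-space variant: add fresh Gaussian noise to the current
    adversarial input before the gradient is computed at an iteration."""
    return x_adv + std * rng.standard_normal(x_adv.shape)

w = np.ones(100)                                 # stand-in for DNN weights
rd_surrogate = noisy_models(w, n_models=10, std=0.01)

rng = np.random.default_rng(0)
x_adv = np.full(8, 0.5)
x_noisy = perturb_input(x_adv, std=0.01, rng=rng)
```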

bash lgv/imagenet/generate_noisy_models.sh >>lgv/log/imagenet/generate_noisy_models.log 2>&1

Three experiments to study flatness in weight space:

- Hessian-based sharpness metrics

The script to compute the trace and the largest eigenvalue of the Hessian is based on PyHessian which requires a separate virtualenv with other requirements.
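For intuition, both metrics can be estimated from Hessian-vector products alone, without forming the Hessian. The NumPy sketch below uses power iteration for the largest eigenvalue and a Hutchinson estimator for the trace on a toy explicit matrix; it illustrates these standard estimators under that assumption and is not the PyHessian-based script.

```python
import numpy as np

def top_eigenvalue(hvp, dim, iters=100, seed=0):
    """Largest-magnitude eigenvalue via power iteration on v -> H v."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(dim)
    v /= np.linalg.norm(v)
    eig = 0.0
    for _ in range(iters):
        hv = hvp(v)
        eig = v @ hv                     # Rayleigh quotient of the current v
        v = hv / np.linalg.norm(hv)
    return eig

def hutchinson_trace(hvp, dim, n_samples=200, seed=0):
    """Trace estimate: average of z^T H z over random Rademacher vectors z."""
    rng = np.random.default_rng(seed)
    est = 0.0
    for _ in range(n_samples):
        z = rng.choice([-1.0, 1.0], size=dim)
        est += z @ hvp(z)
    return est / n_samples

# Toy Hessian; for a DNN, hvp would be computed with automatic differentiation.
H = np.diag([5.0, 2.0, 1.0])
hvp = lambda v: H @ v
top = top_eigenvalue(hvp, dim=3)
tr = hutchinson_trace(hvp, dim=3)        # exact here because H is diagonal
```

Smaller values of both quantities indicate a flatter region of the loss around the weights.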

# create a new virtualenv

conda create -n pyhessian

conda activate pyhessian

conda install pip

pip install pyhessian torchvision

# execute and print results

sh lgv/imagenet/hessian/compute_hessian.sh >>lgv/log/imagenet/compute_hessian.log 2>&1

- Moving along 10 random directions in weight space ("random rays")

# be aware that this experiment takes a long time to run

bash lgv/imagenet/generate_random1D_models.sh >>lgv/log/imagenet/generate_random1D_models.log 2>&1

- Interpolation in weight space between the initial DNN and LGV-SWA
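As a rough illustration of the interpolation, the sketch below assumes that LGV-SWA denotes the average of the collected weights (as in stochastic weight averaging) and that the path is a straight line between the two weight vectors. `swa` and `interpolate` are hypothetical helpers operating on flat NumPy vectors.

```python
import numpy as np

def swa(collected):
    """Average the collected weight vectors."""
    return np.mean(np.stack(collected), axis=0)

def interpolate(w_init, w_swa, alphas):
    """Weights on the line through w_init (alpha=0) and w_swa (alpha=1);
    alphas outside [0, 1] extrapolate beyond either endpoint."""
    return [(1.0 - a) * w_init + a * w_swa for a in alphas]

rng = np.random.default_rng(0)
w_init = rng.normal(size=20)                      # stand-in for the initial DNN
collected = [w_init + 0.1 * rng.normal(size=20) for _ in range(40)]
w_swa = swa(collected)
path = interpolate(w_init, w_swa, np.linspace(0.0, 1.0, 5))
```

Evaluating the loss or the transfer success rate of each model on the path shows how these quantities change between the two solutions.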

bash lgv/imagenet/generate_parametric_path.sh >>lgv/log/imagenet/generate_parametric_path.log 2>&1

Plot the loss in the disk defined by the intersection of the L2 ball with the plane defined by these 3 points: the original example, an adversarial example crafted against a first surrogate and an adversarial example crafted against a second surrogate.

bash lgv/imagenet/analyse_feature_space.sh >>lgv/log/imagenet/analyse_feature_space.log 2>&1

Compute the success rate of each individual LGV model on its own.

bash lgv/imagenet/attack_individual_model.sh >>lgv/log/imagenet/attack_individual_model.log 2>&1

Create the "LGV-SWA + RD" surrogate by applying random directions in weight space from LGV-SWA.

bash lgv/imagenet/generate_noisy_models_lgvswa.sh >>lgv/log/imagenet/generate_noisy_models_lgvswa.log 2>&1

Create the "LGV-SWA + RD in S" surrogate by sampling random directions in the LGV subspace (instead of the full weight space for LGV-SWA + RD).
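One way to picture sampling in the subspace: draw Gaussian coefficients over the deviations of the collected weights from their mean, so that every sample stays in their span. The normalisation by sqrt(K - 1) and the `scale` factor below are assumptions borrowed from common low-rank Gaussian sampling, and `sample_in_subspace` is a hypothetical helper.

```python
import numpy as np

def sample_in_subspace(collected, n_samples=5, scale=1.0, seed=0):
    """Sample weights w_swa + scale * D^T z / sqrt(K - 1) with z ~ N(0, I_K),
    where the rows of D are the deviations of the K collected weights."""
    W = np.stack(collected)                  # (K, P)
    w_swa = W.mean(axis=0)
    D = W - w_swa                            # deviation matrix, (K, P)
    K = W.shape[0]
    rng = np.random.default_rng(seed)
    z = rng.standard_normal((n_samples, K))
    return w_swa + scale * (z @ D) / np.sqrt(K - 1)

rng = np.random.default_rng(1)
collected = [rng.normal(size=50) for _ in range(8)]   # stand-ins for LGV weights
samples = sample_in_subspace(collected, n_samples=3)  # shape (3, 50)
```

By contrast, the full-space variant adds noise along all P dimensions, most of which lie outside the span of the deviations.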

bash lgv/imagenet/generate_gaussian_subspace.sh >>lgv/log/imagenet/generate_gaussian_subspace.log 2>&1

Decompose the LGV deviation matrix in orthogonal directions using PCA (Figure 5).
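A PCA of the deviation matrix can be obtained from its singular value decomposition. The sketch below is a generic NumPy illustration on toy vectors, with `pca_deviations` as a hypothetical helper; it is not the analysis code behind Figure 5.

```python
import numpy as np

def pca_deviations(collected):
    """Return orthonormal principal directions (rows of Vt) of the deviation
    matrix and the fraction of variance explained by each direction."""
    W = np.stack(collected)                  # (K, P)
    D = W - W.mean(axis=0)                   # deviations from the mean weights
    _, S, Vt = np.linalg.svd(D, full_matrices=False)
    explained = S ** 2 / np.sum(S ** 2)
    return Vt, explained

rng = np.random.default_rng(2)
collected = [rng.normal(size=50) for _ in range(8)]   # stand-ins for LGV weights
directions, explained = pca_deviations(collected)
```

Because the deviations are centred, at most K - 1 directions carry variance; the last singular value is numerically zero.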

bash lgv/imagenet/generate_gaussian_subspace.sh >>lgv/log/imagenet/generate_gaussian_subspace.log 2>&1

Shift the deviations of independently obtained LGV' weights to LGV-SWA and 1 DNN, and compare them to random directions and regular LGV.
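The shift amounts to re-centring one set of deviations on another point of the weight space. A minimal NumPy sketch, assuming flat weight vectors and that deviations are taken from the mean of the LGV' weights (`shift_deviations` is a hypothetical helper):

```python
import numpy as np

def shift_deviations(collected_prime, w_target):
    """Translate the deviations of an independent set of weights (LGV') so
    that they are centred on w_target instead of their own mean."""
    Wp = np.stack(collected_prime)           # (K, P)
    return w_target + (Wp - Wp.mean(axis=0))

rng = np.random.default_rng(3)
lgv_prime = [rng.normal(size=30) for _ in range(6)]   # stand-ins for LGV' weights
w_target = rng.normal(size=30)                        # e.g. LGV-SWA or the initial DNN
shifted = shift_deviations(lgv_prime, w_target)       # shape (6, 30)
```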

bash lgv/imagenet/analyse_weights_space_translation.sh >>lgv/log/imagenet/analyse_weights_space_translation.log 2>&1

bash lgv/imagenet/train_HP_lr.sh >>lgv/log/imagenet/train_HP_lr.log 2>&1

bash lgv/imagenet/train_HP_epochs.sh >>lgv/log/imagenet/train_HP_epochs.log 2>&1

bash lgv/imagenet/train_HP_nb_models.sh >>lgv/log/imagenet/train_HP_nb_models.log 2>&1

bash lgv/imagenet/train_HP_nb_iters.sh >>lgv/log/imagenet/train_HP_nb_iters.log 2>&1

This repository includes code from the following papers or repositories:

- SWAG: code to collect models along the SGD trajectory with a constant learning rate

- Subspace Inference: utilities to analyse the weight space of PyTorch models

- ART: the Adversarial Robustness Toolbox library

- PyHessian: computation of Hessian-based sharpness metrics