TensorFlow and PyTorch implementations of the Total Variation Graph Neural Network (TVGNN), as presented in the original paper.

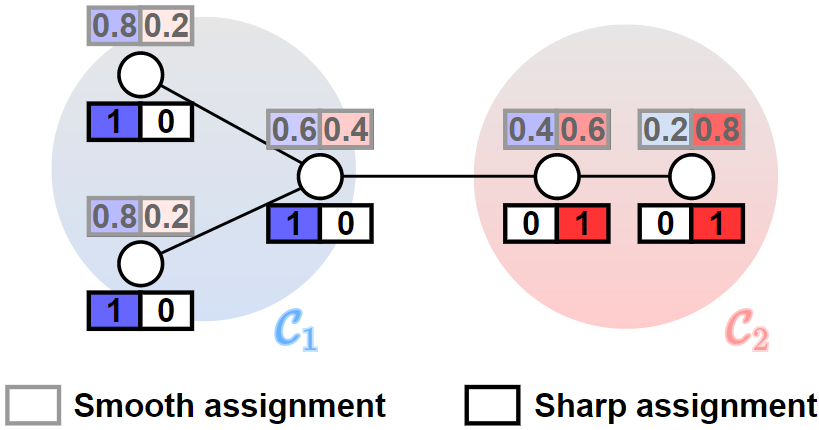

The TVGNN model can be used to cluster the vertices of an annotated graph by accounting for both the graph topology and the vertex features. Compared to other GNNs for clustering, TVGNN creates sharp cluster assignments that better approximate the optimal (in the minimum cut sense) partition.

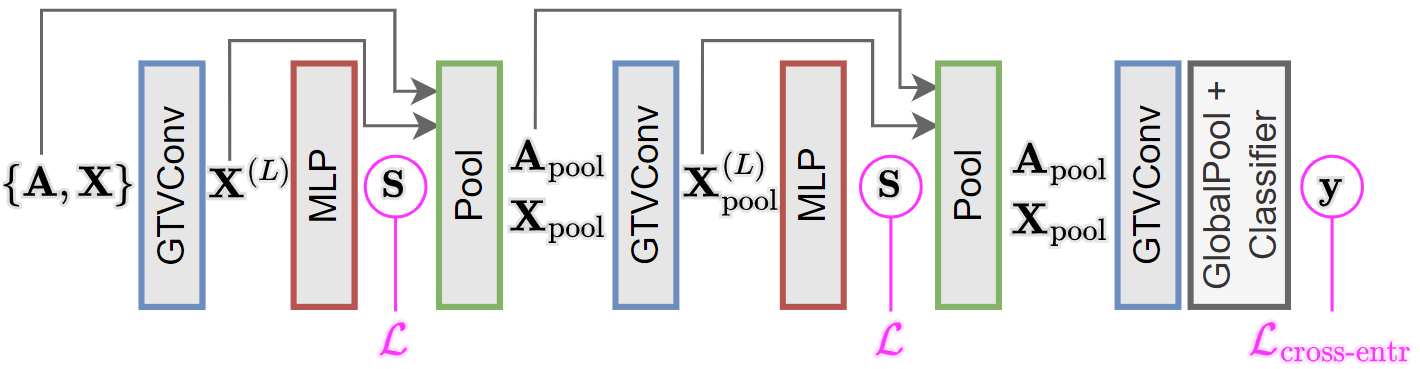

The TVGNN model can also be used to implement graph pooling in a deep GNN architecture for tasks such as graph classification.

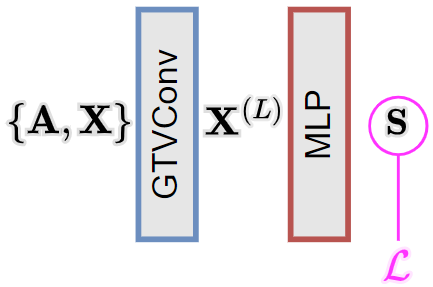

TVGNN consists of the GTVConv layer and the AsymmetricCheegerCut layer.

The GTVConv layer is a message-passing layer that minimizes the

where

where

The AsymCheegerCut is a graph pooling layer that internally contains an MLP, parametrized by $\mathbf{\Theta}_\text{MLP}$, and computes:

- a cluster assignment matrix $\mathbf{S} = \texttt{Softmax}(\texttt{MLP}(\mathbf{X}; \mathbf{\Theta}_\text{MLP})) \in \mathbb{R}^{N\times K}$, which maps the $N$ vertices to $K$ clusters,

- an unsupervised loss $\mathcal{L} = \alpha_1 \mathcal{L}_ \text{GTV} + \alpha_2 \mathcal{L}_ \text{AN}$, where $\alpha_1$ and $\alpha_2$ are two hyperparameters,

- the adjacency matrix and the vertex features of a coarsened graph.
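As a rough illustration of the two formulas above, the following dependency-free sketch computes a row-wise softmax over the output of a stand-in "MLP" and combines two loss terms with the weights $\alpha_1$ and $\alpha_2$. The single linear map used in place of the MLP, the helper names (`softmax`, `linear`, `cluster_assignments`, `total_loss`), and the random inputs are assumptions made for the example; they are not taken from the repository.

```python
import math
import random


def softmax(row):
    """Numerically stable softmax of a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def linear(X, W):
    """Multiply an N x F matrix X by an F x K matrix W (lists of lists)."""
    return [[sum(x * w for x, w in zip(row, col)) for col in zip(*W)] for row in X]


def cluster_assignments(X, W):
    """S = Softmax(MLP(X)), with a single linear map standing in for the MLP."""
    return [softmax(row) for row in linear(X, W)]


def total_loss(loss_gtv, loss_an, alpha_1=1.0, alpha_2=1.0):
    """L = alpha_1 * L_GTV + alpha_2 * L_AN."""
    return alpha_1 * loss_gtv + alpha_2 * loss_an


random.seed(0)
N, F, K = 5, 3, 2  # vertices, input features, clusters (hypothetical sizes)
X = [[random.gauss(0, 1) for _ in range(F)] for _ in range(N)]
W = [[random.gauss(0, 1) for _ in range(K)] for _ in range(F)]

S = cluster_assignments(X, W)  # N rows, each a distribution over K clusters
```

Each row of `S` is non-negative and sums to one, so it can be read as a soft membership of one vertex over the $K$ clusters.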

The term

where

The term

where

We use TVGNN to perform vertex clustering and graph classification. Other tasks such as graph regression could be considered as well.

Vertex clustering is an unsupervised task, where the goal is to generate a partition of the vertices based on the similarity of their vertex features and the graph topology. The GNN model is trained only by minimizing the unsupervised loss $\mathcal{L}$.
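Once a soft assignment matrix $\mathbf{S}$ is available, one common way to read off a partition is to assign each vertex to the cluster with the largest entry in its row. The sketch below assumes this argmax rule and uses a hand-written `S`; the example scripts in this repository may extract the partition differently.

```python
from collections import Counter


def hard_partition(S):
    """Return, for each row of S, the index of its largest entry."""
    return [max(range(len(row)), key=row.__getitem__) for row in S]


# Hypothetical soft assignments of 3 vertices to 2 clusters.
S = [[0.9, 0.1],
     [0.2, 0.8],
     [0.6, 0.4]]

labels = hard_partition(S)      # [0, 1, 0]
cluster_sizes = Counter(labels)  # number of vertices per cluster
```

Sharper rows in `S` (entries close to 0 or 1) make this discretization less sensitive to small changes in the assignments.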

Graph classification is a supervised task, with the goal of predicting the class of each graph. The GNN architecture for graph classification alternates GTVConv layers with a graph pooling layer, which gradually distills the global label information from the vertex representations. The GNN is trained by minimizing the unsupervised loss $\mathcal{L}$ together with a supervised classification loss.
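Because the task is supervised, the training objective needs a classification term in addition to the unsupervised loss produced by the pooling layers. The sketch below assumes a cross-entropy classification loss and an unweighted sum over the auxiliary losses of the pooling layers; both choices, and the function names, are illustrative assumptions rather than a description of the example scripts.

```python
import math


def cross_entropy(probs, true_class):
    """Negative log-likelihood of the true class under predicted probabilities."""
    return -math.log(probs[true_class])


def classification_objective(probs, true_class, pooling_losses):
    """Supervised loss plus the unsupervised losses of all pooling layers."""
    return cross_entropy(probs, true_class) + sum(pooling_losses)


# Hypothetical values: class probabilities predicted for one graph, and the
# unsupervised losses returned by two pooling layers in the architecture.
probs = [0.5, 0.5]
pooling_losses = [0.1, 0.2]

objective = classification_objective(probs, true_class=0, pooling_losses=pooling_losses)
```

In a real training loop the same sum would be computed on framework tensors so that gradients flow through both the classifier and the pooling layers.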

The TensorFlow implementation is based on the Spektral library and follows the Select-Reduce-Connect API. To execute the code, first create the conda environment from `tf_environment.yml`:

conda env create -f tf_environment.yml

The tensorflow/ folder includes:

- The implementation of the GTVConv layer

- The implementation of the AsymCheegerCutPool layer

- An example script to perform the clustering task

- An example script to perform the classification task

The PyTorch implementation is based on the PyTorch Geometric library. To execute the code, first create the conda environment from `pytorch_environment.yml`:

conda env create -f pytorch_environment.yml

The pytorch/ folder includes:

- The implementation of the GTVConv layer

- The implementation of the AsymCheegerCutPool layer

- An example script to perform the clustering task

- An example script to perform the classification task

TVGNN is now available on Spektral:

- GTVConv layer,

- AsymCheegerCutPool layer,

- Example script to perform node clustering with TVGNN.

If you use TVGNN in your research, please consider citing our work as:

@inproceedings{hansen2023tvgnn,

title={Total Variation Graph Neural Networks},

author={Hansen, Jonas Berg and Bianchi, Filippo Maria},

booktitle={Proceedings of the 40th International Conference on Machine Learning},

pages={},

year={2023},

organization={ACM}

}