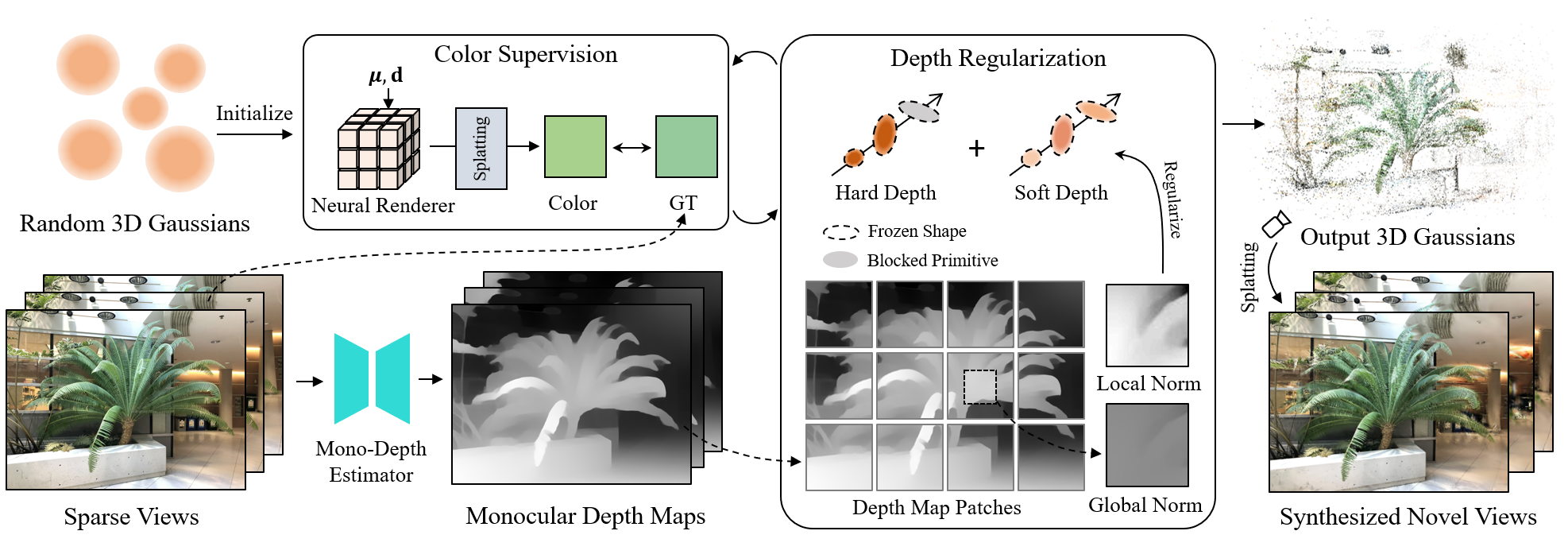

DNGaussian: Optimizing Sparse-View 3D Gaussian Radiance Fields with Global-Local Depth Normalization

This is the official repository for our CVPR 2024 paper DNGaussian: Optimizing Sparse-View 3D Gaussian Radiance Fields with Global-Local Depth Normalization.

Tested on Ubuntu 18.04, CUDA 11.3, PyTorch 1.12.1

conda env create --file environment.yml

conda activate dngaussian

cd submodules

git clone git@github.com:ashawkey/diff-gaussian-rasterization.git --recursive

git clone https://gitlab.inria.fr/bkerbl/simple-knn.git

pip install ./diff-gaussian-rasterization ./simple-knn

If you encounter installation problems with diff-gaussian-rasterization or gridencoder, the gaussian-splatting and torch-ngp repositories may help.

- Download LLFF from the official download link.

- Generate monocular depths by DPT:

      cd dpt
      python get_depth_map_for_llff_dtu.py --root_path $<dataset_path_for_llff> --benchmark LLFF

- Start training and testing:

      # for example
      bash scripts/run_llff.sh data/llff/fern output/llff/fern ${gpu_id}
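To train on several LLFF scenes in a row, the same command can be generated per scene by a small driver. The following is a minimal sketch, assuming the eight standard LLFF scene names and the `data/llff/<scene>` and `output/llff/<scene>` layout from the example command; `build_llff_commands` and `run_all` are hypothetical helpers and not part of this repo.

```python
import subprocess

# The eight scenes of the LLFF benchmark (assumed to be extracted under data/llff/).
LLFF_SCENES = ["fern", "flower", "fortress", "horns", "leaves", "orchids", "room", "trex"]


def build_llff_commands(gpu_id, scenes=LLFF_SCENES, data_root="data/llff", out_root="output/llff"):
    """Return one `bash scripts/run_llff.sh <data> <output> <gpu>` command per scene."""
    return [
        ["bash", "scripts/run_llff.sh", f"{data_root}/{scene}", f"{out_root}/{scene}", str(gpu_id)]
        for scene in scenes
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing scene


if __name__ == "__main__":
    # Dry run by default: only prints the commands that would be executed.
    run_all(build_llff_commands(gpu_id=0), dry_run=True)
```

Keeping `dry_run=True` lets you check the generated paths before starting any training; set it to `False` from the repository root to actually launch the runs.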

- Download DTU dataset

  - Download the DTU dataset "Rectified (123 GB)" from the official website, and extract it.

  - Download masks (used for evaluation only) from this link.

- Organize DTU for few-shot setting

      bash scripts/organize_dtu_dataset.sh $rectified_path

- Format

  - Poses: following gaussian-splatting, run `convert.py` to get the poses and the undistorted images by COLMAP.

  - Render Path: following LLFF to get the `poses_bounds.npy` from the COLMAP data. (Optional)

- Generate monocular depths by DPT:

      cd dpt
      python get_depth_map_for_llff_dtu.py --root_path $<dataset_path_for_dtu> --benchmark DTU

- Set the mask path and the expected output model path in `copy_mask_dtu.sh` for evaluation. (default: "data/dtu/submission_data/idrmasks" and "output/dtu")

- Start training and testing:

      # for example
      bash scripts/run_dtu.sh data/dtu/scan8 output/dtu/scan8 ${gpu_id}

- Download the NeRF Synthetic dataset from here.

- Generate monocular depths by DPT:

      cd dpt
      python get_depth_map_for_blender.py --root_path $<dataset_path_for_blender>

- Start training and testing:

      # for example
      # there are some special settings for different scenes in the Blender dataset, please refer to "run_blender.sh".
      bash scripts/run_blender.sh data/nerf_synthetic/drums output/blender/drums ${gpu_id}

Due to the randomness of the densification process and random initialization, the metrics may be unstable in some scenes, especially PSNR.
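Because a single run can vary, one option is to repeat training a few times and summarize each metric by its mean and standard deviation. The following is a minimal sketch using only the Python standard library; `summarize_runs` is a hypothetical helper, and the per-run numbers are placeholders for illustration, not results from this repo.

```python
from statistics import mean, stdev


def summarize_runs(runs):
    """Return {metric: (mean, sample standard deviation)} across repeated runs."""
    summary = {}
    for name in runs[0]:
        values = [run[name] for run in runs]
        # The sample standard deviation needs at least two values.
        spread = stdev(values) if len(values) > 1 else 0.0
        summary[name] = (mean(values), spread)
    return summary


if __name__ == "__main__":
    # Placeholder values for illustration only; replace with metrics from your own runs.
    runs = [
        {"PSNR": 19.0, "SSIM": 0.60, "LPIPS": 0.30},
        {"PSNR": 20.0, "SSIM": 0.65, "LPIPS": 0.25},
        {"PSNR": 21.0, "SSIM": 0.70, "LPIPS": 0.20},
    ]
    for name, (avg, spread) in summarize_runs(runs).items():
        print(f"{name}: {avg:.3f} +/- {spread:.3f}")
```

Reporting the spread alongside the mean makes it easier to tell a real improvement from run-to-run noise, which matters most for PSNR.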

You can download our provided checkpoints from here. These results were reproduced with a lower error tolerance bound to stay aligned with this repo, which differs from the setting used in the paper. This may lead to higher metrics but worse visual quality.

If more stable performance is needed, we recommend trying the dense initialization from FSGS.

Here we provide an example script for LLFF that just modifies a few hyperparameters to adapt our method to this initialization:

    # Following FSGS to get the "data/llff/$<scene>/3_views/dense/fused.ply" first
    bash scripts/run_llff_mvs.sh data/llff/$<scene> output_dense/$<scene> ${gpu_id}

However, there may still be some randomness.

For reference, the best results we get in two random tests are as follows:

| PSNR | LPIPS | SSIM (SK) | SSIM (GS) |
|---|---|---|---|
| 19.942 | 0.228 | 0.682 | 0.687 |

where GS refers to the SSIM calculation originally provided by 3DGS, and SK denotes the one calculated by sklearn, which is used in most previous NeRF-based methods.

Similar to Gaussian Splatting, our method can read standard COLMAP format datasets. Please customize your sampling rule in `scenes/dataset_readers.py`. To organize a COLMAP-format dataset from raw RGB images, refer to our preprocessing of DTU.
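A sampling rule decides which images become the sparse training views and which are held out for testing. The sketch below follows a convention that is common in sparse-view LLFF experiments (every 8th image held out, training views spread evenly over the remaining ones). It is an assumption for illustration only: `split_views` is a hypothetical helper, not a function from `scenes/dataset_readers.py`, and the rule used by this repo may differ.

```python
def split_views(image_names, n_train=3, hold=8):
    """Split image names into sparse training views and held-out test views."""
    names = sorted(image_names)
    # Every `hold`-th image is reserved for testing.
    test = [n for i, n in enumerate(names) if i % hold == 0]
    candidates = [n for i, n in enumerate(names) if i % hold != 0]
    if n_train >= len(candidates):
        return candidates, test
    if n_train == 1:
        return [candidates[len(candidates) // 2]], test
    # Evenly spaced positions from the first to the last candidate.
    step = (len(candidates) - 1) / (n_train - 1)
    train = [candidates[round(k * step)] for k in range(n_train)]
    return train, test


if __name__ == "__main__":
    names = [f"img_{i:03d}.png" for i in range(20)]
    train, test = split_views(names, n_train=3)
    print("train:", train)
    print("test:", test)
```

Spreading the training views evenly keeps the few available cameras from clustering on one side of the scene; adapt `n_train` and `hold` to your own capture.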

Consider citing as below if you find this repository helpful to your project:

    @article{li2024dngaussian,
      title={DNGaussian: Optimizing Sparse-View 3D Gaussian Radiance Fields with Global-Local Depth Normalization},
      author={Li, Jiahe and Zhang, Jiawei and Bai, Xiao and Zheng, Jin and Ning, Xin and Zhou, Jun and Gu, Lin},
      journal={arXiv preprint arXiv:2403.06912},
      year={2024}
    }

This code is developed on gaussian-splatting with simple-knn and a modified diff-gaussian-rasterization. The implementation of the neural renderer is based on torch-ngp. The DPT code is partially taken from SparseNeRF. Thanks for these great projects!