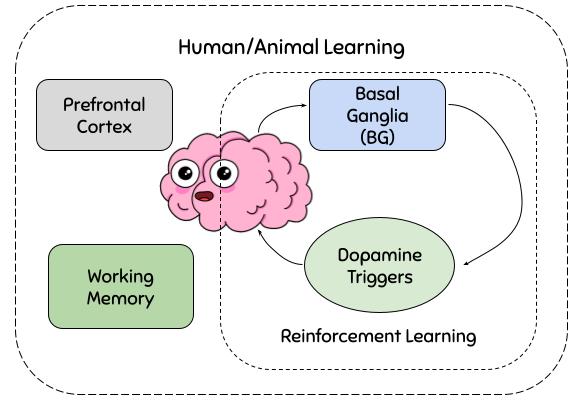

Picture: A schematic for Human/Animal Learning process. (missing working memory component in RL designs)

Abstract: Classical RL models lack in encoding behavioural variance present in the learning process of humans. We explore the effect of the working memory module on learning behaviour. We propose an RL model which combines the classical RL methods with a working memory component to study its effects on Foraging tasks. We use three variants of foraging with replenishing patches task for our experiments and show the performance of various RL algorithms on them. We further propose a mathematical model that combines the Working Memory component with the RL framework and explain its plausibility in detail. Moreover, we also do a detailed ablation analysis of the proposed mathematical model describing each module’s impacts on performance.

-

We use OpenAI Gym to create a custom environment for the foraging with replinishing patches task.

-

We use OpenCV (opencv-python) for rendering the environment in manual play mode.

-

We use PyTorch and Stable-Baselines3 for training the Deep RL agent.

pip install requirements.txt

-

Testing the registered openai gym environment

python registration_test.py -

Manual Testing

python gym-env\gym_env\envs\foraging_replenishing_patches.py --manual_play --block_type=1-

Manual play actions:

- Jump between bushes: Press num keys 0-7

- Harvest: Press "h"

- Quit Game: Press "q"

-

Demo run of trained PPO agent on Block 3 of the environment available at demo

Modified Marginal Value Theorem [.py] [jupyter notebooks]

Multi-Armed Bandit [.py] [jupyter notebooks]

Deep RL methods [.py] [jupyter notebooks]

MDP and Human Forgetful Behaviour Modelling [.py] [jupyter notebooks]

| Method | Reward in Block 1 | Reward in Block 3 |

|---|---|---|

| MVT (with replenishment) | 19983.5 | 23215.8 |

| Multi Armed Bandit (Decaying Epsilon Greedy) | 17199 | 22102.2 |

| SARSA | 15842 | 20999 |

| Q Learning | 18814.7 | 25732 |

| Deep RL (PPO) | 11610.9 | 16104 |

The detailed reports and presentations for this project can be found below:

Milestone-1 Submission [Presentation] [Report]

Final Submission [Presentation] [Report]

- Abhinav Joshi

- Samrudh B Govindraj

- Archi Gupta

- Shiven Tripathi

-

We thank Prof. Ashutosh Modi for providing us the opportunity to learn about RL algorithms through this course and the project.

-

We thank Prof. Arjun Ramakrishnan for the project ideas and and helping us understand the human behavior modelling with reinforcement learning.

-

We thank Kshitij, our project mentor for helping us with the solution approaches and guiding and clarifying our doubts about the environment.

-

We thank Shubham, our TA for helping us with the logistics and providing valuable inputs to the project.