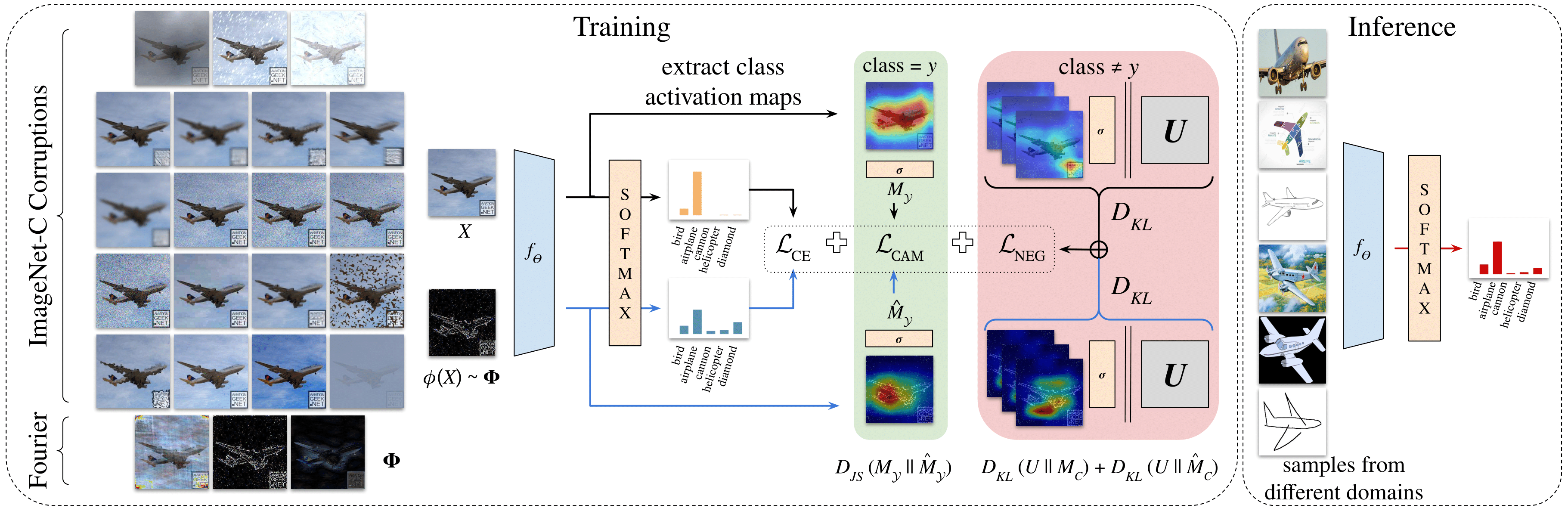

Attention Consistency on Visual Corruptions for Single-Source Domain Generalization

Ilke Cugu, Massimiliano Mancini, Yanbei Chen, Zeynep Akata

IEEE Computer Vision and Pattern Recognition Workshops (CVPRW), 2022

The official PyTorch implementation of the CVPR 2022 L3D-IVU Workshop paper titled "Attention Consistency on Visual Corruptions for Single-Source Domain Generalization". This repository contains: (1) our single-source domain generalization benchmark that aims at generalizing from natural images to other domains such as paintings, cliparts and sketches, (2) our adaptations of well-known advanced data augmentation techniques in the literature, and (3) our final model ACVC, which fuses visual corruptions with an attention consistency loss.

torch~=1.5.1+cu101

numpy~=1.19.5

torchvision~=0.6.1+cu101

Pillow~=8.3.1

matplotlib~=3.1.1

sklearn~=0.0

scikit-learn~=0.24.1

scipy~=1.6.1

imagecorruptions~=1.1.2

tqdm~=4.58.0

pycocotools~=2.0.0

- We also include a YAML script `acvc-pytorch.yml` that is prepared for an easy Anaconda environment setup.

- One can also use the `requirements.txt` if one knows one's craft.
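To compare an existing environment against the pinned versions listed above, something like the following may help. It is a minimal sketch, not part of the repository: `parse_pin` and `check_environment` are hypothetical helper names, and the script only reports pinned versus installed versions without evaluating the `~=` compatibility rule.

```python
from importlib import metadata

# Pins copied from the dependency list in this README.
PINS = """
torch~=1.5.1+cu101
numpy~=1.19.5
torchvision~=0.6.1+cu101
Pillow~=8.3.1
matplotlib~=3.1.1
sklearn~=0.0
scikit-learn~=0.24.1
scipy~=1.6.1
imagecorruptions~=1.1.2
tqdm~=4.58.0
pycocotools~=2.0.0
"""


def parse_pin(line):
    """Split 'name~=version+local' into (name, version), dropping the local build tag."""
    name, _, version = line.strip().partition("~=")
    return name, version.split("+")[0]


def check_environment(pins=PINS):
    """Return {name: (pinned, installed)}; installed is None if the package is missing."""
    report = {}
    for line in pins.split():
        name, pinned = parse_pin(line)
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        report[name] = (pinned, installed)
    return report


if __name__ == "__main__":
    for name, (pinned, installed) in check_environment().items():
        print(f"{name:16s} pinned {pinned:8s} installed {installed or 'missing'}")
```

Any mismatch it prints is only a hint; whether a newer version works with this code base is not something the sketch can tell.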

Training is done via `run.py`. To get the up-to-date list of commands:

`python run.py --help`

We include a sample script `run_experiments.sh` for a quick start.
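For scripted runs from Python, the command line can be assembled programmatically. The sketch below is an illustration only: `build_run_command` is a hypothetical helper, it uses just the flags that appear in the COCO commands of this README, and it assumes `--loss` and `--test_datasets` accept several space-separated values. The output of `python run.py --help` remains the authoritative reference.

```python
import shlex


def build_run_command(losses, corruption_mode, train_dataset, test_datasets,
                      data_dir="datasets", epochs=1, first_run=False):
    """Assemble an argument list for run.py from flags shown in this README."""
    cmd = ["python", "run.py", "--loss", *losses,
           "--epochs", str(epochs),
           "--corruption_mode", corruption_mode,
           "--data_dir", data_dir]
    if first_run:
        # Only needed once, the first time the benchmark is prepared.
        cmd.append("--first_run")
    cmd += ["--train_dataset", train_dataset,
            "--test_datasets", *test_datasets,
            "--print_config"]
    return cmd


if __name__ == "__main__":
    cmd = build_run_command(["CrossEntropy", "AttentionConsistency"], "acvc",
                            "COCO", ["DomainNet:Real"])
    # Dry run: print the command instead of executing it.
    print(shlex.join(cmd))
    # To launch it: import subprocess; subprocess.run(cmd, check=True)
```

Returning a list rather than a string keeps the arguments safe to pass to `subprocess.run` without shell quoting.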

The benchmark results are prepared by analysis/GeneralizationExpProcessor.py, which outputs LaTeX tables of the cumulative results in a .tex file.

- For example:

  `python GeneralizationExpProcessor.py --path generalization.json --to_dir ./results --image_format pdf`

- You can also run distributed experiments, and merge the results later on:

  `python GeneralizationExpProcessor.py --merge_logs generalization_gpu0.json generalization_gpu1.json`

COCO benchmark is especially useful for further studies on ACVC since it includes segmentation masks per image.

Here are the steps to make it work:

- For this benchmark you only need 10 classes:
  - airplane
  - bicycle
  - bus
  - car
  - horse
  - knife
  - motorcycle
  - skateboard
  - train
  - truck

- Download COCO 2017 trainset, valset, and annotations

- Extract the annotations zip file into a folder named `COCO` inside your choice of `data_dir` (For example: `datasets/COCO`)

- Extract train and val set zip files into a subfolder named `downloads` (For example: `datasets/COCO/downloads`)

- Download DomainNet (clean version)

- Create a new `DomainNet` folder next to your `COCO` folder

- Extract each domain's zip file under its respective subfolder (For example: `datasets/DomainNet/clipart`)

- Back to the project, use `--first_run` argument once while running the training script:

  `python run.py --loss CrossEntropy --epochs 1 --corruption_mode None --data_dir datasets --first_run --train_dataset COCO --test_datasets DomainNet:Real --print_config`

- If everything works fine, you will see `train2017` and `val2017` folders under `COCO`

- Both folders must contain 10 subfolders that belong to shared classes between COCO and DomainNet

- Now, try running ACVC as well:

  `python run.py --loss CrossEntropy AttentionConsistency --epochs 1 --corruption_mode acvc --data_dir datasets --train_dataset COCO --test_datasets DomainNet:Real --print_config`

- All good? Then, you are good to go with the COCO section of `run_experiments.sh` to run multiple experiments

- That's it!
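The folder layout described in the steps above can be sanity-checked with a few lines of Python. This is a minimal sketch, not part of the repository: `check_coco_layout` is a hypothetical helper, and it only counts subfolders under `train2017` and `val2017`, since the exact subfolder names are not stated here.

```python
from pathlib import Path


def check_coco_layout(data_dir, expected_classes=10):
    """Return a list of problems found under <data_dir>/COCO; an empty list means the layout looks right."""
    problems = []
    for split in ("train2017", "val2017"):
        split_dir = Path(data_dir) / "COCO" / split
        if not split_dir.is_dir():
            problems.append(f"missing folder: {split_dir}")
            continue
        # Count only directories; stray files are ignored.
        subfolders = sorted(p.name for p in split_dir.iterdir() if p.is_dir())
        if len(subfolders) != expected_classes:
            problems.append(
                f"{split_dir} has {len(subfolders)} subfolders, "
                f"expected {expected_classes}: {subfolders}"
            )
    return problems


if __name__ == "__main__":
    issues = check_coco_layout("datasets")
    print("layout looks fine" if not issues else "\n".join(issues))
```

Running it after the `--first_run` step with the same `data_dir` (here `datasets`) should print no problems if both folders were created with 10 class subfolders each.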

If you use this code in your research, please cite:

@InProceedings{Cugu_2022_CVPR,

author = {Cugu, Ilke and Mancini, Massimiliano and Chen, Yanbei and Akata, Zeynep},

title = {Attention Consistency on Visual Corruptions for Single-Source Domain Generalization},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},

month = {June},

year = {2022},

pages = {4165-4174}

}

We indicate inside each file whether a function or script is borrowed from an external source. Specifically, for the visual corruption implementations we benefit from:

- The imagecorruptions library of Autonomous Driving when Winter is Coming.

Consider citing this work as well if you use it in your project.