This experiment trains an end-to-end (E2E) ASR model on 100 hours of LibriSpeech data, using an intermediate character-level representation and the Connectionist Temporal Classification (CTC) loss function.
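For background, the CTC loss is the negative log-probability of the target label sequence, summed over every frame-level alignment that collapses to it (repeats merged, blanks removed). The sketch below computes it with the standard forward (alpha) recursion in log space. The function name `ctc_neg_log_likelihood` and the blank index 0 are assumptions for illustration. The training script presumably uses an optimized library implementation rather than a Python loop like this.

```python
import numpy as np

def ctc_neg_log_likelihood(log_probs, target, blank=0):
    """CTC loss for one utterance via the forward (alpha) recursion.

    log_probs: (T, V) array of per-frame log-probabilities.
    target:    list of label indices (must not contain `blank`).
    Blank index 0 is an assumption of this sketch.
    """
    T = log_probs.shape[0]
    # Extended label sequence with blanks interleaved: [b, y1, b, y2, ..., b]
    ext = [blank]
    for label in target:
        ext += [label, blank]
    S = len(ext)

    alpha = np.full((T, S), -np.inf)
    alpha[0, 0] = log_probs[0, blank]
    if S > 1:
        alpha[0, 1] = log_probs[0, ext[1]]

    for t in range(1, T):
        for s in range(S):
            # Stay on the same extended symbol, or advance by one.
            candidates = [alpha[t - 1, s]]
            if s >= 1:
                candidates.append(alpha[t - 1, s - 1])
            # Skip a blank, allowed only between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                candidates.append(alpha[t - 1, s - 2])
            alpha[t, s] = np.logaddexp.reduce(candidates) + log_probs[t, ext[s]]

    # Valid alignments end on the last label or on the trailing blank.
    if S > 1:
        total = np.logaddexp(alpha[T - 1, S - 1], alpha[T - 1, S - 2])
    else:
        total = alpha[T - 1, S - 1]
    return -total
```

With two frames, two symbols and uniform probabilities, the target `[1]` is produced by three of the four possible paths, so the loss is `-log(0.75)`.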

To train the CTC model at the character level, the transcriptions of both the train and dev sets must be converted from sequences of words to sequences of characters, with an additional character (">") appended to the end of each word.

We used a prefix beam search decoding strategy to decode the model output and return word-level transcriptions.
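A minimal sketch of CTC prefix beam search without a language model, in the spirit of the prefix beam search article listed in the references. Each prefix tracks two scores: the probability of ending in a blank and of ending in a non-blank, so that a repeated character is only emitted twice when a blank separates the two copies. It assumes per-frame log-probabilities as a T x V nested list with the blank at index 0; the function names and the blank index are illustrative assumptions, not taken from `eval.py`.

```python
import math
from collections import defaultdict

NEG_INF = float("-inf")

def log_add(a, b):
    """Numerically stable log(exp(a) + exp(b))."""
    if a == NEG_INF:
        return b
    if b == NEG_INF:
        return a
    hi, lo = max(a, b), min(a, b)
    return hi + math.log1p(math.exp(lo - hi))

def prefix_beam_search(log_probs, beam_width=3, blank=0):
    """CTC prefix beam search (no LM). Returns the best label prefix as a tuple."""
    # prefix -> (log P(prefix, ends in blank), log P(prefix, ends in non-blank))
    beams = {(): (0.0, NEG_INF)}
    for frame in log_probs:
        next_beams = defaultdict(lambda: (NEG_INF, NEG_INF))
        for prefix, (p_b, p_nb) in beams.items():
            p_total = log_add(p_b, p_nb)
            last = prefix[-1] if prefix else None
            for c, lp in enumerate(frame):
                if c == blank:
                    # Emitting blank leaves the prefix unchanged.
                    nb_b, nb_nb = next_beams[prefix]
                    next_beams[prefix] = (log_add(nb_b, p_total + lp), nb_nb)
                    continue
                new_prefix = prefix + (c,)
                nb_b, nb_nb = next_beams[new_prefix]
                if c == last:
                    # A repeated label extends the prefix only after a blank...
                    next_beams[new_prefix] = (nb_b, log_add(nb_nb, p_b + lp))
                    # ...otherwise it collapses into the current prefix.
                    ob_b, ob_nb = next_beams[prefix]
                    next_beams[prefix] = (ob_b, log_add(ob_nb, p_nb + lp))
                else:
                    next_beams[new_prefix] = (nb_b, log_add(nb_nb, p_total + lp))
        # Keep only the `beam_width` most probable prefixes.
        ranked = sorted(next_beams.items(), key=lambda kv: log_add(*kv[1]), reverse=True)
        beams = dict(ranked[:beam_width])
    best_prefix, _ = max(beams.items(), key=lambda kv: log_add(*kv[1]))
    return best_prefix
```

For a two-frame toy input where blank has probability 0.6 in every frame, `beam_width=1` returns the empty prefix (probability 0.36), whereas `beam_width=2` finds label `1`, whose alignments sum to 0.64. The returned indices would then be mapped to characters and split on ">" to recover words.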

- model.py: RNN-T joint model

- train_rnnt.py: RNN-T training script

- train_ctc.py: CTC acoustic model training script

- eval.py: RNN-T and CTC decoding

- DataLoader.py: Kaldi feature loader
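As an illustration of what an RNN-T joint model computes: in Graves 2013, the transcription (encoder) output for frame t and the prediction network output for label position u are projected into a shared hidden layer, combined additively through a tanh, and mapped to a softmax over the output symbols, yielding a (T, U+1, V) grid of distributions. The NumPy sketch below assumes this additive form with hypothetical weight names and no output bias; `model.py` may combine the two streams differently.

```python
import numpy as np

def log_softmax(x, axis=-1):
    """Numerically stable log-softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def rnnt_joint(enc, pred, W_enc, W_pred, b, W_out):
    """Additive RNN-T joint network (hypothetical parameter names).

    enc:  (T, H_enc)     encoder / transcription network outputs
    pred: (U + 1, H_pred) prediction network outputs
    Returns log-probabilities of shape (T, U + 1, V).
    """
    h_enc = enc @ W_enc     # (T, H)
    h_pred = pred @ W_pred  # (U + 1, H)
    # Broadcast to every (t, u) pair and combine additively.
    hidden = np.tanh(h_enc[:, None, :] + h_pred[None, :, :] + b)  # (T, U + 1, H)
    return log_softmax(hidden @ W_out)  # (T, U + 1, V)
```

Broadcasting builds the full (t, u) lattice in one step, which is what an RNN-T loss such as warp-transducer consumes.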

- The following Python scripts are located in the `utils` folder.

Prepare a custom lexicon file that maps each word to a sequence of characters:

python word_to_characters.py --path [path-to-your-original-lexicon-file]
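The exact output format of `word_to_characters.py` is not documented here, so the sketch below is an assumption: it reads a word-to-phone lexicon whose lines start with the word, ignores the pronunciation, drops duplicate entries for words with several pronunciations, and writes one `WORD C1 C2 ...` line per word. Both function names are hypothetical.

```python
def build_char_lexicon(lexicon_lines):
    """Map each word of a word-to-phone lexicon to its list of characters.

    Only the first field (the word) of each line is used; repeated words
    (alternative pronunciations) are kept once.
    """
    char_lexicon = {}
    for line in lexicon_lines:
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        word = fields[0]
        if word not in char_lexicon:
            char_lexicon[word] = list(word)
    return char_lexicon

def format_lexicon(char_lexicon):
    """Render the lexicon as 'WORD C1 C2 ...' lines, sorted by word."""
    return [f"{w} {' '.join(chars)}" for w, chars in sorted(char_lexicon.items())]
```

Words containing apostrophes (e.g. `DON'T`) simply keep `'` as one of their characters.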

Transform the word transcriptions into sequences of characters by appending ">" to the end of each word, for example:

- THE SUNDAY SCHOOL => T H E > S U N D A Y > S C H O O L >

python prepare_target.py --path [path-to-your-target-file]
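The transformation can be sketched as follows, assuming the target file uses the Kaldi `text` format (`utt-id WORD WORD ...`) and that the utterance ID should be preserved; the function names are hypothetical and `prepare_target.py` may handle I/O differently. The inverse mapping shows how a decoded character sequence turns back into words.

```python
WORD_BOUNDARY = ">"

def words_to_chars(transcript):
    """'THE SUNDAY SCHOOL' -> 'T H E > S U N D A Y > S C H O O L >'"""
    tokens = []
    for word in transcript.split():
        tokens.extend(word)           # one token per character
        tokens.append(WORD_BOUNDARY)  # mark the end of the word
    return " ".join(tokens)

def chars_to_words(char_sequence):
    """Inverse mapping, e.g. for turning decoder output back into words."""
    joined = "".join(char_sequence.split())
    return " ".join(w for w in joined.split(WORD_BOUNDARY) if w)

def convert_kaldi_text_line(line):
    """Convert one 'utt-id WORD WORD ...' line, keeping the utterance ID."""
    parts = line.split(maxsplit=1)
    utt_id = parts[0]
    transcript = parts[1] if len(parts) > 1 else ""
    return f"{utt_id} {words_to_chars(transcript)}"
```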

Retrieve the unique characters from the lexicon:

python prepare.phone.py --path [path-to-your-original-lexicon-file]
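A sketch of collecting the output symbol set and assigning integer IDs. Two choices here are assumptions rather than documented behaviour of `prepare.phone.py`: index 0 is reserved for the CTC blank (under the hypothetical name `<blank>`), and the word-boundary symbol ">" is added explicitly because it never appears inside lexicon words.

```python
def build_vocab(lexicon_lines, boundary=">", blank="<blank>"):
    """Collect unique characters from the lexicon's word column and index them.

    Index 0 is reserved for the CTC blank (an assumption of this sketch);
    the remaining symbols are sorted so the mapping is deterministic.
    """
    chars = set()
    for line in lexicon_lines:
        fields = line.split()
        if fields:
            chars.update(fields[0])  # characters of the word itself
    chars.add(boundary)
    symbols = [blank] + sorted(chars)
    return {sym: idx for idx, sym in enumerate(symbols)}
```

Sorting makes the ID assignment reproducible across runs, which matters because the trained model's output layer is tied to it.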

- Extract features

Link the Kaldi LibriSpeech example directories (`local`, `steps`, `utils`), execute `run.sh` to extract 13-dimensional MFCC features, then run `feature_transform.sh` to get 39-dimensional features.
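Going from 13 to 39 dimensions is commonly done by appending first- and second-order delta (regression) coefficients to the static MFCCs. Assuming that is what `feature_transform.sh` does (it may also apply normalization such as CMVN), the NumPy sketch below uses the standard regression formula over a window of 2 frames, repeating the first and last frames at the edges. The function names are hypothetical.

```python
import numpy as np

def deltas(feats, window=2):
    """Regression deltas: d_t = sum_n n*(c_{t+n} - c_{t-n}) / (2*sum_n n^2).

    feats: (T, D) array; edge frames are repeated for padding.
    """
    T = feats.shape[0]
    padded = np.pad(feats, ((window, window), (0, 0)), mode="edge")
    denom = 2 * sum(n * n for n in range(1, window + 1))
    out = np.zeros_like(feats, dtype=float)
    for n in range(1, window + 1):
        # padded[window + t] corresponds to feats[t]
        out += n * (padded[window + n:window + n + T] - padded[window - n:window - n + T])
    return out / denom

def add_deltas(mfcc, window=2):
    """Stack static, delta and delta-delta features: (T, 13) -> (T, 39)."""
    d1 = deltas(mfcc, window)
    d2 = deltas(d1, window)
    return np.hstack([mfcc, d1, d2])
```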

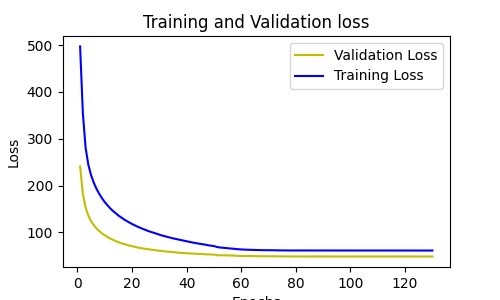

python train_ctc.py --lr 1e-3 --bi --dropout 0.5 --out exp/ctc_bi_lr1e-3 --schedule

python eval.py <path to best model> [--ctc] --bi

| Model | Beam width | CER (%) | WER (%) |
|---|---|---|---|
| CTC | 1 | 30.47 | 35.71 |
| CTC | 3 | 29.65 | 34.86 |
| CTC | 10 | 29.40 | 34.66 |
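CER and WER are both Levenshtein (edit) distances divided by the number of reference tokens, computed over characters and words respectively. The sketch below computes corpus-level rates in percent. It is an illustration, not the scoring code in `eval.py`: in particular, whether spaces or the ">" boundary symbol count toward CER there is not stated, and this sketch simply drops spaces.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two token sequences (unit costs)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,             # deletion
                          curr[j - 1] + 1,         # insertion
                          prev[j - 1] + (r != h))  # substitution or match
        prev = curr
    return prev[-1]

def error_rate(refs, hyps, unit="word"):
    """Corpus-level WER (unit='word') or CER (unit='char') in percent."""
    if unit == "word":
        tokenize = str.split
    else:
        tokenize = lambda s: list(s.replace(" ", ""))  # characters, spaces dropped
    edits = sum(edit_distance(tokenize(r), tokenize(h)) for r, h in zip(refs, hyps))
    total = sum(len(tokenize(r)) for r in refs)
    return 100.0 * edits / total
```

Summing edits over the whole corpus before dividing weights long utterances more heavily than averaging per-utterance rates would.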

- Python 3.6

- PyTorch >= 0.4

- numpy 1.14

- warp-transducer

- RNN Transducer (Graves 2012): Sequence Transduction with Recurrent Neural Networks

- RNNT joint (Graves 2013): Speech Recognition with Deep Recurrent Neural Networks

- [E2E-ASR](https://github.com/HawkAaron/E2E-ASR)

- [CTC Networks and Language Models: Prefix Beam Search Explained](https://medium.com/corti-ai/ctc-networks-and-language-models-prefix-beam-search-explained-c11d1ee23306)