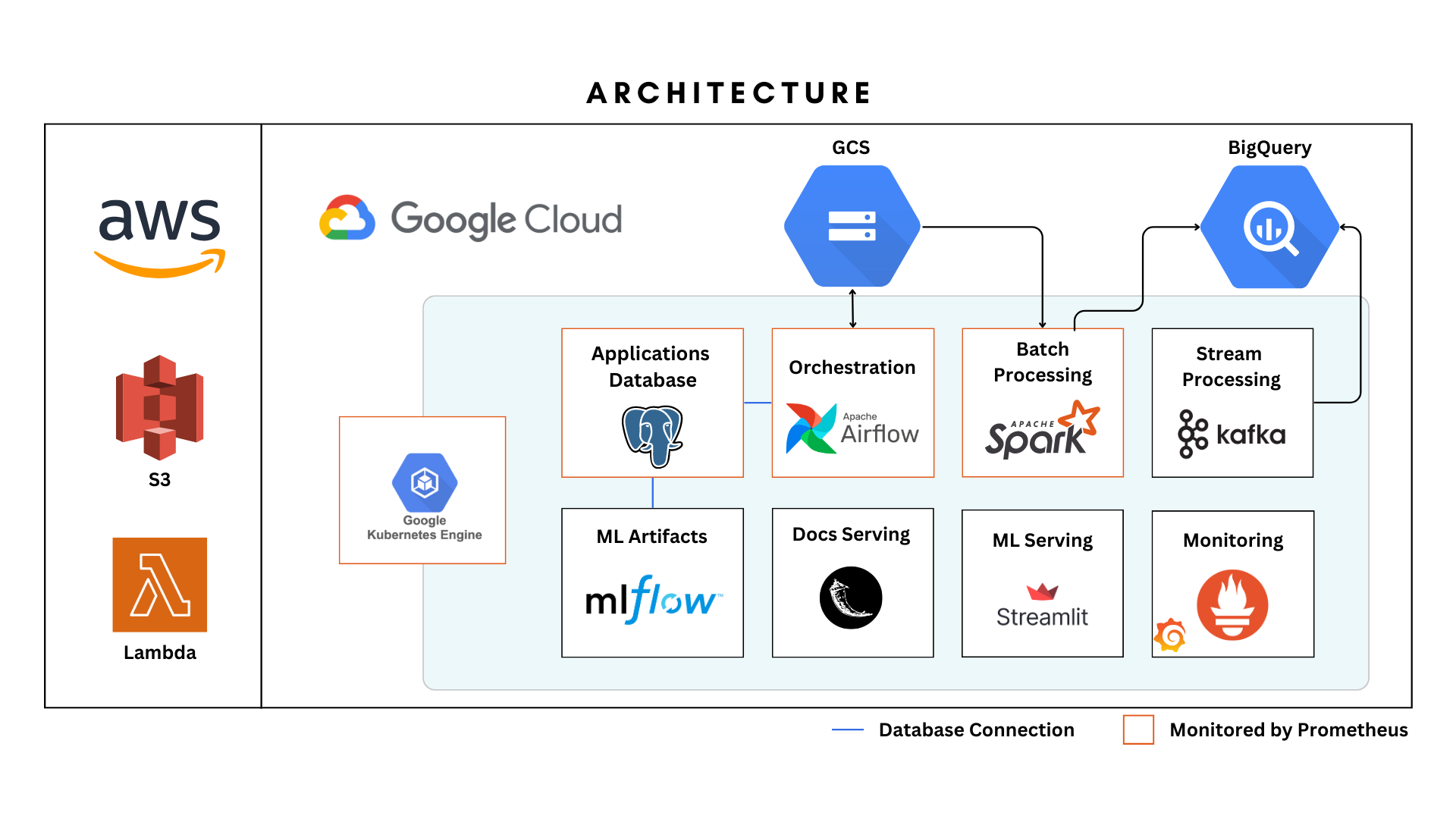

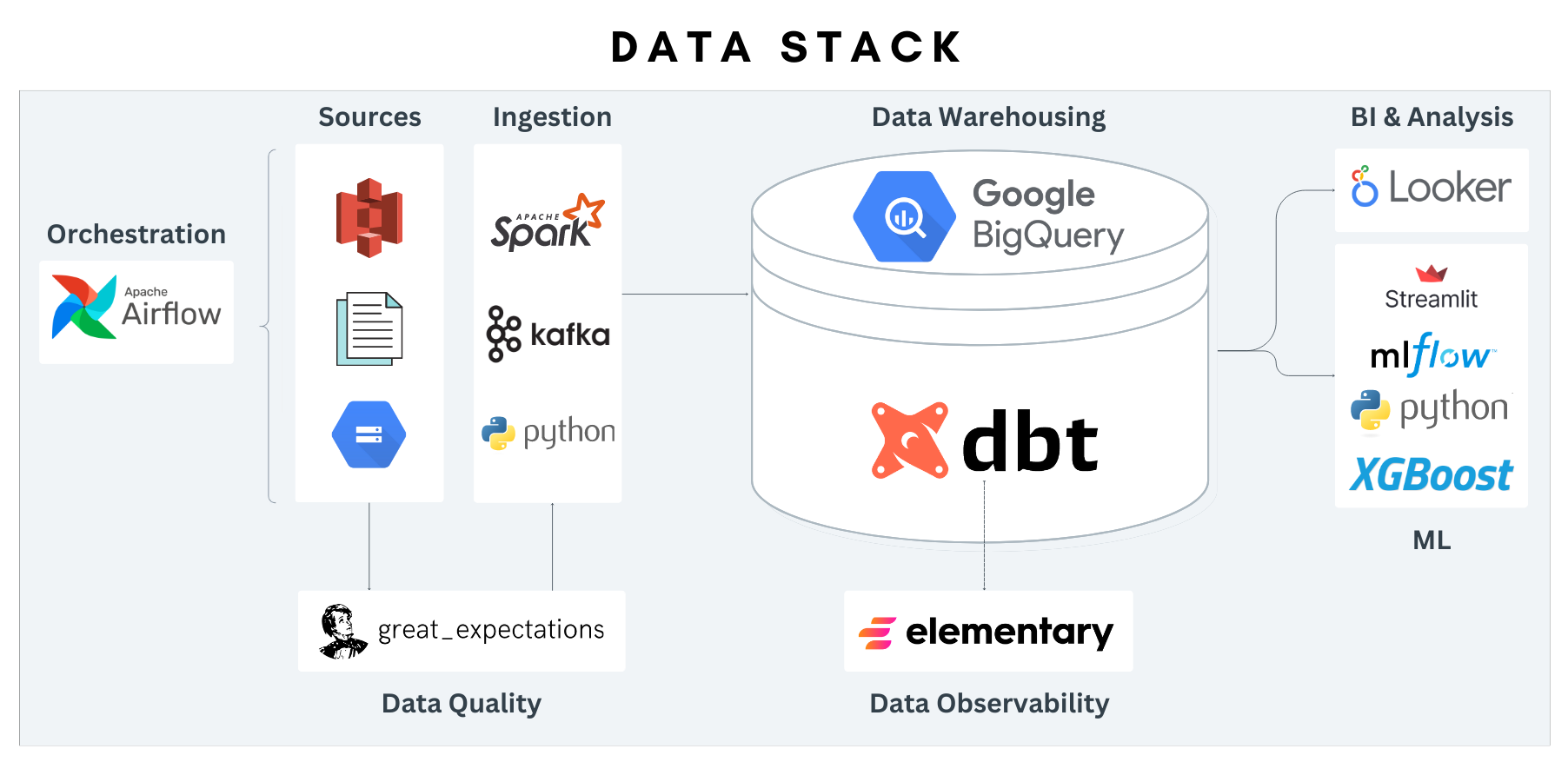

This project showcases the orchestration of a scalable data pipeline using Kubernetes and Apache Airflow. It uses well-known data tools such as Spark, Kafka, and DBT, while maintaining a strong focus on data quality.

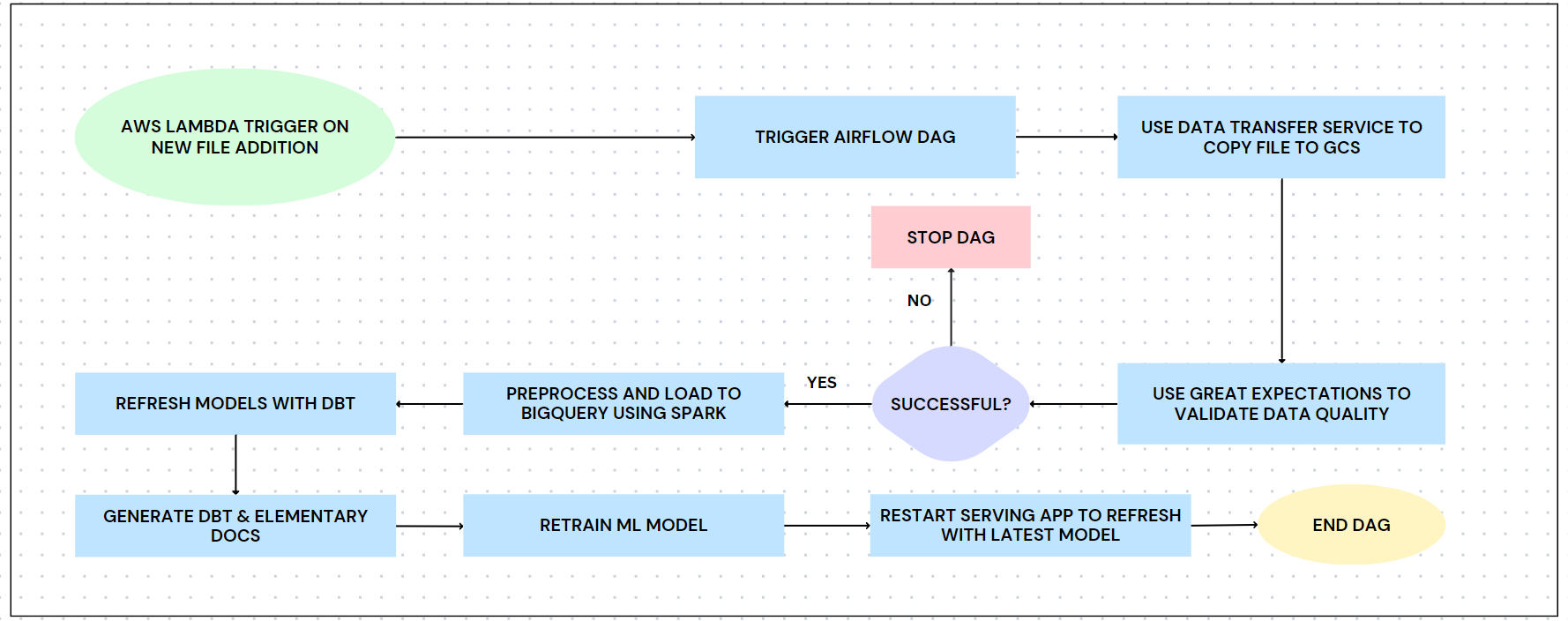

With NYC Taxi data stored in S3 as the starting point, this pipeline goes beyond generating a standard BI dashboard. It also delivers a prediction service and data models tailored for data scientists' needs.

**Note:** The services are hosted using free GCP credits and may not be available once the credits are exhausted.

- Static Docs:
  - Great Expectations: Link | Screenshot
  - DBT Docs: Link | Screenshot
  - Elementary Docs: Link | Elementary Dashboard
- ML Serving/Prediction Service: Link | Screenshot
- MLFlow: Screenshot

Additional documentation is available in the `docs/` directory.

    flowchart LR
        A[GitHub Push / Pull Request]
        A --> |Python File?| B(PyApps CI)
        A --> |Docker Folder Content/ K8 Manifests Changed?| C(Build & Deploy Components)
        A --> |Terraform File Changed?| D(Terraform Infrastructure CD)
        B -- Yes --> E[Run Tests]
        C -- Yes --> F[Build Images]
        D -- Yes --> G[Terraform Actions]
        E --> |Test Successful?| H[Format, Lint, and Sort]
        F --> |Deployment Restart Required?| I[Kubectl rollout restart deployment]
        G --> |Terraform Plan Successful?| J[Terraform Apply]
        E --> K[End]
        H --> K
        F --> |No Deployment Restart Required| K
        I --> K
        G --> |Terraform Plan Unsuccessful| K
        J --> K
        B -- No --> K
        C -- No --> K
        D -- No --> K
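
The routing at the top of the flowchart can be sketched in a few lines of Python. This is only an illustration of the decision logic, not the project's workflow configuration: the `select_workflows` helper and the path rules (a `.py` or `.tf` suffix, top-level `docker/` and `manifests/` folders) are assumptions made for the example, and the real triggers live in the repository's workflow files.

```python
from pathlib import PurePosixPath


def select_workflows(changed_files):
    """Return the set of workflow names that a list of changed paths would trigger."""
    workflows = set()
    for name in changed_files:
        path = PurePosixPath(name)
        if path.suffix == ".py":
            workflows.add("PyApps CI")
        if path.suffix == ".tf":
            workflows.add("Terraform Infrastructure CD")
        # Assumed layout: Docker build contexts under docker/, K8s manifests under manifests/.
        if path.parts and path.parts[0] in {"docker", "manifests"}:
            workflows.add("Build & Deploy Components")
    return workflows


if __name__ == "__main__":
    changed = ["docker/airflow/Dockerfile", "terraform/main.tf"]
    print(sorted(select_workflows(changed)))
```

A change that matches none of the rules returns an empty set, which corresponds to the "No" edges that lead straight to the end node.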

- Flask: Used to serve static documentation websites for DBT, Great Expectations, and Elementary.
- Prometheus: Collects metrics from various sources including Airflow (via Statsd), Spark Applications (via JMX Prometheus Java Agent), Postgres (via prometheus-postgres-exporter), and Kubernetes (via kube-state-metrics).
- Grafana: Utilized to visualize the metrics received by Prometheus. The Grafana folder contains JSON dashboard definitions created to monitor Airflow and Spark.
- Kafka: Integrated as a streaming solution in the project. It reads a file and utilizes its rows as streaming material.
- Streamlit: Used to serve the best xgboost model from the MLFlow Artifact Repository.
- gitsync: Implemented to allow components to directly read their respective code from GitHub without needing to include the code in the Docker image.
- Spark-on-K8s: Utilized to run Spark jobs on Kubernetes.
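
As an illustration of the Kafka component's "file rows as streaming material" idea, here is a minimal sketch that turns CSV rows into JSON messages. It is not the project's producer: the `stream_rows` helper, the `taxi-rides` topic name, and the sample columns are hypothetical, and the actual send is injected as a callable so the example runs without a broker.

```python
import csv
import io
import json


def stream_rows(lines, send, topic="taxi-rides"):
    """Send each CSV row to `send(topic, payload)` as UTF-8 JSON; return the row count."""
    count = 0
    for row in csv.DictReader(lines):
        send(topic, json.dumps(row).encode("utf-8"))
        count += 1
    return count


if __name__ == "__main__":
    # Stand-in for a file handle; with a real file, use open(path, newline="").
    sample = io.StringIO("trip_id,fare\n1,12.5\n2,7.0\n")
    sent = []
    stream_rows(sample, lambda topic, payload: sent.append((topic, payload)))
    print(sent)
```

With the kafka-python package, one way to wire this up would be to create a `KafkaProducer(bootstrap_servers=...)` and pass `lambda topic, payload: producer.send(topic, value=payload)` as `send`. Whether the project uses that client is an assumption.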

In addition to standard unit tests, the project applies the following checks:

- Data quality is validated on ingestion using Great Expectations.
- All data sources and models have dbt expectations data quality tests.
- Models also have unit tests that compare the output for a sample input against an expected output.
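
The sample-input / expected-output style of model test can be sketched as follows. This is not one of the project's tests: the `trips` table, its columns, and the aggregation query are hypothetical, and an in-memory SQLite database stands in for the warehouse the dbt models actually run against.

```python
import sqlite3

# Hypothetical model: average fare per payment type. Table and column names are illustrative.
MODEL_SQL = """
SELECT payment_type, ROUND(AVG(fare_amount), 2) AS avg_fare
FROM trips
GROUP BY payment_type
ORDER BY payment_type
"""


def run_model(sample_rows):
    """Load sample rows into an in-memory table and return the model's output rows."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE trips (payment_type TEXT, fare_amount REAL)")
        conn.executemany("INSERT INTO trips VALUES (?, ?)", sample_rows)
        return conn.execute(MODEL_SQL).fetchall()
    finally:
        conn.close()


def test_avg_fare_model():
    sample_input = [("card", 10.0), ("card", 20.0), ("cash", 5.0)]
    expected_output = [("card", 15.0), ("cash", 5.0)]
    assert run_model(sample_input) == expected_output


if __name__ == "__main__":
    test_avg_fare_model()
    print("model test passed")
```

Keeping the sample small and hand-checkable makes the expected output easy to verify by eye, which is the main appeal of this kind of test.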

An integration test for the lambda function can be found in the tests/integration directory.

- Google Cloud SDK with Kubectl.

- Clone the repository: `git clone https://github.com/Elsayed91/taxi-data-pipeline`
- Rename `template.env` to `.env` and fill in the values. You don't need to fill in the buckets or `AUTH_TOKEN` values.
- Run the project setup script: `make setup`. It prompts you to log in to your gcloud account; once you do, it handles the rest.
- To manually trigger the `batch-dag`: `make trigger_batch_dag`
- To run Kafka: `make run_kafka`
- To destroy the Kafka instance: `make destroy_kafka`
- To run the lambda integration test: `make run_lambda_integration_test`

Note: To use the workflows, add a GCP service account and the contents of your `.env` file to your GitHub Secrets.