Light-weight spatio-temporal graphs for segmentation and ejection fraction prediction in cardiac ultrasound (MICCAI 2022).

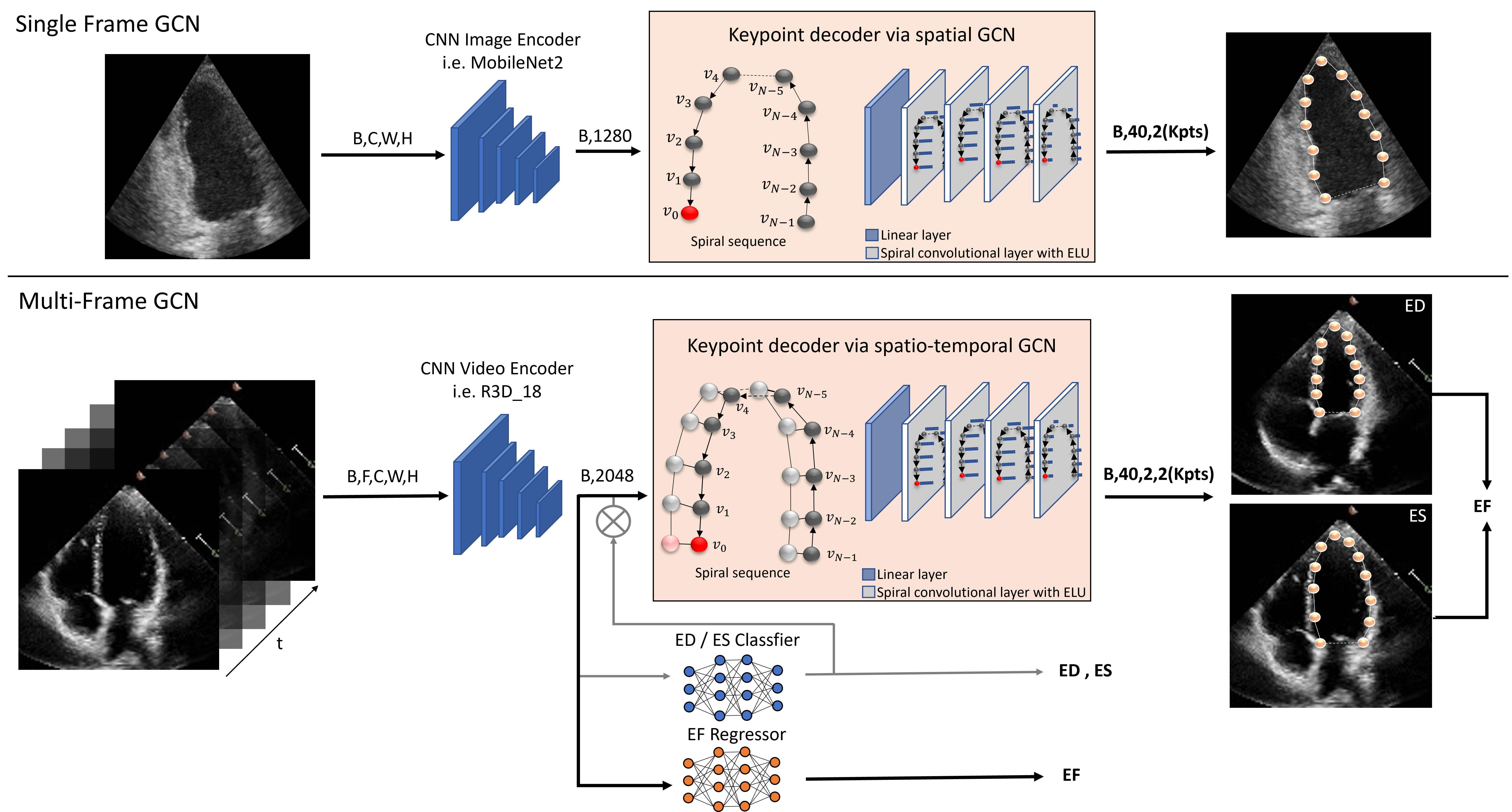

Accurate and consistent predictions of echocardiography parameters are important for cardiovascular diagnosis and treatment. In particular, segmentations of the left ventricle can be used to derive ventricular volume, ejection fraction (EF) and other relevant measurements. In this paper we propose a new automated method called EchoGraphs for predicting ejection fraction and segmenting the left ventricle by detecting anatomical keypoints. Models for direct coordinate regression based on Graph Convolutional Networks (GCNs) are used to detect the keypoints. GCNs can learn to represent the cardiac shape based on the local appearance of each keypoint, as well as the global spatial and temporal structure of all keypoints combined. We evaluate our EchoGraphs model on the EchoNet benchmark dataset. Compared to semantic segmentation, GCNs show accurate segmentation and improvements in robustness and inference run-time. EF is computed simultaneously with the segmentations, and our method also obtains state-of-the-art ejection fraction estimation.
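To make the idea of a graph convolution over contour keypoints concrete, here is a minimal NumPy sketch of one generic GCN propagation step (symmetric-normalized adjacency with self-loops). It is not the EchoGraphs architecture: the closed-ring connectivity, the keypoint count of 40, the feature sizes, and all function names are assumptions chosen for illustration only.

```python
import numpy as np


def ring_adjacency(num_keypoints: int) -> np.ndarray:
    """Adjacency of a closed contour: each keypoint is linked to its two neighbours.

    Assumption: the contour is treated as a closed ring (hypothetical choice).
    """
    adj = np.zeros((num_keypoints, num_keypoints))
    idx = np.arange(num_keypoints)
    nxt = (idx + 1) % num_keypoints
    adj[idx, nxt] = 1.0
    adj[nxt, idx] = 1.0
    return adj


def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Return D^{-1/2} (A + I) D^{-1/2}, the usual GCN normalization."""
    adj_self = adj + np.eye(adj.shape[0])  # add self-loops
    deg = adj_self.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return adj_self * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def graph_conv(features: np.ndarray, adj_norm: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One graph convolution: mix neighbour features, project, apply ReLU."""
    return np.maximum(adj_norm @ features @ weight, 0.0)


# Toy example with random per-keypoint features (all sizes are assumptions).
rng = np.random.default_rng(0)
num_keypoints, in_dim, out_dim = 40, 16, 2
features = rng.normal(size=(num_keypoints, in_dim))
weight = rng.normal(size=(in_dim, out_dim))

adj_norm = normalize_adjacency(ring_adjacency(num_keypoints))
out = graph_conv(features, adj_norm, weight)
print(out.shape)
```

Each output row depends only on a keypoint and its direct neighbours; stacking several such layers lets information travel further along the contour, which is one way a model can combine local appearance with global shape.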

This is the source code for the MICCAI 2022 paper "Light-weight spatio-temporal graphs for segmentation and ejection fraction prediction in cardiac ultrasound".

EchoGraphs provides a framework for graph-based contour detection for medical ultrasound. The repository includes model configurations for

- predicting the contour of the left ventricle in single ultrasound images (single-frame GCN)

- predicting the contours of the ED and ES frames and the corresponding EF value for ultrasound sequences with known ED/ES frames (multi-frame GCN)

- predicting the EF values of arbitrary ultrasound sequences along with the occurrence of ED and ES and the corresponding frames (multi-frame GCN; the extension is indicated in grey in the figure)
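As a point of reference for how an EF value relates to ED and ES contours, the following sketch computes the enclosed area of a keypoint contour with the shoelace formula, converts area to volume with the single-plane area-length approximation, and applies the standard definition EF = 100 * (EDV - ESV) / EDV. This is an illustrative assumption, not the repository's implementation: the function names, the toy contours, and the choice of the area-length formula are hypothetical, and the models described above may predict EF directly.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def polygon_area(contour: Sequence[Point]) -> float:
    """Area enclosed by an ordered, closed contour (shoelace formula)."""
    total = 0.0
    n = len(contour)
    for i in range(n):
        x0, y0 = contour[i]
        x1, y1 = contour[(i + 1) % n]  # wrap around to close the polygon
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


def area_length_volume(area: float, long_axis_length: float) -> float:
    """Single-plane area-length approximation: V = 8 A^2 / (3 pi L)."""
    if long_axis_length <= 0:
        raise ValueError("long_axis_length must be positive")
    return 8.0 * area ** 2 / (3.0 * math.pi * long_axis_length)


def ejection_fraction(edv: float, esv: float) -> float:
    """EF in percent from end-diastolic and end-systolic volumes."""
    if edv <= 0:
        raise ValueError("edv must be positive")
    return 100.0 * (edv - esv) / edv


# Toy rectangular "contours" in arbitrary units (hypothetical data).
ed_contour = [(0.0, 0.0), (4.0, 0.0), (4.0, 8.0), (0.0, 8.0)]
es_contour = [(0.5, 0.0), (3.5, 0.0), (3.5, 7.0), (0.5, 7.0)]

edv = area_length_volume(polygon_area(ed_contour), long_axis_length=8.0)
esv = area_length_volume(polygon_area(es_contour), long_axis_length=7.0)
print(f"EF = {ejection_fraction(edv, esv):.1f}%")
```

In practice the long-axis length would be measured from the contour itself (for example, apex to the midpoint of the base), which depends on the keypoint ordering used by the dataset.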

The proposed methods were trained and evaluated using the EchoNet dataset, which consists of 10,030 echocardiography ultrasound sequences that were de-identified and made publicly available. Use of the dataset requires registration, and the data is shared under a non-commercial data use agreement. Additional information on the data and on regulations can be found on the EchoNet dataset page. If you use the data, please cite the corresponding paper. We provide a preprocessing script to convert the data into formats that are readable by our pipeline (details can be found here).

See INSTALL.md for environment setup.

See GETTING_STARTED.md to get started with training and testing the EchoGraphs model.

These examples show video sequences with model predictions. Although the single-frame EchoGraphs model was trained only on the keyframes ED (end diastole) and ES (end systole), it produces accurate and consistent predictions across all other frames.

If you find this work helpful, please cite it.

@inproceedings{echographs,
  title={Light-weight spatio-temporal graphs for segmentation and ejection fraction prediction in cardiac ultrasound},
  author={Thomas, Sarina and Gilbert, Andrew and Ben-Yosef, Guy},
  booktitle={MICCAI},
  year={2022}
}