A simple and efficient algorithm for spectral analysis of music. Examples for browser and Raspberry Pi are provided.

- Pianolizer app - runs directly in the browser. Also, mobile browser. Chrome is recommended for the best experience.

- Algorithm benchmark - test the speed of the core algorithm, in the browser. WASM results closely match what is expected from the native binary performance, on the same machine. 44100 samples per second is enough for realtime performance. As a matter of fact, one can get decent results with as little as 8000 samples per second (saving both CPU & RAM resources!); however the resampling algorithm implementation is left as an exercise to the reader (that is, for the JS/browser; the

pianolizerCLI utility is meant to be paired with sox).

The C++ implementation should compile just fine on any platform that supports C++14, there are no dependencies as the code uses C++14 standard data types.

It is known to compile & run successfully with Clang, GCC and Emscripten.

The target platform should support double float operations efficiently (in other words, hardware FPU is rather mandatory).

Compile the native binary (AKA the pianolizer CLI utility) and to WebAssembly:

make

Compile only the native binary:

make pianolizer

To compile only to WebAssembly:

make emscripten

Test and benchmark the C++ implementation (optional; depends on GoogleTest):

make test

Delete all the compiled files:

make clean

$ ./pianolizer -h

Usage:

sox -V -d -traw -r44100 -b32 -c1 -efloat - | ./pianolizer | sudo misc/hex2ws281x.py

Options:

-h this

-b buffer size; default: 256

-s sample rate; default: 44100 (Hz)

-p A4 reference frequency; default: 440 (Hz)

-a average window (effectively a low-pass filter for the output); default: 0.04 (seconds; 0 to disable)

Description:

Consumes an audio stream (1 channel, 32-bit float PCM)

and emits the volume levels of 61 notes (from C2 to C7) as a hex string.

The pianolizer CLI utility receives an input stream of following specifications:

Channels : 1

Sample Rate : 44100

Sample Encoding: 32-bit Floating Point PCM

And emits a stream of 122-character hexadecimal strings representing the volume level of the 61 consecutive notes (from C2 to C6):

...

000000000000000000010004020100000000000001010419110101010102182e040203192202040b080201010001000105010901060101000000000000

0000000000000000000100040201000000000000010104151301010101021634030202192202040a070101010001000104010801060101000000000000

00000000000000000000000402010000000000000101031215010101010215380302021922020309060101010001000104010701050101000000000000

000000000000000000000004030100000000000000010310160101010103163a0302021a20020308050101010001000104010601040101000000000000

00000000000000000000000404010000000000000001030e1601010102041839030202181f020307050101000001000103010601040101000000000000

...

Each level uses 2 hexadecimal characters (therefore, the value range is 0-255).

A new string is emitted every 256 samples (adjustable with -b option); that amounts to ~6ms of audio.

sox is recommended to provide the input for pianolizer.

When using a microphone source, sox will insert tiny delays between the samples, so that the audio stream is synchronized with the sample rate.

When decoding an audio file, sox spits out the data as fast as possible.

With that, it should be trivial to convert the pianolizer output into a static spectrogram image.

The included Python script consumes the hexadecimal output of pianolizer and drives a WS2812B LED strip (depends on the rpi_ws281x library).

Conveniently, 1m LED strip with 144 diodes/meter matches precisely the standard piano keyboard dimensions and is enough to cover 61 keys.

Raspberry Pi has no audio input hardware at the time of writing, therefore an expansion board is required. I am using ReSpeaker 2-Mics Pi HAT by Seeed.

Check rc.local for an example of how to drive the LED strip with a microphone. You will probably need to adjust the sample rate and volume in this script, and also the I/O pin number in the Python script.

The main purpose of Pianolizer is music visualization. Because of this, the volume level values are squared (more contrast, less CPU usage) and averaged (effectively, a low-pass filter of the output, otherwise it is unpleasant and potentially harmful to look at, due to flickering). However, the library is modular by design, so you can shuffle things around and implement other stuff like DTMF decoder or even a vocoder (YMMV!).

In a nutshell, first you need to create an instance of SlidingDFT class, which takes an instance of PianoTuning class as a parameter. PianoTuning requires the sample rate parameter. Sample rate should be at least 8kHz.

By default, PianoTuning defines 61 keys (from C2 to C7), with A4 tuned to 440Hz.

Why not 88 keys, like most of the acoustic pianos?

Essentially, it is because the frequencies below C2 (65.4Hz) would require some extra processing to be visualized properly.

Once you have an instance of SlidingDFT, you can start pumping the audio samples into the process method (I recommend doing it in chunks of 128 samples, or more).

process then returns an array of 61 values (or whatever you defined instantiating PianoTuning) ranging from 0.0 to 1.0, each value being the squared amplitude of the fundamental frequency component for that key.

Standard: C++14

- Include pianolizer.hpp in your project. It is reasonably well commented and documented and relevant examples are provided inline.

- benchmark.cpp is a good starting point.

- test.cpp describes the expected behavior of the library.

Standard: ECMAScript 6

- Include pianolizer.js in your project. It is reasonably well commented and documented and relevant examples are provided inline.

- benchmark.js is a good starting point. It runs directly via Node.js (try

node js/benchmark.js). Also check benchmark.html, which works in the browser. - AudioWorkletProcessor compatibility layer can be found in pianolizer-worklet.js. Worklet is set up in index.html.

- visualization.js is what draws the keyboard and the spectrogram.

How/why does this algorithm work? First of all: it is not based on the Fast Fourier Transform (FFT). FFT is awesome, and is indeed the first option for almost anything spectral analysis related. In a nutshell, FFT takes N data points of some signal, and twists them into N frequency bands. Works the best when N is a power of two. FFT uses a brilliant mathematical shortcut which makes this operation go very efficiently. And on top of that, nowadays we have extremely efficient and portable implementations of this algorithm.

But... It is not very suitable for recognizing the musical pitches. Here's why: all the frequency bands from the output of FFT have exactly the same bandwidth. On the other hand, our ears and brains evolved in such a way that 65Hz sounds distinctively different from 98Hz; however 4186Hz sounds barely distinguishable from 4219Hz. Therefore, regardless of our cultural background, musical sounds follow a logarithmic distribution, with the bandwidth of the subsequent notes gradually increasing.

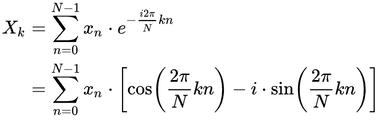

This effectively voids the computational advantage of using FFT for this specific application. Fortunately, the Fourier transform does not need to be a fast one. Let's revisit the basics of the Discrete Fourier Transform (DFT):

The interpretation most relevant for our purposes would be:

It is the cross correlation of the input sequence, xₙ, and a complex sinusoid at frequency k / N. Thus it acts like a matched filter for that frequency. (source: Wikipedia)

Unpacking the above formula to C++ code (JavaScript has no native complex number type):

const complex<double> discreteFourierTransform (

const vector<complex<double> >& x,

const double k, const unsigned N

) {

if (x.size() < N)

throw invalid_argument("x vector should have at least N samples");

const double q = 2. * M_PI * k / N;

complex<double> Xk = complex<double>(0., 0.);

for (unsigned n = 0; n < N; n++)

Xk += x[n] * complex<double>(cos(q * n), -sin(q * n));

return Xk;

}(check the full example)

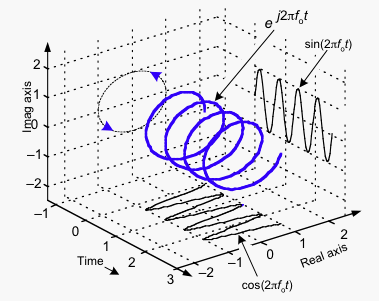

A somewhat free interpretation of what's going on here is: the code generates a template signal and checks it's similarity with the input signal. This template, when plotted, looks exactly like a corkscrew spiral:

It has the length of N data points. The pitch of the corkscrew, which determines the frequency of the template signal, is derived from the k / N ratio. More specifically, this is the relationship between k, N, frequency, bandwidth and the sample rate:

// in Hertz

const double sampleRate = 44100;

const double frequency = 441;

const double bandwidth = 21;

const double k = frequency / bandwidth;

const double N = sampleRate / bandwidth;

assert(k == 21);

assert(N == 2100);With this, we can establish that what we need is to find such pairs of k and N that closely match the frequencies and the bandwidths of the piano notes. I say "closely" because both k and N have to be integers for our specific application case. For the general case, only N has to be an integer (because it represents the amount of the discrete samples) and k can be any number. However, there is a cool computational shortcut that only works for integer values of k; more on this later.

Provided that the sample rate is 44100Hz, this is what we have now (source):

| Note name | Note frequency | Effective frequency | Note bandwidth | Effective bandwidth | Error in cents | k | N |

|---|---|---|---|---|---|---|---|

| A4 | 440.000000 | 439.964789 | 25.785968 | 25.880282 | -0.136552 | 17 | 1704 |

| A#4 | 466.163762 | 466.231343 | 27.319282 | 27.425373 | 0.247378 | 17 | 1608 |

| B4 | 493.883301 | 493.873518 | 28.943771 | 29.051383 | -0.033802 | 17 | 1518 |

| C5 | 523.251131 | 523.168179 | 30.664857 | 30.774599 | -0.270511 | 17 | 1433 |

| C#5 | 554.365262 | 554.511834 | 32.488284 | 32.618343 | 0.451155 | 17 | 1352 |

| D5 | 587.329536 | 587.539185 | 34.420138 | 34.561129 | 0.609089 | 17 | 1276 |

| D#5 | 622.253967 | 622.157676 | 36.466866 | 36.597510 | -0.264051 | 17 | 1205 |

| E5 | 659.255114 | 659.366755 | 38.635299 | 38.786280 | 0.288961 | 17 | 1137 |

| F5 | 698.456463 | 698.695247 | 40.932673 | 41.099720 | 0.583358 | 17 | 1073 |

| F#5 | 739.988845 | 740.078973 | 43.366657 | 43.534057 | 0.207828 | 17 | 1013 |

| G5 | 783.990872 | 784.205021 | 45.945372 | 46.129707 | 0.466095 | 17 | 956 |

| G#5 | 830.609395 | 830.232558 | 48.677426 | 48.837209 | -0.774151 | 17 | 903 |

| A5 | 880.000000 | 879.929577 | 51.571936 | 51.760563 | -0.136552 | 17 | 852 |

Effective frequency & bandwidth are the ones derived from the integer k and N values. Error in cents is the deviation from the original values (0 means "no error"; 100 means "so off that it is literally the next note"; -100 means "so off that it is literally the previous note").

To summarize: for every musical note frequency we want to isolate, for a given sample rate, all we need is the pair of numbers k and N.

We plug the pair, along with the data points/samples themselves, into the discreteFourierTransform() function described above.

And this is where it gets (computationally) complicated: the sum implicit in the DFT is re-done for every frequency!

Fortunately, there is another mathematical shortcut, albeit far less known than the FFT: the Sliding DFT! The Sliding DFT uses it's own previous output as the input for the next iteration. It's mechanism is somewhat related to that of the moving sum/average: for each iteration, add the value of the most recent sample, while subtracting the value of the oldest sample (and this is why k must be an integer, lest the output starts drifting out of phase!).

There's plenty of links related to the details of the Sliding DFT in the references; plus the source code of this very project. Enjoy, and do let me know of your cool projects making the use of this algorithm!

- Speaking Piano - Now with (somewhat decent) captions! - YouTube video.

- Rousseau - YouTube channel.

- Piano LED Visualizer - GitHub repository.

- Music Transcription with Convolutional Neural Networks (Jun 2016) - the method used by AnthemScore (the automatic music transcription app).

- Chopin Mazurka in F minor, op. 68 no. 4 - music used in the demo; performance by Pianotomy.

- Piano key frequencies - Wikipedia article.

- The Fundamentals of FFT-Based Signal Analysis and Measurement - tutorial by National Instruments.

- Sliding DFT - Wikipedia article.

- The Sliding DFT - tutorial featuring GNU Octave/MATLAB implementation.

- The sliding DFT - Published in: IEEE Signal Processing Magazine (Volume: 20, Issue: 2, March 2003).

- Sliding Discrete Fourier Transform - Development & Research in Electro-Acoustic Media page.

- Constant-Q transform - Wikipedia article.

- Computationally Efficient Moving Average for Microcontrollers - fast approximation of moving average.

- Efficiently detecting a frequency using a Goertzel filter - a related technique.

MIT License

Copyright (c) 2022 Stanislaw Pusep