🔥 Restore Anything with Masks: Leveraging Mask Image Modeling for Blind All-in-One Image Restoration (ECCV 2024)

This is the official PyTorch code for the paper.

Restore Anything with Masks: Leveraging Mask Image Modeling for Blind All-in-One Image Restoration

Chujie Qin, Ruiqi Wu, Zikun Liu, Xin Lin, Chunle Guo, Hyun Hee Park, Chongyi Li* (* indicates corresponding author)

European Conference on Computer Vision (ECCV), 2024

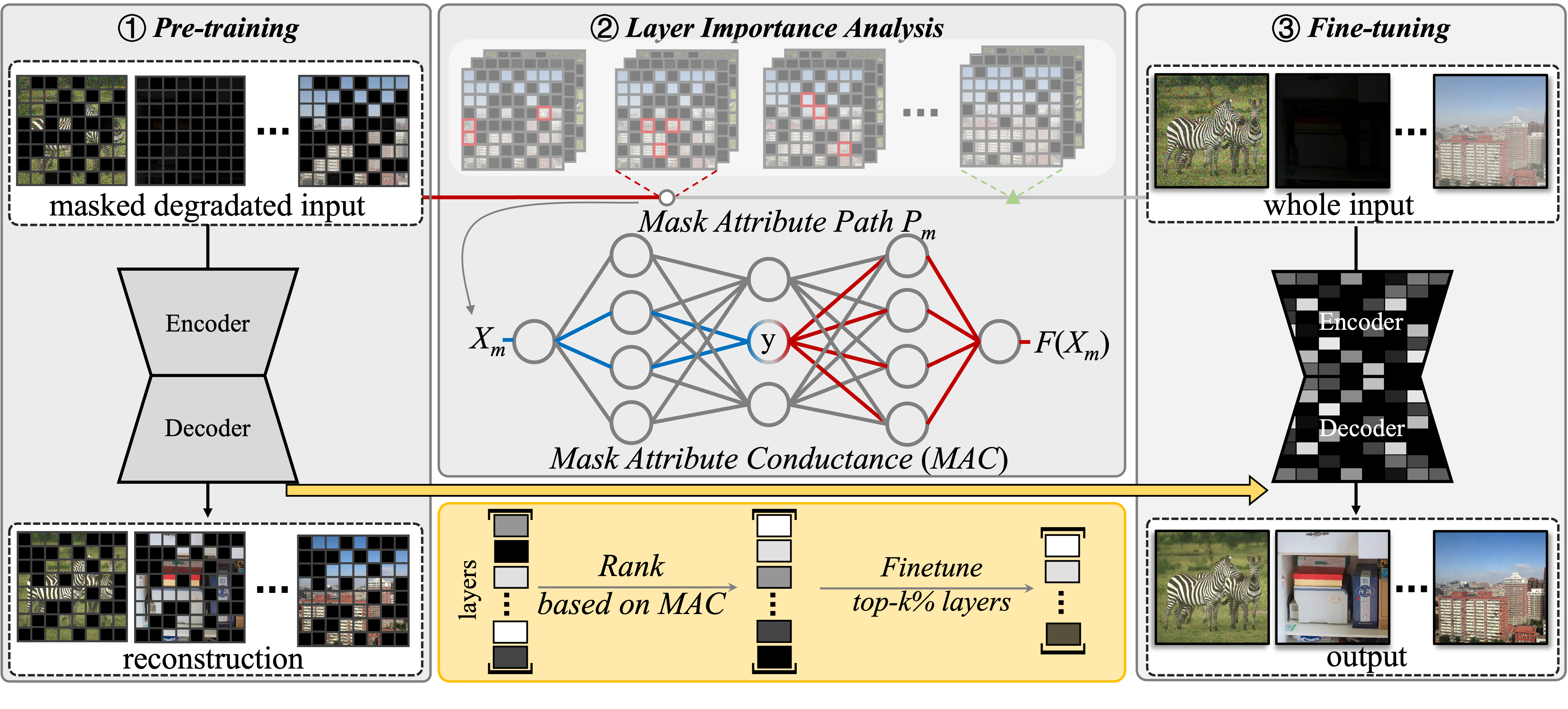

- RAM is a Blind All-In-One Image Restoration framework that handles 7 restoration tasks simultaneously and achieves SOTA performance!

- RAM focuses on extracting image priors, rather than degradation priors, from diverse corrupted images by leveraging Mask Image Modeling.

- Clone and enter our repository:

      git clone https://github.com/Dragonisss/RAM.git RAM
      cd RAM

- Simply run `install.sh` for installation:

      source install.sh

- Activate the environment whenever you test:

      conda activate RAM

Given the number of datasets involved, we plan to offer a unified download link in the future to make it easier to access all datasets.

We combine datasets from various restoration tasks to form the training set. Here are the relevant links for all the datasets used:

| Dataset | Phase | Source | Task |
|---|---|---|---|
| OTS_ALPHA | Train | [Baidu Cloud(f1zz)] | Dehaze |
| Rain-13k | Train & Test | [Google Drive] | Derain |
| LOL-v2 | Train & Test | [Real Subset Baidu Cloud(65ay)] / [Synthetic Subset Baidu Cloud(b14u)] | Low Light Enhancement |
| GoPro | Train & Test | [Download] | Motion Deblur |
| LSDIR | Train & Test | [HomePage] | Denoise DeJPEG DeBlur |
| SOTS | Test | [Download] | Dehaze |
| CBSD68 | Test | [Download] | Denoise |

We recommend using symbolic links, which let you keep the datasets anywhere you prefer.
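A minimal sketch of the symlink approach, assuming the datasets were already downloaded somewhere else on disk. The helper name `link_datasets` and the example source paths are hypothetical and not part of this repository; only the target names under `datasets/` follow the layout used in this README.

```python
import os
from pathlib import Path


def link_datasets(sources, root="datasets"):
    """Create root/<name> symlinks pointing at already-downloaded datasets.

    `sources` maps a target name (e.g. "gopro") to the directory where
    that dataset actually lives. Existing entries are left untouched.
    Returns the list of names for which a link was created.
    """
    root_dir = Path(root)
    root_dir.mkdir(parents=True, exist_ok=True)
    created = []
    for name, src in sources.items():
        src_path = Path(src).expanduser().resolve()
        if not src_path.is_dir():
            raise FileNotFoundError(f"dataset directory not found: {src_path}")
        link = root_dir / name
        if link.exists() or link.is_symlink():
            continue  # never overwrite something that is already there
        os.symlink(src_path, link, target_is_directory=True)
        created.append(name)
    return created


# Example call (hypothetical download locations, replace with your own):
# link_datasets({"gopro": "/data/downloads/GoPro",
#                "rain13k": "/data/downloads/Rain13k"})
```

Calling the helper a second time is harmless, since names that already exist under `datasets/` are skipped.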

The final directory structure will be arranged as:

    datasets
    |- CBSD68
       |- CBSD68
          |- noisy5
          |- noisy10
          |- ...
    |- gopro
       |- test
       |- train
    |- LOL-v2
       |- Real_captured
       |- Synthetic
    |- LSDIR
       |- 0001000
       |- 0002000
       |- ...
    |- OTS_ALPHA
       |- clear
       |- depth
       |- haze
    |- LSDIR-val
       |- 0000001.png
       |- 0000002.png
       |- ...
    |- rain13k
       |- test
       |- train
    |- SOTS
       |- outdoor
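Before training, it can help to confirm that the layout above is in place. The following is a small sketch, not a script shipped with the repository: the function name `missing_dataset_dirs` is hypothetical, and it assumes the sub-directories listed under each dataset are nested inside that dataset's folder. Entries shown as `...` and individual image files are not checked.

```python
from pathlib import Path

# Sub-directories taken from the listing above; "..." entries are skipped.
EXPECTED_LAYOUT = {
    "CBSD68": ["CBSD68"],
    "gopro": ["test", "train"],
    "LOL-v2": ["Real_captured", "Synthetic"],
    "LSDIR": [],
    "OTS_ALPHA": ["clear", "depth", "haze"],
    "LSDIR-val": [],
    "rain13k": ["test", "train"],
    "SOTS": ["outdoor"],
}


def missing_dataset_dirs(root="datasets"):
    """Return the expected directories that do not exist under `root`."""
    root_dir = Path(root)
    missing = []
    for name, children in EXPECTED_LAYOUT.items():
        if not (root_dir / name).is_dir():
            missing.append(name)
            continue  # no point in checking children of a missing folder
        for child in children:
            if not (root_dir / name / child).is_dir():
                missing.append(f"{name}/{child}")
    return missing
```

An empty return value means every listed directory was found. Symbolic links to directories pass the check as well, because `Path.is_dir()` follows links.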

Our pipeline can be applied to any image restoration network. We provide the pre-trained and fine-tuned model files for SwinIR and PromptIR mentioned in the paper:

| Method | Phase | Framework | Download Links | Config File |
|---|---|---|---|---|
| RAM | Pretrain | SwinIR | [GoogleDrive(TBD)] | [options/RAM_SwinIR/ram_swinir_pretrain.yaml] |
| RAM | Finetune | SwinIR | [GoogleDrive(TBD)] | [options/RAM_SwinIR/ram_swinir_finetune.yaml] |
| RAM | Pretrain | PromptIR | [GoogleDrive(TBD)] | [options/RAM_PromptIR/ram_promptir_pretrain.yaml] |
| RAM | Finetune | PromptIR | [GoogleDrive(TBD)] | [options/RAM_PromptIR/ram_promptir_finetune.yaml] |
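If you drive training from your own scripts, the config column of the table can be written as a small lookup. This is only an illustrative sketch: the `CONFIGS` mapping and the `config_for` helper are hypothetical names, while the paths are copied from the table.

```python
# (framework, phase) -> config file, copied from the table above.
CONFIGS = {
    ("SwinIR", "Pretrain"): "options/RAM_SwinIR/ram_swinir_pretrain.yaml",
    ("SwinIR", "Finetune"): "options/RAM_SwinIR/ram_swinir_finetune.yaml",
    ("PromptIR", "Pretrain"): "options/RAM_PromptIR/ram_promptir_pretrain.yaml",
    ("PromptIR", "Finetune"): "options/RAM_PromptIR/ram_promptir_finetune.yaml",
}


def config_for(framework, phase):
    """Return the config path for a framework/phase pair from the table."""
    try:
        return CONFIGS[(framework, phase)]
    except KeyError:
        raise ValueError(f"no config listed for {framework} / {phase}") from None
```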

We provide a script for running inference on your own images: `inference/inference.py`.

Run `python inference/inference.py --help` for details on its options.

Before proceeding, please ensure that the relevant datasets have been prepared as required.

1. Pretraining with MIM

We use the collected datasets for model training. First, run the following command:

    python -m torch.distributed.launch \
    --nproc_per_node=[num of gpus] \
    --master_port=[PORT] ram/train.py \
    -opt [OPT] \
    --launcher pytorch

    # e.g.
    python -m torch.distributed.launch \
    --nproc_per_node=8 \
    --master_port=4321 ram/train.py \
    -opt options/RAM_SwinIR/ram_swinir_pretrain.yaml \
    --launcher pytorch

2. Mask Attribute Conductance Analysis

We use the proposed Mask Attribute Conductance (MAC) Analysis to analyze the importance of different layers for finetuning. You can run the following command to conduct MAC analysis:

    python scripts/mac_analysis.py -opt [OPT] --launcher pytorch

    # e.g.
    python scripts/mac_analysis.py \
    -opt options/RAM_SwinIR/ram_swinir_mac.yml --launcher pytorch

For convenience, we provide the analysis results for the two models mentioned in the paper, RAM-SwinIR and RAM-PromptIR. You can find them in `./mac_analysis_result/`.
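To illustrate how a per-layer importance ranking could be turned into a list of layers to finetune, here is a minimal sketch. It is not the repository's implementation: the function `select_layers`, the layer names, the scores, and the `ratio` value are all hypothetical, and the format of the files in `./mac_analysis_result/` is not described in this README.

```python
import math


def select_layers(scores, ratio=0.3):
    """Return the names of the highest-scoring layers.

    `scores` maps a layer name to an importance score (higher means
    more important). `ratio` is the fraction of layers to keep,
    rounded up to a whole number of layers.
    """
    if not 0 < ratio <= 1:
        raise ValueError("ratio must be in (0, 1]")
    k = math.ceil(len(scores) * ratio)
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k]


# Hypothetical scores for four layers; real values would come from
# the MAC analysis output.
example_scores = {"layers.0": 0.1, "layers.1": 0.9, "layers.2": 0.5, "layers.3": 0.3}
selected = select_layers(example_scores, ratio=0.5)
```

With these example scores, `selected` holds the two top-ranked layers, `layers.1` and `layers.2`; the remaining layers would stay frozen during finetuning.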

3. Finetuning

Run the following command with a finetuning config as `[OPT]`:

    python -m torch.distributed.launch --nproc_per_node=[num of gpus] --master_port=4321 ram/train.py \
    -opt [OPT] --launcher pytorch

You can also set `CUDA_VISIBLE_DEVICES=` to choose the GPUs you want to use.
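The launch commands above can also be assembled from Python, which keeps the GPU selection and the process count in sync. This is a sketch under stated assumptions: the helper names `build_launch_command` and `launch` are hypothetical, and GPU selection relies on the standard CUDA environment variable `CUDA_VISIBLE_DEVICES`. The command-line arguments mirror the ones shown in this README.

```python
import os
import subprocess
import sys


def build_launch_command(opt, num_gpus, port=4321, script="ram/train.py"):
    """Assemble the torch.distributed.launch command used in this README."""
    return [
        sys.executable, "-m", "torch.distributed.launch",
        f"--nproc_per_node={num_gpus}",
        f"--master_port={port}",
        script,
        "-opt", opt,
        "--launcher", "pytorch",
    ]


def launch(opt, gpu_ids, port=4321, dry_run=False):
    """Run training on the given GPU ids via CUDA_VISIBLE_DEVICES.

    With dry_run=True nothing is executed; the command and the value of
    CUDA_VISIBLE_DEVICES are returned for inspection.
    """
    visible = ",".join(str(i) for i in gpu_ids)
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=visible)
    cmd = build_launch_command(opt, num_gpus=len(gpu_ids), port=port)
    if not dry_run:
        subprocess.run(cmd, env=env, check=True)  # raises if training fails
    return cmd, visible
```

For example, `launch("options/RAM_SwinIR/ram_swinir_finetune.yaml", [0, 1], dry_run=True)` returns a command with `--nproc_per_node=2` and the device string `0,1` without starting any process.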

We provide a script for fast evaluation:

    python -m torch.distributed.launch \
    --nproc_per_node=1 \
    --master_port=[PORT] ram/test.py \
    -opt [OPT] --launcher pytorch

To benchmark the performance of RAM on the test datasets, run the following commands:

    # RAM-SwinIR
    python -m torch.distributed.launch \
    --nproc_per_node=1 \
    --master_port=4321 ram/test.py \
    -opt options/test/ram_swinir_benchmark.yml --launcher pytorch

    # RAM-PromptIR
    python -m torch.distributed.launch \
    --nproc_per_node=1 \
    --master_port=4321 ram/test.py \
    -opt options/test/ram_promptir_benchmark.yml --launcher pytorch

If you find our repo useful for your research, please consider citing our paper:

    @misc{qin2024restoremasksleveragingmask,
        title={Restore Anything with Masks: Leveraging Mask Image Modeling for Blind All-in-One Image Restoration},
        author={Chu-Jie Qin and Rui-Qi Wu and Zikun Liu and Xin Lin and Chun-Le Guo and Hyun Hee Park and Chongyi Li},
        year={2024},
        eprint={2409.19403},
        archivePrefix={arXiv},
        primaryClass={cs.CV},
        url={https://arxiv.org/abs/2409.19403},
    }

For technical questions, please contact chujie.qin[AT]mail.nankai.edu.cn