MDP implementation reference code

This implementation relies on the pymdptoolbox toolkit.

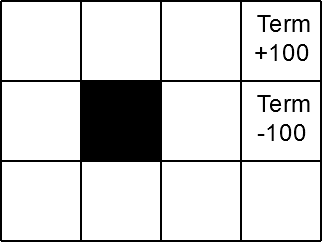

In this practical exercise, we look at how to implement MDP planning in a mathematical toolkit and track how the value (expected reward) of each state is calculated by Value Iteration. The following code sets up an MDP environment (the basic case shown in class, see the accompanying figure) and computes the policy for that MDP using Value Iteration.
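To make the algorithm concrete before opening the notebook, here is a minimal pure-Python sketch of Value Iteration on a toy MDP. It illustrates the technique only and is not the toolkit's implementation. The two-state MDP, the function name `value_iteration`, and the stopping threshold `epsilon` are assumptions made for this example and do not come from the notebook.

```python
# Toy MDP (hypothetical, NOT the grid from class): 2 states, 2 actions.
# P[a][s][s2] is the probability of moving from s to s2 under action a.
P = [
    [[0.9, 0.1], [0.0, 1.0]],  # action 0
    [[0.2, 0.8], [0.0, 1.0]],  # action 1
]
# R[s][a] is the immediate reward for taking action a in state s.
R = [[0.0, 0.0], [1.0, 1.0]]


def value_iteration(P, R, discount, epsilon=1e-6, max_iter=1000):
    """Repeat Bellman backups until the largest value change is below epsilon."""
    n_actions, n_states = len(P), len(P[0])
    V = [0.0] * n_states
    for _ in range(max_iter):
        # Q[s][a]: reward now plus discounted expected value of the next state.
        Q = [[R[s][a] + discount * sum(P[a][s][s2] * V[s2] for s2 in range(n_states))
              for a in range(n_actions)]
             for s in range(n_states)]
        new_V = [max(q) for q in Q]
        delta = max(abs(n - o) for n, o in zip(new_V, V))
        V = new_V
        if delta < epsilon:
            break
    # Greedy policy with respect to the last Q-values (ties go to the lower index).
    policy = [max(range(n_actions), key=lambda a: Q[s][a]) for s in range(n_states)]
    return V, policy


values, policy = value_iteration(P, R, discount=0.9)
print(values, policy)
```

Changing `discount` in the last call is a quick way to see how the values and the greedy policy respond on this toy problem.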

Then we provide a set of questions for you to implement and answer. This assignment is not graded.


Study the code of the MDP notebook and answer the following questions.

- What is the policy generated if we change the discount factor of the grid domain to 0.1?

- Add the line `vi.verbose = True` before `vi.run()`. What is the variation for each of the first three iterations with a discount factor of 0.9, and how many iterations does the algorithm take to converge?

- How do changes to the discount factor affect the variation of the state values over time?
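As a rough illustration of what a per-iteration "variation" can mean, the sketch below records how much the state values change on each sweep of Value Iteration. It assumes the variation is the span (maximum minus minimum) of the change in values between consecutive iterations; check the toolkit's own output and documentation to confirm that this matches what it reports. The two-state MDP and the function name `variations` are hypothetical and unrelated to the grid domain.

```python
def variations(P, R, discount, n_iter):
    """Return the span of the value change for each of the first n_iter sweeps."""
    n_actions, n_states = len(P), len(P[0])
    V = [0.0] * n_states
    history = []
    for _ in range(n_iter):
        # One Bellman backup over all states.
        new_V = [max(R[s][a] + discount * sum(P[a][s][t] * V[t] for t in range(n_states))
                     for a in range(n_actions))
                 for s in range(n_states)]
        diff = [n - o for n, o in zip(new_V, V)]
        # Assumed definition of variation: span of the change in values.
        history.append(max(diff) - min(diff))
        V = new_V
    return history


# Hypothetical two-state chain (not the grid from the notebook).
# P[a][s][t]: transition probability; R[s][a]: immediate reward.
P = [[[0.5, 0.5], [0.0, 1.0]],
     [[1.0, 0.0], [0.0, 1.0]]]
R = [[0.0, 0.0], [1.0, 1.0]]

history = variations(P, R, discount=0.9, n_iter=3)
print(history)
```

Rerunning the last two lines with a different `discount` lets you compare the sequences yourself.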


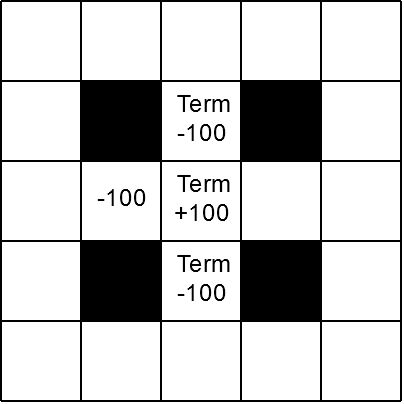

The scenario shown in the figure has an interesting structure: the positively rewarding terminal state is partially surrounded by negatively rewarding states. Program this scenario in pymdptoolbox and compute the optimal policy with a discount factor of 0.99.

- Define two new 5 by 5 scenarios with multiple obstacles and an interesting geometry following the guidelines below. Calculate the policy with discount factor 0.99, and then try to explain intuitively the reason for the resulting policies, given the initial parameters. These two scenarios must have the following characteristics:

- A scenario with one (or more) terminal states with positive rewards, at least one other state with a negative reward of the same magnitude, and no terminal states with negative rewards.

- A scenario with one terminal state with a negative reward and at least one non-terminal state with a positive reward.
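When defining your own grids, it can help to see one possible way of turning a grid description into transition and reward tables. The sketch below is a simplified illustration under loud assumptions: moves are deterministic (the case shown in class may not be), obstacles and terminal states are modelled as self-loops, and rewards are attached to states. The helper `build_grid_mdp` and all of its parameters are hypothetical; the notebook's own encoding may differ, so treat this as a starting point rather than the expected solution.

```python
# Action order fixes the first index of P: up=0, down=1, left=2, right=3.
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def build_grid_mdp(rows, cols, obstacles, terminals, rewards, step_reward=0.0):
    """Encode a deterministic grid world as P[a][s][s2] and a per-state reward list R[s]."""
    n = rows * cols

    def index(r, c):
        return r * cols + c

    P = [[[0.0] * n for _ in range(n)] for _ in ACTIONS]
    R = [step_reward] * n
    for (r, c), value in rewards.items():
        R[index(r, c)] = value

    for a, (dr, dc) in enumerate(ACTIONS.values()):
        for r in range(rows):
            for c in range(cols):
                s = index(r, c)
                nr, nc = r + dr, c + dc
                blocked = (not (0 <= nr < rows and 0 <= nc < cols)
                           or (nr, nc) in obstacles)
                if (r, c) in terminals or (r, c) in obstacles or blocked:
                    P[a][s][s] = 1.0  # assumption: stay in place
                else:
                    P[a][s][index(nr, nc)] = 1.0
    return P, R


# Tiny 2x2 example: an obstacle at (0, 1) and a rewarding terminal at (1, 1).
P, R = build_grid_mdp(2, 2, obstacles={(0, 1)}, terminals={(1, 1)},
                      rewards={(1, 1): 1.0})
```

Every row of every `P[a]` sums to 1, which is what a stochastic transition matrix requires. Note that with self-looping terminal states the terminal reward is collected on every step, which may not match how the notebook treats terminals.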

In LAPRO you can just run (for Linux):

jupyter notebook

For Windows, execute Jupyter Notebook from the Start menu. Open the given URL in a browser and navigate to the folder of the cloned repository of this assignment.

Conda is required to run this assignment, and will install Jupyter for you. The following sequence of steps creates a virtual environment and installs the required dependencies for Python 3.6:

conda create -n py36_heu python=3.6

source activate py36_heu # For Windows: conda activate py36_heu

pip install ipykernel

python -m ipykernel install --name py36_heu

Corrections: From time to time, students or staff find errors (e.g., typos, unclear instructions, etc.) in the assignment specification. In that case, a corrected version of this file will be produced, announced, and distributed for you to commit and push into your repository. Because of that, you are NOT to modify this file in any way to avoid conflicts.

Late submissions & extensions: You have a 24 hour grace period with a penalty of 10% of the maximum mark, which increases to 50% until 48 hours after the due date, and 100% penalty thereafter. Extensions will only be permitted in exceptional circumstances.

About this repo: You must ALWAYS keep your fork private and never share it with anybody in or outside the course, even after the course is completed. You are not allowed to make another repository copy outside the provided GitHub Classroom without the written permission of the teaching staff.

Please do not distribute or post solutions to any of the projects and notebooks.

Collaboration Policy: You must work on this project individually. You are free to discuss high-level design issues with the people in your class, but every aspect of your actual formalisation must be entirely your own work. Furthermore, there can be no textual similarities in the reports generated by each group. Plagiarism, no matter the degree, will result in forfeiture of the entire grade of this assignment.

We are here to help!: We are here to help you! But we don't know you need help unless you tell us. We expect reasonable effort from your side, but if you get stuck or have doubts, please seek help by creating an issue in the repository and assigning it to the instructor. Always keep the most up-to-date version of your code pushed to Git so that when you create an issue, the teaching staff can look into your code to help.

Silence Policy: A silence policy will take effect 48 hours before this assignment is due. This means that no question about this assignment will be answered, whether it is asked on Moodle, by email, or in person. Use the last 48 hours to wrap up and finish your project quietly and as well as possible, if you have not done so already. Remember that it is not mandatory to get everything perfect; try to cover as much as possible. By having some silence we reduce anxiety, last-minute mistakes, and unreasonable expectations on others.

Please remember to follow all the submission steps as per project specification.