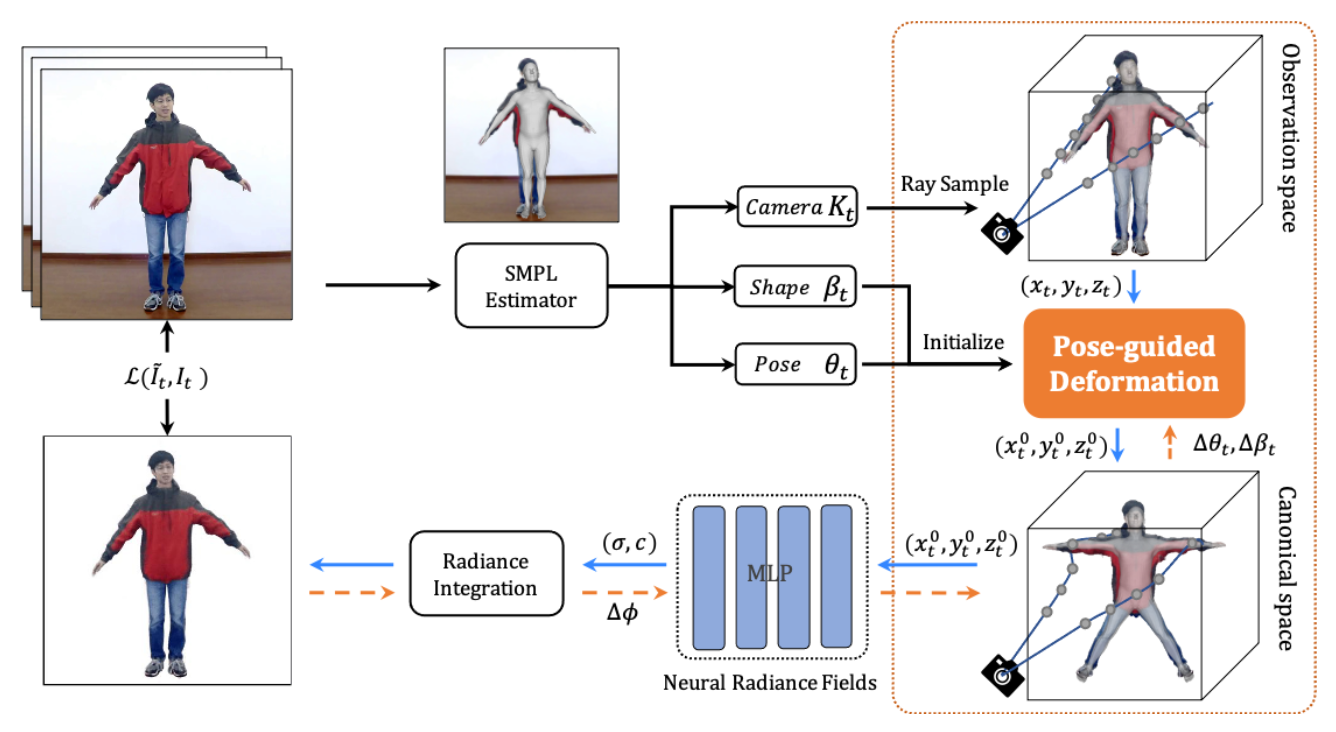

Animatable Neural Radiance Fields from Monocular RGB Videos

Github | Paper


Jianchuan Chen, Ying Zhang, Di Kang, Xuefei Zhe, Linchao Bao, Xu Jia, Huchuan Lu

Demos

For more demos, please see Demos.

Requirements

For visualization

- pyrender

- Trimesh

- PyMCubes

Run the following code to install all pip packages:

pip install -r requirements.txt

To install KNN_CUDA, we provide two ways:

- from source

git clone https://github.com/unlimblue/KNN_CUDA.git
cd KNN_CUDA
make && make install

- from wheel

pip install --upgrade https://github.com/unlimblue/KNN_CUDA/releases/download/0.2/KNN_CUDA-0.2-py3-none-any.whl
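After installing, a quick check that the packages can be found may save a failed run later. The sketch below is not part of the repository. The import names (`pyrender`, `trimesh`, `mcubes`, `knn_cuda`) are assumptions based on how these packages are usually imported, and `find_missing` is a hypothetical helper.

```python
import importlib.util

# Import names are assumptions: pip package names and import names can differ
# (for example, PyMCubes is usually imported as `mcubes`).
REQUIRED_MODULES = ["pyrender", "trimesh", "mcubes", "knn_cuda"]


def find_missing(modules):
    """Return the module names that cannot be located in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All visualization and KNN dependencies were found.")
```

This only confirms that the modules can be located; it does not verify that KNN_CUDA was built against a working CUDA toolchain.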

SMPL models

To download the SMPL models (male, female and neutral), go to the SMPL project website.

Place them as follows:

smplx
└── models
    └── smpl
        ├── SMPL_FEMALE.pkl
        ├── SMPL_MALE.pkl
        └── SMPL_NEUTRAL.pkl

Data Preparation
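The preparation tools receive the SMPL models through `--model_path`, so before running them it can help to confirm that the three files sit where the layout above puts them. This is a minimal sketch, not part of the repository: `check_smpl_models` is a hypothetical helper, and the root folder `smplx` is assumed to be relative to the current directory.

```python
from pathlib import Path

# File names come from the layout above; the helper itself is hypothetical.
EXPECTED_FILES = ("SMPL_FEMALE.pkl", "SMPL_MALE.pkl", "SMPL_NEUTRAL.pkl")


def check_smpl_models(root):
    """Return the expected SMPL files that are missing under <root>/models/smpl."""
    smpl_dir = Path(root) / "models" / "smpl"
    return [name for name in EXPECTED_FILES if not (smpl_dir / name).is_file()]


if __name__ == "__main__":
    missing = check_smpl_models("smplx")
    print("Missing SMPL files:", missing if missing else "none")
```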

People-Snapshot datasets

- Prepare images and SMPL parameters

python -m tools.people_snapshot --data_root ${path_to_people_snapshot_datasets} --people_ID male-3-casual --gender male --output_dir data/people_snapshot

- Prepare template

python -m tools.prepare_template --data_root data/people_snapshot --people_ID male-3-casual --model_type smpl --gender male --model_path ${path_to_smpl_models}

iPER datasets or Custom datasets

- Prepare images

First convert the video to images, then crop the images so that the person is as centered as possible.

python -m tools.video_to_images --vid_file ${path_to_iper_datasets}/023_1_1.mp4 --output_folder data/iper/iper_023_1_1/cam000/images --img_wh 800 800 --offsets -30 20

- Image segmentation

Here, we use RVM to extract the foreground mask of the person.

python -m tools.rvm --images_folder data/iper/iper_023_1_1/cam000/images --output_folder data/iper/iper_023_1_1/cam000/images

- SMPL estimation

In our experiments, we use VIBE to estimate the SMPL parameters.

python -m tools.vibe --images_folder data/iper/iper_023_1_1/cam000/images --output_folder data/iper/iper_023_1_1

Then convert vibe_output.pkl to the format used in our experiments.

python -m tools.convert_vibe --data_root data/iper --people_ID iper_023_1_1 --gender neutral

- Prepare template

python -m tools.prepare_template --data_root data/iper --people_ID iper_023_1_1 --model_type smpl --gender neutral --model_path ${path_to_smpl_models}
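The steps above for an iPER or custom sequence have to run in order, and they share the same folders. The sketch below builds the five commands from a few inputs so the paths stay consistent. It reuses only the modules and flags shown above. `iper_commands` is a hypothetical helper, the `cam000/images` layout is copied from the example, and the video and model paths in the usage part are placeholders.

```python
import shlex


def iper_commands(video, people_id, smpl_models, data_root="data/iper",
                  img_wh=(800, 800), offsets=(-30, 20), gender="neutral"):
    """Build the five preparation commands, in order, as argv lists."""
    seq_dir = f"{data_root}/{people_id}"
    images = f"{seq_dir}/cam000/images"
    py = ["python", "-m"]
    return [
        # 1. Video to cropped frames.
        py + ["tools.video_to_images", "--vid_file", video,
              "--output_folder", images,
              "--img_wh", *map(str, img_wh),
              "--offsets", *map(str, offsets)],
        # 2. Foreground masks with RVM.
        py + ["tools.rvm", "--images_folder", images, "--output_folder", images],
        # 3. SMPL estimation with VIBE.
        py + ["tools.vibe", "--images_folder", images, "--output_folder", seq_dir],
        # 4. Convert the VIBE output.
        py + ["tools.convert_vibe", "--data_root", data_root,
              "--people_ID", people_id, "--gender", gender],
        # 5. Prepare the template.
        py + ["tools.prepare_template", "--data_root", data_root,
              "--people_ID", people_id, "--model_type", "smpl",
              "--gender", gender, "--model_path", smpl_models],
    ]


if __name__ == "__main__":
    # Placeholder paths; replace them with real locations.
    for cmd in iper_commands("path/to/023_1_1.mp4", "iper_023_1_1", "path/to/smpl_models"):
        print(shlex.join(cmd))
```

To execute instead of print, each list can be passed to `subprocess.run(cmd, check=True)` from the repository root, so that a failing step stops the chain.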

Training

- Training on the training frames

python train.py --cfg_file configs/people_snapshot/male-3-casual.yml

- Fine-tuning the SMPL parameters on the testing frames

python train.py --cfg_file configs/people_snapshot/male-3-casual_refine.yml train.ckpt_path checkpoints/male-3-casual/last.ckpt
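The second stage depends on the checkpoint written by the first, so the two commands belong together. As a sketch, they can be generated from a subject ID. The naming pattern (`configs/<dataset>/<id>.yml`, `<id>_refine.yml`, `checkpoints/<id>/last.ckpt`) is an assumption generalized from the single example above, and `training_commands` is a hypothetical helper.

```python
def training_commands(people_id, dataset="people_snapshot"):
    """Return the train and refine commands for one subject, in run order."""
    cfg = f"configs/{dataset}/{people_id}.yml"
    refine_cfg = f"configs/{dataset}/{people_id}_refine.yml"
    # Assumed location of the checkpoint produced by the first stage.
    ckpt = f"checkpoints/{people_id}/last.ckpt"
    return [
        ["python", "train.py", "--cfg_file", cfg],
        ["python", "train.py", "--cfg_file", refine_cfg, "train.ckpt_path", ckpt],
    ]


if __name__ == "__main__":
    for cmd in training_commands("male-3-casual"):
        print(" ".join(cmd))
```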

We provide the preprocessed data and pretrained models here.

Visualization

Novel view synthesis

python novel_view.py --ckpt_path checkpoints/male-3-casual/last.ckpt

3D reconstruction

python extract_mesh.py --ckpt_path checkpoints/male-3-casual/last.ckpt

Shape Editing

- To make the person look fatter, reduce the betas_2th parameter.

python novel_view.py --ckpt_path checkpoints/male-3-casual/last.ckpt --betas_2th -2.0

- In contrast, we can increase the betas_2th parameter to make the person look slimmer.

python novel_view.py --ckpt_path checkpoints/male-3-casual/last.ckpt --betas_2th 3.0
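To compare several body shapes side by side, the same command can be generated for a range of `betas_2th` values. This is a minimal sketch using only the flags shown above. `shape_sweep` is a hypothetical helper, and the chosen values are illustrative rather than recommended.

```python
def shape_sweep(ckpt_path, values):
    """Return one novel_view.py command per betas_2th value."""
    return [
        ["python", "novel_view.py", "--ckpt_path", ckpt_path, "--betas_2th", str(v)]
        for v in values
    ]


if __name__ == "__main__":
    # Lower values look fatter and higher values look slimmer, per the notes above.
    for cmd in shape_sweep("checkpoints/male-3-casual/last.ckpt", [-2.0, 0.0, 3.0]):
        print(" ".join(cmd))
```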

Novel pose synthesis

python novel_pose.py --ckpt_path checkpoints/male-3-casual/last.ckpt

The Mixamo motion capture SMPL parameters can be downloaded from here.

Testing

python test.py --ckpt_path checkpoints/male-3-casual_refine/last.ckpt --vis

Citation

If you find the code useful, please cite:

@misc{chen2021animatable,
    title={Animatable Neural Radiance Fields from Monocular RGB Videos},
    author={Jianchuan Chen and Ying Zhang and Di Kang and Xuefei Zhe and Linchao Bao and Xu Jia and Huchuan Lu},
    year={2021},
    eprint={2106.13629},
    archivePrefix={arXiv},
    primaryClass={cs.CV}
}

Acknowledgements

Parts of the code are based on kwea123's NeRF implementation: https://github.com/kwea123/nerf_pl. Some functions are borrowed from PixelNeRF: https://github.com/sxyu/pixel-nerf