AdaBoost is short for Adaptive Boosting. Boosting is one method of ensemble learning; other ensemble learning methods include bagging and stacking. The differences between bagging, boosting, and stacking are:

- Bagging: Equal-weight voting. Trains each model on a randomly drawn subset of the training set.

- Boosting: Trains each new model instance to emphasize the training instances that previous models misclassified. Tends to be more accurate than bagging, but is also more prone to overfitting.

- Stacking: Trains a learning algorithm to combine the predictions of several other learning algorithms.
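
To make the contrast between bagging and boosting concrete, here is a minimal sketch of how each one samples and votes. The function names and the `alphas` values are made up for illustration, labels are assumed to be +1/-1, and the "randomly drawn subset" is assumed to be a bootstrap sample (drawn with replacement). Stacking is not covered here.

```python
import random

def bootstrap_sample(n_samples, rng):
    """Bagging: draw n_samples training indices uniformly, with replacement."""
    return [rng.randrange(n_samples) for _ in range(n_samples)]

def equal_weight_vote(predictions):
    """Bagging: every model's +1/-1 prediction counts the same."""
    return 1 if sum(predictions) >= 0 else -1

def weighted_vote(predictions, alphas):
    """Boosting (AdaBoost-style): each model's vote is scaled by its weight."""
    score = sum(a * p for a, p in zip(alphas, predictions))
    return 1 if score >= 0 else -1

predictions = [1, -1, -1]   # three models disagree on one sample
alphas = [2.0, 0.5, 0.5]    # illustrative weights: the first model is trusted most

print(equal_weight_vote(predictions))      # -1: two of three models say -1
print(weighted_vote(predictions, alphas))  # 1: 2.0 - 0.5 - 0.5 = 1.0 >= 0
```

The same three predictions give opposite answers, because the weighted vote lets one strong model outvote two weak ones.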

Given an N*M matrix X and a length-N vector y, where N is the number of samples and M is the number of features per sample, AdaBoost trains T weak classifiers with the following steps:

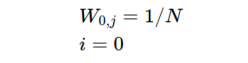

- Initialize.

-

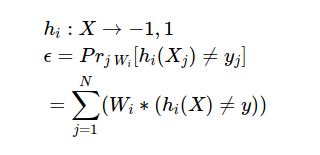

Train i-th weak classifier with training set {X, y} and distribution W_i.

-

Get the predict result \( h_i \) on the weak classifier with input X.

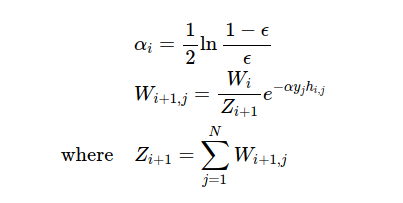

- Update.

Z is a normalization factor.

-

Repeat steps 2 ~ 4 until i reaches T.

-

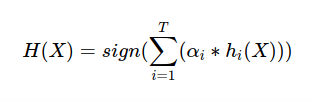

Output the final hypothesis:
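
The steps above can be sketched in plain Python. This is only a sketch under stated assumptions, not the implementation behind the results in this post: it follows the standard discrete AdaBoost formulas (classifier weight `alpha = 0.5 * ln((1 - err) / err)`, labels in {+1, -1}), which may differ in detail from the formulas in the images, and it uses one-feature threshold "stumps" as weak classifiers. All function names are made up for illustration.

```python
import math

def stump_predict(stump, row):
    """A stump predicts `pol` when row[j] <= thr, and -pol otherwise."""
    j, thr, pol = stump
    return pol if row[j] <= thr else -pol

def train_stump(X, y, w):
    """Exhaustively pick the stump with the lowest weighted error under w."""
    best, best_err = None, float("inf")
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            for pol in (1, -1):
                stump = (j, thr, pol)
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if stump_predict(stump, row) != yi)
                if err < best_err:
                    best, best_err = stump, err
    return best, best_err

def adaboost_fit(X, y, T):
    n = len(X)
    w = [1.0 / n] * n                                   # step 1: uniform weights
    ensemble = []
    for _ in range(T):                                  # step 5: repeat T times
        stump, err = train_stump(X, y, w)               # step 2: train on W_i
        err = min(max(err, 1e-10), 1 - 1e-10)           # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)         # weight of this classifier
        h = [stump_predict(stump, row) for row in X]    # step 3: predictions h_i
        # step 4: raise weights of misclassified samples, lower the others
        w = [wi * math.exp(-alpha * yi * hi) for wi, yi, hi in zip(w, y, h)]
        z = sum(w)                                      # Z: normalization factor
        w = [wi / z for wi in w]
        ensemble.append((alpha, stump))
    return ensemble

def adaboost_predict(ensemble, row):
    """Step 6: sign of the alpha-weighted sum of weak predictions."""
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Toy 1-D data that no single stump can separate (+ + - - + +).
X_toy = [[0], [1], [2], [3], [4], [5]]
y_toy = [1, 1, -1, -1, 1, 1]
ensemble = adaboost_fit(X_toy, y_toy, T=3)
print([adaboost_predict(ensemble, row) for row in X_toy])
```

On this toy set a single stump gets 4 of the 6 points right, while three rounds classify all six correctly, which shows how reweighting lets later stumps fix earlier mistakes.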

Using a demo from sklearn AdaBoost, I got the following result.

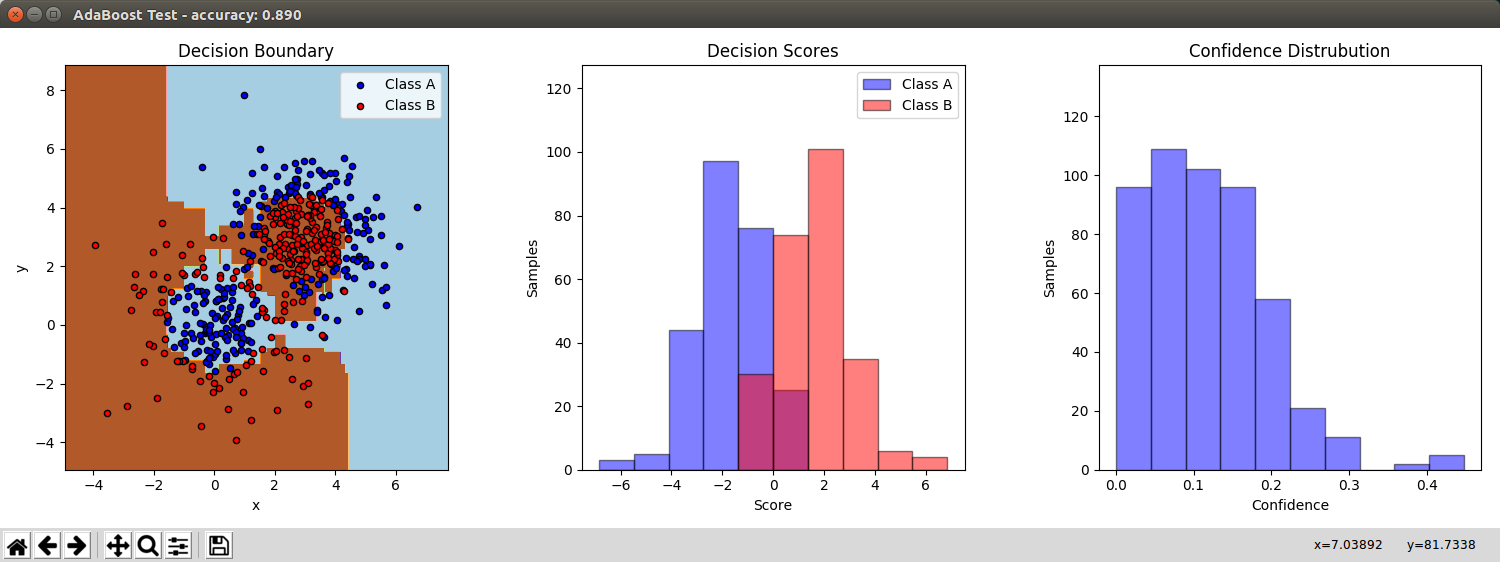

Weak classifiers: 200; Iteration steps in each weak classifier: 200:

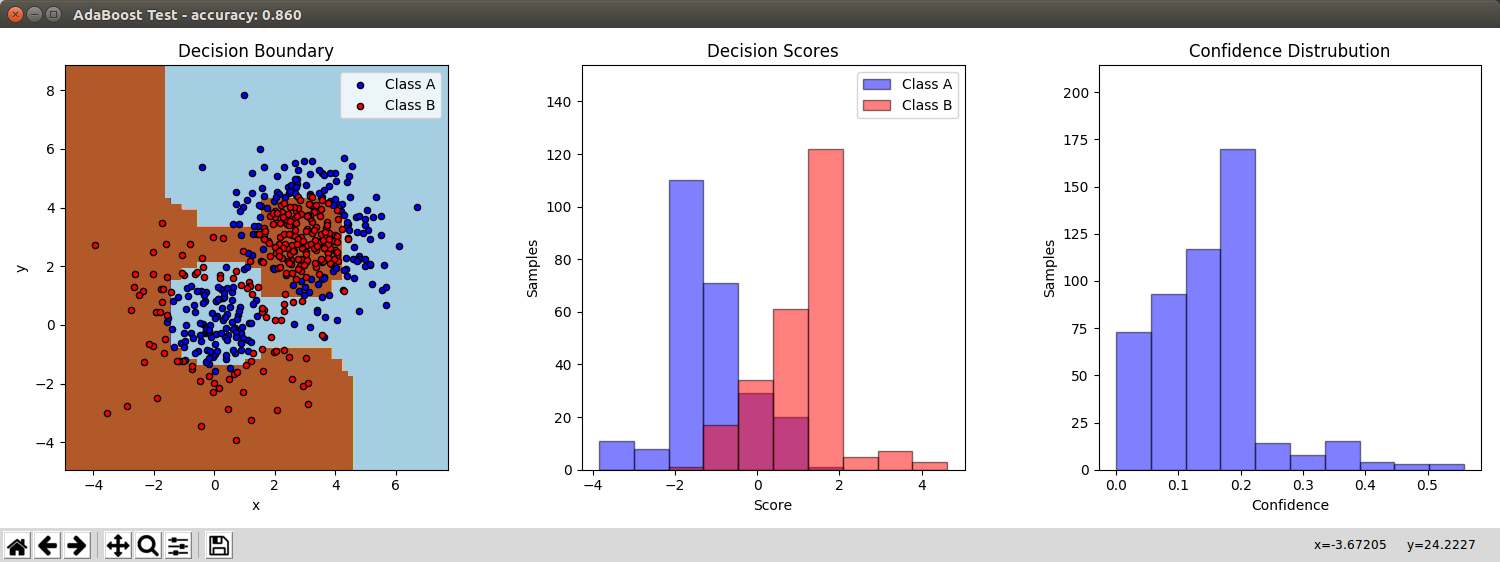

Weak classifiers: 60; Iteration steps in each weak classifier: 60:

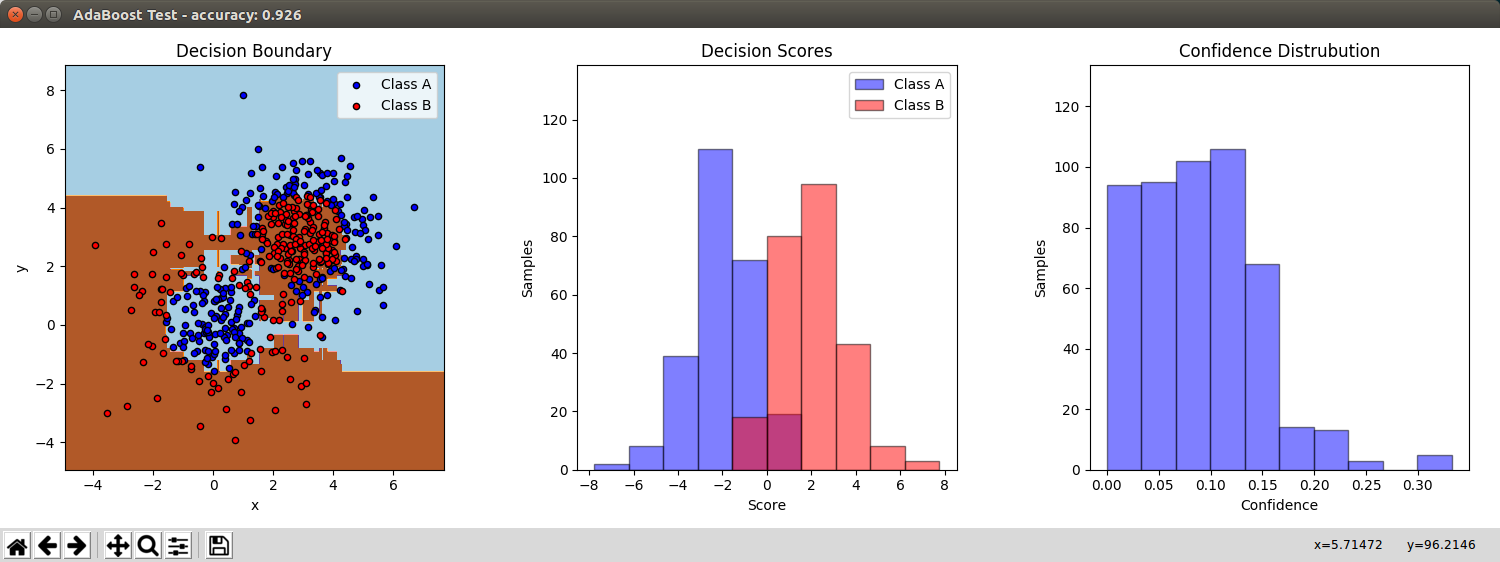

Weak classifiers: 400; Iteration steps in each weak classifier: 400:

We can see the result varies as the number of weak classifiers and the iteration steps change.

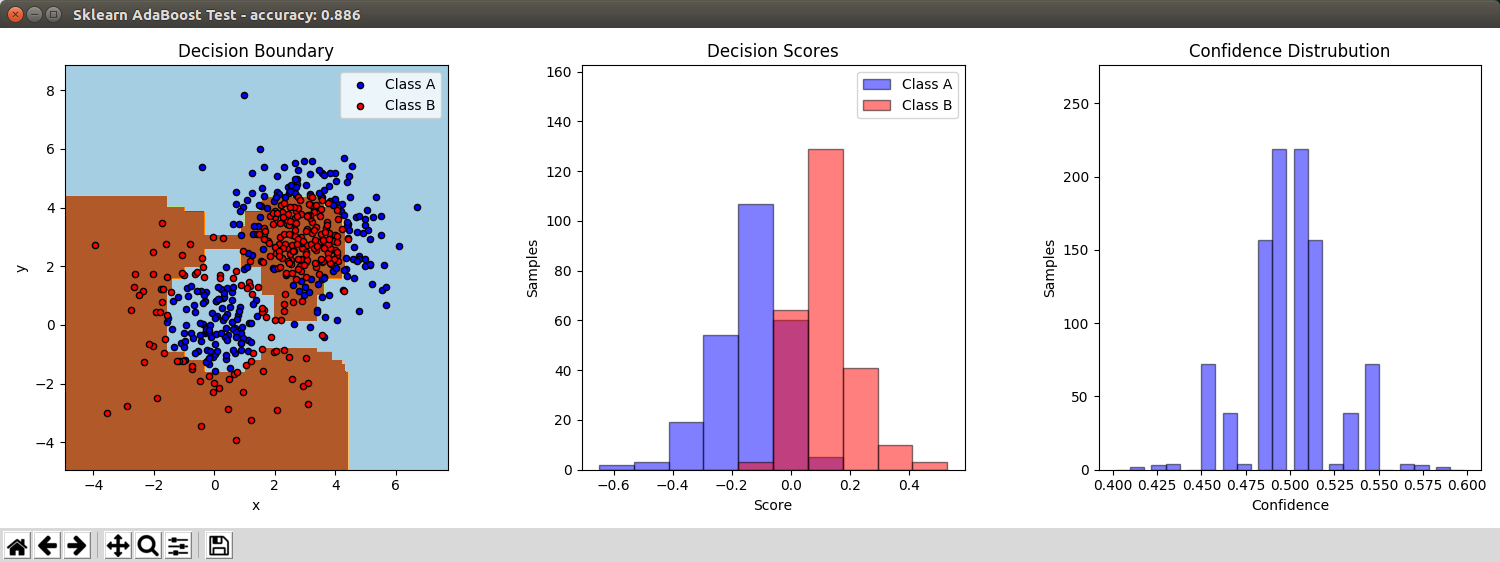

Compares with the AdaBoostClassifier from sklearn with 200 estimators (weak classifiers):

More comparisons of my AdaBoostClassifier with different parameters:

| estimators | iteration steps | time | accuracy |
|---|---|---|---|
| 30 | 30 | 0.0621 | 0.8540 |
| 30 | 60 | 0.1095 | 0.8760 |
| 30 | 200 | 0.3725 | 0.8620 |
| 30 | 400 | 0.7168 | 0.8720 |
| 60 | 30 | 0.1291 | 0.8620 |
| 60 | 60 | 0.2328 | 0.8600 |
| 60 | 200 | 0.7886 | 0.8780 |
| 60 | 400 | 1.4679 | 0.8840 |
| 200 | 30 | 0.3942 | 0.8600 |
| 200 | 60 | 0.7560 | 0.8700 |
| 200 | 200 | 2.4925 | 0.8900 |
| 200 | 400 | 4.7178 | 0.9020 |
| 400 | 30 | 0.8758 | 0.8640 |
| 400 | 60 | 1.6578 | 0.8720 |
| 400 | 200 | 5.0294 | 0.9040 |
| 400 | 400 | 10.0294 | 0.9260 |
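
A parameter sweep like the one in this table could be collected with a small harness along the following lines. This is a hypothetical sketch, not the script that produced the numbers above: `benchmark` and `MajorityClassifier` are made-up names, the stand-in model ignores both parameters, and the harness assumes "time" means training time only. Any object with `fit` and `predict` methods could be plugged in through `make_model`.

```python
import time
from itertools import product

class MajorityClassifier:
    """Hypothetical stand-in model: always predicts the most common training label."""
    def __init__(self, n_estimators, steps):
        self.n_estimators, self.steps = n_estimators, steps  # unused by this stand-in

    def fit(self, X, y):
        self.label_ = max(set(y), key=list(y).count)
        return self

    def predict(self, X):
        return [self.label_ for _ in X]

def benchmark(make_model, estimators, steps, X_train, y_train, X_test, y_test):
    """Return one (estimators, steps, fit_seconds, accuracy) row per combination."""
    rows = []
    for n_est, n_steps in product(estimators, steps):
        model = make_model(n_est, n_steps)
        start = time.perf_counter()
        model.fit(X_train, y_train)              # only training is timed (assumption)
        elapsed = time.perf_counter() - start
        preds = model.predict(X_test)
        accuracy = sum(p == t for p, t in zip(preds, y_test)) / len(y_test)
        rows.append((n_est, n_steps, elapsed, accuracy))
    return rows

rows = benchmark(MajorityClassifier, [30, 60], [30, 60],
                 [[0], [1], [2]], [1, 1, -1], [[3], [4]], [1, -1])
for n_est, n_steps, elapsed, accuracy in rows:
    print(f"| {n_est} | {n_steps} | {elapsed:.4f} | {accuracy:.4f} |")
```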

For further information, refer to my blog post AdaBoost - Donny (in Chinese & English).