News

We are excited to announce that our new work has been accepted by ACM MM 2022, a top multimedia conference:

Learning Hierarchical Dynamics with Spatial Adjacency for Image Enhancement

IJCAI: Surprisingly, our two-stage network can also brighten low-light images.

SLAdehazing

Self-supervised Learning and Adaptation for Single Image Dehazing (IJCAI-ECAI 2022 long presentation)

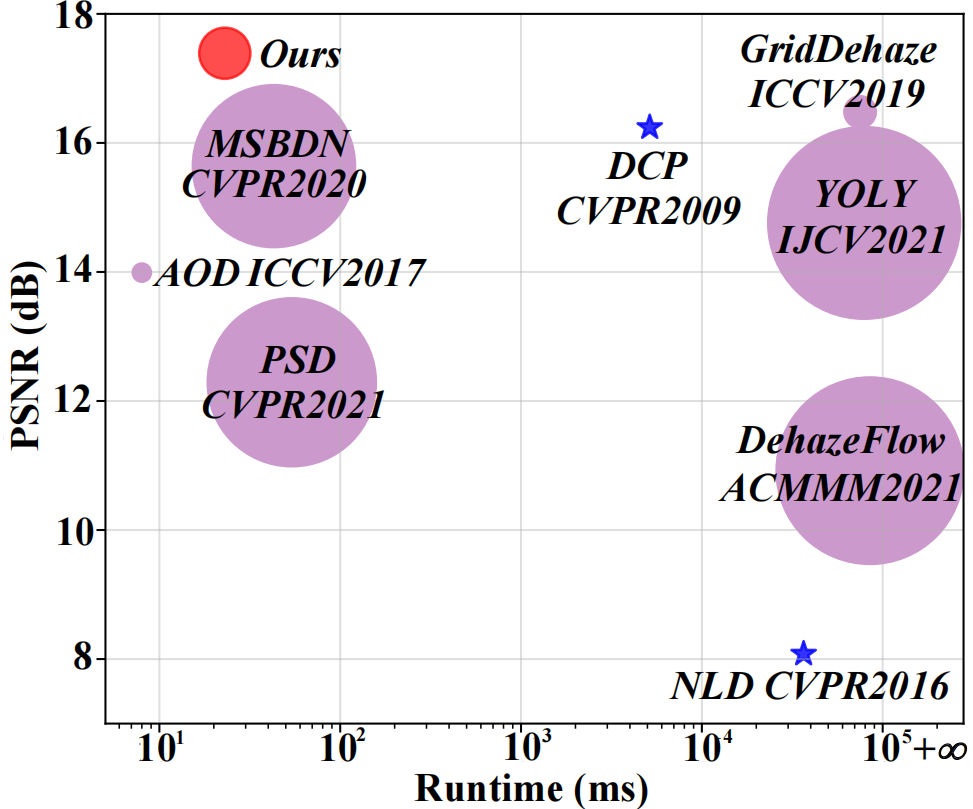

Existing deep image dehazing methods usually depend on supervised learning with a large number of hazy-clean image pairs, which are expensive or difficult to collect. Moreover, the dehazing performance of the learned model may deteriorate significantly when the training hazy-clean image pairs are insufficient and differ from real hazy images in applications. In this paper, we show that exploiting a large-scale training set and adapting to real hazy images are two critical issues in learning effective deep dehazing models. Under the depth guidance estimated by a well-trained depth estimation network, we leverage the conventional atmospheric scattering model to generate massive hazy-clean image pairs for the self-supervised pretraining of the dehazing network. Furthermore, self-supervised adaptation is presented to adapt the pretrained network to real hazy images. A learning-without-forgetting strategy is also deployed in self-supervised adaptation by combining self-supervision and model adaptation via contrastive learning. Experiments show that our proposed method performs favorably against state-of-the-art methods and is quite efficient, i.e., handling a 4K image in 23 ms.
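To make the data-generation idea in the abstract concrete, here is a minimal sketch of the conventional atmospheric scattering model, I = J * t + A * (1 - t) with t = exp(-beta * d). It is not the code from this repository: the function name `synthesize_haze`, the default `beta` and `airlight` values, and the toy inputs are assumptions for illustration, and the depth map is taken as given rather than produced by a depth estimation network.

```python
import numpy as np


def synthesize_haze(clean, depth, beta=1.0, airlight=0.8):
    """Render a hazy image from a clean image and a depth map.

    clean:    float array in [0, 1], shape (H, W, 3)
    depth:    float array, shape (H, W), larger values are farther away
    beta:     scattering coefficient (hypothetical default)
    airlight: global atmospheric light A (hypothetical default)
    """
    clean = np.asarray(clean, dtype=np.float32)
    depth = np.asarray(depth, dtype=np.float32)
    # Transmission decays exponentially with scene depth: t = exp(-beta * d).
    transmission = np.exp(-beta * depth)[..., None]
    # Scattering model: I = J * t + A * (1 - t).
    hazy = clean * transmission + airlight * (1.0 - transmission)
    return np.clip(hazy, 0.0, 1.0), transmission[..., 0]


# Toy example: a random "clean" image and a depth ramp.
rng = np.random.default_rng(0)
clean = rng.random((4, 4, 3), dtype=np.float32)
depth = np.linspace(0.0, 2.0, 16, dtype=np.float32).reshape(4, 4)
hazy, t = synthesize_haze(clean, depth, beta=1.2, airlight=0.9)
print(hazy.shape, float(t.min()), float(t.max()))
```

Sampling different `beta` and `airlight` values per image is one plausible way to obtain many hazy versions of the same clean image; the ranges actually used for pretraining are not stated here.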

Some Results

Environment Settings

pytorch 1.5

We recommend using pytorch>1.0 and pytorch<1.6 to avoid unnecessary trouble. Our method does not rely on any special design of the network structure, so the remaining general dependencies are not restricted.
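As a convenience, the recommended version range can be checked before training. The sketch below is not part of the repository; the helper names are hypothetical, and it reads the range as 1.0 < version < 1.6 at major.minor granularity (so 1.1 through 1.5), which is one interpretation of the recommendation above.

```python
def parse_major_minor(version):
    """Return (major, minor) from strings such as '1.5.0' or '1.5.1+cu101'."""
    core = version.split("+")[0]          # drop a local build suffix, if any
    major, minor = core.split(".")[:2]    # keep only major and minor
    return int(major), int(minor)


def is_recommended_pytorch(version):
    """True if the version falls strictly between 1.0 and 1.6 (major.minor)."""
    return (1, 0) < parse_major_minor(version) < (1, 6)


# Example version strings; in a real script, pass torch.__version__ instead.
for v in ("1.0.0", "1.5.0+cu101", "1.6.0"):
    print(v, is_recommended_pytorch(v))
```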

Train

First, prepare the clear (haze-free) images you want to train on and put them in ./train_images/collect_images

python train_stage1.py --net='Stage1' --crop --crop_size=256 --blocks=1 --gps=3 --bs=8 --lr=0.0002 --trainset='its_train' --testset='its_test' --steps=50000 --eval_step=2500

Get RTTS and the pre-processed data from https://github.com/zychen-ustc/PSD-Principled-Synthetic-to-Real-Dehazing-Guided-by-Physical-Priors and put them in ./train_images/RTTS and ./train_images/CLAHE-RTTS

python train_stage2.py --net='Stage2' --crop --crop_size=256 --blocks=1 --gps=3 --bs=8 --lr=0.0002 --trainset='its_train' --testset='its_test' --steps=10000 --eval_step=1000

Note: you can modify the code to use an end-to-end dehazing network instead of the three sub-networks (Anet, Tnet, and Rnet), for example BS_e2enet in bsnet.py.
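Before launching either training stage, it may help to confirm that the three folders named above actually contain images. The following is a small sketch, not a script shipped with this repository: the helper names and the list of image suffixes are assumptions, and it only looks at files directly inside each folder, so adjust it if your copies of RTTS or CLAHE-RTTS use subfolders.

```python
from pathlib import Path

# Assumed set of image extensions; extend it if your data uses others.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}


def count_images(folder):
    """Count image files directly inside folder (0 if the folder is missing)."""
    folder = Path(folder)
    if not folder.is_dir():
        return 0
    return sum(1 for p in folder.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES)


def check_training_folders(root="./train_images"):
    """Map each expected training folder name to its image count."""
    names = ("collect_images", "RTTS", "CLAHE-RTTS")
    return {name: count_images(Path(root) / name) for name in names}


if __name__ == "__main__":
    for name, n in check_training_folders().items():
        status = "ok" if n else "missing or empty"
        print(f"{name}: {n} images ({status})")
```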

Only-Test

If you only need to test, you can run the following command (this requires loading the parameters we provide):

python test_meta.py

It provides test code for the two stages. You can test on any labeled dataset, on unlabeled data, or on real hazy images from the Internet. Our method does not need any labeled data during training, yet it still produces competitive results on these datasets.

Note that the first-stage results are qualitatively better, while the second-stage results are quantitatively better on the real dataset.

Checkpoint-Files

You can get our parameter files from the following link: https://drive.google.com/drive/folders/1xq1tg7wvNJeZTw8w4RnqEsJzQRcmHLCI?usp=sharing

Data-Get

We will provide a link to the data we used for training; please wait while we sort out the training code.

The data used for testing can be obtained through the following links:

SOTS: http://t.cn/RQ34zUi

4KID: https://github.com/zzr-idam/4KDehazing

URHI: http://t.cn/RHVjLXp

Unfortunately, the SOTS and URHI dataset links may not be accessible. If you are in China, you can find a Baidu network disk link to the datasets on the following page:

https://sites.google.com/view/reside-dehaze-datasets

In the meantime, we'll make a link to help you get these two datasets. Please wait for a while.

Citation

The paper can be accessed from https://www.ijcai.org/proceedings/2022/0159.pdf .

If you find our work useful in your research, please cite:

@InProceedings{Liang_2022_IJCAI,
  author = {Liang, Yudong and Wang, Bin and Zuo, Wangmeng and Liu, Jiaying and Ren, Wenqi},
  title = {Self-supervised Learning and Adaptation for Single Image Dehazing},
  booktitle = {Proceedings of the 31st International Joint Conference on Artificial Intelligence (IJCAI-22)},
  pages = {1137-1143},
  year = {2022}
}

Contact Us

If you have any questions, please contact us:

liangyudong006@163.com

202022407046@email.sxu.edu.cn