MIMDet 🎭

Unleashing Vanilla Vision Transformer with Masked Image Modeling for Object Detection

Yuxin Fang1 *, Shusheng Yang1 *, Shijie Wang1 *, Yixiao Ge2, Ying Shan2, Xinggang Wang1

1 School of EIC, HUST, 2 ARC Lab, Tencent PCG.

(*) equal contribution.

ArXiv Preprint (arXiv 2204.02964)

Introduction

This repo provides code and pretrained models for MIMDet (Masked Image Modeling for Detection).

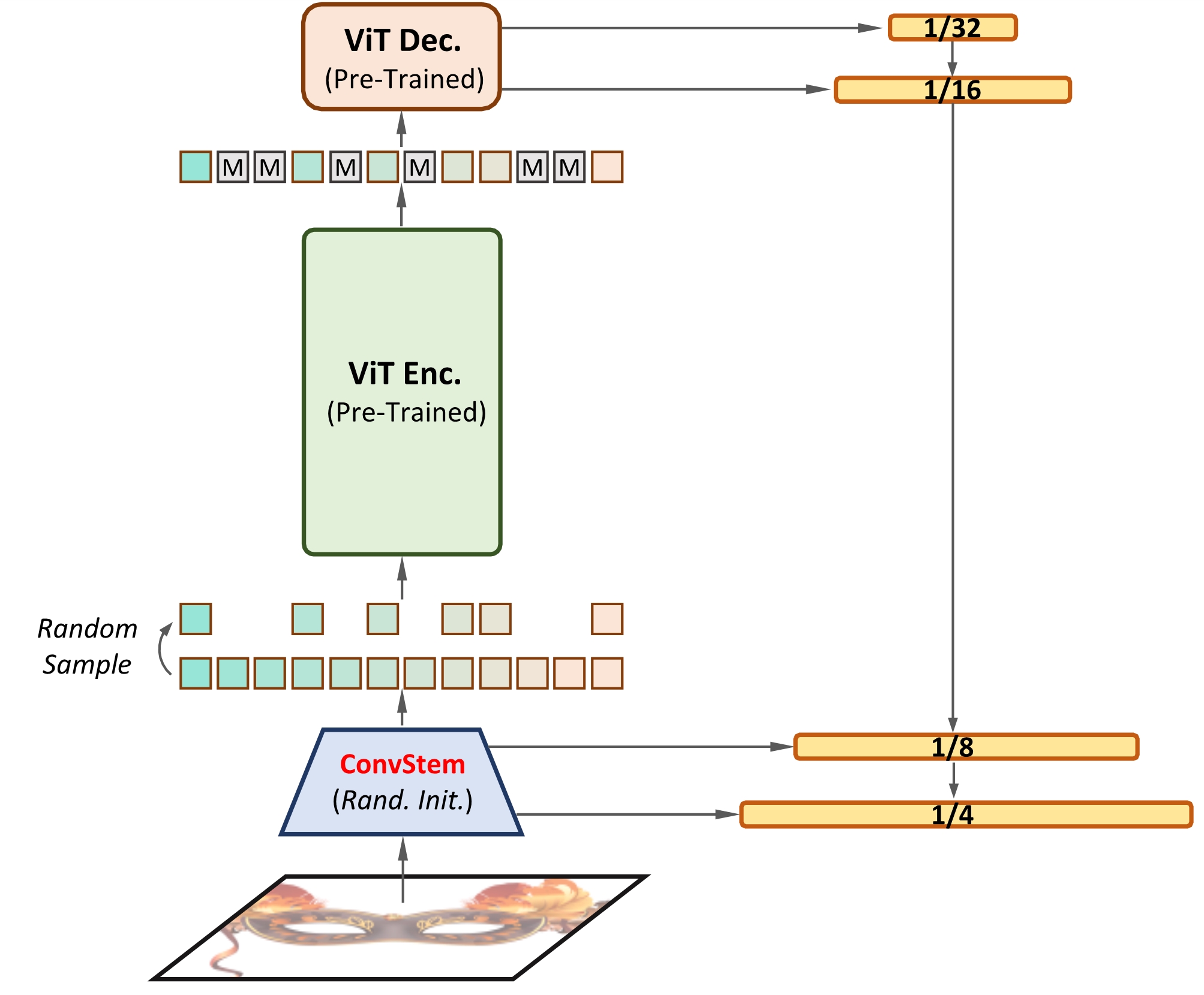

- MIMDet is a simple framework that enables a MIM pre-trained vanilla ViT to perform high-performance object-level understanding, e.g., object detection and instance segmentation.

- In MIMDet, a MIM pre-trained vanilla ViT encoder can work surprisingly well in the challenging object-level recognition scenario even with randomly sampled partial observations, e.g., only 25%~50% of the input embeddings.

- To construct multi-scale representations for object detection, a randomly initialized compact convolutional stem supplants the pre-trained large-kernel patchify stem, and its intermediate features naturally serve as the higher-resolution inputs of a feature pyramid without upsampling. The pre-trained ViT is regarded only as the third stage of the detector's backbone rather than the whole feature extractor, resulting in a ConvNet-ViT hybrid architecture.

- MIMDet w/ ViT-Base & Mask R-CNN FPN obtains 51.5 box AP and 46.0 mask AP on COCO.
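
The random sampling of input embeddings mentioned above can be illustrated with a minimal sketch. It uses only the Python standard library and works on patch indices rather than tensors. The function name `sample_patch_indices`, the 16-pixel patch size, the example image size, and the fixed seed are assumptions made for illustration; this is not the repo's implementation.

```python
import random


def sample_patch_indices(height, width, sample_ratio, patch_size=16, seed=None):
    """Pick a random subset of patch positions to feed to the encoder.

    Illustrative only: the patch size and this function are assumptions,
    not code from the MIMDet repo.
    """
    if not 0.0 < sample_ratio <= 1.0:
        raise ValueError("sample_ratio must be in (0, 1]")
    # Number of non-overlapping patches on the image grid.
    num_patches = (height // patch_size) * (width // patch_size)
    # Keep at least one patch so the encoder never sees an empty sequence.
    num_keep = max(1, int(num_patches * sample_ratio))
    rng = random.Random(seed)
    # Sample without replacement, then sort so kept tokens stay in raster order.
    kept = sorted(rng.sample(range(num_patches), num_keep))
    return kept, num_patches


kept, total = sample_patch_indices(800, 1328, sample_ratio=0.25, seed=0)
print(f"encoder sees {len(kept)} of {total} patch embeddings")
```

With `sample_ratio=1.0` every index is kept, which corresponds to feeding the full set of input embeddings to the encoder.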

Models and Main Results

Mask R-CNN

| Model | Sample Ratio | Schedule | Aug | box mAP | mask mAP | #params | config | model/log |
|---|---|---|---|---|---|---|---|---|
| MIMDet-ViT-B | 0.25 | 3x | [480-800, 1333] w/crop | 49.9 | 44.7 | 127.56M | config | github/log |
| MIMDet-ViT-B | 0.5 | 3x | [480-800, 1333] w/crop | 51.5 | 46.0 | 127.56M | config | github/log |
| MIMDet-ViT-L | 0.5 | 3x | [480-800, 1333] w/crop | 53.3 | 47.5 | 345.27M | config | github/log |
| Benchmarking-ViT-B | - | 25ep | [1024, 1024] LSJ(0.1-2) | 48.0 | 43.0 | 118.67M | config | github/log |
| Benchmarking-ViT-B | - | 50ep | [1024, 1024] LSJ(0.1-2) | 50.2 | 44.9 | 118.67M | config | github/log |
| Benchmarking-ViT-B | - | 100ep | [1024, 1024] LSJ(0.1-2) | 50.4 | 44.9 | 118.67M | config | github/log |

Notes:

- Benchmarking-ViT-B is an unofficial implementation of Benchmarking Detection Transfer Learning with Vision Transformers.

- The configuration & results of MIMDet-ViT-L are still under-tuned.

Installation

Prerequisites

- Linux

- Python 3.7+

- CUDA 10.2+

- GCC 5+

Prepare

- Clone the repository:

git clone https://github.com/hustvl/MIMDet.git

cd MIMDet

- Create a conda virtual environment and activate it:

conda create -n mimdet python=3.9

conda activate mimdet

- Install torch==1.9.0 and torchvision==0.10.0.

- Install Detectron2==0.6, follow d2 doc.

- Install timm==0.4.12, follow timm doc.

- Install einops, follow einops repo.

- Prepare the COCO dataset, follow d2 doc.
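
After the install steps, it can help to confirm that the pinned versions are the ones actually present in the environment. The following is a minimal sketch, assuming Python 3.8+ (for `importlib.metadata`) and assuming the installed distribution names match the names used in the list above; the helper `check_environment` is hypothetical and not part of the repo.

```python
from importlib import metadata

# Versions pinned in the installation steps; einops has no pinned version.
# Assumption: distribution names match the package names used in this README.
EXPECTED = {
    "torch": "1.9.0",
    "torchvision": "0.10.0",
    "detectron2": "0.6",
    "timm": "0.4.12",
    "einops": None,
}


def check_environment(expected=EXPECTED):
    """Return {package: (installed_version_or_None, matches_expectation)}."""
    report = {}
    for name, wanted in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = (None, False)
            continue
        # Local build tags such as "1.9.0+cu111" still count as a match.
        ok = wanted is None or installed.split("+")[0] == wanted
        report[name] = (installed, ok)
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_environment().items():
        status = "ok" if ok else "check"
        print(f"{name:12s} {installed or 'not installed':16s} {status}")
```

A package reported as `check` is either missing or installed at a different version than the one listed above.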

Inference

# inference

python lazyconfig_train_net.py --config-file <CONFIG_FILE> --num-gpus <GPU_NUM> --eval-only train.init_checkpoint=<MODEL_PATH>

# inference with 100% sample ratio (see Table 2 in our paper for a detailed analysis)

python lazyconfig_train_net.py --config-file <CONFIG_FILE> --num-gpus <GPU_NUM> --eval-only train.init_checkpoint=<MODEL_PATH> model.backbone.bottom_up.sample_ratio=1.0
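
When evaluating several checkpoints or sample ratios, the commands above can be assembled programmatically. The sketch below is a hypothetical helper (`build_command` is not part of the repo) that uses only the flags and config keys shown in this README; the GPU count of 8 and the placeholder paths are assumptions to be replaced with real values. It assumes Python 3.8+ for `shlex.join`.

```python
import shlex


def build_command(config_file, num_gpus, overrides=None, eval_only=False):
    """Assemble the argument list for lazyconfig_train_net.py.

    Only flags and config keys that appear in this README are used.
    """
    cmd = [
        "python", "lazyconfig_train_net.py",
        "--config-file", config_file,
        "--num-gpus", str(num_gpus),
    ]
    if eval_only:
        cmd.append("--eval-only")
    # Trailing key=value pairs override entries of the config.
    for key, value in (overrides or {}).items():
        cmd.append(f"{key}={value}")
    return cmd


# Placeholder paths: substitute a real config file and checkpoint.
cmd = build_command(
    "<CONFIG_FILE>",
    8,
    overrides={
        "train.init_checkpoint": "<MODEL_PATH>",
        "model.backbone.bottom_up.sample_ratio": 1.0,
    },
    eval_only=True,
)
print(shlex.join(cmd))
```

The returned list can be passed directly to `subprocess.run(cmd, check=True)`, which avoids shell quoting issues with paths that contain spaces.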

Training

Download the fully pre-trained MAE ViT-B and ViT-L models, following MAE repo-issues-8.

# single-machine training

python lazyconfig_train_net.py --config-file <CONFIG_FILE> --num-gpus <GPU_NUM> mae_checkpoint.path=<MAE_MODEL_PATH>

# multi-machine training

python lazyconfig_train_net.py --config-file <CONFIG_FILE> --num-gpus <GPU_NUM> --num-machines <MACHINE_NUM> --master_addr <MASTER_ADDR> --master_port <MASTER_PORT> mae_checkpoint.path=<MAE_MODEL_PATH>

Acknowledgement

This project is based on MAE, Detectron2 and timm. Thanks for their wonderful work.

License

MIMDet is released under the MIT License.

Citation

If you find our paper and code useful in your research, please consider giving the repo a star and citing the paper:

@article{MIMDet,

title={Unleashing Vanilla Vision Transformer with Masked Image Modeling for Object Detection},

author={Fang, Yuxin and Yang, Shusheng and Wang, Shijie and Ge, Yixiao and Shan, Ying and Wang, Xinggang},

journal={arXiv preprint arXiv:2204.02964},

year={2022}

}