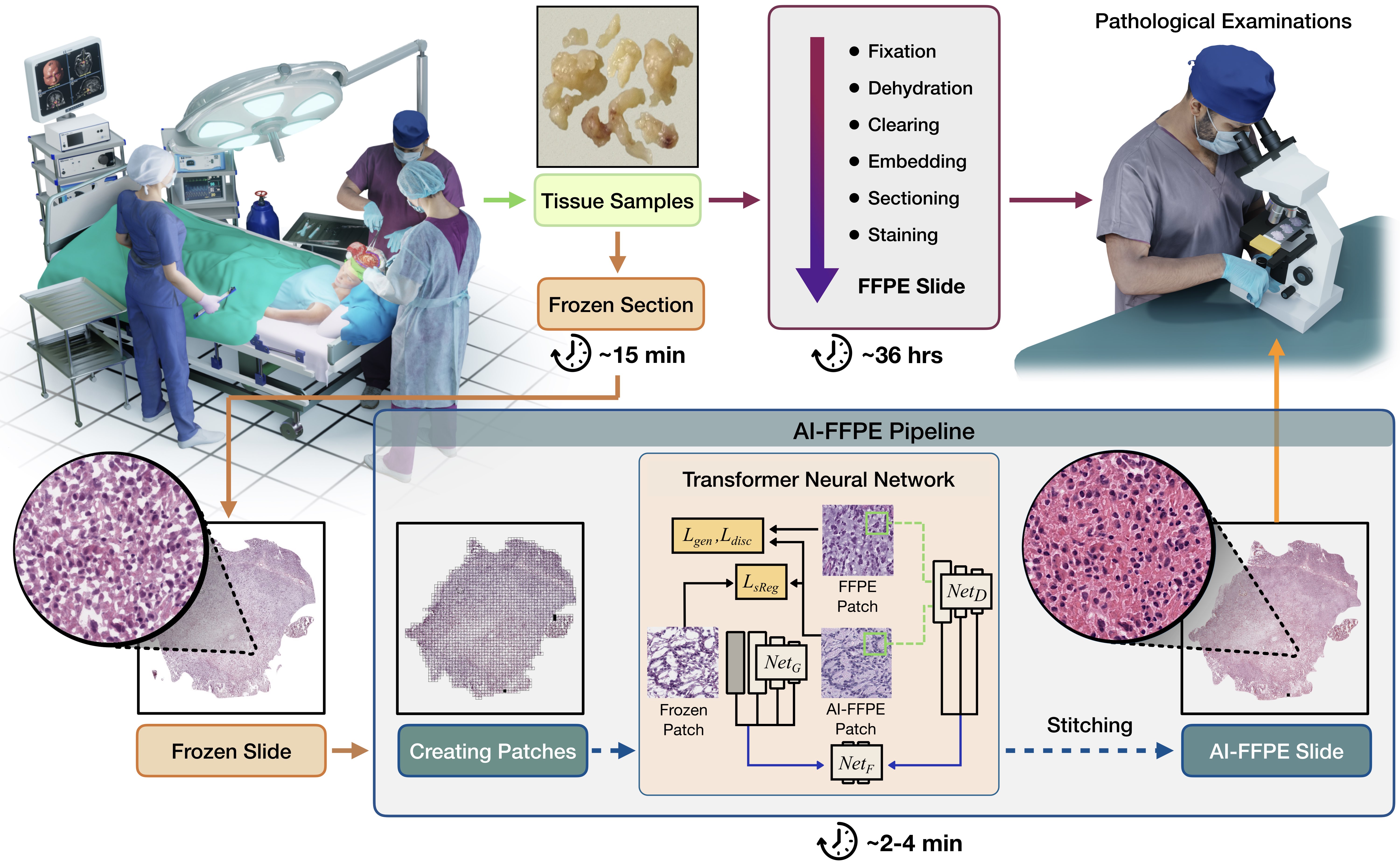

A deep-learning model for transforming the style of tissue images from cryosectioned to formalin-fixed and paraffin-embedded

Paper | Brain GBM Dataset | Brain LGG Dataset | Lung LUAD Dataset | Lung LUSC Dataset | Pretrained Models | WebSite

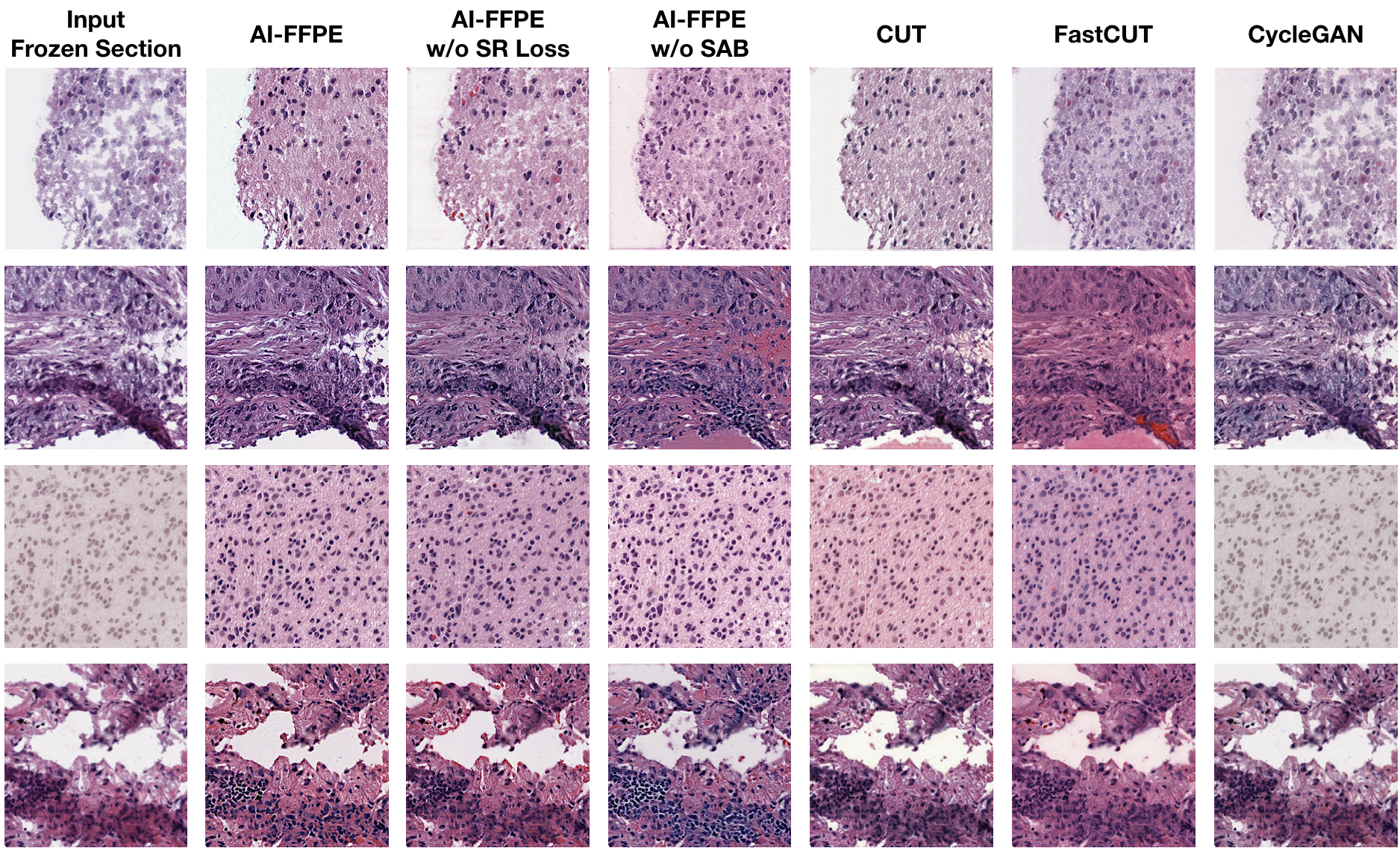

In this work, we propose AI-FFPE, a pipeline optimized for histopathology images that drives the network's attention specifically to the nuclei and to deficiencies related to tissue preparation protocols. Compared to CycleGAN, our model trains faster and is less memory-intensive.

Example Results

Frozen to FFPE Translation in Brain Specimens

Frozen to FFPE Translation in Lung Specimens

Prerequisites

- Linux or macOS

- Python 3

- CPU or NVIDIA GPU + CUDA cuDNN

Getting started

- Clone this repo:

git clone https://github.com/DeepMIALab/AI-FFPE

cd AI-FFPE

- Install PyTorch 1.1 and other dependencies (e.g., torchvision, visdom, dominate, gputil).

- For pip users, please type the command:

pip install -r requirements.txt

- For Conda users, you can create a new Conda environment using:

conda env create -f environment.yml
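A quick way to confirm that the environment is ready before training is to check that the dependencies listed above can be imported. The sketch below is not part of the repository; the function name is hypothetical, and the import names (notably GPUtil for the gputil package) are assumptions based on the package names above.

```python
import importlib.util

# Assumed import names for the dependencies mentioned above;
# the pip package "gputil" is imported as "GPUtil".
REQUIRED_MODULES = ["torch", "torchvision", "visdom", "dominate", "GPUtil"]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        import torch  # safe to import now that it is known to be installed

        # Per the prerequisites, a GPU is optional; this only reports what is available.
        print("PyTorch", torch.__version__, "| CUDA available:", torch.cuda.is_available())
```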

Training and Test

- The slide identity numbers used in the training, validation and test sets are given as .txt files in docs/ for both the Brain and Lung datasets. To replicate the results, download the GBM and LGG projects (Brain) and the LUAD and LUSC projects (Lung) from the TCGA Data Portal and create a subset using these .txt files.

- To extract patches from the WSIs and save them as PNG files, follow the instructions in the AI-FFPE/Data_preprocess section.
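The two preprocessing steps above (selecting the split's slides, then tiling them into patches) can be sketched as follows. This is a minimal illustration, not the code in AI-FFPE/Data_preprocess: it assumes each docs/ .txt file lists one slide identifier per line, that downloaded slides are .svs files whose names begin with that identifier, and a hypothetical 512-pixel, non-overlapping patch grid. All function names are hypothetical, and the real pipeline may add steps such as tissue detection or a specific magnification level.

```python
from pathlib import Path


def load_slide_ids(txt_path):
    """Read one slide identifier per line from a split file, skipping blank lines."""
    with open(txt_path, encoding="utf-8") as fh:
        return [line.strip() for line in fh if line.strip()]


def select_slides(wsi_dir, slide_ids, suffix=".svs"):
    """Return the slide files in wsi_dir whose names start with one of the given IDs."""
    prefixes = tuple(slide_ids)  # str.startswith accepts a tuple of prefixes
    return sorted(p for p in Path(wsi_dir).glob(f"*{suffix}") if p.name.startswith(prefixes))


def patch_coordinates(width, height, patch_size=512, stride=None):
    """Top-left (x, y) corners of square patches that lie fully inside the image."""
    stride = stride or patch_size  # default: non-overlapping tiles
    return [
        (x, y)
        for y in range(0, height - patch_size + 1, stride)
        for x in range(0, width - patch_size + 1, stride)
    ]


# Hypothetical usage (file and folder names are placeholders):
# ids = load_slide_ids("docs/<split file>.txt")
# for slide_path in select_slides("<TCGA download folder>", ids):
#     # One possible reader is openslide-python:
#     slide = openslide.OpenSlide(str(slide_path))
#     w, h = slide.dimensions
#     for x, y in patch_coordinates(w, h):
#         tile = slide.read_region((x, y), 0, (512, 512)).convert("RGB")
#         tile.save(f"{slide_path.stem}_{x}_{y}.png")
```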

The data used for training are expected to be organized as follows:

Data_Path # DIR_TO_TRAIN_DATASET
├── trainA
|   ├── 1.png
|   ├── ...
|   └── n.png
├── trainB
|   ├── 1.png
|   ├── ...
|   └── m.png
├── valA
|   ├── 1.png
|   ├── ...
|   └── j.png
└── valB
    ├── 1.png
    ├── ...
    └── k.png
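Before launching training, it can help to verify that the dataset folder matches this layout. A minimal sketch, assuming only the four subfolder names shown above; the function name is hypothetical, and which domain goes into A versus B (presumably frozen patches in A and FFPE patches in B) is not stated in this README.

```python
from pathlib import Path

EXPECTED_SUBDIRS = ("trainA", "trainB", "valA", "valB")


def check_dataset_layout(data_path, subdirs=EXPECTED_SUBDIRS):
    """Count the .png files in each expected subfolder; raise if a subfolder is missing."""
    root = Path(data_path)
    counts = {}
    for sub in subdirs:
        folder = root / sub
        if not folder.is_dir():
            raise FileNotFoundError(f"missing folder: {folder}")
        counts[sub] = sum(1 for _ in folder.glob("*.png"))
    return counts


# Hypothetical usage (the dataset name is a placeholder):
# print(check_dataset_layout("./datasets/Frozen/my_dataset"))
```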

- To view training results and loss plots, run

python -m visdom.server

and click the URL http://localhost:8097.

- Train the AI-FFPE model:

python train.py --dataroot ./datasets/Frozen/${dataroot_train_dir_name} --name ${model_results_dir_name} --CUT_mode CUT --batch_size 1

- Test the AI-FFPE model:

python test.py --dataroot ./datasets/Frozen/${dataroot_test_dir_name} --name ${result_dir_name} --CUT_mode CUT --phase test --epoch ${epoch_number} --num_test ${number_of_test_images}

The test results will be saved to an HTML file at ./results/${result_dir_name}/test_${epoch_number}/index.html
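The steps above can also be scripted. The sketch below uses hypothetical helper names: it first checks whether anything is listening on the default visdom port 8097, then builds argument lists that mirror the train and test commands exactly as written in this README, without adding flags of its own. Passing a list to subprocess.run avoids shell-quoting problems with paths.

```python
import shlex
import socket


def port_is_open(host="localhost", port=8097, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds (e.g. a running visdom server)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def build_train_cmd(dataroot, name, batch_size=1):
    """Argument list mirroring the README's train.py command."""
    return ["python", "train.py", "--dataroot", dataroot, "--name", name,
            "--CUT_mode", "CUT", "--batch_size", str(batch_size)]


def build_test_cmd(dataroot, name, epoch, num_test):
    """Argument list mirroring the README's test.py command."""
    return ["python", "test.py", "--dataroot", dataroot, "--name", name,
            "--CUT_mode", "CUT", "--phase", "test",
            "--epoch", str(epoch), "--num_test", str(num_test)]


if __name__ == "__main__":
    if not port_is_open():
        print("visdom does not seem to be running; start it with: python -m visdom.server")
    # Hypothetical dataset and experiment names.
    cmd = build_train_cmd("./datasets/Frozen/brain_train", "brain_ai_ffpe")
    print(shlex.join(cmd))  # shlex.join requires Python 3.8+
    # To actually launch training from the repository root:
    # import subprocess; subprocess.run(cmd, check=True)
```

Since the code is based on CUT, test.py presumably loads the checkpoint saved under the experiment --name, so the test --name would normally match the one used for training; check this against the options in the repository.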

AI-FFPE, AI-FFPE without Spatial Attention Block, AI-FFPE without self-regularization loss, CUT, FastCUT, and CycleGAN

Apply a pre-trained AI-FFPE model and evaluate

For reproducibility, you can download the pretrained models for each algorithm here.

Reference

If you find our work useful in your research, or if you use parts of this code, please consider citing our paper:

@article{article,
  author = {Ozyoruk, Kutsev and Can, Sermet and Darbaz, Berkan and Başak, Kayhan and Demir, Derya and Gokceler, Irem and Serin, Gurdeniz and Hacısalihoglu, Payam and Kurtuluş, Emirhan and Lu, Ming and Chen, Tiffany and Williamson, Drew and Yılmaz, Funda and Mahmood, Faisal and Turan, Mehmet},
  year = {2022},
  month = {12},
  pages = {},
  title = {A deep-learning model for transforming the style of tissue images from cryosectioned to formalin-fixed and paraffin-embedded},
  volume = {6},
  journal = {Nature Biomedical Engineering},
  doi = {10.1038/s41551-022-00952-9}
}

Acknowledgments

Our code is based on CUT. We also thank pytorch-fid for FID computation, and stylegan2-pytorch for the PyTorch implementation of StyleGAN2 used in our single-image translation setting.