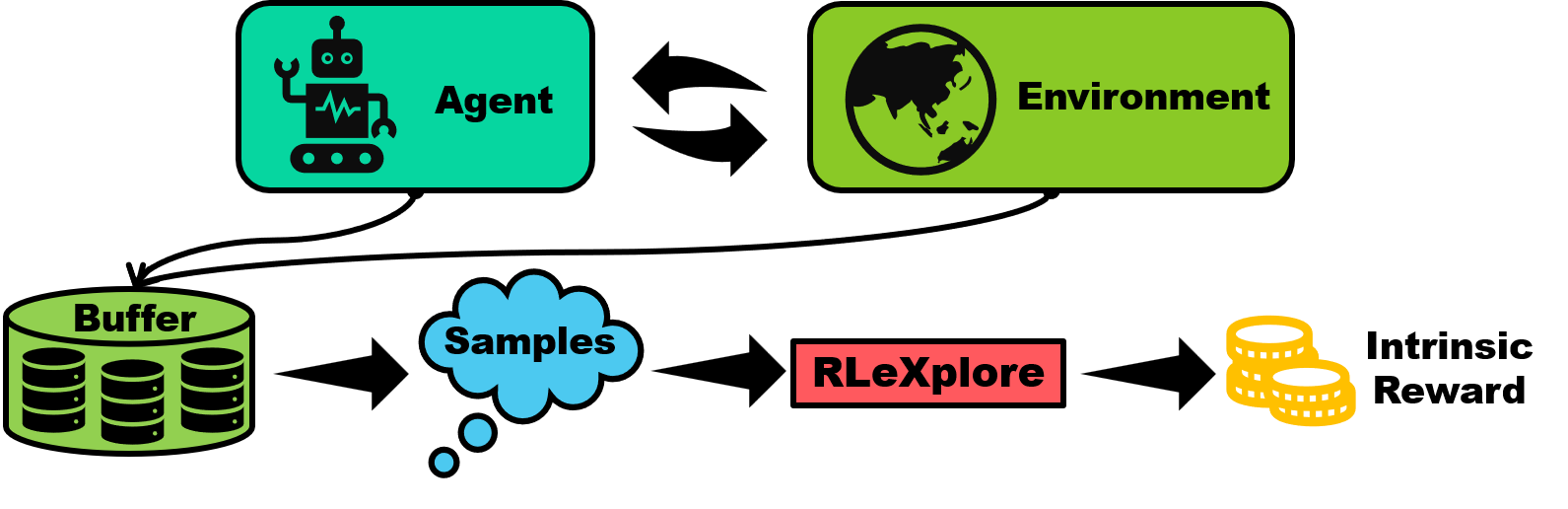

RLeXplore is a set of PyTorch implementations of intrinsic-reward-driven exploration approaches in reinforcement learning, which can be deployed in arbitrary algorithms in a plug-and-play manner. In particular, RLeXplore is designed to be compatible with Stable-Baselines3, providing more stable exploration benchmarks.

- See Changelog and Implemented Algorithms;

- Code testing is in progress. Contributions to this project are welcome!

- Get the repository with git:

```sh
git clone https://github.com/yuanmingqi/rl-exploration-baselines.git
```

- Run the following command to get dependencies:

```sh
pip install -r requirements.txt
```

Because the intrinsic reward methods differ widely in how they are computed, RLeXplore follows these rules:

- In RLeXplore, the environments are assumed to be vectorized;

- The `compute_irs` function of each intrinsic reward module has a mandatory argument `rollouts`, which is a dict like:

  + `observations`: `(n_steps, n_envs, *obs_shape)`, `<class 'numpy.ndarray'>`
  + `actions`: `(n_steps, n_envs, action_shape)`, `<class 'numpy.ndarray'>`
  + `rewards`: `(n_steps, n_envs, 1)`, `<class 'numpy.ndarray'>`

Take RE3, for instance: it computes the intrinsic reward for each state from the Euclidean distance between the state and its k-nearest neighbor, so `rollouts` only needs to contain `observations`.
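The `rollouts` layout and the distance-based reward described above can be sketched in plain NumPy. This is a simplified illustration, not RLeXplore's implementation: `make_rollouts` and `knn_distance_rewards` are hypothetical helpers, the number of discrete actions is made up, and distances are taken between flattened raw observations of the same environment, whereas RE3 measures them in the latent space of a random encoder. The `log(distance + 1)` form follows the RE3 paper.

```python
import numpy as np


def make_rollouts(n_steps, n_envs, obs_shape, action_shape, n_actions=4, seed=0):
    """Build a random rollouts dict with the array shapes listed above.

    n_actions is an illustrative assumption (hypothetical discrete action count).
    """
    rng = np.random.default_rng(seed)
    return {
        "observations": rng.standard_normal(
            (n_steps, n_envs, *obs_shape)).astype("float32"),
        "actions": rng.integers(0, n_actions, size=(n_steps, n_envs, action_shape)),
        "rewards": np.zeros((n_steps, n_envs, 1), dtype="float32"),
    }


def knn_distance_rewards(rollouts, k=3):
    """Toy k-NN reward: log(1 + distance to the k-th nearest neighbor).

    Assumption: neighbors are searched among the steps of the same environment,
    using flattened raw observations instead of encoder features.
    """
    obs = rollouts["observations"]
    n_steps, n_envs = obs.shape[:2]
    if not 0 < k < n_steps:
        raise ValueError("k must satisfy 0 < k < n_steps")
    flat = obs.reshape(n_steps, n_envs, -1)
    rewards = np.zeros((n_steps, n_envs, 1), dtype="float32")
    for env in range(n_envs):
        x = flat[:, env].astype(np.float64)           # (n_steps, dim)
        sq = np.sum(x * x, axis=1)
        # Squared pairwise distances via ||a||^2 + ||b||^2 - 2 a.b
        d2 = sq[:, None] + sq[None, :] - 2.0 * (x @ x.T)
        dists = np.sqrt(np.maximum(d2, 0.0))          # clip tiny negatives
        # After sorting, column 0 is the (near-zero) self-distance,
        # so column k holds the distance to the k-th nearest neighbor.
        kth = np.sort(dists, axis=1)[:, k]
        rewards[:, env, 0] = np.log1p(kth)
    return rewards


rollouts = make_rollouts(n_steps=8, n_envs=2, obs_shape=(3, 4, 4), action_shape=1)
rewards = knn_distance_rewards(rollouts, k=3)
print(rewards.shape)  # (8, 2, 1)
```

The returned array has one reward per step and environment, matching the `(n_steps, n_envs, 1)` layout of `rewards` in the `rollouts` dict.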

```python
import torch
import numpy as np
from rlexplore.re3 import RE3

if __name__ == '__main__':
    # Environment setup
    device = torch.device('cuda:0')
    obs_shape = (4, 84, 84)
    action_shape = 1  # for discrete action space
    n_envs = 16
    n_steps = 256
    # Collected experiences
    observations = np.random.randn(
        n_steps, n_envs, *obs_shape).astype('float32')

    # Create RE3 instance
    re3 = RE3(obs_shape=obs_shape, action_shape=action_shape, device=device,
              latent_dim=128, beta=0.05, kappa=0.00001)

    # Compute intrinsic rewards
    intrinsic_rewards = re3.compute_irs(rollouts={'observations': observations},
                                        time_steps=25600, k=3, average_entropy=False)

    print(intrinsic_rewards.shape, type(intrinsic_rewards))
    print(intrinsic_rewards)

# Output: (256, 16, 1) <class 'numpy.ndarray'>
```

Train with Stable-Baselines3 on PyBullet games:

```sh
python examples/ppo_re3_bullet.py --action-space cont --env-id AntBulletEnv-v0 --algo ppo --n-envs 10 --exploration re3 --total-time-steps 2000000 --n-steps 128
```

| Algorithm | Remark | Year | Paper | Code |
|---|---|---|---|---|
| ICM | Curiosity-driven exploration | 2017 | Curiosity-Driven Exploration by Self-Supervised Prediction | Link |
| RND | Count-based exploration | 2019 | Exploration by Random Network Distillation | Link |
| GIRM | Curiosity-driven exploration | 2020 | Intrinsic Reward Driven Imitation Learning via Generative Model | Link |
| NGU | Memory-based exploration | 2020 | Never Give Up: Learning Directed Exploration Strategies | Link |
| RIDE | Procedurally-generated environment | 2020 | RIDE: Rewarding Impact-Driven Exploration for Procedurally-Generated Environments | Link |
| RE3 | Shannon Entropy Maximization | 2021 | State Entropy Maximization with Random Encoders for Efficient Exploration | Link |
| RISE | Rényi Entropy Maximization | 2022 | Rényi State Entropy Maximization for Exploration Acceleration in Reinforcement Learning | Link |
| REVD | Rényi Divergence Maximization | 2022 | Rewarding Episodic Visitation Discrepancy for Exploration in Reinforcement Learning | Link |
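Since each intrinsic reward module exposes `compute_irs` with a `rollouts` argument, plugging one into a training loop amounts to adding its output to the collected rewards before the policy update. The sketch below illustrates that pattern under stated assumptions: `ConstantBonus` is a hypothetical stand-in module (not part of RLeXplore), `add_intrinsic_rewards` is an illustrative helper, and the `time_steps` argument is borrowed from the RE3 usage example. How the intrinsic term should be weighted against task rewards is left out.

```python
import numpy as np


class ConstantBonus:
    """Hypothetical stand-in for an intrinsic reward module (not in RLeXplore)."""

    def __init__(self, bonus=0.1):
        self.bonus = bonus

    def compute_irs(self, rollouts, time_steps):
        # One intrinsic reward per (step, env), matching the rewards layout.
        n_steps, n_envs = rollouts["observations"].shape[:2]
        return np.full((n_steps, n_envs, 1), self.bonus, dtype="float32")


def add_intrinsic_rewards(rollouts, module, time_steps):
    """Return the task rewards plus the module's intrinsic rewards."""
    intrinsic = module.compute_irs(rollouts=rollouts, time_steps=time_steps)
    if intrinsic.shape != rollouts["rewards"].shape:
        raise ValueError("intrinsic rewards must have shape (n_steps, n_envs, 1)")
    return rollouts["rewards"] + intrinsic


# Tiny rollout: 4 steps, 2 vectorized environments, 3-dimensional observations.
rollouts = {
    "observations": np.zeros((4, 2, 3), dtype="float32"),
    "actions": np.zeros((4, 2, 1), dtype="int64"),
    "rewards": np.ones((4, 2, 1), dtype="float32"),
}
total = add_intrinsic_rewards(rollouts, ConstantBonus(0.1), time_steps=0)
print(total.shape)  # (4, 2, 1)
```

Because the helper only relies on `compute_irs` and the `(n_steps, n_envs, 1)` reward layout, swapping in a different module should not require changes to the surrounding loop.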

28/12/2022

- Update RE3, RISE, RND, RIDE.

- Add a new method entitled REVD.

04/12/2022

- Update RND and RIDE.

03/12/2022

- Start restructuring the project to make it compatible with arbitrary tasks;

- Update RE3 and RISE.

27/09/2022

- Update RISE;

- Introduce JAX in RISE. See the `experimental` folder.

26/09/2022

- Update RE3;

- Try to introduce JAX to accelerate computation. See the `experimental` folder.

Some of the source code of RLeXplore is based on the following repositories: