- Introduction

- Components of Learning

- Expectation-Maximization Algorithm

- Pseudocode of GMM Algorithm

- Test make_blobs Dataset

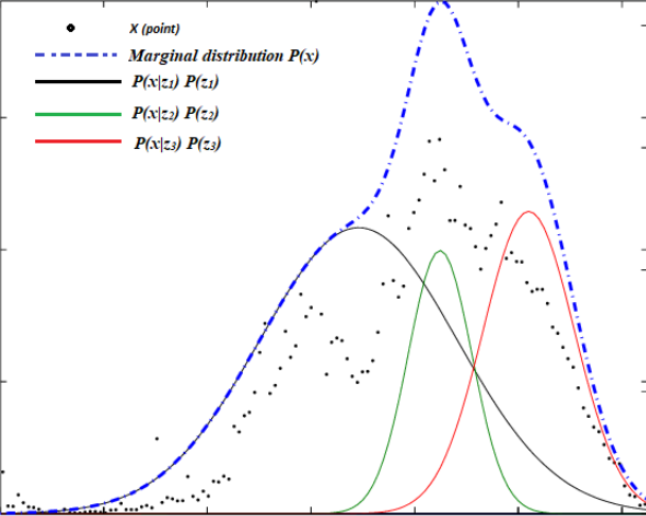

Modelling real dataset using single Gaussian distribution can suffers from significant limitations while kernel density of dataset showing two or more Gaussians distribution. The algorithm work by grouping instance into certain groups or cluster that generated by a Gaussian distribution. Gaussian Mixture initialize the covariances of the cluster based on the covariance type that represent the distribution of each cluster.

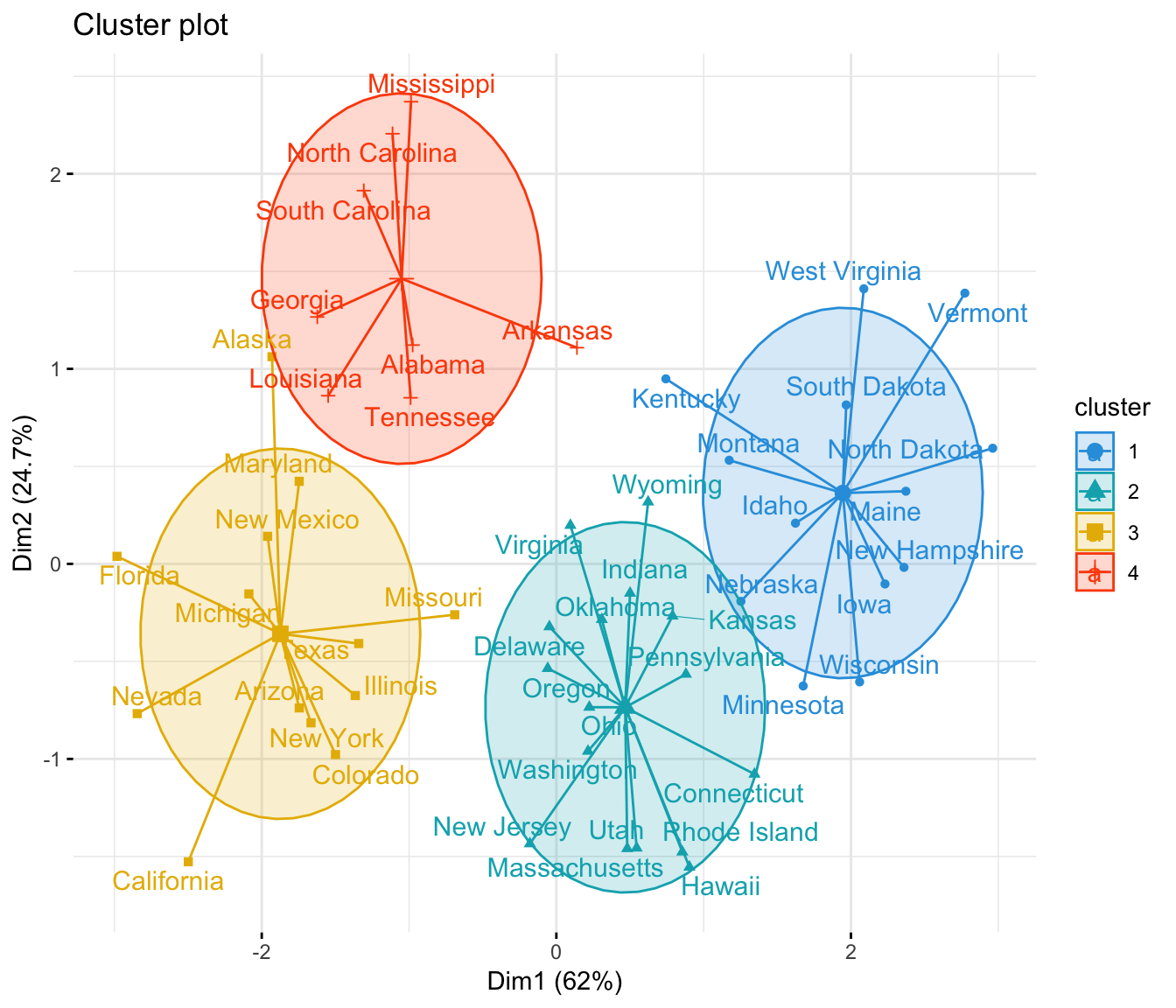

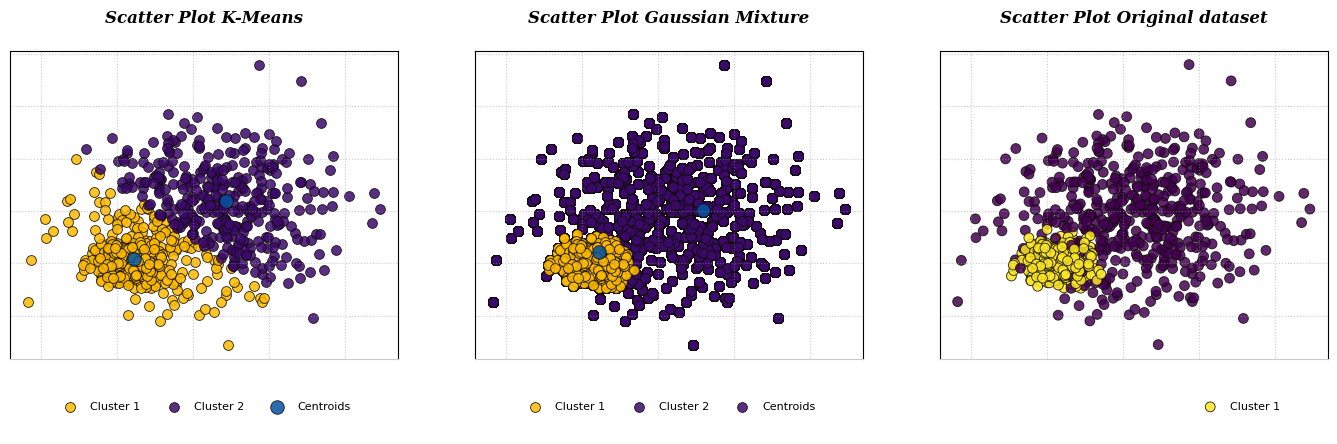

Figure 1 Gaussian Mixture Model toward dataWhile K-Means algorithm work by grouping instance into certain cluster based on the closest distance between the points to the centroid using Euclidean distance, K-Means algorithm also does not estimate the covariances of the cluster. (Figure 2)

Figure 2. K-Means Clustering toward dataGMM model is a probabilistic model that assumes the instances were generated from two or more certain Gaussians distribution whose parameters is unknown. All the instances generated from a single Gaussian distribution form a cluster that typically looks like an ellipsoid with different ellipsoidal shape, size, density, and orientation.

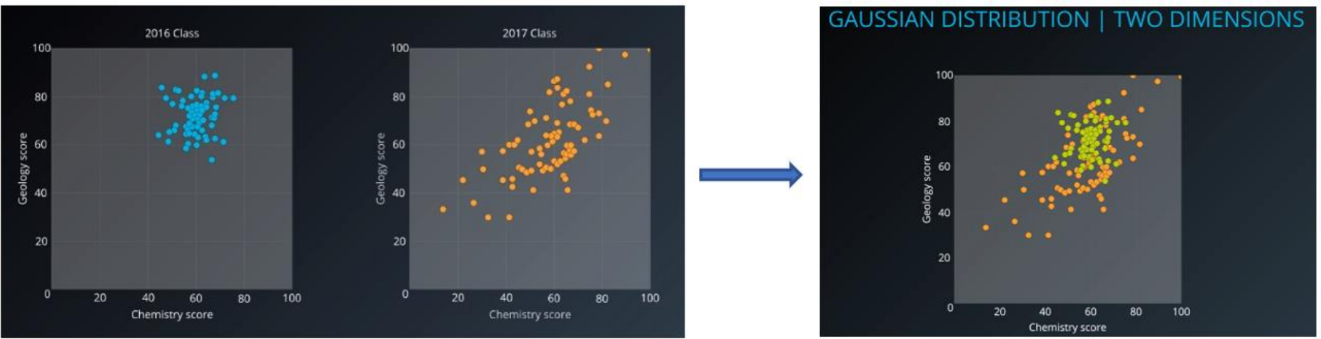

Figure 3.Gaussian cluster with different ellipsoidal, shape, size, density and orientationLet’s see how dataset with two or more Gaussians Distribution by visualize it into two dimensions (Figure 4). Sometimes the dataset contains the superposition of two Gaussians cluster, which Gaussian Mixture will tease it apart, whichever point to have the highest probability to have come from each Gaussians is classified as belonging to a separate cluster.

Figure 4. Two class with its own Multivariate Gaussian distribution (Left), Consider we mixed the dataset and remove the label, composite dataset would not be Gaussian, but we know it’s composed of two Gaussians distribution (Right).-

Hypothesis:

instances were generated from two or more certain Gaussians distribution whose parameters is unknown. -

Parameters:

Weights, Mean, Covariance, Responsibilities. -

Learning Algorithms:

Objective: Maximize weighted log-likelihood

by: Expectation-Maximization method -

Input:

X: Input data -

Output:

Centroids

Labels -

Hyperparameters:

k : number of centroids

n_init : number of initialization

max_iter : maximum iteration

tol : Change of norm weighted log-likelihood (converged parameter)

covariance type : {full, tied, spherical, diag}

Expectation-Maximization is a method in Gaussian algorithm in order to finding maximum likelihood solutions

for model with latent variable (McLachlan and Khrisnan, 1997). To give better understanding how EM method works,

given a K-Dimensional binary random variable z having a 1-of-K representation in which a particular element

We shall define the joint distribution

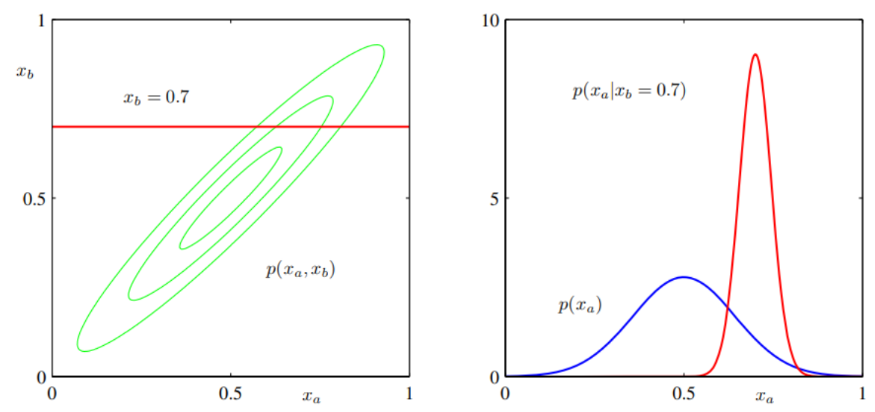

Here we shall suppose that we are given a Gaussian marginal distribution

Where the parameter

Similarly, the condition distribution of

Then Joint distribution is given by

Moreover, having joint distribution

This value of

Suppose we want to model dataset with Gaussian Mixture using dataset of observation {${x_1, x_2, …., x_N}$}, we can represent this data as

However, there’s significant problem associated with the maximum likelihood due to the presence of singularities. Suppose the one of components of the mixture model

This data point will then contribute a term of likelihood function of norm gaussian.

Which we consider the limit

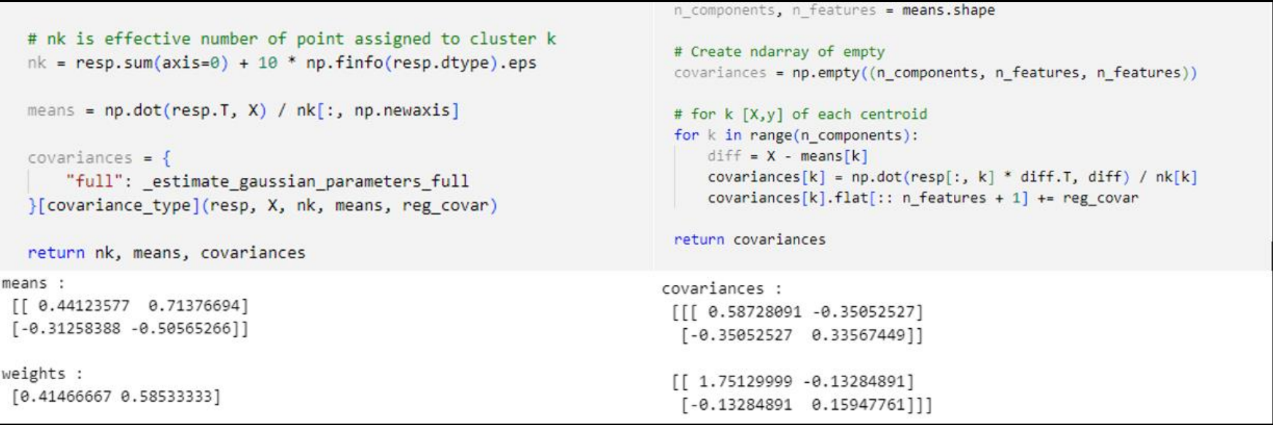

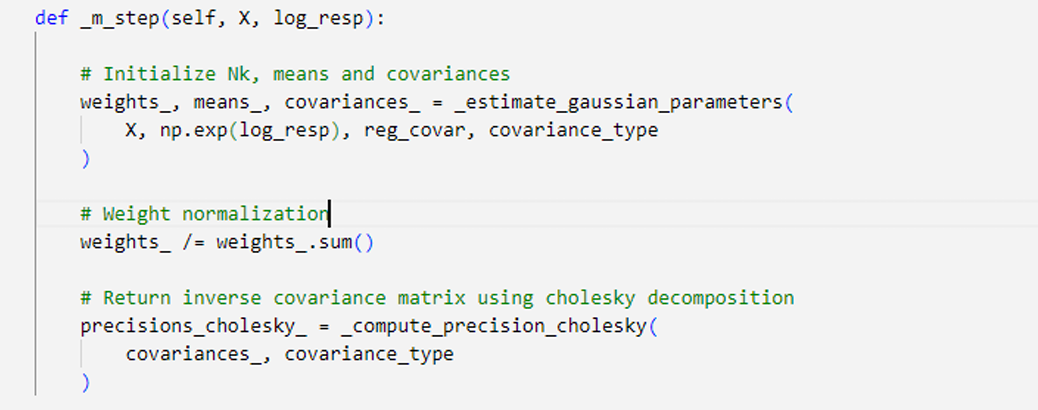

GMM algorithm first initialize mean

With

In the expectation (E-Step), we use the those initial values for the parameters to evaluate the posterior probabilities, or responsibilities

Then use these responsibilities

In order to initialize the values of responsibilities,

The initialization values of (

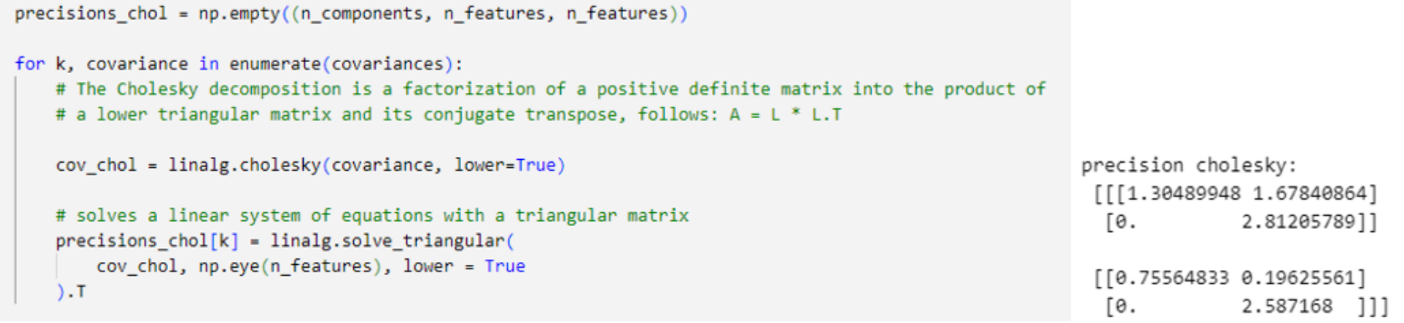

The Cholesky decomposition of a Hermitian positive-definite matrix A is a decomposition of the form A = [L][L]T , where L is Lower triangulation matrix with real and positive diagonal entries, and LT denotes the conjugate transpose of L. Every Hermitian positive-definite matrix (and thus also every real-valued symmetric positive-definite matrix) has a unique Cholesky decomposition. Using Cholesky decomposition avoid Singularity Effect as the main issue of reaching maximum likelihood by ensures precision matrix (inverse covariance) remains positive definite for a valid Gaussian distribution.

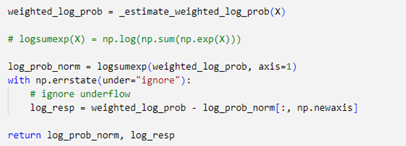

Expectation step finding the best normalized log likelihood in N x K components. Normalized log likelihood is calculated using equation 11, by performing scipy.logsumexp on. Expectation step also return log responsibilities

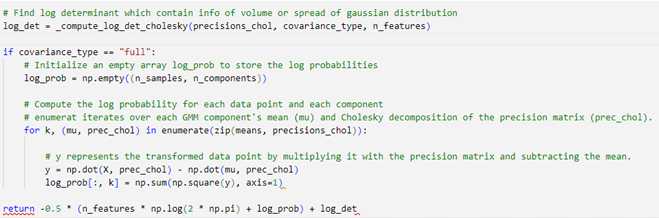

- Log Determinant

First step in expectation is to calculate log determinant of Cholesky Decomposition (Inverse covariance) which provide information about the volume or spread of the Gaussian distributions in the GMM.

- Log Gaussian Probabilities

log gaussian probabilities

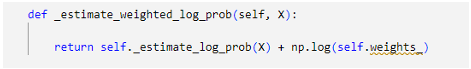

- Weighted Log likelihood

sum of Log Gaussian probabilities which refer to

This value will return as final value if converged is reached, and it will determine the probabilities of which cluster will be assigned to.

Figure 11 Algorithm of weighted log likelihood- Log Responsibilities

Log responsibilities will return as value of difference between weighted log likelihood and normalized log likelihood

This log responsibilities

Converged is reached when change of our normalized log-likelihood of i-iteration and our normalized log-probabilities of previous iteration is lower than tolerance (default = 0.001) that we determined before. Then, the parameter of

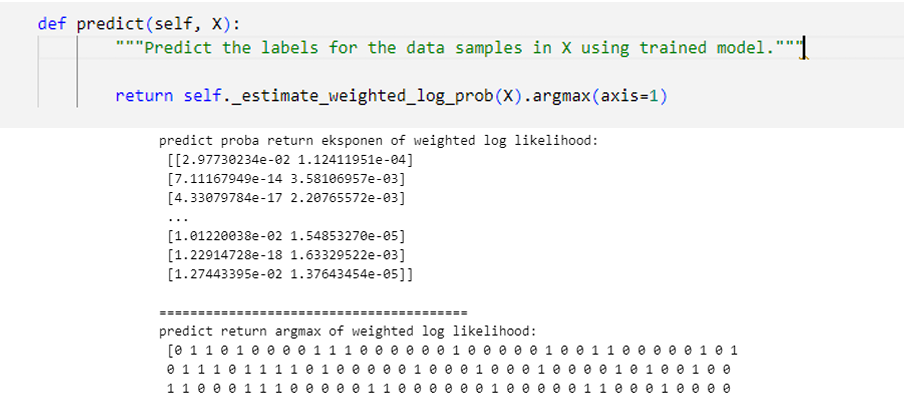

Figure 14. GMM Predict pseudocode

Predict function will return the argmax of log responsibilities that determine which cluster will be assigned for point -n based on which cluster has the highest probabilities.

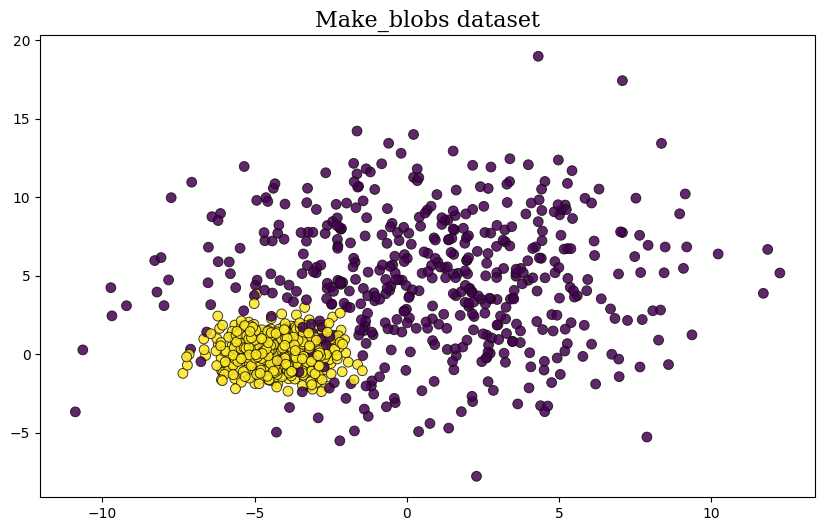

Figure 15. Log Responsibilities argmaxLet's try to create dataset from sklearn.dataset.make_blobs to investigate how the algorithm works.

n_samples = 1000

varied = datasets.make_blobs(n_samples=n_samples,

centers=2,

n_features=2,

cluster_std=[4, 1],

random_state=3)

X, y = varied[0], varied[1]

plt.figure( figsize=(10,6))

plt.scatter(X[:,0], X[:,1], c=y, s=50, cmap=plt.get_cmap('viridis'), **scatter_style)

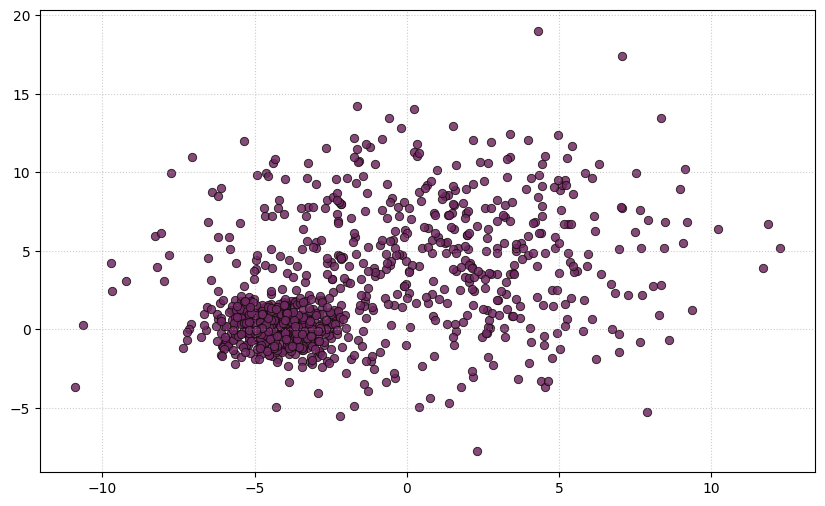

plt.title("Make_blobs dataset", **title)Let see how this data works as one cluster,

Figure 17. Original Dataset without clusterLet us now proceed to compare the operational principles of the K-Means clustering algorithm with those of the Gaussian Mixture Model algorithm, concerning their application to the original dataset.

- K-Means Clustering work by assigns each data point to the cluster whose centroid is closest to it, based on some distance metric (usually Euclidean distance).

- Besides, Gaussian Mixture represents each cluster as a probability distribution characterized by its mean and covariance matrix, expressing the likelihood of data points belonging to different clusters as probabilities.

K-Means aims for a hard assignment of data points to clusters based on distance, GMM takes a soft assignment approach.

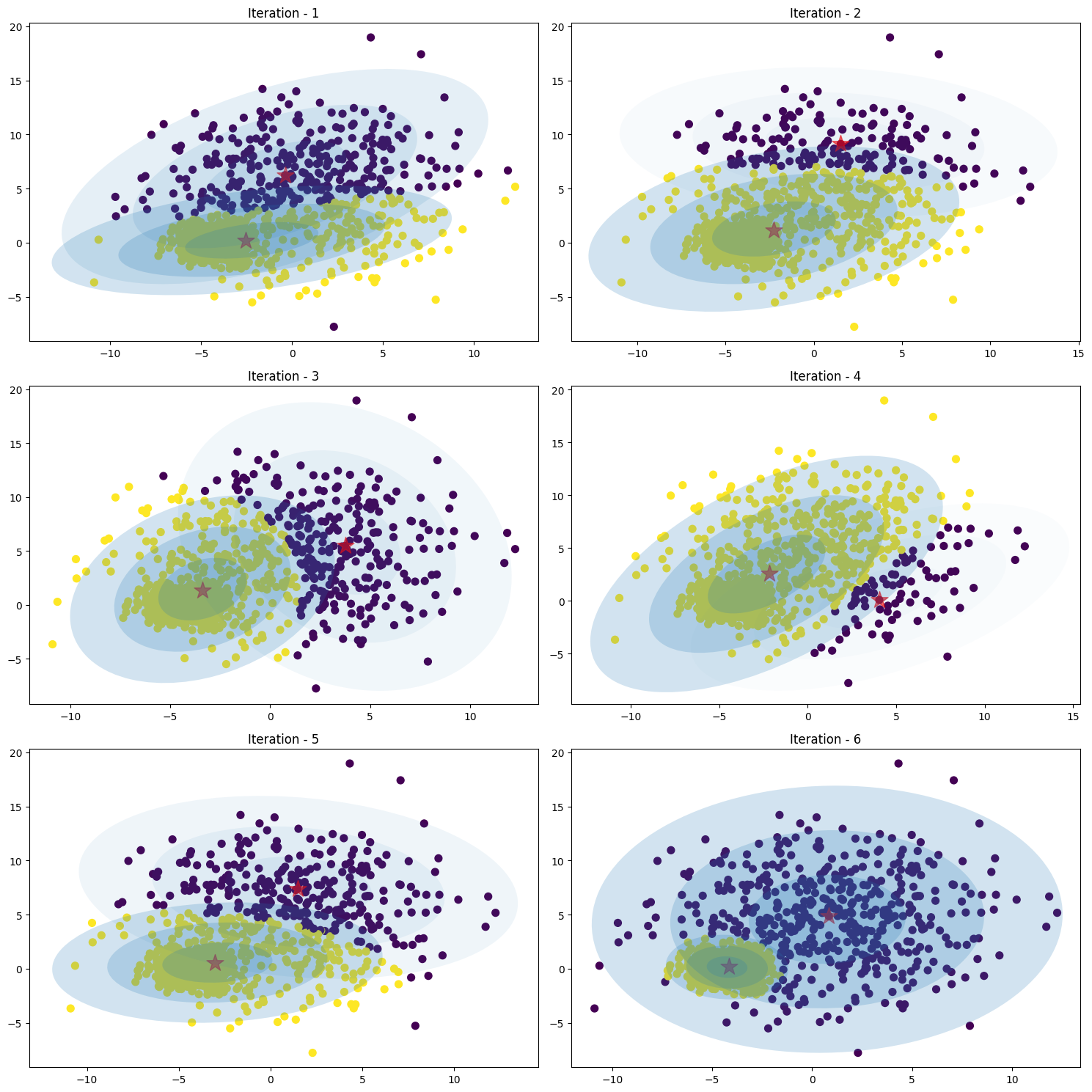

Image below is shown how the Gaussian mixture work without cholesky decomposition (inverse covariance) with number of iteration is [1, 5, 10, 15, 20, 25]

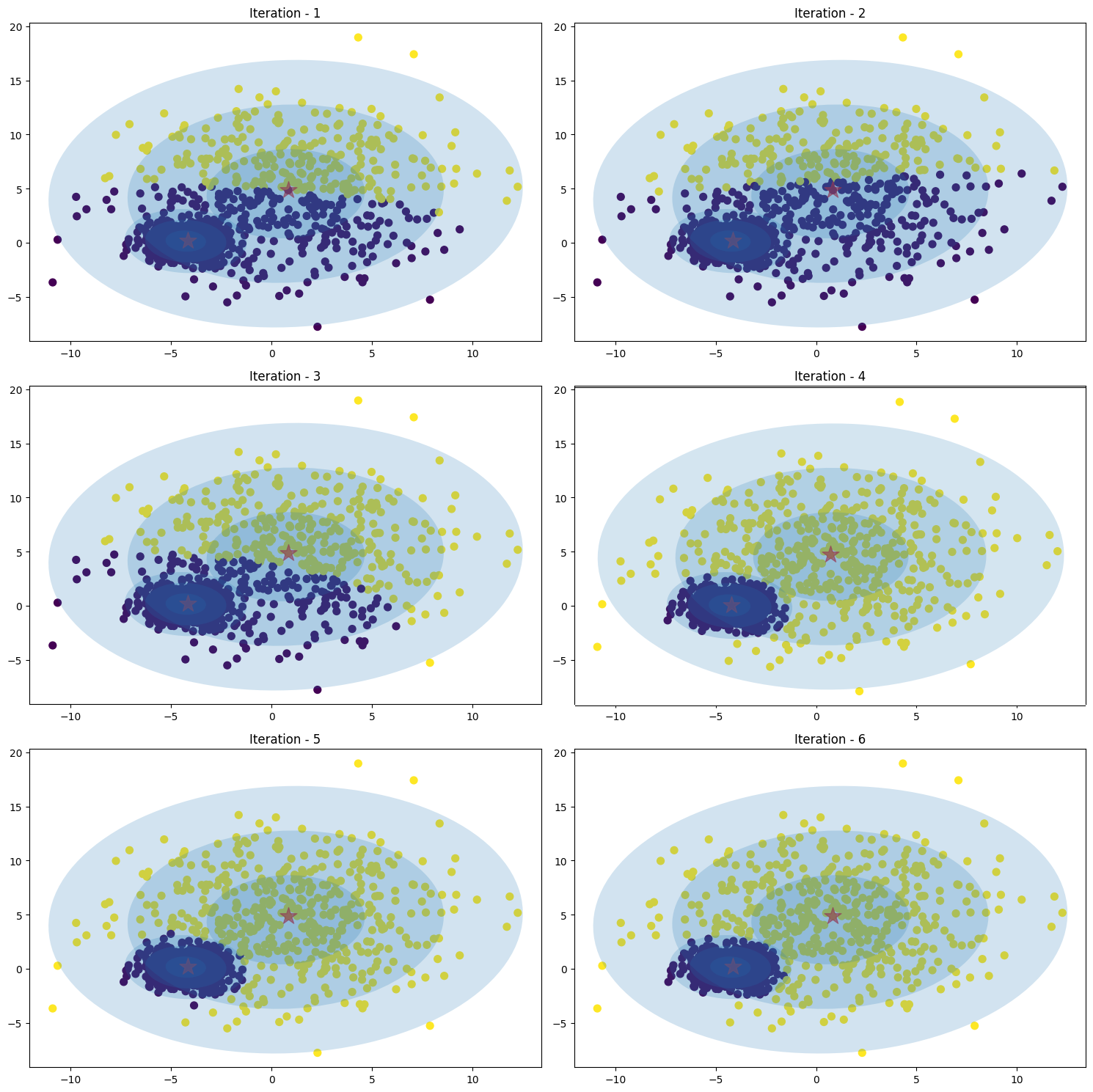

Figure 19. GMM ellipse without Cholesky decompositionImage below is shown how the Gaussian mixture work with cholesky decomposition (inverse covariance) with number of iteration is [1, 2, 3, 4, 5, 6]

Figure 20. GMM ellipse with Cholesky decomposition- Bishop, C.M. (2006). Pattern Recognition and Machine Learning. Springer.

- Géron, A. (2019). Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow. O'Reilly Media.

- Liu, M., Zhang, Q., Dai, Y., & Sun, C. (2018). Free-Resolution Probability Distributions Map-Based Precise Vehicle Localization in Urban Areas. IEEE Transactions on Intelligent Transportation Systems, 19(2), 546-559.

- Albon, C. (2020). Gaussian Mixture Models and Cluster Validation. Gaussian Mixture Models and Cluster Validation

- Scikit-Learn. Github