Learning the Transformer model (Attention Is All You Need, Google Brain, 2017) from the implementation code written by @hyunwoongko in 2021.

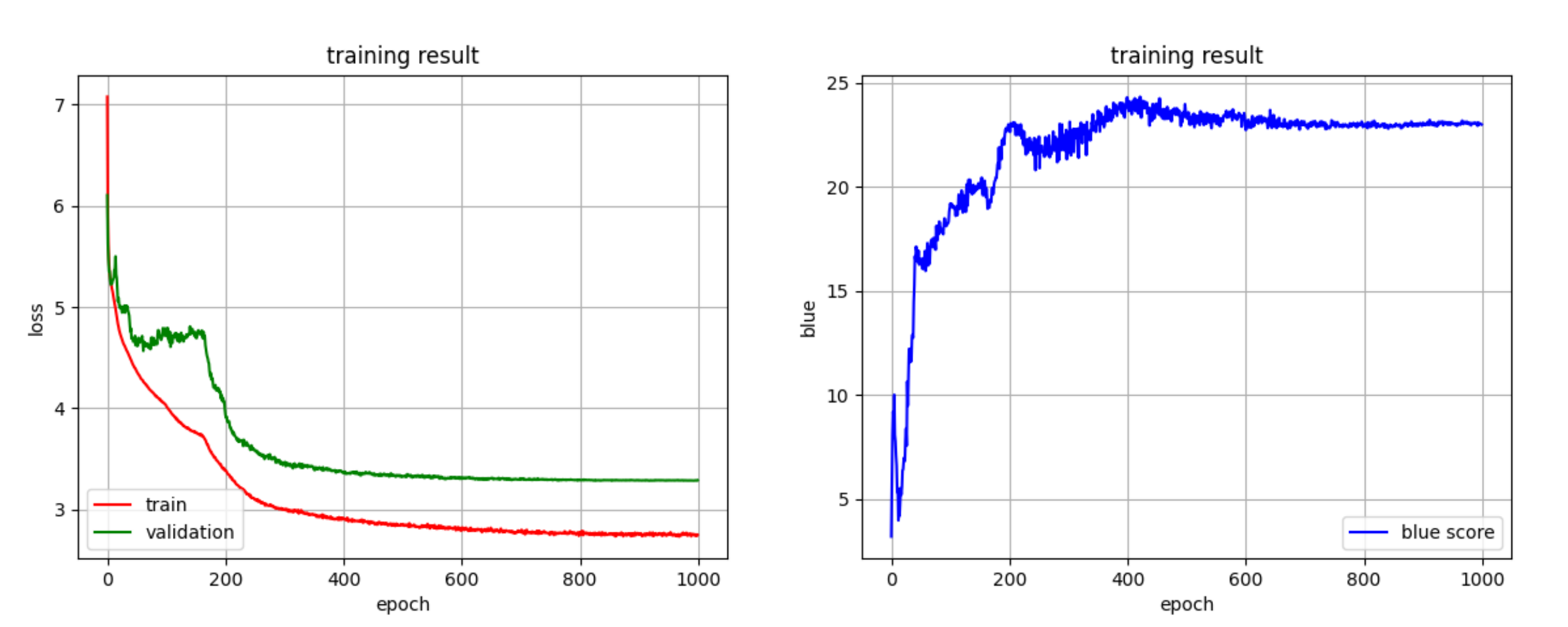

- Min train loss = 2.7348

- Min validation loss = 3.2860

- Max BLEU score = 24.3375

| Model | Dataset | BLEU Score |
|---|---|---|
| Hyunwoong Ko's | Multi30K EN-DE | 26.4 |
| My Implementation | Multi30K EN-DE | 24.3 |

- Attention Is All You Need, 2017 - Google Brain

- The Illustrated Transformer - Jay Alammar

- Multi-Headed Attention implementation PyTorch

- Transformers from scratch blog

- 🤗 Hugging Face course

- How Transformers work in deep learning and NLP: an intuitive introduction

- Why multi-head self attention works: math, intuitions and 10+1 hidden insights

OpenAI GPT model using 🤗 Hugging Face

BERT example from the Hugging Face Transformers library