Motion Puzzle: Arbitrary Motion Style Transfer by Body Part

Deok-Kyeong Jang, Soomin Park, and Sung-Hee Lee

ACM Transactions on Graphics (TOG) 2022, presented at ACM SIGGRAPH 2022

Paper: https://arxiv.org/abs/2202.05274

Project: https://lava.kaist.ac.kr/?page_id=6467

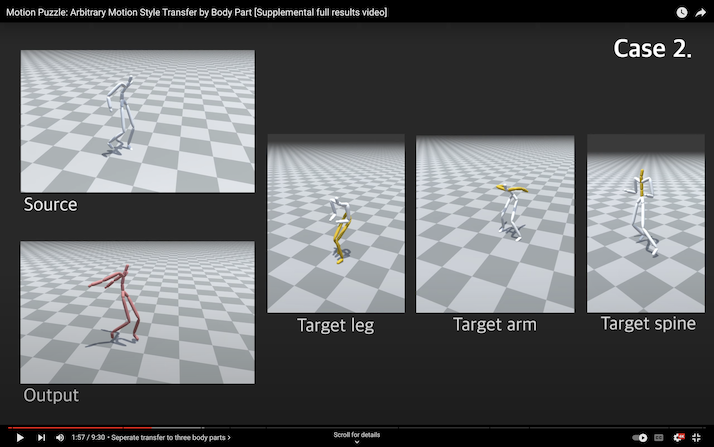

Abstract: This paper presents Motion Puzzle, a novel motion style transfer network that advances the state-of-the-art in several important respects. The Motion Puzzle is the first that can control the motion style of individual body parts, allowing for local style editing and significantly increasing the range of stylized motions. Designed to keep the human’s kinematic structure, our framework extracts style features from multiple style motions for different body parts and transfers them locally to the target body parts. Another major advantage is that it can transfer both global and local traits of motion style by integrating the adaptive instance normalization and attention modules while keeping the skeleton topology. Thus, it can capture styles exhibited by dynamic movements, such as flapping and staggering, significantly better than previous work. In addition, our framework allows for arbitrary motion style transfer without datasets with style labeling or motion pairing, making many publicly available motion datasets available for training. Our framework can be easily integrated with motion generation frameworks to create many applications, such as real-time motion transfer. We demonstrate the advantages of our framework with a number of examples and comparisons with previous work.

Click the figure to watch the demo video.

- PyTorch >= 1.10

- Tensorboard 2.9.0

- numpy 1.21.5

- scipy 1.8.0

- tqdm 4.64.0

- PyYAML 6.0

- matplotlib 3.5.2
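To compare an existing environment against the versions listed above, a short check like the following may help. It is only a sketch: the distribution names (`torch`, `PyYAML`, and so on) are assumed to be the usual PyPI names, and the script reports versions without enforcing them.

```python
from importlib import metadata

# Assumed PyPI distribution names; version strings copied from the list above.
REQUIRED = {
    "torch": ">=1.10",
    "tensorboard": "2.9.0",
    "numpy": "1.21.5",
    "scipy": "1.8.0",
    "tqdm": "4.64.0",
    "PyYAML": "6.0",
    "matplotlib": "3.5.2",
}


def installed_versions(names):
    """Return {name: installed version, or None if the package is missing}."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, have in installed_versions(REQUIRED).items():
        print(f"{name:<12} listed: {REQUIRED[name]:<8} installed: {have or 'missing'}")
```

Run it inside the activated conda environment after installing the dependencies.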

Clone this repository and create environment:

git clone https://github.com/DK-Jang/motion_puzzle.git

cd motion_puzzle

conda create -n motion_puzzle python=3.8

conda activate motion_puzzle

First, install PyTorch >= 1.10 from the official PyTorch website.

Install the other dependencies:

pip install -r requirements.txt

- To train the Motion Puzzle network, please download the datasets.

- To run the demo, please download both the datasets and the pre-trained parameters.

[Recommended] To download the train and test datasets and the pre-trained network, run the following commands:

bash download.sh datasets

bash download.sh pretrained-network

If you want to generate the train and test datasets from scratch, download the CMU dataset from Dropbox.

Then, place the zip file in database/ and unzip it. After that, run the following commands:

python ./preprocess/generate_mirror_bvh.py # generate mirrored bvh files

python ./preprocess/generate_dataset.py # generate train and test datasets

After downloading the pre-trained parameters, you can run the demo.

We use the post-processed results (*_fixed.bvh) for all demos.

To generate motion style transfer results, run the following command:

python test.py --config ./model_ours/info/config.yaml \
--content ./datasets/cmu/test_bvh/127_21.bvh \
--style ./datasets/cmu/test_bvh/142_21.bvh \
--output_dir ./output

Here --config is the model configuration path, --content is the input content bvh file, --style is the input style bvh file, and --output_dir is the output directory.

Generated motions (bvh format) will be placed under ./output.

*.bvh: ground-truth content and style motion,

*_recon.bvh: content reconstruction motion,

Style_*_Content_*.bvh: translated output motion,

Style_*_Content_*_fixed.bvh: translated output motion with post-processing including foot-sliding removal.
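The naming patterns above can be used to sort the contents of ./output by kind. The helper below is a minimal sketch built only on those patterns; the function name and the category labels are illustrative and are not part of the repository.

```python
from fnmatch import fnmatchcase
from pathlib import Path


def classify_output(filename):
    """Map a file name to the category it falls under in the list above.

    Patterns are checked from most to least specific, because every
    output file also matches the generic "*.bvh" pattern.
    """
    if fnmatchcase(filename, "Style_*_Content_*_fixed.bvh"):
        return "translated, post-processed"
    if fnmatchcase(filename, "Style_*_Content_*.bvh"):
        return "translated"
    if fnmatchcase(filename, "*_recon.bvh"):
        return "content reconstruction"
    if fnmatchcase(filename, "*.bvh"):
        return "ground truth"
    return "other"


if __name__ == "__main__":
    # Lists whatever is present; prints nothing if ./output does not exist yet.
    for path in sorted(Path("./output").glob("*.bvh")):
        print(f"{path.name}: {classify_output(path.name)}")
```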

This example transfers arbitrary unseen styles from three target motions, each for a different body part, to a single source motion.

You can change the style of each body part by changing the bvh files passed to --style_leg, --style_spine, and --style_arm.

In addition, by slightly modifying the forward function of Generator, the model can transfer styles to up to five body parts of the source motion.

python test_3bodyparts.py --config model_ours/info/config.yaml \
--content ./datasets/edin_locomotion/test_bvh/locomotion_walk_sidestep_000_000.bvh \
--style_leg ./datasets/Xia/test_bvh/old_normal_walking_002.bvh \
--style_spine ./datasets/Xia/test_bvh/old_normal_walking_002.bvh \
--style_arm ./datasets/Xia/test_bvh/childlike_running_003.bvh \
--output_dir ./output_3bodyparts

Here --content is the input content bvh file, and --style_leg, --style_spine, and --style_arm are the input style bvh files for the legs, spine, and arms.

Generated motions (bvh format) will be placed under ./output_3bodyparts.

*.bvh: ground-truth content and three target style motions,

Leg_*_Spine_*_Arm_*_Content_*.bvh: translated output motion,

Leg_*_Spine_*_Arm_*_Content_*_fixed.bvh: translated output motion with post-processing.
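When trying many combinations of leg, spine, and arm styles, it can be convenient to assemble the command in Python. The sketch below only builds the argument list from the flags shown above; `build_3bodyparts_command` is a hypothetical helper, not a function provided by the repository.

```python
import shlex


def build_3bodyparts_command(content, style_leg, style_spine, style_arm,
                             config="model_ours/info/config.yaml",
                             output_dir="./output_3bodyparts"):
    """Return the argument list for test_3bodyparts.py using the flags shown above."""
    return [
        "python", "test_3bodyparts.py",
        "--config", config,
        "--content", content,
        "--style_leg", style_leg,
        "--style_spine", style_spine,
        "--style_arm", style_arm,
        "--output_dir", output_dir,
    ]


cmd = build_3bodyparts_command(
    content="./datasets/edin_locomotion/test_bvh/locomotion_walk_sidestep_000_000.bvh",
    style_leg="./datasets/Xia/test_bvh/old_normal_walking_002.bvh",
    style_spine="./datasets/Xia/test_bvh/old_normal_walking_002.bvh",
    style_arm="./datasets/Xia/test_bvh/childlike_running_003.bvh",
)
# Print the shell-quoted command; to execute it, pass cmd to subprocess.run(cmd, check=True).
print(shlex.join(cmd))
```

Swapping a single keyword argument, for example `style_arm`, changes the style of that body part only.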

This example interpolates between two different style motions. It interpolates the style of all body parts with a linear weight of 0.5.

You can easily change the code to interpolate the style of only one, two, or three body parts.

python test_interpolation.py --config model_ours/info/config.yaml \
--content ./datasets/cmu/test_bvh/41_02.bvh \
--style1 datasets/cmu/test_bvh/137_11.bvh \
--style2 datasets/cmu/test_bvh/55_07.bvh \
--weight 0.5 \
--output_dir ./output_interpolation

Here --content is the input content bvh file, --style1 and --style2 are the two input style bvh files, and --weight is the interpolation weight.

Generated motions (bvh format) will be placed under ./output_interpolation.

*.bvh: ground-truth content and two style motions for interpolation,

All_*_Style1_*_Style2_*_Content_*_.bvh: interpolated output motion,

All_*_Style1_*_Style2_*_Content_*_fixed.bvh: interpolated output motion with post-processing.
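As a rough illustration of what a linear weight means here, the sketch below blends two vectors element-wise. It is not the repository's code: which features test_interpolation.py blends, and whether the weight applies to style1 or style2, are assumptions made for this example.

```python
def interpolate_style(style1, style2, weight):
    """Blend two equal-length vectors as weight * style1 + (1 - weight) * style2.

    Assumption: the weight multiplies style1. The actual script may use
    the opposite convention.
    """
    if len(style1) != len(style2):
        raise ValueError("style vectors must have the same length")
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return [weight * a + (1.0 - weight) * b for a, b in zip(style1, style2)]


# Toy 3-dimensional vectors, not real style features.
blended = interpolate_style([1.0, 0.0, 2.0], [3.0, 4.0, 2.0], weight=0.5)
print(blended)  # [2.0, 2.0, 2.0]
```

With a weight of 0.5 both styles contribute equally; under the assumed convention, a weight of 1.0 returns style1 unchanged and 0.0 returns style2.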

- Quantitative evaluation

To train the Motion Puzzle networks from scratch, run the following command.

Training takes about 5-6 hours on two 2080 Ti GPUs. Please see configs/config.yaml for training arguments.

python train.py --config configs/config.yaml

Trained networks will be placed under ./model_ours/.

We found that root information did not play a significant role in the performance of our framework. Therefore, in this GitHub project, we removed the root information from the input representation. We also removed the loss term for preserving the root motion from the total loss.

If you find this work useful for your research, please cite our paper:

@article{Jang_2022,

doi = {10.1145/3516429},

url = {https://doi.org/10.1145%2F3516429},

year = 2022,

month = {jun},

publisher = {Association for Computing Machinery ({ACM})},

volume = {41},

number = {3},

pages = {1--16},

author = {Deok-Kyeong Jang and Soomin Park and Sung-Hee Lee},

title = {Motion Puzzle: Arbitrary Motion Style Transfer by Body Part},

journal = {{ACM} Transactions on Graphics}

}

This repository contains pieces of code from the following repositories:

A Deep Learning Framework For Character Motion Synthesis and Editing.

Unpaired Motion Style Transfer from Video to Animation.

In addition, all mocap datasets are taken from the work of Holden et al.