Awesome ViT Quantization and Acceleration

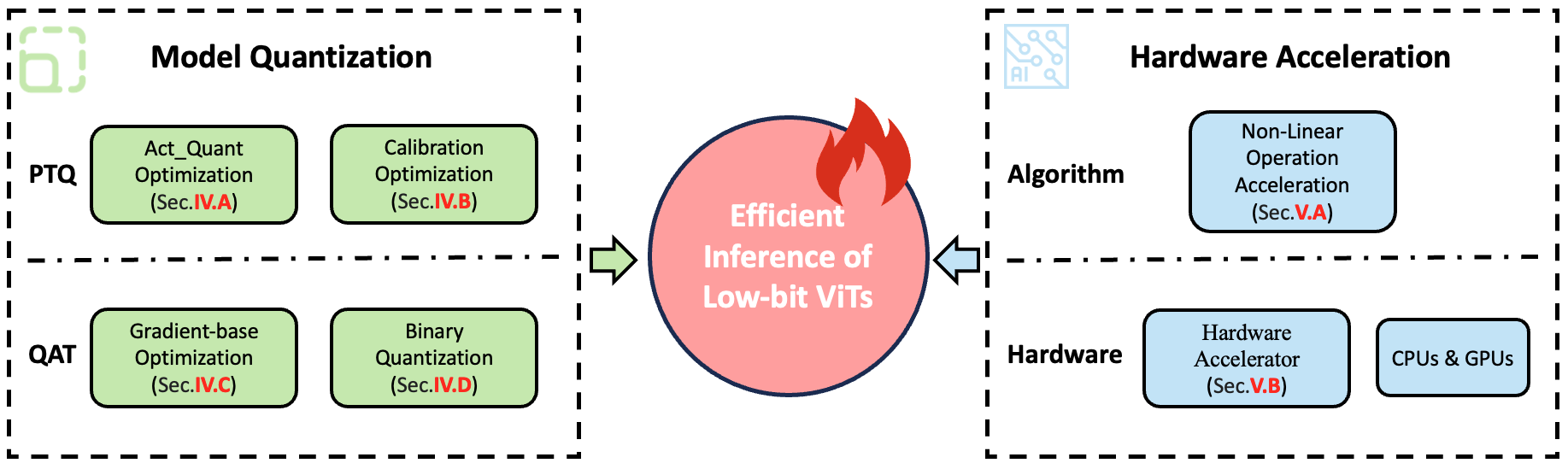

🔍 A curated list of papers on Vision Transformer (ViT) quantization and hardware acceleration, published in top-tier AI conferences and journals. The collection is organized by topic and draws on our comprehensive survey:

[Arxiv] Model Quantization and Hardware Acceleration for Vision Transformers: A Comprehensive Survey

Activation Quantization Optimization

| Date | Title | Paper | Code |
|---|---|---|---|
| 2021.11 | “PTQ4ViT: Post-training Quantization for Vision Transformers with Twin Uniform Quantization” | [ECCV'22] | [code] |
| 2021.11 | “FQ-ViT: Post-Training Quantization for Fully Quantized Vision Transformer” | [IJCAI'22] | [code] |
| 2022.12 | “RepQ-ViT: Scale Reparameterization for Post-Training Quantization of Vision Transformers” | [ICCV'23] | [code] |
| 2023.03 | “Towards Accurate Post-Training Quantization for Vision Transformer” | [MM'22] | - |
| 2023.05 | “TSPTQ-ViT: Two-scaled post-training quantization for vision transformer” | [ICASSP'23] | - |
| 2023.11 | “I&S-ViT: An Inclusive & Stable Method for Pushing the Limit of Post-Training ViTs Quantization” | [Arxiv] | [code] |
| 2024.01 | “MPTQ-ViT: Mixed-Precision Post-Training Quantization for Vision Transformer” | [Arxiv] | - |
| 2024.01 | “LRP-QViT: Mixed-Precision Vision Transformer Quantization via Layer-wise Relevance Propagation” | [Arxiv] | - |
| 2024.02 | “RepQuant: Towards Accurate Post-Training Quantization of Large Transformer Models via Scale Reparameterization” | [Arxiv] | - |
| 2024.04 | “Instance-Aware Group Quantization for Vision Transformers” | [Arxiv] | - |
| 2024.05 | “P^2-ViT: Power-of-Two Post-Training Quantization and Acceleration for Fully Quantized Vision Transformer” | [Arxiv] | [code] |

Calibration Optimization For PTQ

| Date | Title | Paper | Code |
|---|---|---|---|
| 2021.06 | “Post-Training Quantization for Vision Transformer” | [NIPS 2021] | [code] |
| 2021.11 | “PTQ4ViT: Post-training Quantization for Vision Transformers with Twin Uniform Quantization” | [ECCV'22] | [code] |
| 2022.03 | “Patch Similarity Aware Data-Free Quantization for Vision Transformers” | [ECCV'22] | [code] |
| 2022.09 | “PSAQ-ViT V2: Towards Accurate and General Data-Free Quantization for Vision Transformers” | [TNNLS'23] | [code] |
| 2022.11 | “NoisyQuant: Noisy Bias-Enhanced Post-Training Activation Quantization for Vision Transformers” | [CVPR'23] | - |
| 2023.03 | “Towards Accurate Post-Training Quantization for Vision Transformer” | [MM'22] | - |
| 2023.05 | “Finding Optimal Numerical Format for Sub-8-Bit Post-Training Quantization of Vision Transformers” | [ICASSP'23] | - |
| 2023.08 | “Jumping through Local Minima: Quantization in the Loss Landscape of Vision Transformers” | [ICCV'23] | [code] |
| 2023.10 | “LLM-FP4: 4-Bit Floating-Point Quantized Transformers” | [EMNLP'23] | [code] |
| 2024.05 | “P^2-ViT: Power-of-Two Post-Training Quantization and Acceleration for Fully Quantized Vision Transformer” | [Arxiv] | [code] |

Gradient-based Optimization For QAT

| Date | Title | Paper | Code |
|---|---|---|---|
| 2022.01 | “TerViT: An Efficient Ternary Vision Transformer” | [Arxiv] | - |
| 2022.10 | “Q-ViT: Accurate and Fully Quantized Low-bit Vision Transformer” | [NIPS'22] | [code] |
| 2022.12 | “Quantformer: Learning Extremely Low-Precision Vision Transformers” | [TPAMI'22] | - |
| 2023.02 | “Oscillation-free Quantization for Low-bit Vision Transformers” | [PMLR'23] | [code] |
| 2023.05 | “Boost Vision Transformer with GPU-Friendly Sparsity and Quantization” | [CVPR'23] | - |
| 2023.06 | “Bit-Shrinking: Limiting Instantaneous Sharpness for Improving Post-Training Quantization” | [CVPR'23] | - |
| 2023.07 | “Variation-aware Vision Transformer Quantization” | [Arxiv] | [code] |
| 2023.12 | “PackQViT: Faster Sub-8-bit Vision Transformers via Full and Packed Quantization on the Mobile” | [NIPS'23] | - |

| Date | Title | Paper | Code |
|---|---|---|---|
| 2022.11 | “BiViT: Extremely Compressed Binary Vision Transformer” | [ICCV'23] | - |
| 2023.05 | “BinaryViT: Towards Efficient and Accurate Binary Vision Transformers” | [Arxiv] | - |
| 2023.06 | “BinaryViT: Pushing Binary Vision Transformers Towards Convolutional Models” | [CVPR'23] | [code] |
| 2024.05 | “BinaryFormer: A Hierarchical-Adaptive Binary Vision Transformer (ViT) for Efficient Computing” | [TII] | - |

Non-linear Operations Acceleration

| Date | Title | Paper | Code |
|---|---|---|---|
| 2021.11 | “FQ-ViT: Post-Training Quantization for Fully Quantized Vision Transformer” | [IJCAI'22] | [code] |
| 2022.07 | “I-ViT: Integer-only Quantization for Efficient Vision Transformer Inference” | [ICCV'23] | [code] |
| 2023.06 | “Practical Edge Kernels for Integer-Only Vision Transformers Under Post-training Quantization” | [MLSYS'23] | - |
| 2023.10 | “SOLE: Hardware-Software Co-design of Softmax and LayerNorm for Efficient Transformer Inference” | [ICCAD'23] | - |
| 2023.12 | “PackQViT: Faster Sub-8-bit Vision Transformers via Full and Packed Quantization on the Mobile” | [NIPS'23] | - |
| 2024.05 | “P^2-ViT: Power-of-Two Post-Training Quantization and Acceleration for Fully Quantized Vision Transformer” | [Arxiv] | [code] |

| Date | Title | Paper | Code |
|---|---|---|---|
| 2022.01 | “VAQF: Fully Automatic Software-Hardware Co-Design Framework for Low-Bit Vision Transformer” | [Arxiv] | - |
| 2022.08 | “Auto-ViT-Acc: An FPGA-Aware Automatic Acceleration Framework for Vision Transformer with Mixed-Scheme Quantization” | [FPL'22] | - |
| 2023.10 | “An Integer-Only and Group-Vector Systolic Accelerator for Efficiently Mapping Vision Transformer on Edge” | [TCAS-I'23] | - |
| 2023.10 | “SOLE: Hardware-Software Co-design of Softmax and LayerNorm for Efficient Transformer Inference” | [ICCAD'23] | - |
| 2024.05 | “P^2-ViT: Power-of-Two Post-Training Quantization and Acceleration for Fully Quantized Vision Transformer” | [Arxiv] | [code] |

If you find our survey useful or relevant to your research, please kindly cite our paper:

@misc{du2024model,
  title={Model Quantization and Hardware Acceleration for Vision Transformers: A Comprehensive Survey},
  author={Dayou Du and Gu Gong and Xiaowen Chu},
  year={2024},
  eprint={2405.00314},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}