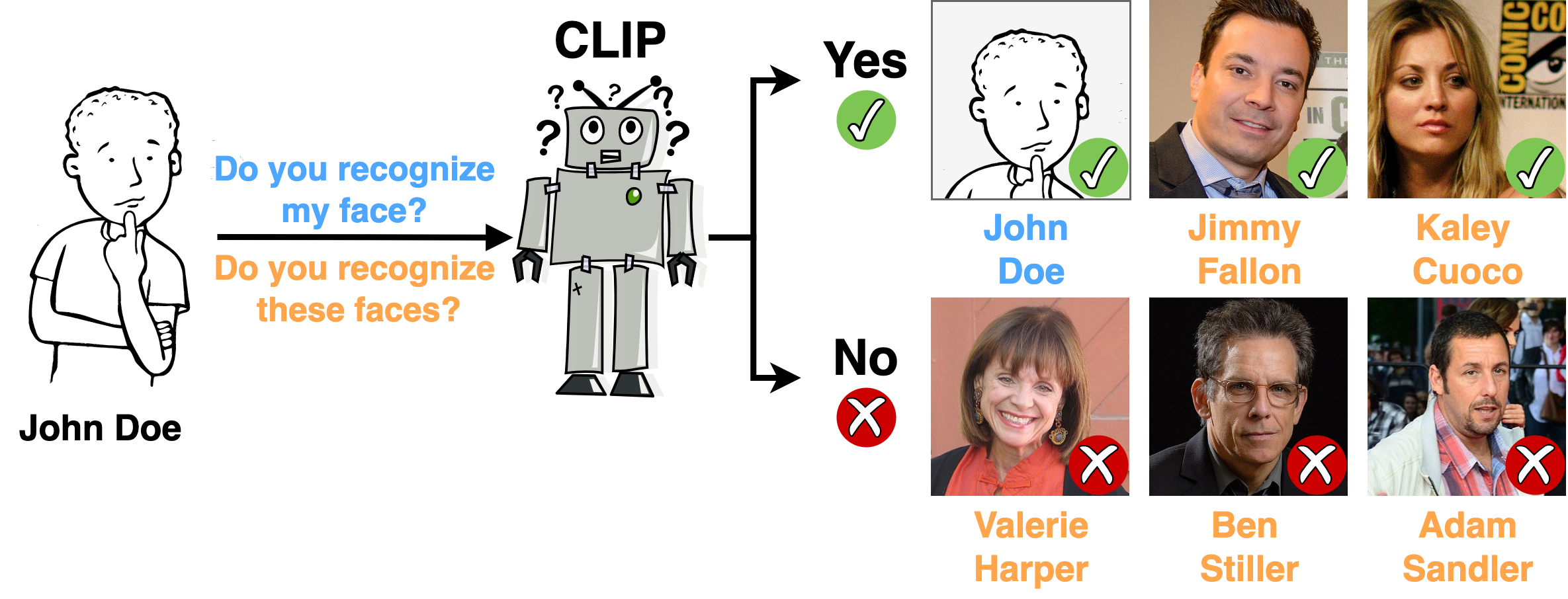

Illustration of our Identity Inference Attack (IDIA). True-positive (✓) and true-negative (✗) predictions of whether individuals were part of CLIP's training data. The IDIA was performed on a CLIP model trained on the Conceptual Captions 3M dataset, where each person appeared only 75 times in a dataset with a total of 2.8 million image-text pairs. Celebrity images licensed as CC BY 2.0 (see Jimmy Fallon, Kaley Cuoco, Valerie Harper, Ben Stiller, Adam Sandler).

Identity Inference Attack (IDIA) examples for infrequently appearing celebrities. True-positive (✓) and true-negative (✗) predictions for European and American celebrities in the LAION-400M dataset. The number in parentheses indicates how often the person appeared in the LAION dataset, which contains 400 million image-text pairs. The IDIA was performed on different CLIP models trained on the LAION-400M dataset, with the same prediction results. Images licensed as CC BY 3.0 and CC BY 2.0 (see Bernhard Hoëcker, Bettina Lamprecht, Ilene Kristen, Carolin Kebekus, Crystal Chappell, Guido Cantz, Max Giermann, Kai Pflaume, Michael Kessler).

Abstract: With the rise of deep learning in various applications, privacy concerns around the protection of training data have become a critical area of research. Whereas prior studies have focused on privacy risks in single-modal models, we introduce a novel method to assess privacy for multi-modal models, specifically vision-language models like CLIP. The proposed Identity Inference Attack (IDIA) reveals whether an individual was included in the training data by querying the model with images of the same person. By letting the model choose from a wide variety of possible text labels, the attack reveals whether the model recognizes the person and, therefore, whether the person's data was used for training. Our large-scale experiments on CLIP demonstrate that individuals used for training can be identified with very high accuracy. We confirm that the model has learned to associate names with depicted individuals, implying the existence of sensitive information that can be extracted by adversaries. Our results highlight the need for stronger privacy protection in large-scale models and suggest that IDIAs can be used to prove the unauthorized use of data for training and to enforce privacy laws.

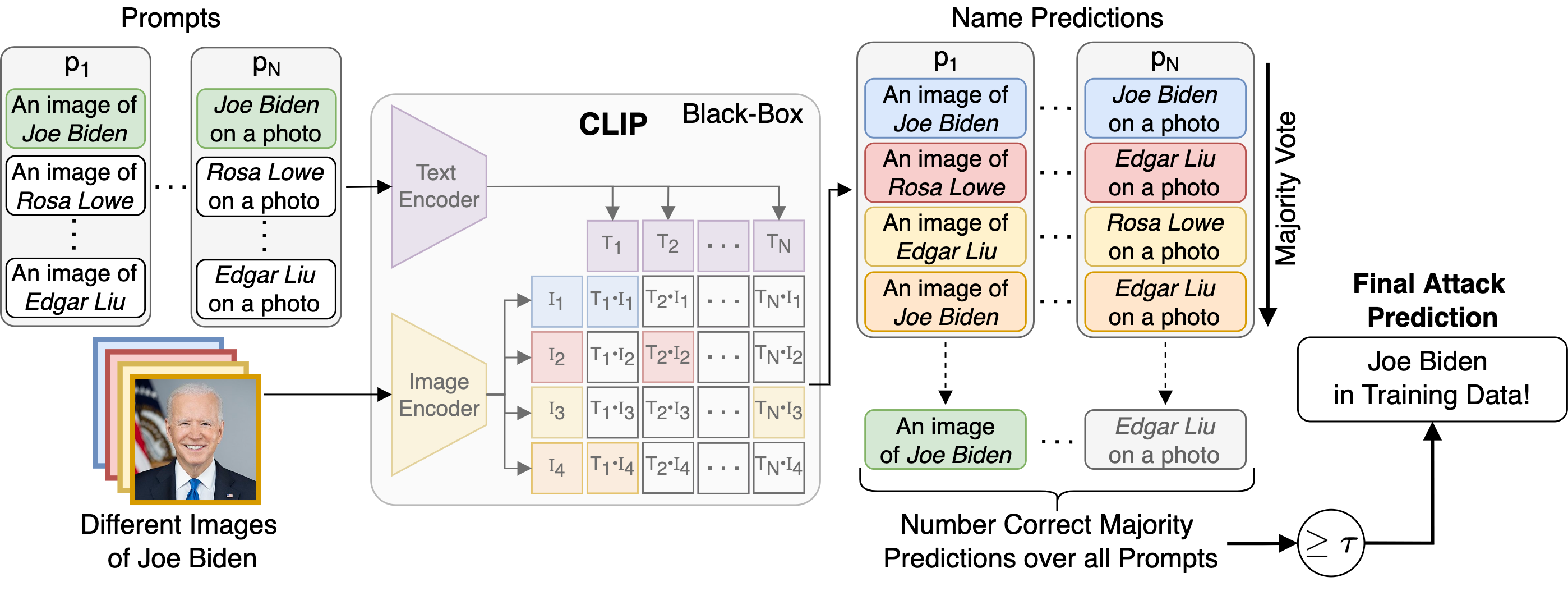

Identity Inference Attack (IDIA). Depiction of the workflow of our IDIA. Given different images and the name of a person, CLIP is queried with the images and multiple prompt templates containing possible names. After the query results are received, the name inferred for the majority of images is taken as the predicted name for each prompt. If the number of correct predictions over all prompts is greater than or equal to a threshold τ, the person is assumed to be in the training data. (Best viewed in color.)
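The decision rule in the caption above can be sketched in a few lines of Python. This is a minimal illustration and not the repository's implementation: the function names, the nested-list layout of the predictions, and the placeholder names are assumptions, and the CLIP queries themselves are taken as already done.

```python
from collections import Counter
from typing import Sequence


def majority_name(names_per_image: Sequence[str]) -> str:
    """Return the name predicted for the most images under one prompt template.

    Ties are resolved in favor of the name seen first (an assumption of this sketch).
    """
    return Counter(names_per_image).most_common(1)[0][0]


def idia_decision(predictions: Sequence[Sequence[str]], true_name: str, tau: int) -> bool:
    """Decide whether a person is assumed to be in the training data.

    predictions[p][i] is the name the model chose for image i under prompt p.
    The person counts as a member if at least `tau` prompts yield the true name
    as the majority prediction.
    """
    correct_prompts = sum(
        majority_name(per_prompt) == true_name for per_prompt in predictions
    )
    return correct_prompts >= tau


# Hypothetical predictions for three prompt templates and three images each.
predictions = [
    ["Alice Example", "Alice Example", "Bob Example"],    # majority: Alice Example
    ["Bob Example", "Bob Example", "Alice Example"],      # majority: Bob Example
    ["Alice Example", "Alice Example", "Alice Example"],  # majority: Alice Example
]
is_member = idia_decision(predictions, "Alice Example", tau=2)
```

Here two of the three prompts yield the correct name, so the person is predicted to be a member for τ = 2 but not for τ = 3.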

This part describes everything that is needed to set up and run the experiments on the LAION-400M dataset.

The first step is to download the images of the individuals that are not present in the LAION-400M dataset. To do this, simply run the following script:

    python laion400m_experiments/00_download_laion_european_non_members.py

After all setup steps are done, the notebooks 01_analyze_laion400m.ipynb and 02_idia_laion_multiprompt.ipynb can be run.

This part describes everything that is needed to set up and run the experiments on the Conceptual Captions 3M dataset. First, the dataset has to be downloaded from the official website. Because failed image downloads sometimes produce empty files, the 00_filter_empty_files.ipynb notebook has to be used to remove all empty files before training the models.
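The empty-file cleanup can be sketched as follows. This is an illustrative stand-in and not the contents of 00_filter_empty_files.ipynb: the function names and the dry-run flag are assumptions, and "empty" is taken to mean a file size of zero bytes.

```python
from pathlib import Path
from typing import List, Union


def find_empty_files(root: Union[str, Path]) -> List[Path]:
    """Return all zero-byte files below `root`, sorted by path."""
    return sorted(
        p for p in Path(root).rglob("*") if p.is_file() and p.stat().st_size == 0
    )


def remove_empty_files(root: Union[str, Path], dry_run: bool = True) -> List[Path]:
    """List zero-byte files below `root` and delete them unless `dry_run` is set."""
    empty = find_empty_files(root)
    if not dry_run:
        for path in empty:
            path.unlink()
    return empty
```

Running it once with dry_run=True and inspecting the returned paths before deleting anything is a cautious default for a large download directory.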

Create an index using clip-retrieval. Then we can start clip-retrieval in multiple Docker containers:

    for i in 0 1 2 3 4 5 6 7 8 9; do ./docker_run.sh -d "0" -m mounts.docker -n clip-retrieval${i}_gpu0; done

Then we can run the clip-retrieval command in all those Docker containers:

    for i in 0 1 2 3 4 5 6 7 8 9; do docker exec -d clip-retrieval${i}_gpu0 clip-retrieval back --port 1337 --indices-paths configs/laion400m.json; done

Before running the notebooks of the CC3M experiments, you first have to download the pretrained models from the GitHub release page and extract them to cc3m_experiments/checkpoints. Don't forget to unzip the ResNet-50x4 model files after moving them into the checkpoints folder.

Download the ResNet-50 pretrained on FaceScrub (rn50_facescrub.ckpt) from the release page and extract it to facescrub_training/pretrained_models/rn50_facescrub.ckpt.

Download the image embeddings of the FaceScrub dataset calculated using the OpenAI CLIP model (openai_facescrub_embeddings.pt) and move the file to embeddings/openai_facescrub.pt.

After all setup steps are done, the notebooks can be run in the order following the numbering.

If you build upon our work, please don't forget to cite us.

@article{hintersdorf22_does_clip_know_my_name,
  author = {Hintersdorf, Dominik and Struppek, Lukas and Brack, Manuel and Friedrich, Felix and Schramowski, Patrick and Kersting, Kristian},
  title = {Does CLIP Know my Face?},
  journal = {arXiv preprint},
  volume = {arXiv:2209.07341},
  year = {2022},
}

Jimmy Fallon, by Montclair Film, 2013, cropped, CC BY 2.0

Kaley Cuoco, by MelodyJSandoval, 2009, cropped, CC BY 2.0

Valerie Harper, by Maggie, 2007, cropped, CC BY 2.0

Ben Stiller, by Montclair Film (Photography by Neil Grabowsky), 2019, cropped, CC BY 2.0

Adam Sandler, by Glyn Lowe Photo Works, 2014, cropped, CC BY 2.0

Bernhard Hoëcker, by JCS, 2012, cropped, CC BY 3.0

Bettina Lamprecht, by JCS, 2012, cropped, CC BY 3.0

Ilene Kristen, by Greg Hernandez, 2014, cropped, CC BY 2.0

Carolin Kebekus, by Harald Krichel, 2019, cropped, CC BY 3.0

Crystal Chappell, by Greg Hernandez, 2010, cropped, CC BY 2.0

Guido Cantz, by JCS, 2015, cropped, CC BY 3.0

Max Giermann, by JCS, 2017, cropped, CC BY 3.0

Kai Pflaume, by JCS, 2012, cropped, CC BY 3.0

Michael Kessler, by JCS, 2012, cropped, CC BY 3.0