The official implementation for Candidate Set Re-ranking for Composed Image Retrieval with Dual Multi-modal Encoder.


News and upcoming updates

- Feb-2024 Camera-ready version is now available (click the paper badge above to view).

- Jan-2024 Our paper has been accepted for publication in TMLR; the camera-ready version is coming soon.

- Jan-2024 Readme instructions released.

- Jan-2024 Code and pre-trained checkpoints released.

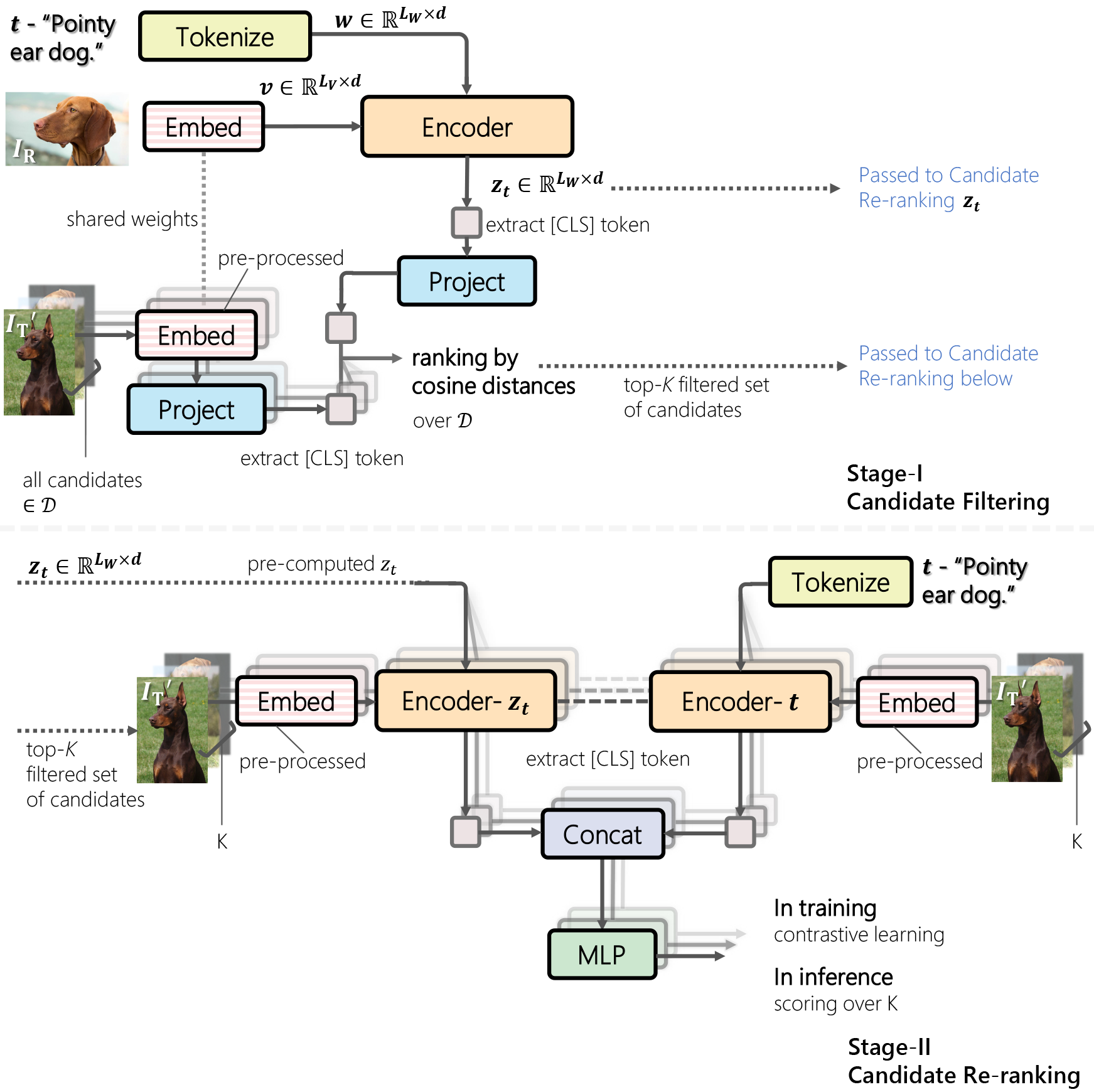

The figure above illustrates our two-stage training pipeline.

Stage-I candidate filtering yields the top-K candidate list per query, which is then re-ranked in stage-II.

Here, stage-I is faster in inference, while stage-II is slower but much more discriminative.
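The following is a minimal, framework-free sketch of the two-stage idea. It is not the repository's code. Stage I ranks the whole gallery by cosine similarity between pre-computed embeddings and keeps the top-K. Stage II re-scores only those K candidates. The function names are hypothetical. The `score_fn` callback is a stand-in for the dual encoder that scores a reference-text-candidate triplet.

```python
import math
from typing import Callable, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def stage1_topk(query_emb: Sequence[float],
                gallery_embs: Sequence[Sequence[float]],
                k: int) -> List[int]:
    """Stage I (hypothetical helper): rank every gallery item by vector similarity, keep top-k indices."""
    scores = [cosine(query_emb, g) for g in gallery_embs]
    ranked = sorted(range(len(gallery_embs)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


def stage2_rerank(candidates: List[int], score_fn: Callable[[int], float]) -> List[int]:
    """Stage II (hypothetical helper): re-order only the shortlist using a slower joint scorer."""
    return sorted(candidates, key=score_fn, reverse=True)


if __name__ == "__main__":
    query = [1.0, 0.0]
    gallery = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
    shortlist = stage1_topk(query, gallery, k=2)
    # Made-up stage-II scores standing in for the triplet dual encoder.
    triplet_scores = {0: 0.1, 2: 0.8}
    print(shortlist, stage2_rerank(shortlist, triplet_scores.__getitem__))
```

Only K candidates per query reach the expensive scorer. That is why stage II can afford to process each reference-text-candidate triplet jointly.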


Composed image retrieval aims to find an image that best matches a given multi-modal user query consisting of a reference image and text pair. Existing methods commonly pre-compute image embeddings over the entire corpus and compare these to a reference image embedding modified by the query text at test time. Such a pipeline is very efficient at test time since fast vector distances can be used to evaluate candidates, but modifying the reference image embedding guided only by a short textual description can be difficult, especially independent of potential candidates. An alternative approach is to allow interactions between the query and every possible candidate, i.e., reference-text-candidate triplets, and pick the best from the entire set. Though this approach is more discriminative, for large-scale datasets the computational cost is prohibitive since pre-computation of candidate embeddings is no longer possible. We propose to combine the merits of both schemes using a two-stage model. Our first stage adopts the conventional vector distancing metric and performs a fast pruning among candidates. Meanwhile, our second stage employs a dual-encoder architecture, which effectively attends to the input triplet of reference-text-candidate and re-ranks the candidates. Both stages utilize a vision-and-language pre-trained network, which has proven beneficial for various downstream tasks.

First, clone the repository to a desired location.

Prerequisites

The following commands will create a local anaconda environment with the necessary packages installed.

If you have worked with our previous codebase Bi-BLIP4CIR, you can directly use its environment, as the required packages are identical.

conda create -n cirr_dev -y python=3.8

conda activate cirr_dev

pip install -r requirements.txt

Datasets

Experiments are conducted on two standard datasets, Fashion-IQ and CIRR. Please see their repositories for download instructions.

The downloaded file structure should look like this.

Optional: Set up Comet

We use Comet to log the experiments. If you are unfamiliar with it, see the quick start guide.

You will need to obtain an API key (for --api-key) and create a personal workspace (for --workspace).

If these arguments are not provided, the experiment will be logged only locally.
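The repository's actual argument handling is not reproduced here. The sketch below only illustrates the behavior described above, under one assumption: Comet logging is enabled when both --api-key and --workspace are supplied, and logging stays local otherwise. The helper names are hypothetical. `comet_ml.Experiment` is the experiment class from Comet's Python SDK, imported lazily so that local-only runs do not need the package.

```python
import argparse
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser exposing only the two Comet-related options named in this README.
    parser = argparse.ArgumentParser(description="Comet logging options (sketch)")
    parser.add_argument("--api-key", type=str, default=None, help="Comet API key")
    parser.add_argument("--workspace", type=str, default=None, help="Comet workspace name")
    return parser


def parse(argv: Optional[List[str]] = None) -> argparse.Namespace:
    return build_parser().parse_args(argv)


def logging_mode(args: argparse.Namespace) -> str:
    """Assumption: remote logging only when BOTH credentials are given."""
    return "comet" if args.api_key and args.workspace else "local"


def make_experiment(args: argparse.Namespace):
    """Return a Comet experiment, or None to signal local-only logging."""
    if logging_mode(args) == "local":
        return None
    import comet_ml  # lazy import: not required for local-only runs
    return comet_ml.Experiment(api_key=args.api_key, workspace=args.workspace)
```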

Note

Our code has been tested on torch 1.11.0 and 2.1.1. Presumably, any version in between should also work.

Modify requirements.txt to specify the PyTorch wheel matching your CUDA version.

Note that BLIP requires transformers<=4.25; newer versions will cause errors.
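After installation, a quick sanity check along these lines can flag version conflicts before training starts. This is an illustrative script, not part of the repository. The tested torch range and the transformers ceiling come from the notes above. Treating "<=4.25" as "any 4.25.x patch release" is an assumption; pip itself would read `transformers<=4.25` as 4.25.0 at most.

```python
import re
from importlib import metadata
from typing import List, Tuple

TESTED_TORCH = ((1, 11, 0), (2, 1, 1))  # tested range stated in this README
MAX_TRANSFORMERS = (4, 25)              # assumption: any 4.25.x is acceptable


def release_tuple(version: str, width: int = 3) -> Tuple[int, ...]:
    """Turn e.g. '2.1.1+cu118' into (2, 1, 1), padding missing parts with zeros."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"cannot parse version {version!r}")
    parts = [int(p) for p in match.group(0).split(".")][:width]
    return tuple(parts + [0] * (width - len(parts)))


def check_versions(torch_version: str, transformers_version: str) -> List[str]:
    """Return human-readable warnings; an empty list means no known conflict."""
    issues = []
    low, high = TESTED_TORCH
    if not low <= release_tuple(torch_version) <= high:
        issues.append(f"torch {torch_version} is outside the tested range (1.11.0 to 2.1.1)")
    if release_tuple(transformers_version, width=2) > MAX_TRANSFORMERS:
        issues.append(f"transformers {transformers_version} is newer than 4.25")
    return issues


if __name__ == "__main__":
    try:
        found = {name: metadata.version(name) for name in ("torch", "transformers")}
    except metadata.PackageNotFoundError as err:
        print(f"package not installed: {err}")
    else:
        for msg in check_versions(found["torch"], found["transformers"]) or ["versions look compatible"]:
            print(msg)
```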

The entire training & validation process involves the following steps:

- Stage 1
  - training;
  - validating; extracting and saving the top-K candidate list (termed the "top-k file");
- Stage 2
  - training;
  - validating;
  - (for CIRR) generating test split predictions for online submission.
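Stage II only re-orders the candidates saved in the top-k file, so a target that stage I drops can never be recovered. One way to see this ceiling is to measure how often the ground-truth target appears in each query's stage-I list. The sketch below assumes a hypothetical in-memory layout: a mapping from query id to a ranked list of candidate ids, plus a mapping from query id to its target id. The actual top-k file format in this repository may differ.

```python
from typing import Dict, Hashable, List


def recall_at_k(topk: Dict[Hashable, List[Hashable]],
                targets: Dict[Hashable, Hashable],
                k: int) -> float:
    """Fraction of queries whose target appears among the first k ranked candidates."""
    if not targets:
        raise ValueError("no queries to evaluate")
    hits = sum(1 for q, t in targets.items() if t in topk.get(q, [])[:k])
    return hits / len(targets)


if __name__ == "__main__":
    # Toy stage-I lists (K = 3) for three made-up queries.
    stage1 = {"q0": ["a", "b", "c"], "q1": ["d", "e", "f"], "q2": ["g", "h", "i"]}
    gold = {"q0": "b", "q1": "f", "q2": "z"}  # q2's target was filtered out in stage I
    K = 3
    print(f"stage-I recall@{K} = {recall_at_k(stage1, gold, K):.3f}")
    print(f"stage-I recall@1 = {recall_at_k(stage1, gold, 1):.3f}")
```

Any re-ordering of the same K candidates leaves recall@K unchanged. The stage-I recall@K is therefore also an upper bound on every recall@k (k <= K) that stage II can reach on these lists.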

See

All experiments are conducted on a single NVIDIA A100. In practice, we observe a peak VRAM usage of around 70 GB (CIRR, stage-II training).

Note

To reproduce our reported results, please first download the checkpoints and top-k files and save them to the paths listed in DOWNLOAD.md.

We provide all intermediate checkpoints and top-k files, so you can skip any training step and, for instance, build directly on our Stage 2 model.

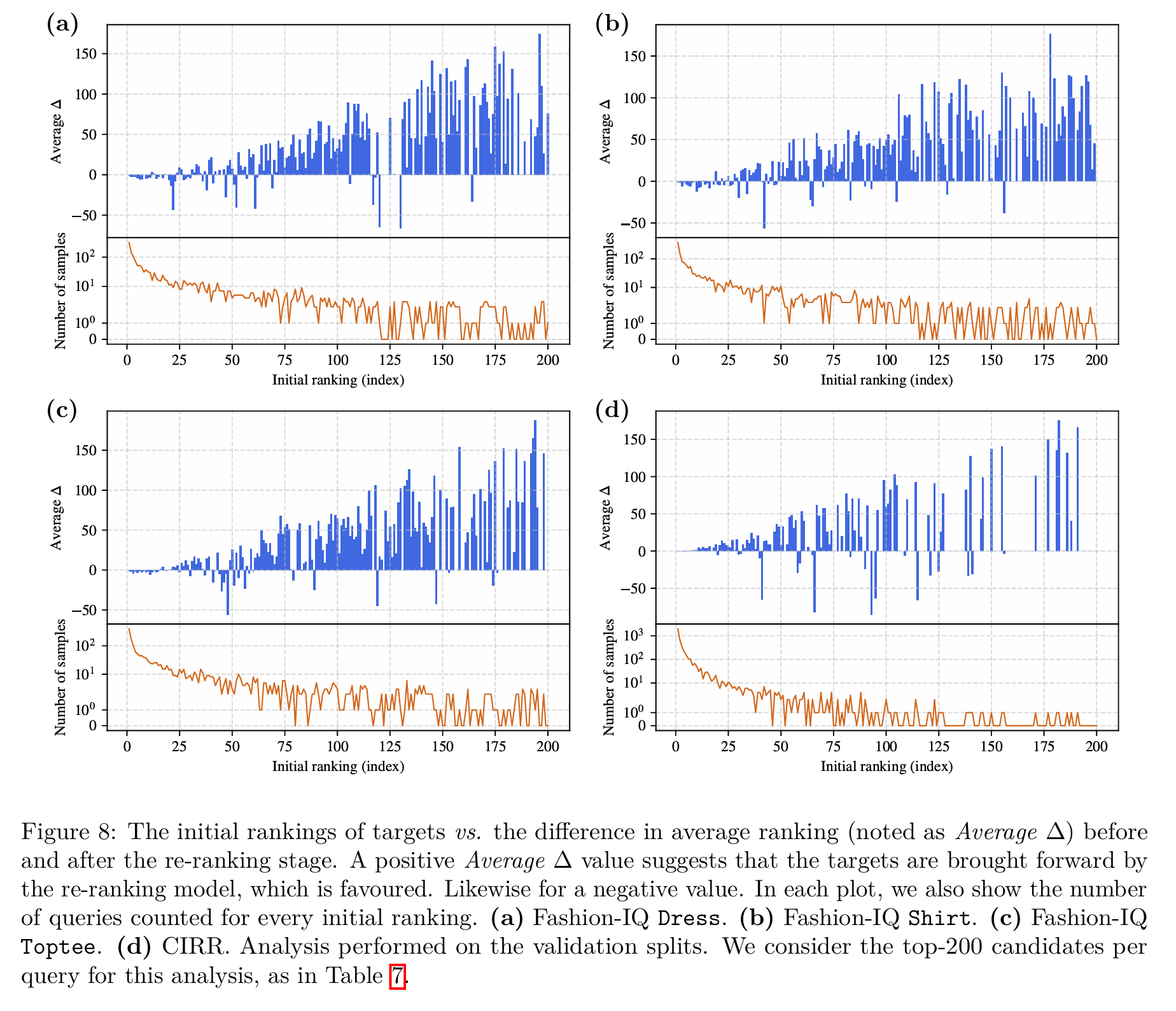

See this Jupyter notebook for recreating Figure 8 (Appendix A.4) of our paper.

This figure shows in detail the strength of the re-ranking stage: the ranks of the positive targets are often moved forward significantly.

Note that the notebook requires the labels produced by the validation functions (compute_fiq_val_metrics and compute_cirr_val_metrics), which we have saved as .pt files and provided in the folder.
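The sketch below illustrates the quantity Figure 8 visualizes. For each query, it takes a boolean row marking where the positive target sits in a ranked candidate list, before and after re-ranking, and reports how many positions the target moved. The row layout and helper names are assumptions for illustration. The labels stored in the provided .pt files may be organized differently, and in practice they would be loaded with torch.load rather than written by hand.

```python
from typing import List, Optional, Sequence


def target_rank(label_row: Sequence[bool]) -> Optional[int]:
    """0-based position of the first positive label, or None if the target is absent."""
    for pos, is_target in enumerate(label_row):
        if is_target:
            return pos
    return None


def rank_shifts(before: List[Sequence[bool]],
                after: List[Sequence[bool]]) -> List[Optional[int]]:
    """Per query: positive = target moved that many places toward the top after re-ranking."""
    shifts = []
    for row_before, row_after in zip(before, after):
        r0, r1 = target_rank(row_before), target_rank(row_after)
        shifts.append(None if r0 is None or r1 is None else r0 - r1)
    return shifts


if __name__ == "__main__":
    # Made-up label rows for three queries (True marks the positive target).
    before = [[False, False, False, True], [True, False, False, False], [False] * 4]
    after = [[True, False, False, False], [False, True, False, False], [False] * 4]
    print(rank_shifts(before, after))
```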

If you find this code useful for your research, please consider citing our work.

@article{liu2024candidate,
  title = {Candidate Set Re-ranking for Composed Image Retrieval with Dual Multi-modal Encoder},
  author = {Zheyuan Liu and Weixuan Sun and Damien Teney and Stephen Gould},
  journal = {Transactions on Machine Learning Research},
  issn = {2835-8856},
  year = {2024},
  url = {https://openreview.net/forum?id=fJAwemcvpL}
}

@InProceedings{Liu_2024_WACV,
  author = {Liu, Zheyuan and Sun, Weixuan and Hong, Yicong and Teney, Damien and Gould, Stephen},
  title = {Bi-Directional Training for Composed Image Retrieval via Text Prompt Learning},
  booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
  month = {January},
  year = {2024},
  pages = {5753-5762}
}

MIT License applied, in line with the licenses of Bi-BLIP4CIR, CLIP4Cir and BLIP.

Our implementation is based on Bi-BLIP4CIR, CLIP4Cir and BLIP.

- Raise a new GitHub issue

- Contact us
