This branch contains the Flash artifact for the evaluations in the SIGCOMM22 paper "Flash: Fast, Consistent Data Plane Verification for Large-Scale Network Settings".

To run the evaluations, the following platform and software suites are required:

- Hardware requirements: A server with 8+ CPU cores and 32GB+ memory is preferred

- Operating system: Ubuntu Server 20.04 (other operating systems are untested, but should also work as long as the software suites below are available)

- JDK 17

- Maven v3.8+

- Git v2.25.1+

- Python v3.8+

- Python3-pip v20.0.0+

- curl v7.68.0+

Note:

- Make sure java and mvn are added to your $PATH environment variable so that the Flash build script can find them.

- The Maven version must be v3.8 or higher; otherwise Maven does not work with JDK 17.

- The build.sh script can help set up the environment above. Refer to build.sh to prepare the environment yourself, or follow the instructions below to use build.sh directly.
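The PATH and Maven version notes above can be checked before starting the build. The following is a minimal sketch, not part of the Flash artifact: the function names are hypothetical, pip3 is assumed to be the command name for Python3-pip, and the version check assumes that `mvn -v` prints a dotted version number such as 3.8.6.

```python
import re
import shutil
import subprocess

# Tools named in the requirements list (pip3 as a command name is an assumption).
REQUIRED_TOOLS = ["java", "mvn", "git", "python3", "pip3", "curl"]


def missing_tools(tools):
    """Return the tools that cannot be found on $PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def parse_version(text):
    """Extract the first dotted version number in text as a tuple of ints."""
    match = re.search(r"(\d+(?:\.\d+)+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def maven_is_recent_enough(version_output, minimum=(3, 8)):
    """Compare the version reported by Maven against the required minimum."""
    return parse_version(version_output) >= minimum


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Not found on $PATH:", ", ".join(missing))
    else:
        result = subprocess.run(["mvn", "-v"], capture_output=True, text=True)
        if maven_is_recent_enough(result.stdout):
            print("All required tools were found")
        else:
            print("Maven is older than v3.8")
```

The check only reports problems; it does not install anything, so it can be run safely before or after build.sh.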

The Flash artifact is publicly available. Clone the repo to any directory to get all sources required for the evaluations.

$ git clone https://github.com/snlab/flash.git

The evaluations for SIGCOMM22 are in the branch sigcomm22-artifact. Switch to the repo folder and check out the branch.

$ cd flash

$ git checkout sigcomm22-artifact

To ease the evaluation process, we provide a build script that builds Flash and prepares the datasets for the evaluations.

$ ./build.sh

The build.sh script installs all necessary libraries, downloads all datasets for the evaluations, and then builds the Java project.

Note:

The build.sh script installs JDK 17 via apt, which will ask for geographic information. Please enter the correct zone information during installation.

The ./evaluator file is the entry point for all evaluations. It takes an argument -e for the evaluation name.
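An interface of this shape, with one required -e option that names the evaluation, can be sketched with Python's argparse module. This is a hypothetical reconstruction for illustration only, not the actual evaluator source; the function name build_parser is an assumption.

```python
import argparse


def build_parser():
    """Build a parser with a single required -e option, as described above."""
    parser = argparse.ArgumentParser(prog="evaluator")
    parser.add_argument(
        "-e",
        dest="evaluation",
        metavar="EVALUATION",
        required=True,
        help="The EVALUATION to be run",
    )
    return parser


# Parse an explicit argument list instead of sys.argv so the sketch is
# safe to run on its own.
args = build_parser().parse_args(["-e", "overall"])
print(f"Would run evaluation: {args.evaluation}")
```

Because -e is marked as required, omitting it makes the parser print the usage line and exit with an error.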

$ ./evaluator -h

usage: evaluator [-h] -e EVALUATION

options:

-h, --help show this help message and exit

-e EVALUATION The EVALUATION to be run

Run the evaluation:

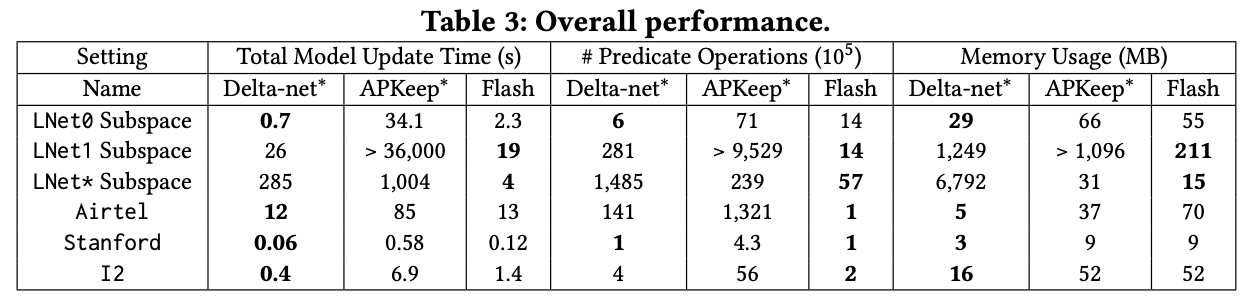

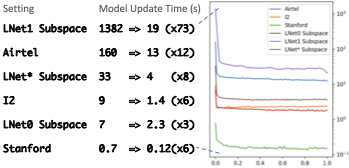

$ ./evaluator -e overall

Expected output: The evaluation generates a file "overall.txt" that lists data corresponding to Table 3 and Figure 6 in the paper. The consoleMsg.log file provides more detailed information.

$ ./evaluator -e deadSettings

Expected output: It provides an interface to try the settings (in Table 3 and Figure 6) that are not solved within 1 hour.

Run the evaluation:

Execute the following command to run the evaluation:

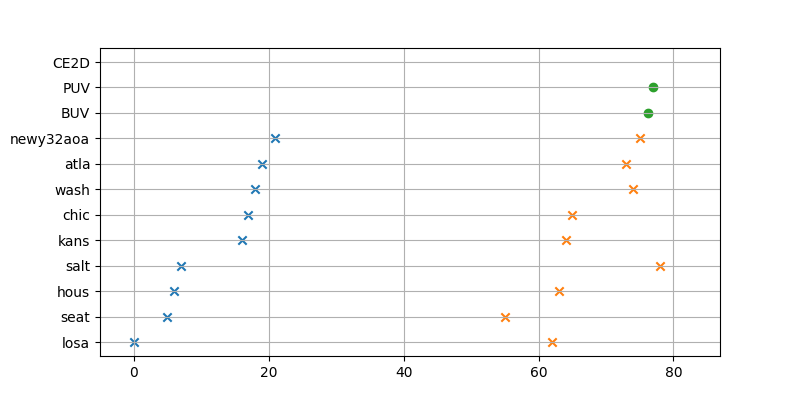

$ ./evaluator -e I2CE2D

Expected output:

The evaluation generates a scatter figure in output/I2CE2D.png (Figure 7(a) in the paper).

Run the evaluation:

Execute the following command to run the evaluation:

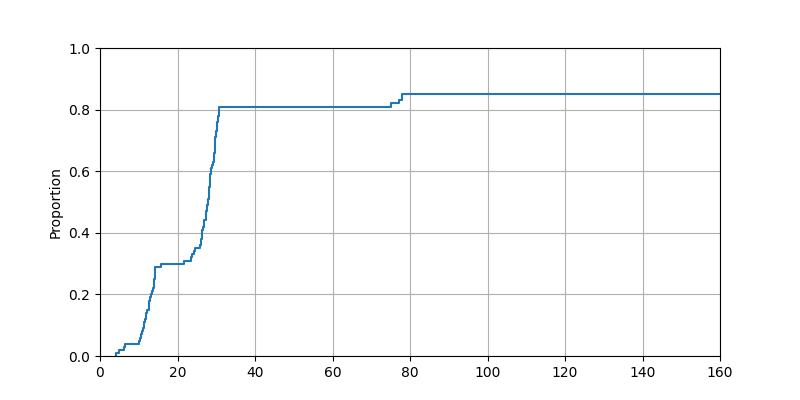

$ ./evaluator -e I2EarlyDetection

Expected output:

The evaluation generates a CDF figure in output/I2EarlyDetection.png (Figure 7(b) in the paper).

The figure may vary due to randomness.

To ease the evaluation process, we use a snapshot of the update trace, so the CDF line differs from the one in the paper, but the results still conform with the paper.
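For readers comparing the generated CDF line with the one in the paper, the following minimal sketch shows how an empirical CDF is computed from a list of measurements. It is not the plotting code in the artifact, and the sample values are invented for illustration; they are not Flash results.

```python
def empirical_cdf(samples):
    """Return (value, fraction of samples <= value) pairs in ascending order."""
    ordered = sorted(samples)
    total = len(ordered)
    points = []
    for index, value in enumerate(ordered, start=1):
        if points and points[-1][0] == value:
            # Repeated value: keep one point with the larger fraction.
            points[-1] = (value, index / total)
        else:
            points.append((value, index / total))
    return points


# Hypothetical measurements, for illustration only.
measurements = [12.0, 3.5, 7.2, 3.5, 20.1]
for value, fraction in empirical_cdf(measurements):
    print(f"{value:6.1f} -> {fraction:.2f}")
```

Each point says what fraction of the samples is at or below a given value, so a different input trace shifts the line without changing how it is read.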

Run the evaluation:

Execute the following command to run the evaluation:

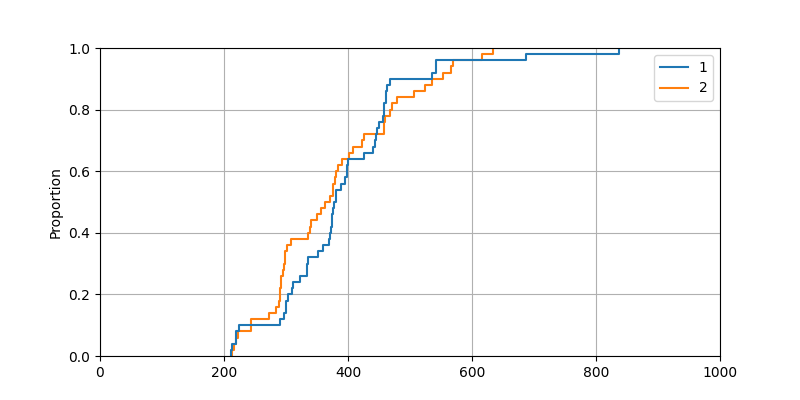

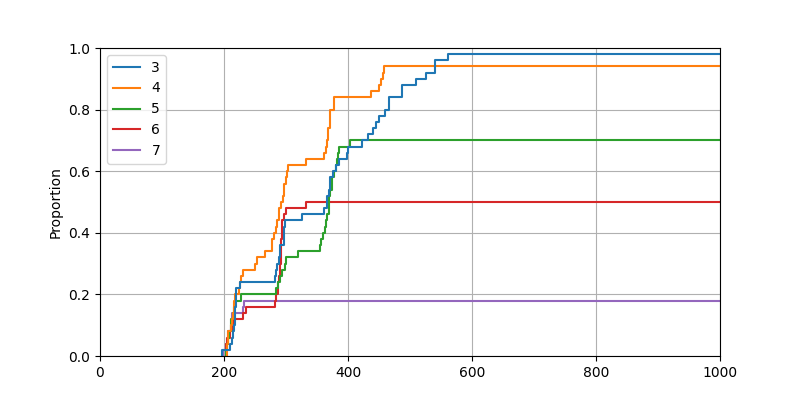

$ ./evaluator -e I2LongTail

Expected output:

The evaluation generates CDF figures in output/I2LongTail{,1}.png (Figure 7(c/d) in the paper).

The figure may vary due to randomness.

Run the evaluation:

Execute the following command to run the evaluation:

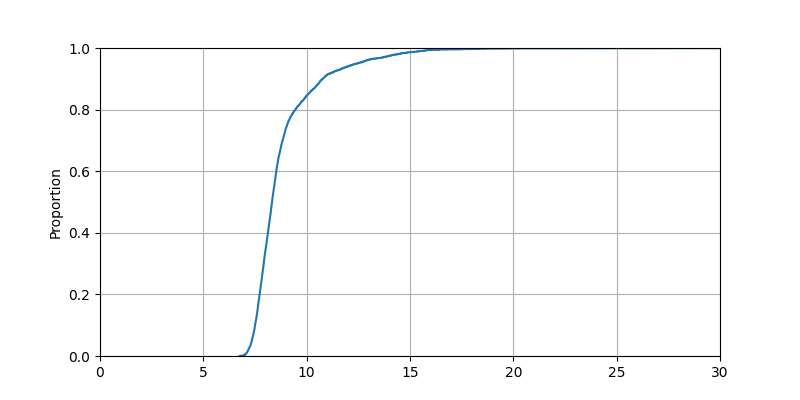

$ ./evaluator -e LNet1AllPair

Expected output:

The evaluation generates a CDF figure in output/LNet1AllPair.png (Figure 8 in the paper).

Note: The CDF line in the figure above is smoother than the one in Figure 8 of the paper because of code cleanup. We'll update Figure 8 to the newer result.

$ ./evaluator -e batchSize

$ python3 py/BatchSize.py ./

Expected output:

The first command generates a few log files, e.g., "LNet0bPuUs.txt". The second command then draws the figure output/batchSize.png (Figure 9 in the paper).

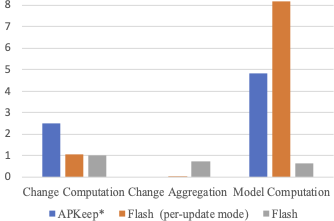

$ ./evaluator -e breakdown

Expected output: The consoleMsg.log file provides the time breakdown (Figure 10 in the paper).
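The individual commands in this README can also be run back to back. The sketch below is a hypothetical driver, not part of the artifact: the function names are assumptions, it must be started from the repo folder, and it defaults to a dry run that only prints the commands. deadSettings is left out because it is described as an interface for trying settings, which suggests manual interaction (an assumption).

```python
import subprocess

# Evaluation names taken from the commands in this README.
# deadSettings is omitted on the assumption that it needs manual input.
EVALUATIONS = [
    "overall",
    "I2CE2D",
    "I2EarlyDetection",
    "I2LongTail",
    "LNet1AllPair",
    "batchSize",
    "breakdown",
]


def commands_for(name):
    """Return the commands for one evaluation, in the order they must run."""
    commands = [["./evaluator", "-e", name]]
    if name == "batchSize":
        # batchSize needs a second step that draws the figure from the logs.
        commands.append(["python3", "py/BatchSize.py", "./"])
    return commands


def run_evaluations(names, dry_run=True):
    """Print (dry run) or execute every command; stop at the first failure."""
    executed = []
    for name in names:
        for command in commands_for(name):
            if dry_run:
                print("$", " ".join(command))
            else:
                subprocess.run(command, check=True)
            executed.append(command)
    return executed


# Dry run: prints the commands without executing them.
run_evaluations(EVALUATIONS)
```

Calling run_evaluations(EVALUATIONS, dry_run=False) executes the commands; check=True makes the driver stop as soon as one command exits with a non-zero status.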