This workshop is scheduled for July 9th, 2024.


Join us for an engaging Spark workshop tailored for beginners, where we'll dive into both theoretical concepts and practical application. Delve into the fundamental principles of Spark, learning about its architecture, data processing capabilities, and use cases. Then, roll up your sleeves for hands-on practice, where you'll apply what you've learned in real-world scenarios. By the end of the workshop, you'll have a basic understanding of Spark and the confidence to start building your own data processing pipelines.

This section lists the primary frameworks and libraries used in the workshop.

Follow these steps to set up your local environment before the workshop.

Please install the following software in advance:

- Git: https://git-scm.com/book/en/v2/Getting-Started-Installing-Git

- Docker Desktop: https://www.docker.com/products/docker-desktop/ (Please refer to the instructions below based on your operating system.)

- (Optional) IDE (e.g., VS Code or IntelliJ)

On Windows, Docker Desktop can use either a WSL 2 or a Hyper-V backend. Both options are described in Step 1 below.

- Step 1: Choose one of the options described below and install all required Windows features.

(Recommended) Running Docker Desktop on Windows with WSL

Open Windows PowerShell as Administrator and run the following command:

wsl --install

The first time you launch a newly installed Linux distribution, a console window opens and asks you to wait while files are decompressed and stored on your machine. Once WSL is installed, you need to create a user account and password for the new Linux distribution.

⚠️ Occasionally, the Ubuntu installation may terminate unexpectedly with an error code (e.g., Error code: Wsl/InstallDistro/E_UNEXPECTED). In such cases, you may need to enable hardware virtualization (Intel VT-x or AMD-V) in your BIOS/UEFI settings, or enable the Virtual Machine Platform feature under Windows Features.

Running Docker Desktop on Windows with the Hyper-V backend

Open Windows PowerShell as Administrator and run the following commands:

Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Hyper-V -All
Enable-WindowsOptionalFeature -Online -FeatureName Containers -All
Enable-WindowsOptionalFeature -Online -FeatureName VirtualMachinePlatform -All

- Step 2: Download the Docker Desktop installer from the Docker website and follow the installation instructions.

- Step 3: After installation, open Docker Desktop.

For other operating systems:

- Download the Docker Desktop installer from the Docker website and follow the installation instructions.

- After installation, open Docker Desktop.
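Once Git and Docker Desktop are installed, a quick check can confirm that both are available before the workshop starts. The sketch below is a hypothetical helper (find_tools and docker_engine_reachable are names introduced here, not part of the workshop repository). It assumes the git and docker commands are on your PATH, which the installers normally arrange, and treats a successful docker info call as a sign that Docker Desktop is running.

```python
import shutil
import subprocess


def find_tools(tools=("git", "docker")):
    """Map each tool name to its executable path on PATH, or None if missing."""
    return {tool: shutil.which(tool) for tool in tools}


def docker_engine_reachable(timeout=15):
    """Return True if `docker info` exits with status 0.

    A non-zero status usually means the Docker engine is not reachable,
    e.g. because Docker Desktop has not been started yet.
    """
    try:
        result = subprocess.run(
            ["docker", "info"], capture_output=True, text=True,
            errors="replace", timeout=timeout,
        )
    except (OSError, subprocess.TimeoutExpired):
        # OSError: docker is not installed; TimeoutExpired: no answer in time.
        return False
    return result.returncode == 0


if __name__ == "__main__":
    for tool, path in find_tools().items():
        print(f"{tool}: {path or 'NOT FOUND - please install it first'}")
    print("Docker engine reachable:", docker_engine_reachable())
```

If git or docker is reported as NOT FOUND, revisit the installation steps above; if Docker is found but the engine is not reachable, start Docker Desktop and run the check again.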

Follow the steps below to set up your local programming environment. They ensure that all the tools and configurations needed for the workshop are in place.

- Create a free GitHub Personal Access Token (PAT) as described at https://docs.github.com/PAT and use it to authenticate Git locally.

- Clone the workshop repository:

git clone https://github.com/CorrelAid/spark_workshop.git

- Open a Bash terminal and change to the root directory of the cloned repository. From there, install and activate the PySpark environment:

cd spark_workshop
sh run_setup.sh

- Open the environment in a web browser, replacing <token> with your token:

http://localhost:10001/?token=<token>

-

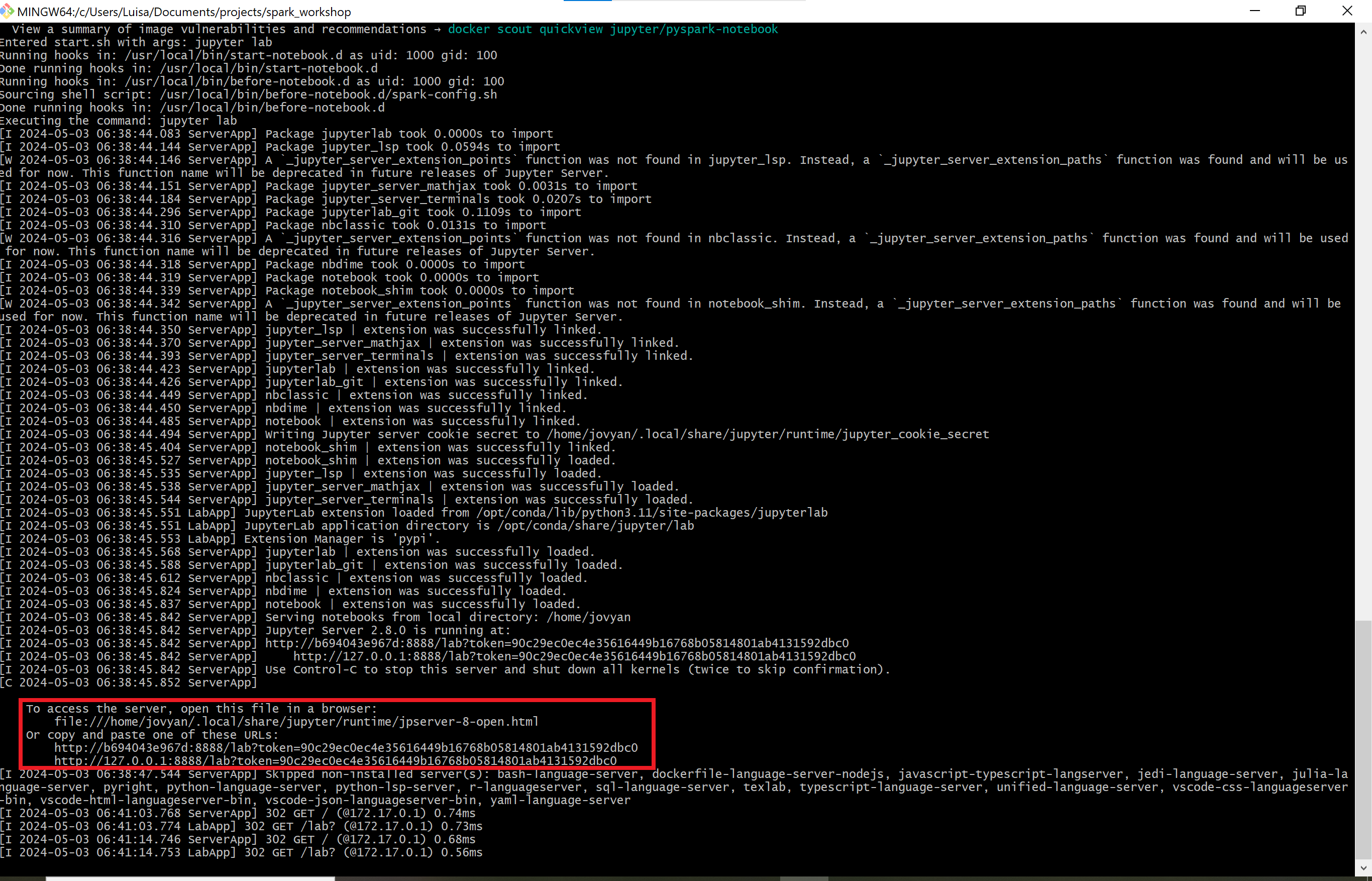

Enter the token as the password. You can find the token displayed in the terminal, as illustrated in the image below.:

If you are not sure what the token is you can open another bash terminal and execute:

docker logs pyspark_workshop | grep -o 'token=[^ ]*'

This command will display all logs from your recently created Docker container containing the token. Simply look for the section where "token=" is mentioned.

Sample Output: Screenshot of Terminal Display Post-Execution of run_setup.sh - Featuring Token and Port Information
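The token lookup and the browser step can also be scripted. The sketch below is a hypothetical helper (extract_tokens, build_workshop_url, port_is_open and read_container_logs are names introduced here, not part of the repository). It assumes the container is named pyspark_workshop and the environment is served on localhost port 10001, as in the commands above, and it applies the same pattern as grep -o 'token=[^ ]*' line by line.

```python
import re
import socket
import subprocess
from urllib.parse import urlencode

# Same pattern as grep -o 'token=[^ ]*'. It is applied line by line because
# grep matches within single lines, while [^ ] in Python would also match "\n".
TOKEN_PATTERN = re.compile(r"token=([^ ]*)")


def extract_tokens(log_text):
    """Return the value of every token=... match in log_text, in order."""
    tokens = []
    for line in log_text.splitlines():
        tokens.extend(TOKEN_PATTERN.findall(line))
    return tokens


def build_workshop_url(token, host="localhost", port=10001):
    """Build a URL of the form http://localhost:10001/?token=<token>."""
    return f"http://{host}:{port}/?{urlencode({'token': token})}"


def port_is_open(host="localhost", port=10001, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host not resolvable
        return False


def read_container_logs(container="pyspark_workshop", timeout=30):
    """Return the container's stdout followed by its stderr (not interleaved)."""
    result = subprocess.run(
        ["docker", "logs", container],
        capture_output=True, text=True, errors="replace",
        timeout=timeout, check=True,
    )
    return result.stdout + result.stderr


if __name__ == "__main__":
    if not port_is_open():
        print("Nothing is listening on localhost:10001; check the output of run_setup.sh.")
    try:
        logs = read_container_logs()
    except (OSError, subprocess.SubprocessError) as err:
        # OSError: docker not installed; SubprocessError: non-zero exit or timeout.
        print(f"Could not read the container logs: {err}")
    else:
        tokens = extract_tokens(logs)
        if tokens:
            # If several matches exist (e.g. after a restart), use the latest one.
            print(build_workshop_url(tokens[-1]))
        else:
            print("No token found in the container logs.")
```

Reading stdout and stderr separately keeps the helper simple; the trade-off is that lines from the two streams are not interleaved in their original order, which does not matter for finding the token.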

The following links may help you with the workshop tasks.

- PySpark Documentation: DataFrame Functions

- PySpark by Examples

- PySpark: The 9 most useful functions to know

- PySpark YouTube Tutorial: DataFrame Functions

When all else fails, just ask: either directly to us or to ChatGPT. 😉

Distributed under the MIT License. See LICENSE.txt for more information.

The data originates from a freely available dataset on Kaggle: https://kaggle/grocery-dataset

Pia Baronetzky

Daniel Manny

Jie Bao - @jbao

Luisa-Sophie Gloger - @LAG1819

Project Link: https://github.com/CorrelAid/spark_workshop