Model parallelism comes in two types: inter-layer and intra-layer. We denote inter-layer model parallelism as MP and intra-layer model parallelism as TP (tensor parallelism).

Some researchers also call TP parameter parallelism or intra-layer model parallelism.

Popular intra-layer model parallelism methods include 2D, 2.5D, and 3D tensor parallelism, as well as Megatron (1D). So far only a few works cover 2D, 2.5D, and 3D (only Colossal-AI).
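To make the MP/TP distinction concrete, the sketch below simulates Megatron-style 1D TP for a two-layer MLP in plain Python, with no real devices or communication. It assumes the usual Megatron layout: the first weight matrix is split by columns, the second by rows, and the per-device partial outputs are summed, where the sum stands in for an all-reduce. Inter-layer MP would instead place all of `w1` on one device and all of `w2` on another. All function names and numbers are illustrative, not taken from any of the listed frameworks.

```python
# Minimal sketch of Megatron-style 1D tensor parallelism (TP) for a 2-layer MLP.
# "Devices" are simulated; the final summation stands in for an all-reduce.

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def relu(x):
    return [[max(0.0, v) for v in row] for row in x]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def split_columns(w, parts):
    """Split a weight matrix column-wise into `parts` equal shards."""
    step = len(w[0]) // parts
    return [[row[p * step:(p + 1) * step] for row in w] for p in range(parts)]

def split_rows(w, parts):
    """Split a weight matrix row-wise into `parts` equal shards."""
    step = len(w) // parts
    return [w[p * step:(p + 1) * step] for p in range(parts)]

def mlp_reference(x, w1, w2):
    """Unsharded forward pass: relu(x @ w1) @ w2."""
    return matmul(relu(matmul(x, w1)), w2)

def mlp_tensor_parallel(x, w1, w2, tp):
    """Column-parallel first layer, row-parallel second layer.

    ReLU is element-wise, so each device applies it to its own column shard of
    the hidden activation without communicating. Only the final sum needs data
    from every device.
    """
    w1_shards = split_columns(w1, tp)
    w2_shards = split_rows(w2, tp)
    partials = [matmul(relu(matmul(x, w1_shards[d])), w2_shards[d]) for d in range(tp)]
    out = partials[0]
    for p in partials[1:]:
        out = add(out, p)  # stands in for the all-reduce
    return out

x = [[1.0, -2.0]]
w1 = [[1.0, 0.5, -1.0, 2.0], [0.0, 1.0, 1.0, -0.5]]
w2 = [[1.0], [2.0], [-1.0], [0.5]]
print(mlp_reference(x, w1, w2), mlp_tensor_parallel(x, w1, w2, 2))
```

Both calls produce `[[2.5]]`: sharding changes where the work happens, not the result.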

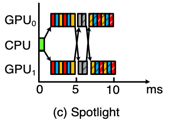

PP and MP partition the model in similar ways but execute differently. Pipeline parallelism (PP) falls into two main families: the PipeDream family and the GPipe family.
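A minimal sketch of how the two families order work on each pipeline stage, assuming every micro-batch costs the same on every stage and ignoring communication. `gpipe_schedule` runs all forwards before any backward (fill-drain); `one_f_one_b_schedule` uses the one-forward-one-backward (1F1B) order associated with the PipeDream family, in its synchronous, flushed form, so the weight stashing of asynchronous PipeDream is not modeled. The function names are hypothetical, and the lists show per-stage op order only, not timing.

```python
def gpipe_schedule(num_stages, num_micro):
    """Fill-drain order per stage: every forward, then every backward.
    (Backward order within the drain phase is simplified to increasing index.)"""
    return [[f"F{m}" for m in range(num_micro)] + [f"B{m}" for m in range(num_micro)]
            for _ in range(num_stages)]

def one_f_one_b_schedule(num_stages, num_micro):
    """1F1B order per stage: warm-up forwards, alternating F/B, then cool-down backwards."""
    schedule = []
    for s in range(num_stages):
        warmup = min(num_stages - s - 1, num_micro)  # later stages warm up less
        ops = [f"F{m}" for m in range(warmup)]
        next_f, next_b = warmup, 0
        for _ in range(num_micro - warmup):  # steady state: one forward, one backward
            ops.append(f"F{next_f}")
            next_f += 1
            ops.append(f"B{next_b}")
            next_b += 1
        while next_b < num_micro:  # cool-down: drain remaining backwards
            ops.append(f"B{next_b}")
            next_b += 1
        schedule.append(ops)
    return schedule

def peak_in_flight(ops):
    """Max number of micro-batches whose activations are held at once on a stage."""
    live = peak = 0
    for op in ops:
        live += 1 if op.startswith("F") else -1
        peak = max(peak, live)
    return peak

def bubble_fraction(num_stages, num_micro):
    """Idle share of total time for a flushed pipeline with equal stage costs."""
    return (num_stages - 1) / (num_micro + num_stages - 1)

print(gpipe_schedule(2, 3)[0])        # stage 0 under fill-drain
print(one_f_one_b_schedule(2, 3)[0])  # stage 0 under 1F1B
```

Under these assumptions both schedules have the same bubble fraction, (p-1)/(m+p-1) for p stages and m micro-batches, but 1F1B holds activations for at most min(p, m) micro-batches per stage instead of m, which is why the PipeDream family is more memory-friendly.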

I classify parallelism methods according to how they partition the model.

| Name | Description | Organization or author | Paper | Framework | Year | Auto Methods |

|---|---|---|---|---|---|---|

| ColocRL(REINFORCE) | Use reinforce learning to discover model partitions | Google Brain | mlr.press | Tensorflow | PMLR 70, 2017 | Reinforce |

| A hierarchical model for device placement (HDP) | Use Scotch to do graph partitioning | link | Tensorflow | ICLR 2018 | Reinforce LSTM | |

| GPipe | No implementation, see torchgpipe | arxiv | None | 2018 on arxiv, NIPS2019 | averagely partition or manually | |

| torchgpipe | An A GPipe implementation in PyTorch | UNIST | arxiv | pytorch | 2020 on arxiv | balance stages by profiling |

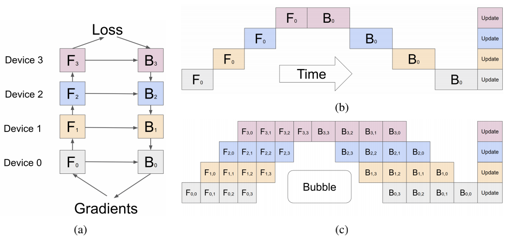

| GDP | A general deep RL method for automating device placements on arbitrary graphs. Orthogonal to DP,MP,PP | arxiv | Unknown | 2019 on arxiv | Reinforce Transformer | |

| Pesto | partition model based on inter-layer model parallelism | Stony Brook University | acm | Tensorflow | Middleware '21 | integer linear program |

| vPipe | A pipeline only system designed for NAS network. Complementary to hybrid parallelism | HKU | ieee | PyTorch | TPDS vol.33 no.3 2022 | Swap, Recompute, Partition(SRP) planner. P: Kernighan-Lin algorithm |
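Several RL-based entries (ColocRL, HDP, GDP) train a policy that maps ops to devices and derive the reward from measured step time. The following is a minimal REINFORCE sketch of that idea, not any paper's actual model: the policy is one independent softmax per op rather than an LSTM or Transformer, and `step_time` is a made-up throughput-style proxy (busiest device's load plus a penalty per cross-device edge) standing in for real measurements. `OP_COST`, `COMM_COST`, the learning rate, and the baseline decay are all hypothetical.

```python
import math
import random

# Hypothetical toy workload: a chain of ops with compute costs and a fixed
# penalty for every chain edge whose endpoints sit on different devices.
OP_COST = [4.0, 3.0, 3.0, 2.0, 2.0, 2.0]
COMM_COST = 1.0
NUM_DEVICES = 2

def step_time(placement):
    """Toy cost proxy: busiest device's compute load plus cross-device edges."""
    load = [0.0] * NUM_DEVICES
    for op, dev in enumerate(placement):
        load[dev] += OP_COST[op]
    cuts = sum(1 for a, b in zip(placement, placement[1:]) if a != b)
    return max(load) + COMM_COST * cuts

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_placement(iterations=2000, lr=0.05, seed=0):
    rng = random.Random(seed)
    logits = [[0.0] * NUM_DEVICES for _ in OP_COST]  # one categorical per op
    baseline = None
    for _ in range(iterations):
        probs = [softmax(row) for row in logits]
        placement = [rng.choices(range(NUM_DEVICES), weights=p)[0] for p in probs]
        reward = -step_time(placement)  # faster placement => higher reward
        # Moving-average baseline reduces the variance of the gradient estimate.
        baseline = reward if baseline is None else 0.9 * baseline + 0.1 * reward
        advantage = reward - baseline
        # d log pi(a) / d logit_j = 1[j == a] - p_j for a softmax policy.
        for op, dev in enumerate(placement):
            for j in range(NUM_DEVICES):
                grad = (1.0 if j == dev else 0.0) - probs[op][j]
                logits[op][j] += lr * advantage * grad
    # Greedy decode: most likely device for each op under the learned policy.
    return [max(range(NUM_DEVICES), key=lambda j: row[j]) for row in logits]

placement = reinforce_placement()
print(placement, step_time(placement))
```

Real systems replace `step_time` with runs on hardware (or a simulator), which is why these methods are expensive to train.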

| Name | Description | Organization or author | Paper | Framework | Year | Auto Methods |

|---|---|---|---|---|---|---|

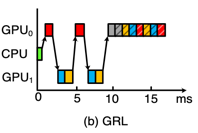

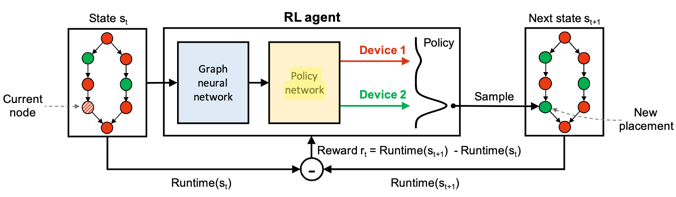

| Spotlight | Model device placement as a Markov decision process (MDP). | University of Toronto | mlr.press | Unknown | PMLR 80, 2018 | Reinforce LSTM |

| Placeto | Looks like Spotlight with MDP, but have different Policy. | MIT | nips | Tensorflow | NIPS 2019 | Reinforce |

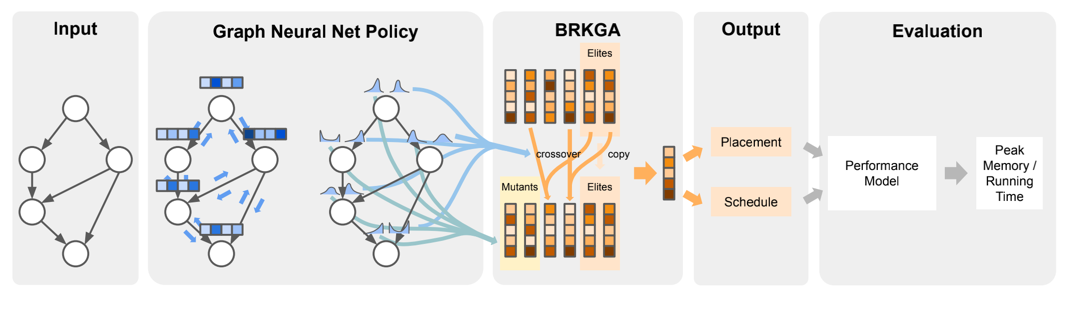

| REGAL | a deep reinforcement learning approach to minimizing the execution cost of neural network computation graphs in an optimizing compiler. | openreview | Unknown | ICLR 2020 | RL with Genetic Algorithm | |

| PipeDream | This repository contains the source code implementation of PipeDream and PipeDream-2BW | Microsoft Fiddle | arxiv, | PyTorch | 2018 on arxiv, SOSP 2019 | Dynamic Programming with Profile |

| PipeDream-2BW | See above one | Microsoft | arxiv, mlr.press | PyTorch | PMLR 139, 2021 | Dynamic Programming with Profile |

| DNN-partitioning | published at NeurIPS 2020. | Microsoft Fiddle | arxiv | proof-of-concept implementation | NIPS 2020 | Dynamic Programming and Integer Programming |

| HetPipe | Enabling Large DNN Training on (Whimpy) Heterogeneous GPU Clusters through Integration of Pipelined Model Parallelism and Data Parallelism | UNIST | usenix | PyTorch (not open sourced) | USENIX 2020 | use CPLEX to solve linear programming problem |

| DAPPLE | An Efficient Pipelined Data Parallel Approach for Training Large Model. Succeed from GPipe | Alibaba | arxiv | DAPPLE | 2020 on arxiv; PPoPP 21 | Dynamic Programming |

| PipeTransformer | Automated Elastic Pipelining for Distributed Training of Transformers | University of South California | arxiv | PyTorch | ICML 21 | Dynamic Programming |

| Chimera | Efficiently training large-scale neural networks with bidirectional pipelines | Department of Computer Science, ETH Zurich Switzerland | dl.acm | PyTorch | SC 2021 | Performance model with brute force |

| TAPP | Use a Seq2Seq based on attention mechanism to predict stage for layers. | Hohai University | mdpi | Unknown | Appl.sci. 2021, 11 | Reinforce Seq2Seq based on attention |

| RaNNC | RaNNC is an automatic parallelization middleware used to train very large-scale neural networks. | DIRECT and University of Tokyo | arxiv | PyTorch | IPDPS 2021 | dynamic programming |

| HeterPS | distributed deep learning with RL based scheduling in heterogeneous environment. | Baidu | arxiv | Paddle | 2021 | Reinforce learning based |

| FTPipe | FTPipe can automatically transform sequential implementation into a multi-GPU one. | Technion-Israel Institute of Technology | usenix | PyTorch | 2021 | multiprocessor scheduling problem with profiling. |
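Many pipeline entries above list dynamic programming over profiled costs. The simplest form of that idea is sketched below under heavy assumptions: layers form a chain, each layer has a known profiled cost, communication and memory are ignored, and each stage gets one device. It splits the chain into contiguous stages so that the slowest stage is as fast as possible. Planners such as PipeDream's also model communication, stage replication, and memory, so treat this only as an illustration of the recurrence; `partition_layers` is a hypothetical name.

```python
from functools import lru_cache

def partition_layers(layer_costs, num_stages):
    """Split a chain of layers into contiguous stages minimizing the slowest stage.

    best(i, k) = min over j of max(best(j, k - 1), cost of layers j..i-1),
    i.e. the first j layers go into k - 1 stages and the rest form stage k.
    Returns (bottleneck_cost, list of layer indices per stage).
    """
    n = len(layer_costs)
    if not 1 <= num_stages <= n:
        raise ValueError("need 1 <= num_stages <= number of layers")
    prefix = [0.0]
    for c in layer_costs:
        prefix.append(prefix[-1] + c)  # prefix[i] = cost of the first i layers

    @lru_cache(maxsize=None)
    def best(i, k):
        """(bottleneck cost, stage start indices) for the first i layers in k stages."""
        if k == 1:
            return prefix[i], (0,)
        result = (float("inf"), ())
        for j in range(k - 1, i):  # every stage keeps at least one layer
            sub_cost, sub_starts = best(j, k - 1)
            cost = max(sub_cost, prefix[i] - prefix[j])
            if cost < result[0]:
                result = (cost, sub_starts + (j,))
        return result

    cost, starts = best(n, num_stages)
    bounds = list(starts) + [n]
    stages = [list(range(bounds[s], bounds[s + 1])) for s in range(num_stages)]
    return cost, stages

print(partition_layers([4.0, 3.0, 3.0, 2.0, 2.0, 2.0], 3))
```

For the profiled costs above it picks stages `[[0], [1, 2], [3, 4, 5]]` with a bottleneck of 6.0; the memoized recurrence runs in O(k·n²) time instead of enumerating every split.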

| Name | Description | Organization or author | Paper | Framework | Year | Auto Methods |
|---|---|---|---|---|---|---|
| OptCNN | Auto-parallelism method for CNNs | Zhihao Jia | mlr.press | FlexFlow | PMLR 80, 2018 | Dynamic-programming-based graph search algorithm |
| FlexFlow | A deep learning framework that accelerates distributed DNN training by automatically searching for efficient parallelization strategies | Zhihao Jia | stanford | FlexFlow, compatible with PyTorch and Keras | SysML 2019 | MCMC |
| Tofu | Supporting Very Large Models using Automatic Dataflow Graph Partitioning | New York University | dl.acm | Not open-sourced | EuroSys 2019 | Same as OptCNN |
| AccPar | Tensor partitioning for heterogeneous deep learning accelerators | Linghao Song from USC | usc.edu | Needs manual deployment | 2019 on arxiv, HPCA 2020 | Dynamic programming |
| TensorOpt | Exploring the Tradeoffs in Distributed DNN Training with Auto-Parallelism | CUHK & Huawei | arxiv | MindSpore | 2020 on arxiv | Dynamic-programming-based graph search algorithm |
| ROC | Another paper from Zhihao Jia, designed for GNNs | Zhihao Jia | mlsys | On top of FlexFlow | MLSys 2020 | Uses a novel online linear regression model to achieve efficient graph partitioning, and a dynamic programming algorithm to minimize data transfer cost |
| Double Recursive | A double recursive algorithm to search for parallelization strategies | Huawei | link | MindSpore | Euro-Par 2021 | Double recursive |
| PaSE | Uses a dynamic-programming-based approach to find an efficient strategy within a reasonable time | Baidu Research | ieee | Prototype | IPDPS 2021 | Dynamic programming |
| P^2 | A syntax-guided program synthesis framework that decomposes reductions over one or more parallelism axes into sequences of collectives in a hierarchy- and mapping-aware way | University of Cambridge & DeepMind | arxiv | Simulation experiment | 2021 on arxiv, MLSys 2022 | Synthesis tool with simulation |
| AutoMap | Uses search and learning to find Megatron-like strategies | DeepMind | arxiv | JAX Python API, XLA backend | 2021 on arxiv, NIPS 2021 | Search: Monte Carlo Tree Search; Learn: Interaction Network |
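FlexFlow's search method is listed as MCMC. Below is a toy Metropolis-style search in that spirit: it changes one op's strategy at a time, always accepts proposals that are no worse, and accepts regressions with probability exp(-beta * delta). The cost table `OP_STRATEGY_COST`, `RESHARD_COST`, and `beta` are invented for illustration; FlexFlow itself scores candidates with an execution simulator over a much richer strategy space.

```python
import math
import random

# Hypothetical per-op cost of each candidate strategy (say 0 = data parallel,
# 1 = tensor parallel). These numbers are made up for illustration.
OP_STRATEGY_COST = [
    [5.0, 3.0],
    [2.0, 4.0],
    [6.0, 2.5],
    [1.0, 1.5],
]
RESHARD_COST = 1.0  # penalty when neighbouring ops use different strategies

def total_cost(strategy):
    compute = sum(OP_STRATEGY_COST[op][s] for op, s in enumerate(strategy))
    reshards = sum(1 for a, b in zip(strategy, strategy[1:]) if a != b)
    return compute + RESHARD_COST * reshards

def mcmc_search(initial, iterations=500, beta=2.0, seed=0):
    rng = random.Random(seed)
    current = list(initial)
    current_cost = total_cost(current)
    best, best_cost = list(current), current_cost
    for _ in range(iterations):
        # Symmetric proposal: re-draw the strategy of one randomly chosen op.
        proposal = list(current)
        op = rng.randrange(len(proposal))
        proposal[op] = rng.randrange(len(OP_STRATEGY_COST[op]))
        proposal_cost = total_cost(proposal)
        delta = proposal_cost - current_cost
        # Metropolis acceptance lets the walk escape local minima.
        if delta <= 0 or rng.random() < math.exp(-beta * delta):
            current, current_cost = proposal, proposal_cost
            if current_cost < best_cost:  # remember the best strategy ever visited
                best, best_cost = list(current), current_cost
    return best, best_cost

print(mcmc_search([0, 0, 0, 0]))
```

A larger `beta` makes the walk greedier; a smaller one explores more, at the cost of spending time in worse regions.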

| Name | Description | Organization or author | Paper | Framework | Year | Auto Methods |
|---|---|---|---|---|---|---|
| Auto-MAP | Works on HLO IR. Uses linkage groups to prune the search space and DQN-based RL to search DP, MP, and PP strategies | Alibaba | arxiv | RAINBOW DQN | 2020 | Reinforcement learning |
| Piper | Multidimensional planner for DNN parallelization. The code package contains algorithms (proof-of-concept implementation) and input files (profiled DNN models / workloads) from the paper published at NeurIPS 2021. An extension of DNN-partitioning | Microsoft Fiddle | link | Proof-of-concept implementation | NIPS 2021 | Two-level dynamic programming |
| GSPMD | A system that uses simple tensor sharding annotations to achieve different parallelism paradigms in a unified way | | arxiv | TensorFlow XLA | 2021 | Sharding propagation |
| DistIR | Horizontal TP. An intermediate representation and simulator for efficient neural network distribution | Stanford University & Microsoft Fiddle | arxiv | PyTorch | MLSys 2021 | Grid-search simulator |
| Neo | A software-hardware co-designed system for high-performance distributed training of large-scale DLRMs | | arxiv | PyTorch | 2021 | 1. Greedy 2. Karmarkar-Karp algorithm |
| Adaptive Paddle | Elastic, fault-tolerant training with cost-model-based sharding propagation | Baidu | arxiv | Paddle | 2021 | Cost-model based; details not given |
| Alpa | Automating Inter- and Intra-Operator Parallelism for Distributed Deep Learning | UC Berkeley, Google, etc. | arxiv | JAX, XLA | 2022 | Integer linear programming for intra-operator, dynamic programming for inter-operator |
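Neo lists the Karmarkar-Karp algorithm among its methods. For intuition, here is the two-way largest differencing method in plain Python, assuming the goal is to split a list of per-item costs (for example, embedding-table costs; the workload is an assumption) across two devices as evenly as possible. Neo shards across many GPUs, so this two-way version only illustrates the core differencing step, and `karmarkar_karp` is a hypothetical name.

```python
import heapq
import itertools

def karmarkar_karp(costs):
    """Two-way largest differencing method (Karmarkar-Karp).

    Each heap entry represents a partial partition (subset_a, subset_b) with
    sum(subset_a) - sum(subset_b) == difference. Merging the two entries with
    the largest differences on opposite sides lets them cancel out.
    Returns (difference, subset_a, subset_b).
    """
    if not costs:
        return 0, [], []
    counter = itertools.count()  # tie-breaker so the lists are never compared
    heap = [(-c, next(counter), [c], []) for c in costs]  # max-heap via negation
    heapq.heapify(heap)
    while len(heap) > 1:
        d1, _, a1, b1 = heapq.heappop(heap)  # largest difference
        d2, _, a2, b2 = heapq.heappop(heap)  # second largest
        # Put the second entry's heavy side opposite the first's heavy side.
        heapq.heappush(heap, (d1 - d2, next(counter), a1 + b2, b1 + a2))
    diff, _, a, b = heap[0]
    return -diff, a, b

print(karmarkar_karp([8, 7, 6, 5, 4]))
```

On `[8, 7, 6, 5, 4]` it returns a difference of 2 (`[4, 7, 5]` vs `[8, 6]`), although `{8, 7}` vs `{6, 5, 4}` is perfectly balanced: the method is a fast O(n log n) heuristic, not an exact solver.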

| Name | Description | Organization or author | Paper | Framework | Year | Auto Methods |
|---|---|---|---|---|---|---|
| TASO | Automatically optimizes DNN computation with graph substitution | Zhihao Jia | | | | |

| Name | Method Type | Parallelism | Year |
|---|---|---|---|
| ColocRL | Reinforcement | MP | 2017 |
| HDP | Reinforcement | MP | 2018 |
| GDP | Reinforcement | MP | 2019 |
| REGAL | Reinforcement | MP | 2020 |
| TAPP | Reinforcement | DP+PP | 2021 |
| Spotlight | Reinforcement | DP+MP | 2018 |
| Placeto | Reinforcement | DP+MP | 2019 |
| HeterPS | Reinforcement | DP+PP | 2021 |
| AutoMap | Deep learning to predict rank | DP+TP | 2021 |
| Auto-MAP | Reinforcement | DP or TP or PP | 2020 |
| FlexFlow | MCMC | DP+TP | 2019 |
| ROC | Online linear regression model for graph partitioning, plus dynamic programming to minimize data transfer cost | DP+TP | 2020 |

| Name | Method Type | Parallelism | Year |
|---|---|---|---|
| Pesto | integer linear programming | MP | 2021 |
| vPipe | SRP algorithm + KL (DP) | PP | 2022 |
| PipeDream | dynamic programming | DP+PP | 2019 |
| DNN-partitioning | dynamic programming + integer programming | DP+PP | 2020 |
| PipeDream-2BW | dynamic programming | DP+PP | 2021 |
| HetPipe | dynamic programming | DP+PP | 2020 |
| DAPPLE | dynamic programming | DP+PP | 2021 |
| PipeTransformer | dynamic programming | DP+PP | 2021 |
| Chimera | grid search | DP+PP | 2021 |
| RaNNC | dynamic programming | DP+PP | 2021 |
| FTPipe | multiprocessor scheduling problem with profiling | DP+PP | 2021 |
| OptCNN | dynamic programming | DP+TP | 2018 |
| Tofu | dynamic programming | DP+TP | 2019 |
| AccPar | dynamic programming | DP+TP | 2020 |
| TensorOpt | dynamic programming | DP+TP | 2020 |
| Double Recursive | double recursive | DP+TP | 2021 |
| PaSE | dynamic programming | DP+TP | 2021 |
| P^2 | synthesis tool with simulation | DP+TP | 2021 |
| Piper | two-level dynamic programming | DP+TP+PP | 2021 |
| GSPMD | heuristic propagation | DP+TP+PP | 2021 |
| DistIR | grid search | DP+TP+PP | 2021 |
| Neo | greedy + Karmarkar-Karp algorithm | DP+TP+PP | 2021 |
| Alpa | integer programming + dynamic programming | DP+TP+PP | 2022 |

2021.12.9 DeepMind proposes Gopher, a 280-billion-parameter Transformer language model, trained on 4096 16GB TPUv3. link

2021.12.8 Baidu and Peng Cheng Laboratory propose Wenxin (文心), a 260-billion-parameter knowledge-aware pretrained model (a.k.a. ERNIE 3.0 Titan), trained with Adaptive Paddle from the table above.

2021.10.26 Inspur formally proposes a 245.7-billion-parameter model at AICC 2021.