This repository contains the source code, pretrained models, and experimental setup described in the manuscript:

- Huy Phan, Oliver Y. Chén, Philipp Koch, Zongqing Lu, Ian McLoughlin, Alfred Mertins, and Maarten De Vos. Towards More Accurate Automatic Sleep Staging via Deep Transfer Learning. IEEE Transactions on Biomedical Engineering (TBME), August 2020

- Change path to `seqsleepnet/`

- Run `preprare_data_sleepedf_sc.m` to prepare SleepEDF-SC data (the path to the data must be provided; refer to the script for comments). The `.mat` files generated are stored in the `mat/` directory.

- Run `genlist_sleepedf_sc.m` to generate the list of SleepEDF-SC files for network training based on the data split in `data_split_sleepedf_sc.mat`. The files generated are stored in the `tf_data/` directory.

- Run `preprare_data_sleepedf_st.m` to prepare SleepEDF-ST data (the path to the data must be provided; refer to the script for comments). The `.mat` files generated are stored in the `mat/` directory.

- Run `genlist_sleepedf_st.m` to generate the list of SleepEDF-ST files for network training based on the data split in `data_split_sleepedf_st.mat`. The files generated are stored in the `tf_data/` directory.
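The preparation steps above can also be chained from Python, which helps when the data needs to be regenerated more than once. The sketch below is only an illustration: it assumes a MATLAB release that supports the `-batch` startup option (R2019a or later), that `matlab` is on the `PATH`, and that the data paths inside the two preparation scripts have already been edited. The helper names `build_matlab_commands` and `run_preparation` are hypothetical and not part of this repository.

```python
import subprocess

# Script names are taken from the steps above, in the order they are listed.
PREP_SCRIPTS = [
    "preprare_data_sleepedf_sc",
    "genlist_sleepedf_sc",
    "preprare_data_sleepedf_st",
    "genlist_sleepedf_st",
]


def build_matlab_commands(scripts=PREP_SCRIPTS, matlab_exe="matlab"):
    """Return one `matlab -batch <script>` command per preparation script."""
    return [[matlab_exe, "-batch", name] for name in scripts]


def run_preparation(workdir="seqsleepnet", dry_run=True):
    """Print each command; execute it inside `workdir` only if dry_run is False."""
    for cmd in build_matlab_commands():
        print(" ".join(cmd))
        if not dry_run:
            # check=True aborts the chain at the first failing script.
            subprocess.run(cmd, cwd=workdir, check=True)


if __name__ == "__main__":
    # Assumption: a dry run is the safe default; switch to dry_run=False to execute.
    run_preparation(dry_run=True)
```

On older MATLAB releases without `-batch`, running the four scripts by hand in the order listed is the safer route.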

- Change path to `seqsleepnet/tensorflow/seqsleepnet/`

- Run the example bash scripts:

  - `finetune_all.sh`: finetune an entire pretrained network
  - `finetune_softmax_SPB.sh`: finetune softmax + sequence processing block (SPB)
  - `finetune_softmax_EPB.sh`: finetune softmax + epoch processing block (EPB)
  - `finetune_softmax.sh`: finetune softmax
  - `train_scratch.sh`: train a network from scratch

Note: when the `--pretrained_model` parameter is empty, the network will be trained from scratch. Otherwise, the specified pretrained model will be loaded and finetuned with the finetuning strategy specified in the `--finetune_mode` parameter.
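The rule in the note above can be sketched as a small decision function. This is not the training code from this repository: the strategy names, the block names, and the function `plan_training` are hypothetical labels chosen to mirror the example scripts, and the actual values accepted by `--finetune_mode` should be taken from the bash scripts themselves.

```python
# Hypothetical strategy names mapped to the parts of the network that get updated.
# The three-way split (EPB, SPB, softmax) mirrors the example script names.
TRAINABLE_BLOCKS = {
    "all": ("EPB", "SPB", "softmax"),
    "softmax_SPB": ("SPB", "softmax"),
    "softmax_EPB": ("EPB", "softmax"),
    "softmax": ("softmax",),
}


def plan_training(pretrained_model, finetune_mode="all"):
    """Decide how weights are initialised and which blocks are trained.

    An empty `pretrained_model` means training from scratch, in which case
    every block is trained and `finetune_mode` is ignored.
    """
    if not pretrained_model:
        return {"init": "scratch", "trainable": TRAINABLE_BLOCKS["all"]}
    if finetune_mode not in TRAINABLE_BLOCKS:
        raise ValueError("unknown finetune mode: %r" % (finetune_mode,))
    return {"init": pretrained_model, "trainable": TRAINABLE_BLOCKS[finetune_mode]}


if __name__ == "__main__":
    # "path/to/checkpoint" is a placeholder, not a path from this repository.
    print(plan_training(""))
    print(plan_training("path/to/checkpoint", "softmax_SPB"))
```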

Note: DeepSleepNet pretrained models are quite heavy. They were uploaded separately and can be downloaded from here: https://zenodo.org/record/3375235

After training/finetuning and testing the network on test data:

- Change path to `seqsleepnet/` or `deepsleepnet/`

- Refer to `examples_evaluation.m` for examples that calculate the performance metrics.
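For readers who want a quick sanity check outside MATLAB, the sketch below computes three metrics that are commonly reported for sleep staging: overall accuracy, macro-averaged F1, and Cohen's kappa. It uses the standard textbook definitions and only the Python standard library. Which metrics `examples_evaluation.m` actually computes is not stated here, so the script remains the reference; the function names are illustrative.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of epochs whose predicted stage matches the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all classes seen in either list."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def cohen_kappa(y_true, y_pred):
    """Agreement corrected for chance: (p_o - p_e) / (1 - p_e).

    Undefined (division by zero) when both sequences contain one identical class.
    """
    n = len(y_true)
    p_o = accuracy(y_true, y_pred)
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Toy labels for illustration only; these are not results from the paper.
    y_true = [0, 0, 1, 1, 2, 2]
    y_pred = [0, 0, 1, 2, 2, 2]
    print(accuracy(y_true, y_pred), macro_f1(y_true, y_pred), cohen_kappa(y_true, y_pred))
```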

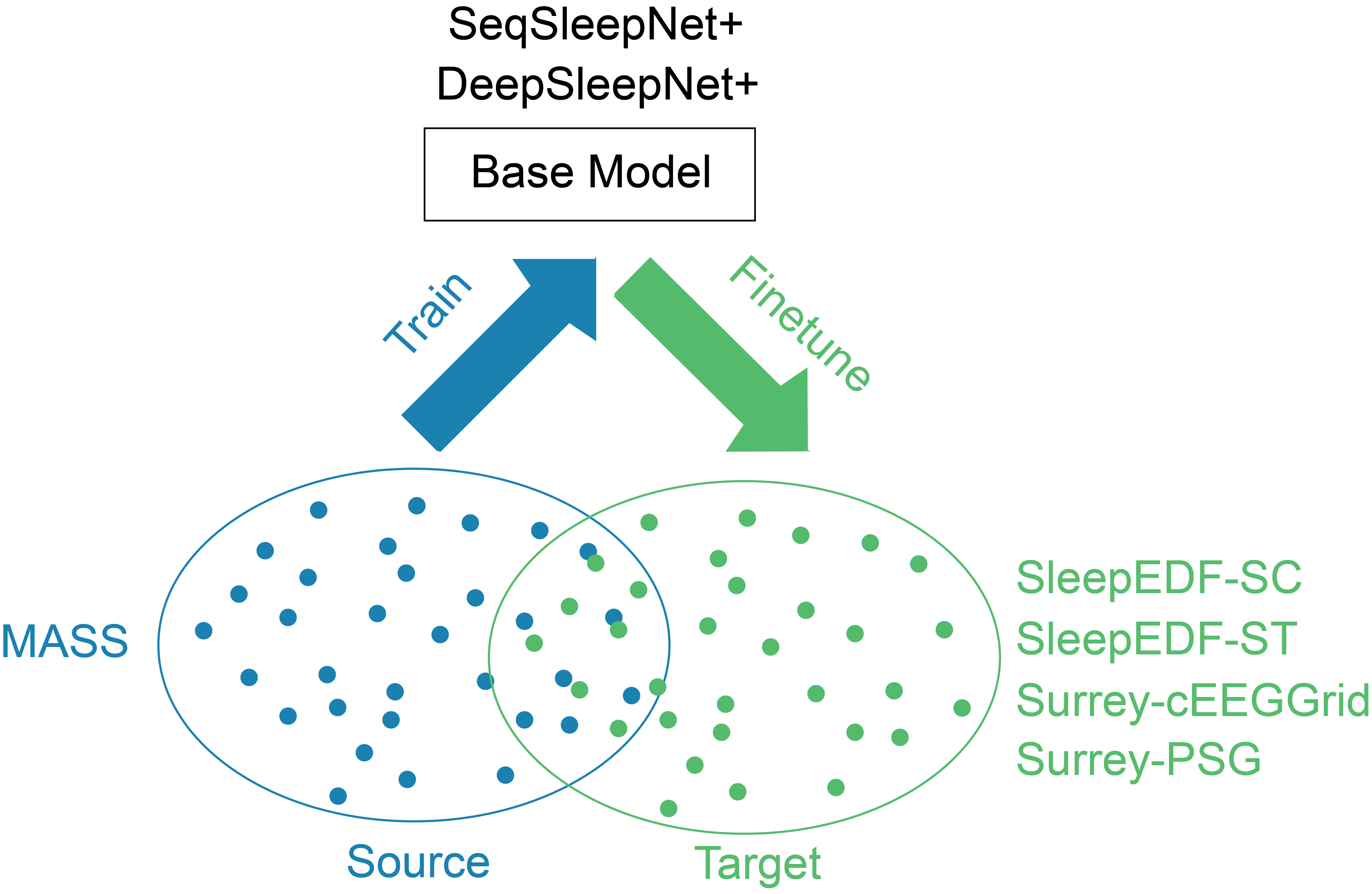

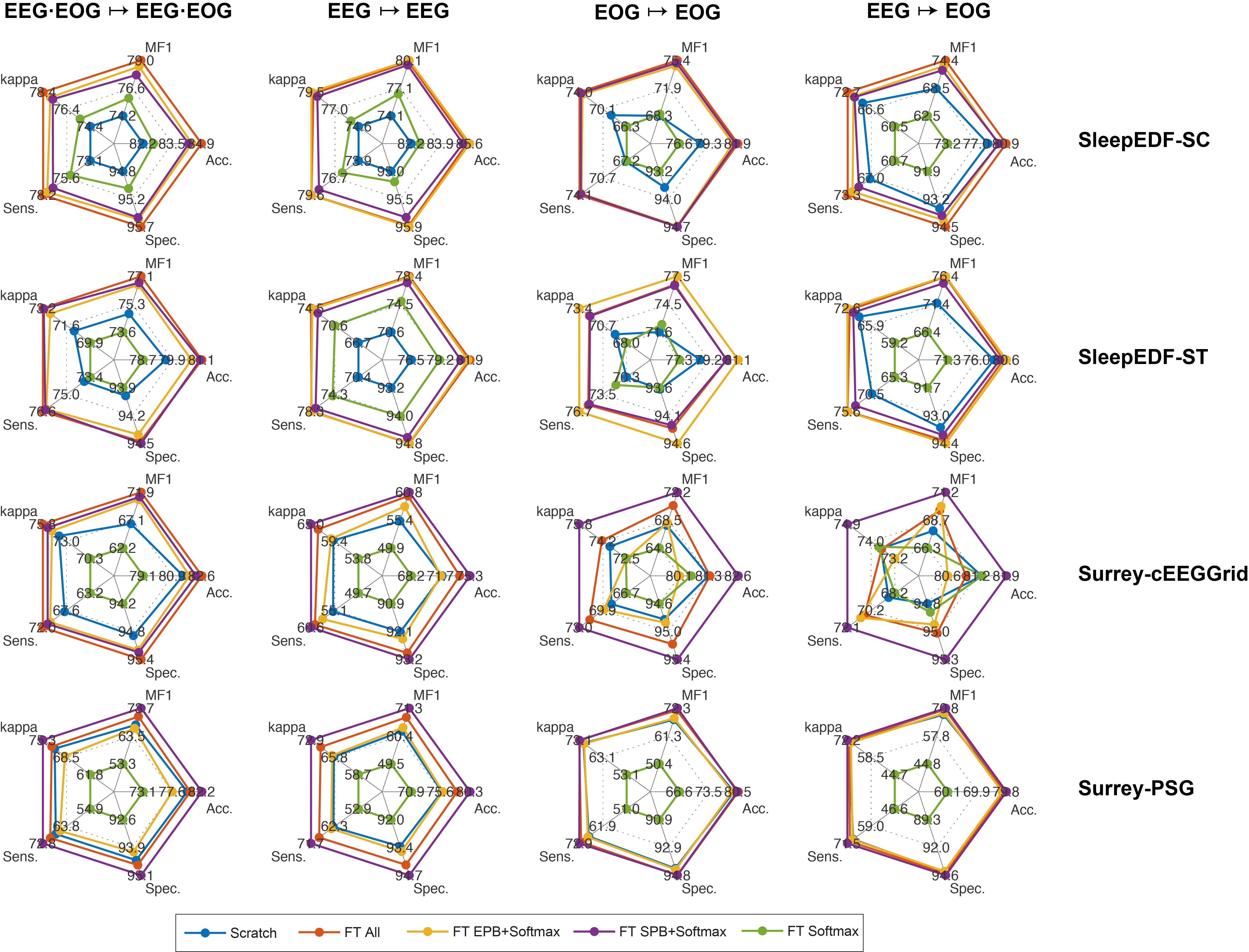

- Finetuning results with SeqSleepNet:

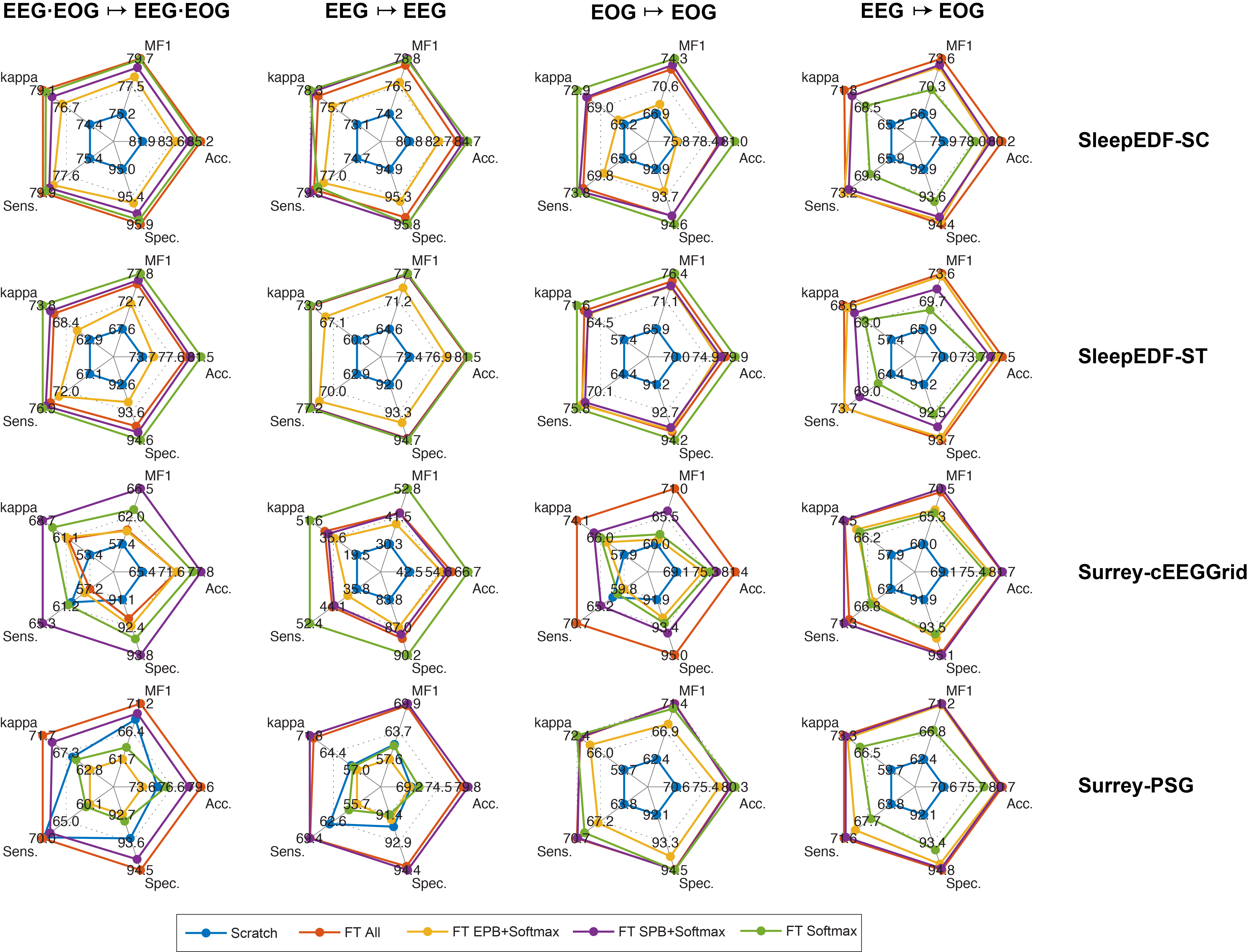

- Finetuning results with DeepSleepNet:

- Matlab v7.3 (for data preparation)

- Python3

- Tensorflow GPU versions 1.4 - 1.14 (for network training and evaluation)

- numpy

- scipy

- sklearn

- h5py

The SleepEDF expanded database can be downloaded from https://physionet.org/content/sleep-edfx/1.0.0/. The latest version of this database contains 153 recordings in the SC subset. This experiment was intentionally conducted with the previous version of the SC subset, which contains 20 subjects, to simulate the situation of a small cohort. If you download the new version, make sure to use only the 20 subjects SC400-SC419.
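A minimal sketch of restricting a download of the new version to subjects SC400-SC419 is shown below. It assumes the PhysioNet naming pattern `SC4ssN...`, where `ss` is the two-digit subject number and `N` the night; please verify this against the database description before relying on it. The function name is hypothetical.

```python
import re

# Assumed pattern: "SC4", two-digit subject number, one-digit night number.
_SC_PATTERN = re.compile(r"^SC4(\d{2})(\d)")


def select_first_20_sc_subjects(filenames, max_subject=19):
    """Keep SC files whose subject number is in 00..max_subject, sorted by name."""
    selected = []
    for name in filenames:
        match = _SC_PATTERN.match(name)
        if match and int(match.group(1)) <= max_subject:
            selected.append(name)
    return sorted(selected)


if __name__ == "__main__":
    # Example names following the assumed pattern.
    files = [
        "SC4001E0-PSG.edf",
        "SC4001EC-Hypnogram.edf",
        "SC4192E0-PSG.edf",
        "SC4201E0-PSG.edf",  # subject 20: excluded
        "ST7011J0-PSG.edf",  # ST subset: excluded
    ]
    for name in select_first_20_sc_subjects(files):
        print(name)
```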

On the ST subset of the database, the experiments were conducted with 22 placebo recordings. Make sure that you refer to https://physionet.org/content/sleep-edfx/1.0.0/ST-subjects.xls to obtain the right recordings and subjects.

The experiments used only the in-bed parts of the recordings (from lights-off time to lights-on time) to avoid the dominance of the Wake stage, as suggested in:

- S. A. Imtiaz and E. Rodriguez-Villegas, An open-source toolbox for standardized use of PhysioNet Sleep EDF Expanded Database. Proc. EMBC, pp. 6014-6017, 2015.

Meta information (e.g. the lights-off and lights-on times needed to extract the in-bed parts from the whole day-night recordings) is provided in `sleepedfx_meta`.
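The in-bed extraction can be sketched as converting the lights-off and lights-on times into a range of epoch indices. The sketch assumes 30-second scoring epochs and that the three timestamps are already available as `datetime` objects; the layout of `sleepedfx_meta` is not described here, so reading the times from it is left out. The function name is hypothetical, and the preparation scripts remain the reference for how the data was actually cut.

```python
import math
from datetime import datetime


def in_bed_epoch_slice(record_start, lights_off, lights_on, epoch_seconds=30):
    """Return the slice of whole epochs lying between lights-off and lights-on.

    Epoch k covers [k * epoch_seconds, (k + 1) * epoch_seconds) after record_start.
    Only epochs fully inside the in-bed interval are kept.
    """
    if not record_start <= lights_off < lights_on:
        raise ValueError("expected record_start <= lights_off < lights_on")
    first = math.ceil((lights_off - record_start).total_seconds() / epoch_seconds)
    last = math.floor((lights_on - record_start).total_seconds() / epoch_seconds)
    return slice(first, last)


if __name__ == "__main__":
    # Made-up times for illustration: recording starts at 16:00, in bed 23:00 to 07:00.
    start = datetime(2020, 1, 1, 16, 0)
    off = datetime(2020, 1, 1, 23, 0)
    on = datetime(2020, 1, 2, 7, 0)
    epochs = in_bed_epoch_slice(start, off, on)
    labels = list(range(2880))  # stand-in for one label per 30 s over 24 h
    print(epochs, len(labels[epochs]))
```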

Huy Phan

School of Electronic Engineering and Computer Science

Queen Mary University of London

Email: h.phan{at}qmul.ac.uk

MIT © Huy Phan