This repository is used to participate in a competition organized by Intel®.

EigenLibrary:libeigen / eigen · GitLab。SYCLLibrary:、icpxCompiler:Intel® oneAPI Base Toolkit: Essential oneAPI Tools & Libraries。

Need to generate the calculation grid (like gen_gird.cpp) to X.txt and Y.txt.

icpx -fsycl src/main.cpp

./a.outIf you encounter the following problems:

error: Double type is not supported on this problem.

in kernel: 'xxxxxx'

error: backend compiler failed build.

Enter the following command on the command line before building the project:

export OverrideDefaultFP64Settings=1

export IGC_EnableDPEmulation=1chmod 755 q; chmod 755 run.sh;if [ -x "$(command -v qsub)" ]; then ./q run.sh; else ./run.sh; ficonst int DIV = 1000; // The number of divisions per second

const int SEC = 200; // Total simulation time (seconds)

const double Re = 200; // Reynolds number of the fluid

const double err = 0.005; // Minimum error value required for psi iterationsOutput to the ./output/ folder in the same directory, including PSI, U, V three kinds of data, the number contained in the file name represents the number of seconds of simulation.

You can use Matlab or Python for data analysis, such as using plot_flt.m and rainbow_figure.m in this repository.

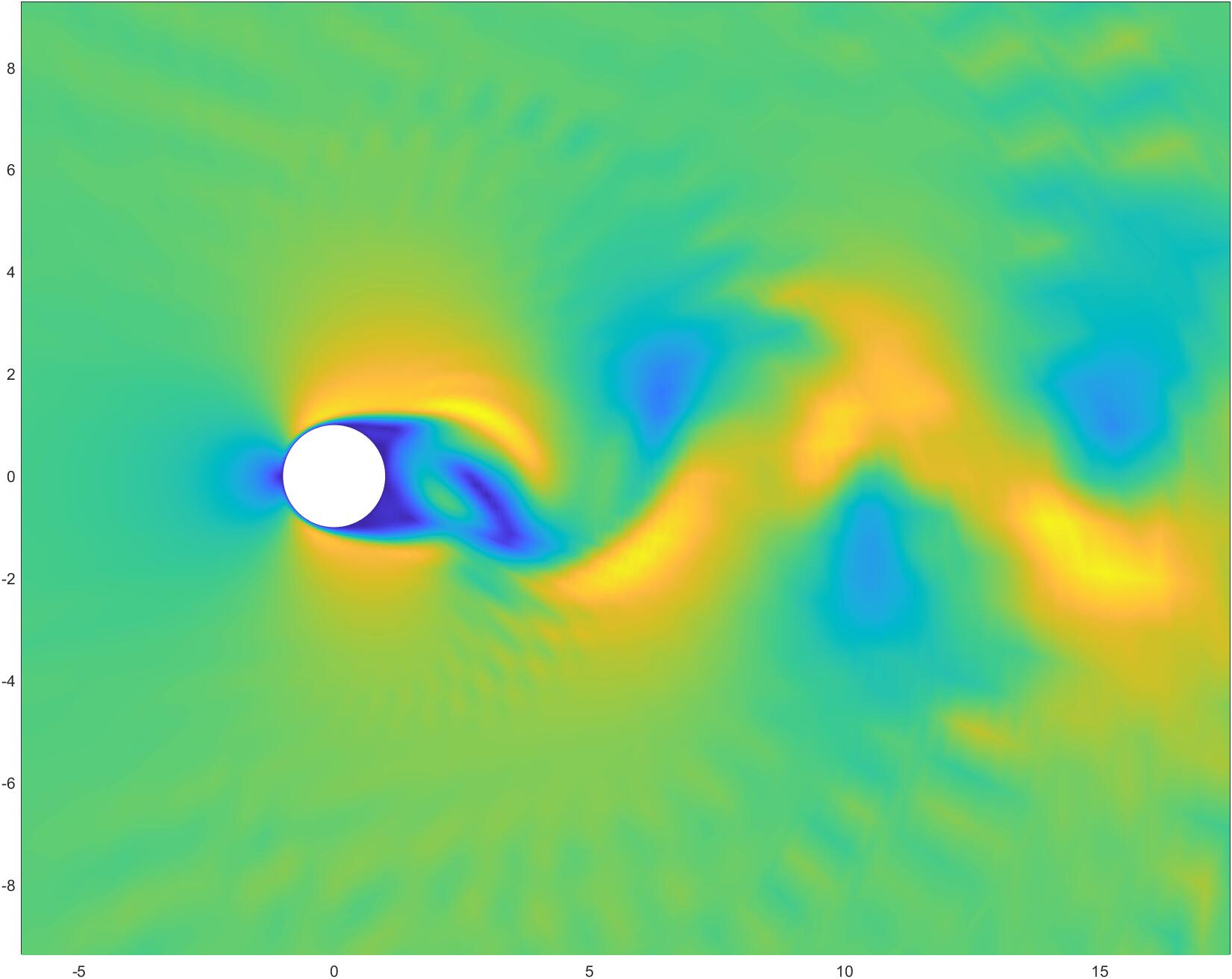

The following is a schematic diagram of the speed when Re = 200, T = 100s (the color depth represents the speed):

The specific optimization is reflected in the three functions of the vorticity FTCS iteration push()、the flow function iteration psi_iteration()、and the velocity solution velocity(), because these three parts perform a large number of matrix operations, and the amortized complexity of each iteration is boundary() in the same iteration has a complexity of only

Here is the iterated discrete form of the stream function

$$

\begin{align}

\psi_{i, j}^{k+1}&=\frac{1}{2(\alpha+\gamma)}\left[\left(\alpha-\frac{\delta}{2}\right) \psi_{i-1, j}^{k}+\left(\alpha+\frac{\delta}{2}\right) \psi_{i+1, j}^{k}+\left(\gamma-\frac{\varepsilon}{2}\right) \psi_{i, j-1}^{k}+\left(\gamma+\frac{\varepsilon}{2}\right) \psi_{i, j+1}^{k}\right] \

&+\frac{1}{2(\alpha+\gamma)}\left[2 \beta\left(\psi^{k}{i+1, j+1}-\psi^{k}{i+1, j-1}-\psi^{k}{i-1, j+1}+\psi^{k}{i-1, j-1}\right)-\omega_{i, j}\right]

\end{align}

$$

MatrixXd defaults to column priority, which leads to discontinuous memory in each row when converted to an array by MatrixXd::data(). I exchange the summation symbol and transform the formula to traverse by column to increase addressing efficiency.

We can use assembly code to calculate the clock cycle:

static __inline unsigned long long rdtsc(void) {

unsigned hi, lo;

__asm __volatile__("rdtsc" : "=a"(lo), "=d"(hi));

return ((unsigned long long)lo) | (((unsigned long long)hi) << 32);

} Taking the push() function as an example, it takes a lot of time to read data into the device memory, and about 73% of the time is spent on reading in a large matrix. In addition, there is still a lot of time spent on addressing the matrix. For codes that do not use heterogeneous computing, each location in push() consumes an average of 1172 clock cycles for data reading, and an average of 1543 clock cycles for floating-point calculations.