[TIP 2023] TTVFI

This is the official PyTorch implementation of the paper Learning Trajectory-Aware Transformer for Video Frame Interpolation.

Contents

- Introduction

- Requirements and dependencies

- Model and Results

- Dataset

- Demo

- Test

- Train

- Citation

- Contact

Introduction

Contribution

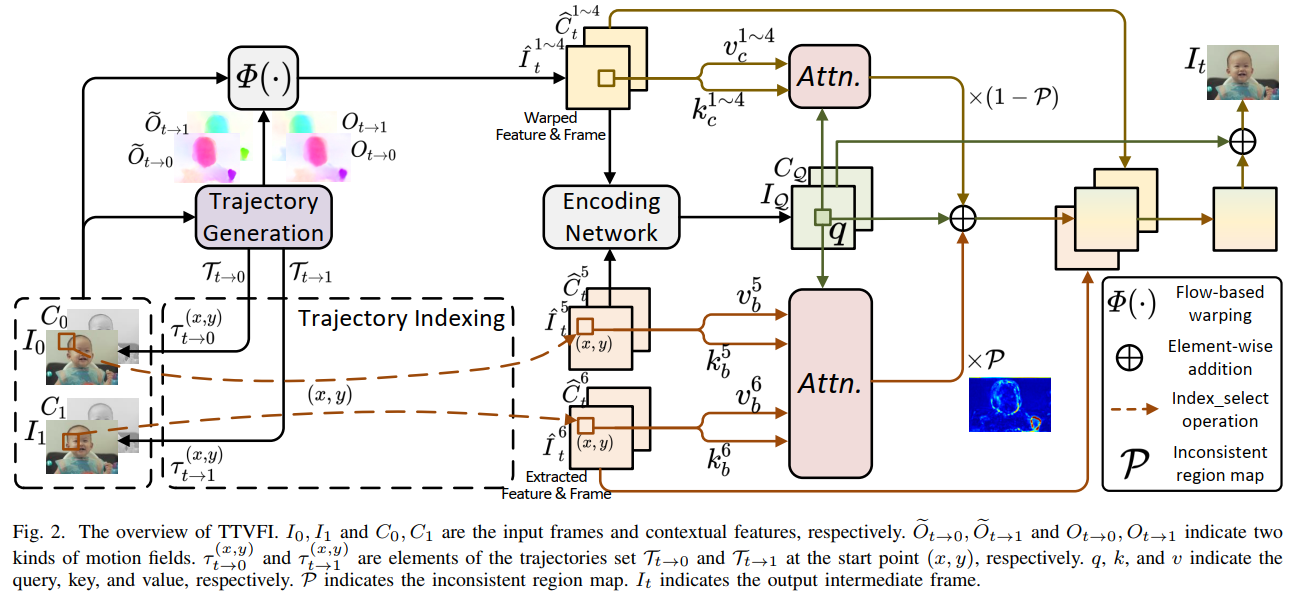

- We propose a novel trajectory-aware Transformer that brings the Transformer into VFI tasks and enables the synthesis network to learn more accurate features. Our method focuses on regions of video frames with motion consistency differences and performs attention with two kinds of well-designed visual tokens along the motion trajectory.

- We propose a consistent motion learning module that generates the consistent motion used by the trajectory-aware Transformer, both to form the trajectories and to guide the learning of the attention mechanism in different regions.

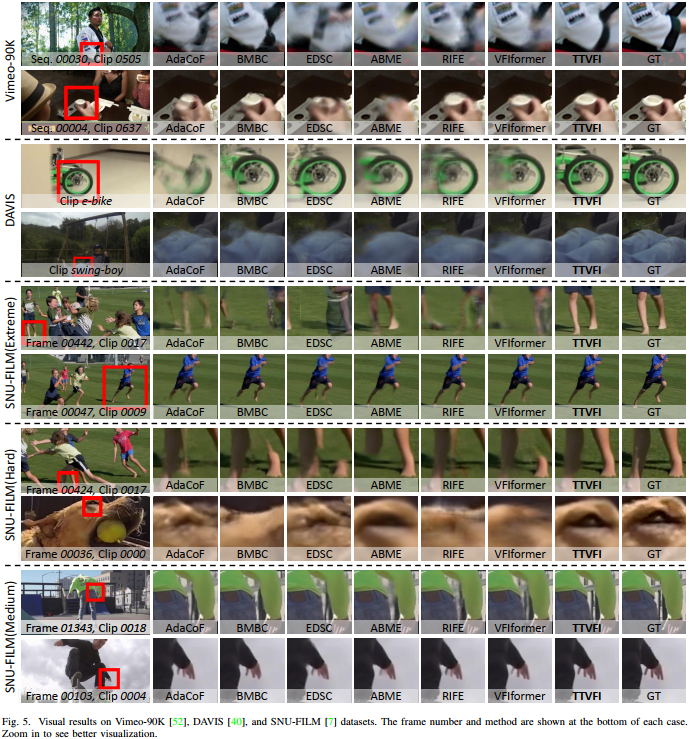

- Extensive experiments demonstrate that the proposed TTVFI can outperform existing state-of-the-art methods on four widely used VFI benchmarks.

Overview

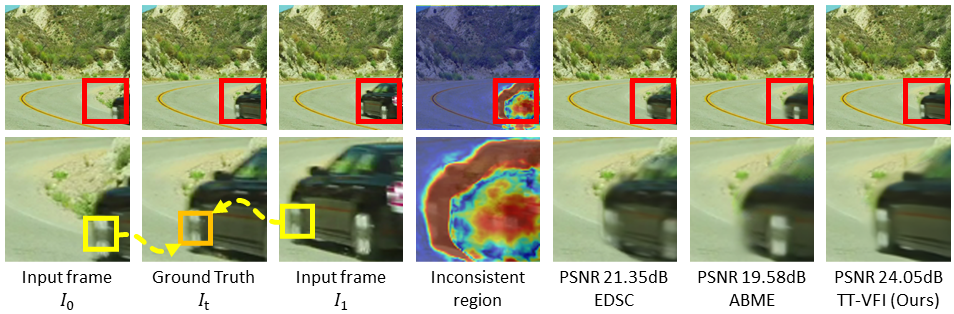

Visual

Requirements and dependencies

- Python 3.6 (Anaconda is recommended)

- pytorch == 1.2.0

- torchvision == 0.4.0

- opencv-python == 4.5.5

- scikit-image == 0.17.2

- scipy == 1.1.0

- setuptools == 58.0.4

- Pillow == 8.4.0

- imageio == 2.15.0

- numpy == 1.19.5
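The pins above can be checked against the active environment before building anything. The following is a minimal sketch, not part of the repository: `find_mismatches` and `PINNED` are hypothetical names, `torch` is assumed to be the pip distribution name for PyTorch, and a pin is treated as a prefix so that `4.5.5` also accepts a longer build number.

```python
# Hypothetical helper, not part of the TTVFI repository.
try:
    from importlib.metadata import version, PackageNotFoundError  # Python 3.8+
except ImportError:  # Python 3.6/3.7: fall back to setuptools
    import pkg_resources

    PackageNotFoundError = pkg_resources.DistributionNotFound

    def version(name):
        return pkg_resources.get_distribution(name).version

# Pins copied from the list above; "torch" is the assumed pip name for PyTorch.
PINNED = {
    "torch": "1.2.0",
    "torchvision": "0.4.0",
    "opencv-python": "4.5.5",
    "scikit-image": "0.17.2",
    "scipy": "1.1.0",
    "setuptools": "58.0.4",
    "Pillow": "8.4.0",
    "imageio": "2.15.0",
    "numpy": "1.19.5",
}


def find_mismatches(pinned, lookup=version):
    """Return {package: installed version or None} for every pin that is not met."""
    problems = {}
    for name, wanted in pinned.items():
        try:
            installed = lookup(name)
        except PackageNotFoundError:
            problems[name] = None  # package is not installed at all
            continue
        public = installed.split("+")[0]  # drop local tags such as "+cu92"
        # Prefix match, so a pin of "4.5.5" accepts a longer build number.
        if public != wanted and not public.startswith(wanted + "."):
            problems[name] = installed
    return problems


if __name__ == "__main__":
    for name, installed in find_mismatches(PINNED).items():
        print(f"{name}: want {PINNED[name]}, found {installed or 'not installed'}")
```

An empty result means every pinned package is present at the listed version; anything printed is worth fixing before compiling the correlation package.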

Model and Results

Pre-trained models can be downloaded from OneDrive, Google Drive, and Baidu Cloud (j3nd).

- TTVFI_stage1.pth: trained in the first stage with consistent motion learning.

- TTVFI_stage2.pth: trained in the second stage with the trajectory-aware Transformer on the Vimeo-90K dataset.

The output results on the Vimeo-90K testing set, DAVIS, UCF101, and SNU-FILM can be downloaded from OneDrive, Google Drive, and Baidu Cloud (j3nd).

Dataset

- Training set

  - Vimeo-90K dataset. Download both the triplet training and test sets. The tri_trainlist.txt file in the downloaded zip file lists the training samples.

  - Make the Vimeo-90K structure be:

        ├────vimeo_triplet
            ├────sequences
                ├────00001
                ├────...
                ├────00078
            ├────tri_trainlist.txt
            ├────tri_testlist.txt

- Testing set

  - Vimeo-90K test set. The tri_testlist.txt file in the downloaded zip file lists the testing samples.

  - DAVIS, UCF101, and SNU-FILM datasets.

  - Make the DAVIS, UCF101, and SNU-FILM structure be:

        ├────DAVIS
            ├────input
            ├────gt
        ├────UCF101
            ├────1
            ├────...
        ├────SNU-FILM
            ├────test
                ├────GOPRO_test
                ├────YouTube_test
            ├────test-easy.txt
            ├────...
            ├────test-extreme.txt
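To illustrate how the list files relate to the folder layout, here is a minimal sketch that turns a list file into frame paths. It rests on assumptions not stated in this README: each line of the list is taken to be a relative sample folder such as `00001/0001`, and each sample folder is taken to hold `im1.png`, `im2.png`, and `im3.png`. `read_triplets` is a hypothetical helper, not a function from this repository.

```python
import os


def read_triplets(root, list_name="tri_trainlist.txt"):
    """Return (im1, im2, im3) path tuples for every sample named in the list file.

    Assumes each non-empty line is a folder relative to <root>/sequences,
    and that every sample folder holds im1.png, im2.png and im3.png.
    """
    triplets = []
    with open(os.path.join(root, list_name)) as fh:
        for line in fh:
            sample = line.strip()
            if not sample:  # skip blank lines
                continue
            folder = os.path.join(root, "sequences", *sample.split("/"))
            triplets.append(
                tuple(os.path.join(folder, f"im{i}.png") for i in (1, 2, 3))
            )
    return triplets
```

Calling `read_triplets("vimeo_triplet", "tri_testlist.txt")` would list the test samples in the same way. Under these assumptions the first and third paths are the input frames and the second is the ground-truth middle frame.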

Demo

- Clone this GitHub repo

git clone https://github.com/ChengxuLiu/TTVFI.git

cd TTVFI

- Generate the Correlation package required by PWCNet:

cd ./models/PWCNet/correlation_package_pytorch1_0/

./build.sh

- Download pre-trained weights (OneDrive | Google Drive | Baidu Cloud (j3nd)) and place them under ./checkpoint

cd ../../..

mkdir checkpoint

- Prepare input frames and modify "FirstPath" and "SecondPath" in ./demo.py

- Run demo

python demo.py

Test

- Clone this GitHub repo

git clone https://github.com/ChengxuLiu/TTVFI.git

cd TTVFI

- Generate the Correlation package required by PWCNet:

cd ./models/PWCNet/correlation_package_pytorch1_0/

./build.sh

- Download pre-trained weights (OneDrive | Google Drive | Baidu Cloud (j3nd)) and place them under ./checkpoint

cd ../../..

mkdir checkpoint

- Prepare testing dataset and modify "datasetPath" in ./test.py

- Run test

mkdir weights

# Vimeo

python test.py

Train

- Clone this GitHub repo

git clone https://github.com/ChengxuLiu/TTVFI.git

cd TTVFI

- Generate the Correlation package required by PWCNet:

cd ./models/PWCNet/correlation_package_pytorch1_0/

./build.sh

- Prepare training dataset and modify "datasetPath" in ./train_stage1.py and ./train_stage2.py

- Run training of stage1

mkdir weights

# stage one

python train_stage1.py

- The models of stage1 are saved in ./weights and fed into stage2 (modify "pretrained" in ./train_stage2.py)

- Run training of stage2

# stage two

python train_stage2.py

- The models of stage2 are also saved in ./weights
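When pointing "pretrained" in ./train_stage2.py at a stage1 model, it can help to find the newest checkpoint in ./weights. The sketch below is a hypothetical helper, not part of the repository. It assumes checkpoints are saved with a `.pth` extension (by analogy with the released TTVFI_stage1.pth); the actual file names written by train_stage1.py are not specified here.

```python
import glob
import os


def latest_checkpoint(weights_dir="./weights", pattern="*.pth"):
    """Return the most recently modified file matching pattern, or None if none exist.

    The ".pth" pattern is an assumption; adjust it to the names that
    train_stage1.py actually writes.
    """
    candidates = glob.glob(os.path.join(weights_dir, pattern))
    if not candidates:
        return None
    # Pick the file with the largest modification time.
    return max(candidates, key=os.path.getmtime)
```

The returned path could then be copied by hand into the "pretrained" setting of ./train_stage2.py. Picking by modification time is only a convenience; the newest file is not necessarily the best-performing one.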

Citation

If you find the code and pre-trained models useful for your research, please consider citing our paper. 😊

@article{liu2023ttvfi,

title={Ttvfi: Learning trajectory-aware transformer for video frame interpolation},

author={Liu, Chengxu and Yang, Huan and Fu, Jianlong and Qian, Xueming},

journal={IEEE Transactions on Image Processing},

year={2023},

publisher={IEEE}

}

Contact

If you run into any problems, please open an issue or contact:

- Chengxu Liu: liuchx97@gmail.com